University of Central Florida

STARS

Electronic Theses and Dissertations

2004

Improving Project Management With Simulation And Completion Distribution Functions

Grant Cates

University of Central Florida

Part of the Engineering Commons

Find similar works at: https://stars.library.ucf.edu/etd

University of Central Florida Libraries http://library.ucf.edu

This Doctoral Dissertation (Open Access) is brought to you for free and open access by STARS. It has been accepted

for inclusion in Electronic Theses and Dissertations by an authorized administrator of STARS. For more information,

please contact ST[email protected].

STARS Citation STARS Citation

Cates, Grant, "Improving Project Management With Simulation And Completion Distribution Functions" (2004). Electronic Theses and Dissertations. 167.

https://stars.library.ucf.edu/etd/167

IMPROVING PROJECT MANAGEMENT WITH

SIMULATION AND COMPLETION DISTRIBUTION FUNCTIONS

by

GRANT R. CATES

B.S. Colorado State University, 1981

M.S. University of Central Florida, 1996

A dissertation submitted in partial fulfillment of the requirements

for the degree of Doctor of Philosophy

in the Department of Industrial Engineering and Management Sciences

in the College of Engineering and Computer Sciences

at the University of Central Florida

Orlando, Florida

Fall Term

2004

© 2004 Grant R. Cates

ABSTRACT

Despite the critical importance of project completion timeliness, management

practices in place today remain inadequate for addressing the persistent problem of

project completion tardiness. Uncertainty has been identified as a contributing factor in

late projects. This uncertainty resides in activity duration estimates, unplanned upsetting

events, and the potential unavailability of critical resources.

This research developed a comprehensive simulation-based methodology for

conducting quantitative project completion-time risk assessments. The methodology

enables project stakeholders to visualize uncertainty or risk, i.e., the likelihood of their

project completing late and the magnitude of the lateness, by providing them with a

completion time distribution function of their projects. Discrete event simulation is used

to determine a project’s completion distribution function.

The project simulation is populated with both deterministic and stochastic

elements. Deterministic inputs include planned activities and resource requirements.

Stochastic inputs include activity duration growth distributions, probabilities for

unplanned upsetting events, and other dynamic constraints upon project activities.

Stochastic inputs are based upon past data from similar projects. The time for an entity to

complete the simulation network, subject to both the deterministic and stochastic factors,

represents the time to complete the project. Multiple replications of the simulation are run

to create the completion distribution function.

The methodology was demonstrated to be effective for the ongoing project to

assemble the International Space Station. Approximately $500 million per month is being

spent on this project, which is scheduled to complete by 2010. Project stakeholders

participated in determining and managing completion distribution functions. The first result was improved awareness of project completion risk. Second, mitigation options were analyzed to improve project completion performance and reduce total project cost.

ACKNOWLEDGMENTS

First and foremost, I thank Dr. Mansooreh Mollaghasemi for introducing me to

discrete event simulation modeling and for mentoring me through the subsequent

projects, classes, and this resulting dissertation. Likewise, the members of my

dissertation committee, Dr. Linda Malone, Dr. Michael Georgiopoulos, Dr. Tim Kotnour,

and Dr. Luis Rabelo, provided extremely valuable leadership. Dr. Martin Steele of

NASA, Dr. José Sepulveda of UCF, and Dr. Ghaith Rabadi of Old Dominion University

also provided greatly appreciated guidance.

My participation in a graduate program would not have been possible without the

tremendous encouragement and support I received from numerous NASA and UCF

officials. These include Conrad Nagel, Mike Wetmore, and Dave King, all of whom recommended me for the Kennedy Graduate Fellowship Program. My participation in that program was expertly administered by Kari Heminger, June Perez, Chris Weaver, and Ed Markowski, all from NASA, along with Don Hutsko of the United States Department of Agriculture. General Roy Bridges and Jim Kennedy, as directors of the Kennedy Space Center, provided the vision and leadership for the partnership between

NASA and the University of Central Florida. Special thanks to Joy Tatlonghari for providing the administrative guidance and support throughout the entire program of classes, research, and dissertation. Thanks to UCF thesis editor Katie Grigg as well as

NASA’s Bill Raines, Sam Lewellen, and Wayne Ranow for their assistance during the

publication process.

The support and encouragement I received from a host of current and former NASA officials, all steadfastly determined to create the greatest Space Station ever built, were nothing short of fantastic. These skilled and dedicated individuals include Mike

Leinbach, Steve Cash, Pepper Phillips, Frank Izquierdo, Doug Lyons, Jeff Angermeier,

Tom Overton, Andy Warren, Jessica Mock, John Coggeshall, John Shannon, Ron

Dittemore, Bill Gerstenmaier, Bill Parsons, Tassos Abadiotakis, Scott Thurston,

Stephanie Stilson, Shari Bianco, Bill Hill, Elric McHenry, and Larry Ross.

In particular, I wish to thank Janet Letchworth and Bill Cirillo, both of NASA, along with Joe Fragola, Blake Putney, and Chel Stromgren, all of SAIC, and Dan

Heimerdinger of Valador Inc., for their many contributions to the evolution of this

research.

I also received greatly appreciated support and encouragement from Dawn

Cannister and Tom Hayson of Rockwell Software with respect to the use of the Arena

simulation software. Dr. Averill Law of Averill M. Law and Associates provided

guidance on simulation modeling as well as the use of their excellent ExpertFit software.

My family provided great support throughout. Thanks to my parents, Chuck and

Iola Cates, my brother Rick, my sister Leslie and her husband Kevin Blackham, and my

sister Wendy and her husband Jeff Jones. My greatest appreciation is to my wife Alicia

for her love, encouragement, and patience.

TABLE OF CONTENTS

LIST OF FIGURES ..........................................................................................................xii

LIST OF TABLES............................................................................................................ xv

LIST OF ABBREVIATIONS.......................................................................................... xvi

CHAPTER ONE: INTRODUCTION................................................................................. 1

Large Projects Advanced Science of Project Management............................................ 5

PAST and the International Space Station...................................................................... 8

Applicability to Other Projects ....................................................................................... 9

Overview of Subsequent Chapters................................................................................ 10

CHAPTER TWO: LITERATURE REVIEW................................................................... 11

Project Schedule Risk Analysis/Assessment................................................................ 11

Project Management Tools........................................................................................... 16

Gantt Charts.............................................................................................................. 17

CPM.......................................................................................................................... 17

PERT......................................................................................................................... 18

Activity Duration Specification............................................................................ 19

Estimating Project Completion Times.................................................................. 21

Precedence Diagramming......................................................................................... 25

Merging of PERT, CPM, and Precedence Diagramming......................................... 25

vii

Performance Measurement and Earned Value.......................................................... 27

GERT and Q-GERT.................................................................................................. 27

VERT........................................................................................................................ 29

The Project Management Institute................................................................................ 30

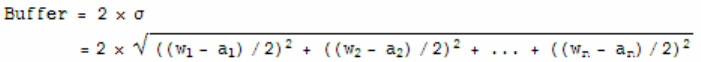

Critical Chain Project Management.............................................................................. 32

Activity Embedded Safety Time............................................................................... 35

Project and Feeding Buffers...................................................................................... 38

CCPM on Project Completion Time Distribution Function..................................... 40

Empirical Data for Input Analysis................................................................................ 41

CHAPTER THREE: METHODOLOGY ......................................................................... 44

Part I: The Project Assessment by Simulation Technique (PAST) .............................. 44

Managerial Controls Component of PAST............................................................... 47

Introductory Briefings........................................................................................... 48

Assessment Requirements .................................................................................... 49

Communications................................................................................................... 49

Simulation Modeling Component of PAST.............................................................. 50

Activity Construct................................................................................................. 53

Probabilistic Event Construct ............................................................................... 61

Cyclical Element Construct .................................................................................. 62

Input Analysis Component of PAST ........................................................................ 63

Output Analysis Component of PAST...................................................................... 67

Creating the PCDF................................................................................................ 68

viii

Determining the PCDF Confidence Band............................................................. 69

Verification and Validation for PAST...................................................................... 73

Verification........................................................................................................... 73

Validation.............................................................................................................. 74

Measuring Progress................................................................................................... 76

Part II: Research Methodology (Case Study) ............................................................... 77

Case Study Definitions ............................................................................................. 78

Components of Research Design.............................................................................. 79

Study Question...................................................................................................... 79

Proposition............................................................................................................ 80

Units of Measurement........................................................................................... 81

Logic for Linking Data to Proposition.................................................................. 82

Criteria for Interpreting the Findings.................................................................... 83

Ensuring Case Study Design Quality........................................................................ 83

Construct Validity................................................................................................. 84

Internal Validity.................................................................................................... 87

External Validity................................................................................................... 87

Reliability.............................................................................................................. 88

The Specific Case Study Design............................................................................... 88

CHAPTER FOUR: FINDINGS........................................................................................ 90

Section I: The Pilot Case Study.................................................................................... 90

Background............................................................................................................... 91

ix

Space Shuttle Processing Flow................................................................................. 94

Margin Assessment Results...................................................................................... 98

Initial Analysis of the Project Margin Problem...................................................... 100

Project Assessment by Simulation Technique Prototype ....................................... 105

Managerial Controls Component........................................................................ 105

Simulation Model Component............................................................................ 106

Input Analysis Component ................................................................................. 108

Verification......................................................................................................... 110

Output Analysis .................................................................................................. 111

Section II: Three Additional Case Studies.................................................................. 116

Background Information......................................................................................... 116

Case Study 1: Launch Window Analysis................................................................ 118

Case Study 2: Manifest Option 04A-29.................................................................. 122

Work Force Augmentation ................................................................................. 125

Reducing Project Content ................................................................................... 128

Case Study 3: Project Buffer Benefit...................................................................... 129

Section III: Evolution of PAST................................................................................... 133

Modeling Automation............................................................................................. 134

Output Analysis Automation .................................................................................. 135

Management Issues................................................................................................. 136

PAST: Step-by-Step............................................................................................ 137

Scale of Effort..................................................................................................... 139

x

Organizational Residence ................................................................................... 140

Analysis Distribution Controls ........................................................................... 140

The Completion Quantity Distribution Function Graphic...................................... 142

CHAPTER FIVE: CONCLUSION................................................................................. 146

Summary of Case Study Results................................................................................. 146

Observations and Recommendations.......................................................................... 148

Completion Quantity Distribution Function........................................................... 148

Timeliness of Analysis............................................................................................ 149

Politics of Risk Assessment.................................................................................... 150

Future Research .......................................................................................................... 152

Continued Use of PAST to Support ISS Assembly................................................ 152

PAST and Critical Chain Project Management ...................................................... 156

The PAST Input Analysis Methodology................................................................. 157

Closing Thoughts........................................................................................................ 157

END NOTES .................................................................................................................. 159

LIST OF REFERENCES................................................................................................ 161

LIST OF FIGURES

Figure 1: The Four Components of PAST.......................................................................... 2

Figure 2: Example Project Completion Distribution Function........................................... 4

Figure 3: Improved Project Completion Timeliness........................................................... 5

Figure 4: Activity Duration Distribution Function........................................................... 35

Figure 5: Multitasking Increases Task Durations............................................................. 37

Figure 6: Visual Display of Project Completion Risk (Example) .................................... 44

Figure 7: Overview of the PAST Methodology................................................................ 45

Figure 8: PAST Flow Diagram......................................................................................... 46

Figure 9: Simulation Modeling Component..................................................................... 51

Figure 10: Activity Modeling Construct with Deterministic Duration and Reserve........ 55

Figure 11: Activity Modeling Construct with Stochastic Duration Added ...................... 56

Figure 12: Reserve Reduction Feature Added to Activity Construct............................... 58

Figure 13: Resource Element Added to Activity Construct ............................................. 59

Figure 14: Completed Activity Construct......................................................................... 60

Figure 15: Predictable Need to Use an Alternate Route................................................... 61

Figure 16: Cyclical Process .............................................................................................. 62

Figure 17: Input Analysis for Activity Construct ............................................................. 63

xii

Figure 18: Input Analysis Component of PAST............................................................... 64

Figure 19: Output Analysis Component of PAST............................................................ 67

Figure 20: Presentation of a Project Completion Time Density Function........................ 69

Figure 21: Graphical Presentation of Confidence Band................................................... 72

Figure 22: Notional Project Planned Completion Date versus PCDF.............................. 81

Figure 23: Research Methodology Chronological Overview........................................... 89

Figure 24: Space Shuttle Orbiter Space Station Assembly Sequence .............................. 92

Figure 25: Orbiter Resource Utilization Chart.................................................................. 93

Figure 26: Space Shuttle Mission Cycle........................................................................... 94

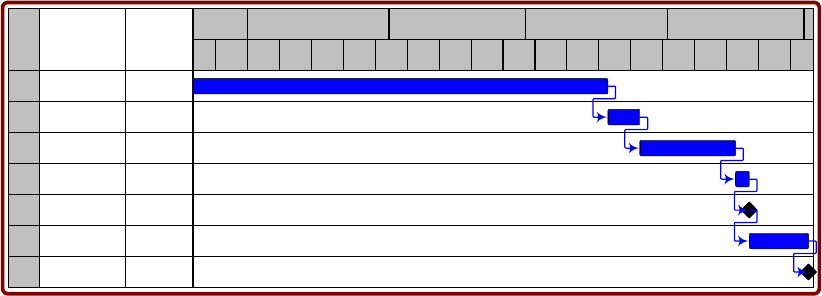

Figure 27: Shuttle Gantt Chart.......................................................................................... 95

Figure 28: Work Days Added to the OPF Flow Post Delta LSFR ................................. 101

Figure 29: Histogram and CFD for OPF Added Work Days.......................................... 102

Figure 30: Available Margin Diagram for the Node-2 Milestone.................................. 104

Figure 31: OPF Empirical Distribution for Added Work Days...................................... 109

Figure 32: Completion Time Distribution Function for STS-120 Launch Date............. 111

Figure 33: Working Holidays Improves STS-120 Launch Date .................................... 114

Figure 34: STS-120 Launch Date Improvement from Working Holidays..................... 115

Figure 35: Launch Window Analysis............................................................................. 120

Figure 36: Launch Probability with Winter Weather Modifier...................................... 121

Figure 37: Launch Probability Comparisons.................................................................. 122

Figure 38: Initial Analysis of 04A-29 Manifest Option.................................................. 124

Figure 39: Potential Benefit from Workforce Augmentation......................................... 127

xiii

Figure 40: CTDF for STS-141 Launch Month............................................................... 129

Figure 41: Analysis of 04A-49 Option with S1.O Assumptions.................................... 131

Figure 42: Confidence Bands for STS-136 Launch Date............................................... 132

Figure 43: Modeling Component of PAST..................................................................... 134

Figure 44: Organizational Hierarchy.............................................................................. 141

Figure 45: CQDF for Analysis Team.............................................................................. 143

Figure 46: CQDF with PLOV at .01............................................................................... 144

Figure 47: Improvement from Elimination of Launch on Need Requirement ............... 145

Figure 48: Accelerating ISS Assembly Completion....................................................... 153

LIST OF TABLES

Table 1: Buffer Sizes as Proposed by Yongyi Shou and Yeo
Table 2: Confidence Band Calculation
Table 3: Margin Assessment
Table 4: Margin Days Required To Ensure Next Project Starts on Time
Table 5: 04A-29 Manifest Option
Table 6: 04A-49 Manifest Option

LIST OF ABBREVIATIONS

AOA Activity on Arrow

AON Activity on Node

AXAF Advanced X-Ray Astrophysics Facility

CAIB Columbia Accident Investigation Board

CCPM Critical Chain Project Management

CPM Critical Path Method

CQDF Completion Quantity Distribution Function

CTD Completion Time Distribution

CTDF Completion Time Distribution Function

DES Discrete Event Simulation

DFRC Dryden Flight Research Center

DOD Department of Defense

GERT Graphical Evaluation and Review Technique

ISS International Space Station

KSC Kennedy Space Center

LON Launch on Need

MAST Manifest Analysis by Simulation Tool

MPS Main Propulsion System

NASA National Aeronautics and Space Administration

OPF Orbiter Processing Facility

PAST Project Assessment by Simulation Technique

PCDF Project Completion Distribution Function

PDM Precedence Diagramming Network

PERT Project Evaluation and Review Technique

PLOV Probability of Loss of Vehicle

PMI Project Management Institute

PRACA Problem Reporting and Corrective Action

STS Space Transportation System

TOC Theory of Constraints

VAB Vehicle Assembly Building

VERT Venture Evaluation and Review Technique

CHAPTER ONE: INTRODUCTION

Despite the critical importance of project completion timeliness, project

management practices in place today remain inadequate for addressing the persistent

problem of project completion tardiness. A major culprit causing project completion

tardiness is uncertainty, which most, if not all, projects are inherently subjected to

(Goldratt 1997). This uncertainty is present in many places including the estimates for

activity durations, the occurrence of unplanned and unforeseen events, and the

availability of critical resources. In planning, scheduling, and controlling their projects,

managers may use tools such as Gantt charts and/or network diagrams such as those

provided by Project Evaluation and Review Technique (PERT) or Critical Path Method

(CPM). These tools, however, are limited in their ability to help managers quantify

project completion risk. Consequently, large and highly important projects can end up

finishing later than planned and project stakeholders including politicians, project

managers, and project customers may be unpleasantly surprised when this happens.

The typically cited method for quantitative schedule risk analysis has been called

“stochastic CPM” (Galway 2004). This process uses Monte Carlo simulation to analyze a

project network in order to produce a project completion distribution function. However,

as noted by Galway (2004), there is a lack of literature on the use of this technique in

practice and a lack of case studies that illustrate when this method works or fails.

Consequently, developing a more complete, practical methodology for quantitative analysis of project completion timeliness and demonstrating its effectiveness in a real-world application appears warranted.
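The stochastic CPM idea described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the PAST methodology or Galway's exact procedure: it assumes a small, hypothetical activity-on-node network (`NETWORK`) whose activity durations follow triangular (minimum, most likely, maximum) distributions, runs a forward pass for each replication, and turns the sorted completion times into an empirical completion distribution function. The network, the function names, and the choice of the triangular distribution are all illustrative.

```python
import random
from bisect import bisect_right

# Hypothetical activity-on-node network: name -> ((min, most likely, max) days, predecessors).
# Activities are listed so that every predecessor appears before its successors.
NETWORK = {
    "A": ((4, 5, 9), []),
    "B": ((2, 3, 6), ["A"]),
    "C": ((6, 8, 14), ["A"]),
    "D": ((1, 2, 4), ["B", "C"]),
}

def simulate_once(network, rng):
    """One replication: forward pass returning the project completion time."""
    finish = {}
    for name, ((low, mode, high), preds) in network.items():
        # An activity starts when its latest-finishing predecessor is done.
        start = max((finish[p] for p in preds), default=0.0)
        finish[name] = start + rng.triangular(low, high, mode)
    return max(finish.values())

def completion_samples(network, replications, seed=1):
    """Sorted completion times from many independent replications."""
    rng = random.Random(seed)
    return sorted(simulate_once(network, rng) for _ in range(replications))

def empirical_cdf(samples, t):
    """Fraction of replications finishing on or before t (samples must be sorted)."""
    return bisect_right(samples, t) / len(samples)

samples = completion_samples(NETWORK, 5000)
print(f"P(done within 18 days) ~ {empirical_cdf(samples, 18):.2f}")
```

Because each activity waits for its latest-finishing predecessor, the sampled completion times can exceed the single deterministic critical-path estimate where parallel paths merge, which is one reason a distribution says more than a single date.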

This research develops a comprehensive methodology for project schedule risk

analysis. It is called the Project Assessment by Simulation Technique (PAST) and

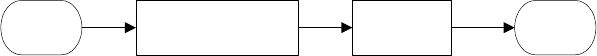

consists of four major components. These are the managerial controls, modeling, input

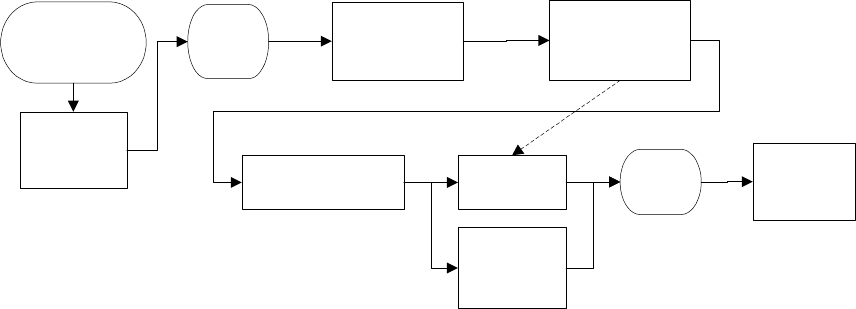

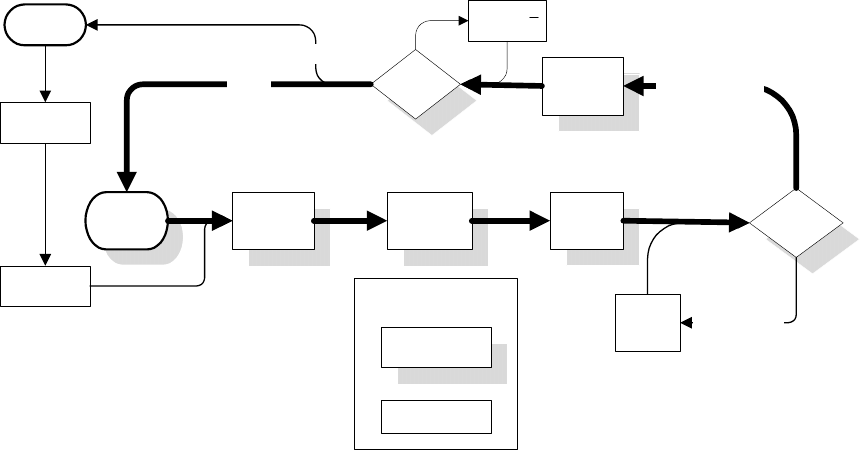

analysis, and output analysis components. Figure 1 shows the components of PAST and

how they are interconnected in a logical fashion.

[Figure 1 diagram: Managerial Controls, Simulation Modeling, Input Analysis (Deterministic & Stochastic), and Output Analysis, connected by feedback loops.]

Figure 1: The Four Components of PAST

PAST and its four major components are described briefly below. They are fully

described in Chapter 3. The managerial controls component provides a facilitating

interface between those performing the assessment and the project stakeholders. The

input analysis component contains both deterministic and stochastic elements. The

deterministic element includes schedule information such as project activities and their durations, along with their precedence and critical resource requirements. This information is used to create a simulation model of the project. The simulation model

includes stochastic inputs in the form of potential growth in activity durations and the

probability of upsetting events occurring during the project. These upsetting events may

cause project stoppages or may require the addition of new work. The basis of estimate

for the stochastic inputs stems from empirical information. The simulation model of the

project is run for many hundreds or thousands of replications so as to produce a large

quantity of possible project completion end dates. This data set is then used by the output

analysis component to create a visual display of project completion uncertainty in the

form of a completion distribution function.
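The following sketch shows, under simplifying assumptions, how the deterministic and stochastic inputs described above might combine in a single replication: each planned duration is inflated by a growth value resampled from historical records of similar work, and an upsetting event with a fixed probability may add a stoppage. The activity list, the historical growth values, the event probability, and the stoppage length are hypothetical placeholders. PAST itself uses a discrete event simulation model; this plain Monte Carlo loop only mimics the idea for a serial chain of activities.

```python
import random

# Hypothetical deterministic inputs: planned activity durations (work days), in sequence.
PLANNED_DAYS = [20, 35, 15, 30]

# Hypothetical empirical inputs: days of growth observed on past, similar activities.
HISTORICAL_GROWTH = [0, 0, 1, 2, 2, 3, 5, 8, 13]

# Hypothetical upsetting event: chance per replication and resulting stoppage (days).
EVENT_PROBABILITY = 0.10
EVENT_DELAY_DAYS = 25

def replicate(rng):
    """Time for one entity to traverse the serial chain of activities."""
    total = 0
    for planned in PLANNED_DAYS:
        # Resample growth directly from the historical data (an empirical distribution).
        total += planned + rng.choice(HISTORICAL_GROWTH)
    # The upsetting event either occurs once in this replication or not at all.
    if rng.random() < EVENT_PROBABILITY:
        total += EVENT_DELAY_DAYS
    return total

def run(replications, seed=2004):
    """Collect completion times from many replications."""
    rng = random.Random(seed)
    return [replicate(rng) for _ in range(replications)]

durations = run(10_000)
print(min(durations), max(durations))
```

Resampling the historical values directly, rather than fitting a parametric distribution, keeps the sketch short; fitting a distribution is an equally reasonable choice when enough data exist.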

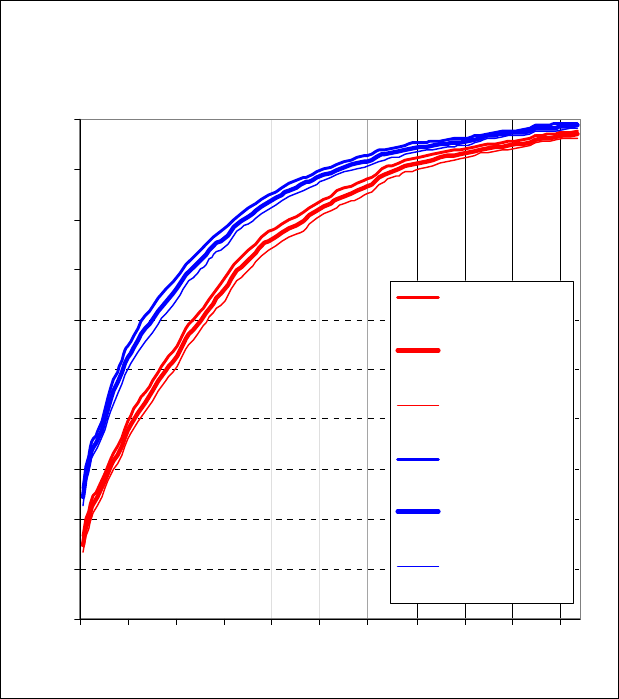

Project stakeholders can visualize project performance uncertainty, e.g. the

likelihood of the project completing late and the magnitude of the lateness, by being

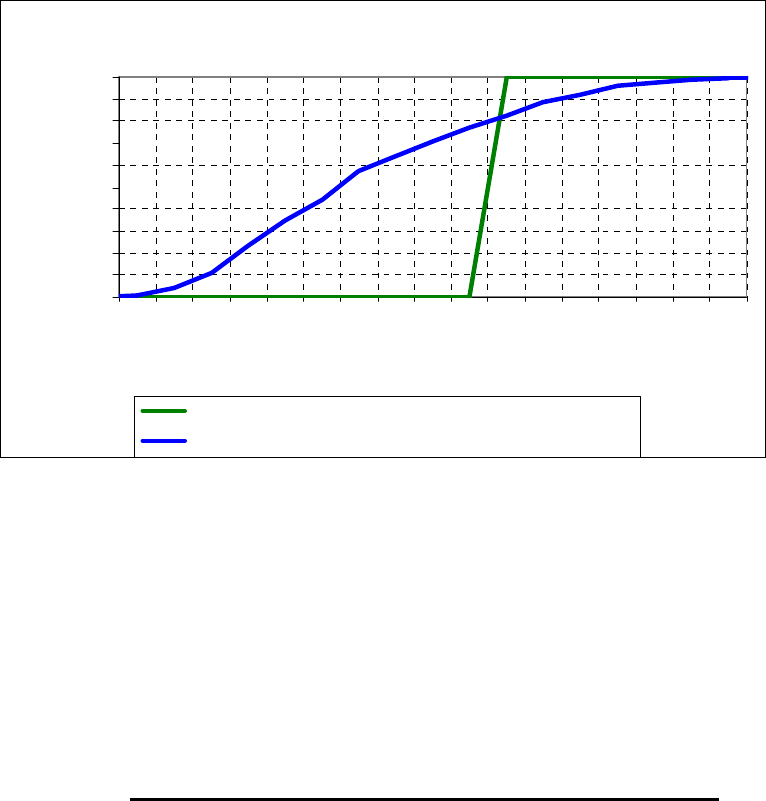

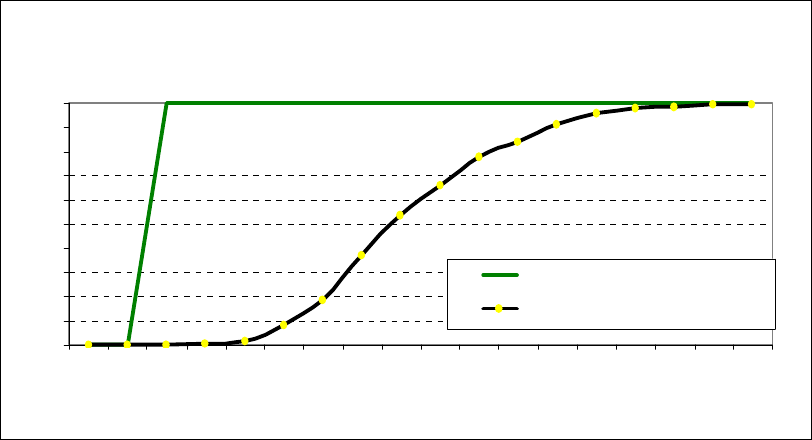

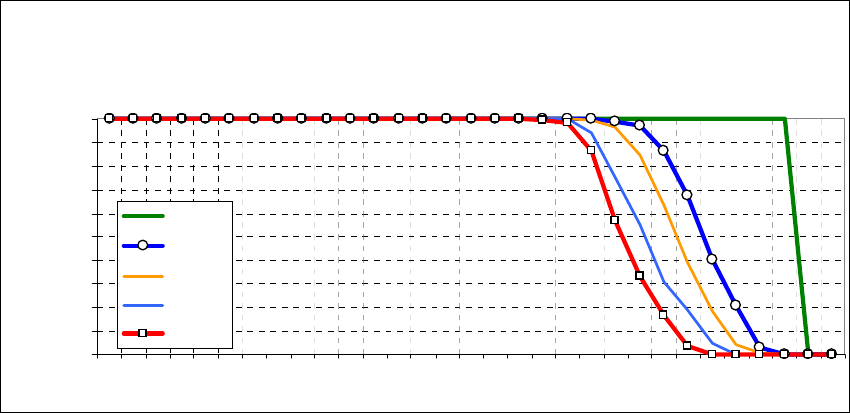

shown the completion distribution function for the project. Figure 2 is an example of a

project completion distribution function. For this notional project, the planned completion

time is October of 2010. The simulation derived completion distribution function for the

project indicates that there is only an approximately 30 percent chance of completing the

project by that time. Moreover, the project may finish as much as a year late.

[Figure 2 plot: Planned Project Completion versus Simulation Derived PCDF. X-axis: Project Completion Month (Mar-10 to Oct-11); y-axis: Cumulative Percentage (0% to 100%); series: Planned Project Completion and Simulation Derived Completion Distribution Function.]

Figure 2: Example Project Completion Distribution Function
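Once the replications are collected, the two quantities read off Figure 2 (the chance of finishing by the planned date and how late the project might finish) reduce to simple counts over the samples. The helper below is a hypothetical illustration, not part of PAST: it assumes completion times expressed in days from a common project start, and the sample values in the usage line are made up.

```python
def completion_risk(samples, planned):
    """Return (on-time probability, worst lateness, 90th-percentile lateness).

    samples: simulated completion times (days from project start)
    planned: planned completion time on the same scale
    """
    if not samples:
        raise ValueError("need at least one replication")
    ordered = sorted(samples)
    n = len(ordered)
    on_time = sum(1 for t in ordered if t <= planned) / n
    worst_late = max(0, ordered[-1] - planned)
    # Nearest-rank 90th percentile: rank = ceil(0.9 * n), computed in integers.
    rank = (9 * n + 9) // 10
    p90_late = max(0, ordered[rank - 1] - planned)
    return on_time, worst_late, p90_late

print(completion_risk([90, 95, 100, 104, 110, 120, 130, 150, 160, 200], planned=100))
# prints (0.3, 100, 60)
```

Reporting a high percentile alongside the worst case is a design choice: the maximum of a finite set of replications is sensitive to a few extreme draws, while a percentile is more stable.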

The Project Assessment by Simulation Technique allows one to not only quantify

risk to project completion timeliness but also enables the analysis of managerial decisions

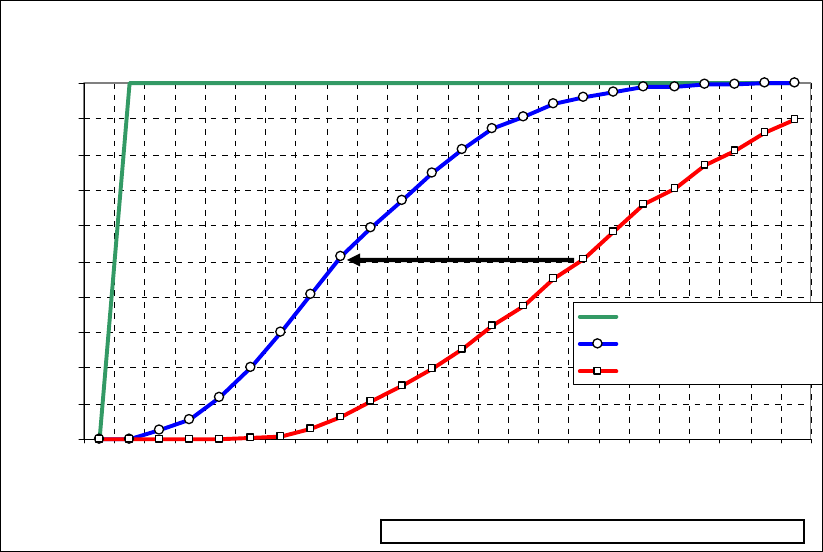

on project completion timeliness. For example, suppose the project manager responded

to the projection shown in Figure 2 by suggesting the elimination of project content, or by

increasing staffing on a critical path task, or any number of similar actions intended to

improve project completion timeliness. The likely results of those proposed actions could

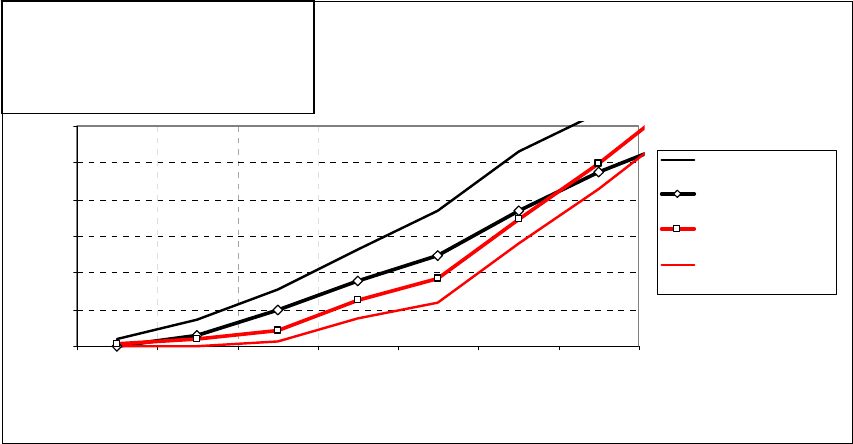

be quantified prior to implementing them. Figure 3 shows the improvement in the

notional project likely to result from the proposed project management actions.

[Figure 3 plot: Planned Project Completion versus Simulation Derived CDF. X-axis: Project Completion Month (Dec-09 to Apr-11); y-axis: Cumulative Percentage (0% to 100%); series: Planned Project Completion and Simulation Derived Completion Distribution Function.]

Figure 3: Improved Project Completion Timeliness

In the above example the proposed action of the project manager increased the

likelihood of on-time project completion to approximately 80 percent.
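To quantify a proposed action before implementing it, one can rerun the same model with the changed inputs and compare on-time probabilities. The sketch below assumes, hypothetically, that the action shortens one activity's planned duration (for example, through added staffing). It runs both scenarios from the same random seed (common random numbers), so each mitigated replication differs from its baseline counterpart only by the change itself, not by sampling noise. All numbers are illustrative and are not taken from Figures 2 or 3.

```python
import random

def simulate(planned_days, growth_choices, event_prob, event_delay, replications, seed):
    """Completion times for a serial chain; the same seed gives the same random draws."""
    rng = random.Random(seed)
    results = []
    for _ in range(replications):
        total = sum(d + rng.choice(growth_choices) for d in planned_days)
        if rng.random() < event_prob:
            total += event_delay
        results.append(total)
    return results

def on_time_probability(samples, planned_finish):
    return sum(t <= planned_finish for t in samples) / len(samples)

GROWTH = [0, 1, 2, 4, 7, 12]   # hypothetical historical growth, days
BASELINE = [30, 45, 25]        # hypothetical planned durations, days
MITIGATED = [30, 35, 25]       # hypothetical action: middle activity cut by 10 days
PLANNED_FINISH = 110           # hypothetical planned completion, days from start

base = simulate(BASELINE, GROWTH, 0.15, 20, 5000, seed=42)
mit = simulate(MITIGATED, GROWTH, 0.15, 20, 5000, seed=42)
print(f"baseline {on_time_probability(base, PLANNED_FINISH):.2f} -> "
      f"mitigated {on_time_probability(mit, PLANNED_FINISH):.2f}")
```

Both scenarios consume random numbers in the same order, so the paired comparison isolates the effect of the proposed action; with independent seeds, a small improvement could be masked by replication-to-replication noise.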

Large Projects Advanced Science of Project Management

This research matters because of the importance placed upon project completion performance, particularly for large projects. Examples of large projects include cargo ship building during World War I, the Manhattan Project to build the first atomic bomb, the Polaris missile program, the Minuteman missile program, and the Apollo program. The science of project management has been advanced as a result of such critically important large projects.

During World War I, there was a pressing need for cargo ships to transfer war supplies from America to Europe. Henry Gantt reduced the time to build cargo ships through the use of bar charts he invented. The charts, later named Gantt charts, visually displayed the activities, and their durations, required to build each ship. In World War II, robust and accurate project planning, scheduling, and tracking methods were required and developed to carry out the Manhattan Project (Morris 1994, 1997).

During the 1950s, the United States grew concerned about the Soviet Union’s advancement in space, especially with respect to its deployment of nuclear-capable intercontinental ballistic missiles (ICBMs). This fear had profound effects upon the United States and served to spark a space race to develop ICBMs. The United States Air Force managed the development of ground-based ICBMs. The liquid-fueled rocket was called Atlas and the solid-fueled rocket was called Minuteman. The Navy managed a similar project, the Polaris Missile Project, to develop an ICBM to be launched from submarines. It was critically important to national survival to develop these systems as soon as possible. The science of project management was advanced out of this necessity.

The Program Evaluation and Review Technique (PERT) was developed to support the Polaris project (Malcolm 1959; Levin and Kirkpatrick 1966; Moder et al 1983). According to the Navy, through using PERT, the Polaris project was completed two years ahead of the original estimated schedule completion date.


In 1957, the Soviet Union became the first nation to place a satellite, Sputnik, in orbit around the earth. The event increased the pressure upon the American military to deploy the Atlas and Polaris missile systems as soon as possible. Sputnik also prompted the creation, in 1958, of the National Aeronautics and Space Administration (NASA). The Mercury program, initiated in 1958, was NASA’s project for placing an American in space before the Soviet Union. In April of 1961, the Soviets were the first to achieve this milestone when they orbited Yuri Gagarin around the earth. In May the United States responded with the suborbital launch of Alan Shepard. Because the Soviet Union was clearly leading the space race, President Kennedy established a project far enough in the future and difficult enough to achieve that the United States would have an opportunity to catch and surpass the Soviets. This project was the landing of an American on the moon. Kennedy set the completion milestone of ‘by the end of the 1960s.’

It is interesting to note that President Kennedy, in announcing the decision to go to the moon, gave the nation a large project completion buffer. The original estimate was that the United States could land on the moon as early as 1966 or 1967 (Johnson 1971). In his public announcement, Kennedy charged that the moon landing should occur by the end of the decade. Thus, there was a project buffer of anywhere from 3 to 4 years.


The Apollo program—the project to achieve the manned lunar landing—has been

called a “paradigm of modern project management” (Morris, 1997). During the program

the project management techniques described above were used and in some cases

improved upon. Additionally, new project management techniques were developed that

have since become commonplace in project management. Examples include phased

project planning and configuration control.

In 1962, NASA and the Department of Defense published the “DoD and NASA

Guide PERT/Cost Systems Design.” This newer PERT/Cost system included a cost


element, which was not included in the original PERT. Additionally, the 1962 guide

introduced government management to the Work Breakdown Structure. The Work

Breakdown Structure has since become a central component of management for large

government projects (Morris, 1997).

In 1963, Brigadier General Phillips, on the Minuteman ICBM program, developed the Earned Value system (Morris 1994, 1997). Earned Value has since become a widely used tool for project management (Fleming & Koppelman 2000).

In 1966 A. Alan B. Pritsker of the RAND Corporation developed the Graphical Evaluation and Review Technique (GERT) for NASA’s Apollo program (Pritsker 1966). In GERT, branches of the project network can have some probability of not having to be accomplished, whereas in PERT/CPM all branches must be accomplished. GERT also allows for looping back in the project to repeat earlier steps, whereas PERT/CPM networks are ‘one time through’ networks.
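To make the contrast concrete, the following Python code is a minimal sketch of a small, entirely hypothetical GERT-style network: a design activity, a test that fails with some probability and loops back through rework, and a certification branch that is performed only some of the time. The durations, probabilities, and names (`DESIGN`, `simulate_once`, `p_fail`, `p_cert`) are illustrative assumptions, not data from any project. In a PERT/CPM treatment, every branch would be performed exactly once.

```python
import random
import statistics

# Hypothetical durations (days) for a small GERT-style network.
DESIGN, TEST, REWORK, CERTIFY = 5.0, 3.0, 2.0, 4.0

def simulate_once(rng, p_fail=0.3, p_cert=0.4):
    """One pass through the hypothetical network; returns its completion time."""
    t = DESIGN + TEST
    # Loop: a failed test sends the work back through rework and a retest.
    while rng.random() < p_fail:
        t += REWORK + TEST
    # Probabilistic branch: certification is performed only some of the time.
    if rng.random() < p_cert:
        t += CERTIFY
    return t

def simulate(n=20000, seed=1, **probs):
    """Monte Carlo replications of the network."""
    rng = random.Random(seed)
    return [simulate_once(rng, **probs) for _ in range(n)]

times = simulate()
print(round(statistics.mean(times), 2))
```

Under these assumptions, the number of failed tests is geometric, so the expected completion time is 8 + 5(0.3/0.7) + 4(0.4), or about 11.74 days. The simulated mean should approximate this value.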

PAST and the International Space Station

The Project Assessment by Simulation Technique (PAST), like Gantt charts, PERT, and GERT, was developed to meet the specific needs of a large and important project. The particular project that sparked the need for PAST is the ongoing effort to assemble the International Space Station. Additionally, this research not only developed a new methodology for project risk analysis but also used case study research techniques to demonstrate the use and effectiveness of this new management tool on that project.


Project stakeholders participated in developing and managing completion distribution functions for that project. The results were improved awareness of project completion risk and projected improvements in project completion performance. Timely completion of the International Space Station project will free up billions of dollars in annual funding required to begin important follow-on space projects, e.g., returning to the moon and landing on Mars.

Furthermore, like earlier project management tools such as Gantt charts and PERT, the PAST methods may be applicable to other projects.

Applicability to Other Projects

Financial incentives and penalties based on project completion are a widely used strategy on highway construction projects (Jaraiedi et al 2002). The contractor is rewarded for finishing early and penalized for finishing late. Consequently, it is desirable to accurately quantify the completion time distribution function of the project. This ability may be important during the project bid and planning phases as well as during the project itself. For example, a contracting agency should have some idea of the risk associated with a project before establishing the financial incentives and disincentives for the contract for that project. The companies bidding on the contract require similar knowledge in order to submit a competitive bid that, if chosen, will allow them to earn a profit.
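The following is a minimal sketch, assuming a simple linear per-day incentive and disincentive scheme, of how a completion time distribution could inform such a contract. It converts simulated completion times into an on-time probability and an expected net payment. The function names, the triangular completion-time distribution, and the dollar rates are hypothetical and are not taken from Jaraiedi et al.

```python
import random

def expected_incentive(completion_days, target_day, bonus_per_day, penalty_per_day):
    """Average incentive (+) or disincentive (-) over simulated completion times."""
    total = 0.0
    for day in completion_days:
        if day <= target_day:
            total += (target_day - day) * bonus_per_day    # early finish earns a bonus
        else:
            total -= (day - target_day) * penalty_per_day  # late finish pays a penalty
    return total / len(completion_days)

def probability_on_time(completion_days, target_day):
    """Share of simulated completions on or before the target day."""
    return sum(day <= target_day for day in completion_days) / len(completion_days)

# Hypothetical project: completion time triangular between 90 and 130 days, mode 105.
rng = random.Random(3)
days = [rng.triangular(90, 130, 105) for _ in range(10000)]
print(probability_on_time(days, 110), expected_incentive(days, 110, 1000, 2000))
```

An agency could vary `target_day` and the rates to see how each choice shifts the expected payment. A bidder could use the same calculation to price its risk.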


Overview of Subsequent Chapters

The literature review in Chapter 2 focuses first on the most recent work concerning project risk analysis and the use of simulation. The chapter then shifts to a chronological review of management tools (including Gantt charts and PERT), the Project Management Institute, and the Critical Chain Project Management philosophy. The chapter concludes with a brief review of using empirical data to develop (1) probability distributions for activity durations and (2) event probabilities. Chapter 3 presents the details of the Project Completion Distribution Function methodology and also develops the ground rules for conducting subsequent case studies in a real-world environment to determine whether the methodology provides practical benefits. Chapter 4 describes the case studies in detail and presents the results. Chapter 5 summarizes and concludes the research.


CHAPTER TWO: LITERATURE REVIEW

There is an extensive body of literature on the subject of project management.

This review focuses upon that subset pertaining to project analysis, risk, and uncertainty

with respect to completion performance. The more recent literature specific to project

schedule risk analysis and assessment is presented first as it is most germane to the

research presented in subsequent chapters. The chapter also includes a historical review

regarding the development of classic project management tools pertaining to schedule

risk during the twentieth century. These include Gantt Charts, PERT/CPM, Precedence

Diagramming, GERT/Q-GERT and VERT. The formation of the Project Management

Institute is presented along with the relevant recommendations from that organization

with respect to handling project risk and uncertainty. The Critical Chain Project

Management philosophy is also discussed. The chapter concludes with a review of

literature pertaining to the use of empirical data for estimating project activity durations.

Project Schedule Risk Analysis/Assessment

Galway (2004) states that the literature on project risk analysis, which typically includes both cost and schedule risk, is found primarily in textbooks, along with some professional-society-sponsored tutorials and training seminars. There are a few textbooks dedicated to project risk analysis, such as Schuyler (2001). However, their content can also be found in more general texts on risk analysis, including Cooper and Chapman (1987), Vose (1996, 2000), and Bedford and Cooke (2001).

The typically cited procedure for project schedule risk analysis may be referred to

as ‘stochastic CPM,’ (Galway 2004). This process starts with a project network that is

then analyzed using Monte Carlo simulation. Soliciting expert opinion is the typically

recommended method for obtaining probability distributions for task durations and

probabilities for events. Bedford and Cooke (2001) state that, “it is the exception rather

than the rule to have access to reliable and applicable historical data.” The estimates of

the experts are most likely to be converted into triangular distributions in the case of

activity durations. For events, Bedford and Cooke suggest that experts be asked for the

probability of the event and if it occurs how long the resulting delay would be. Output

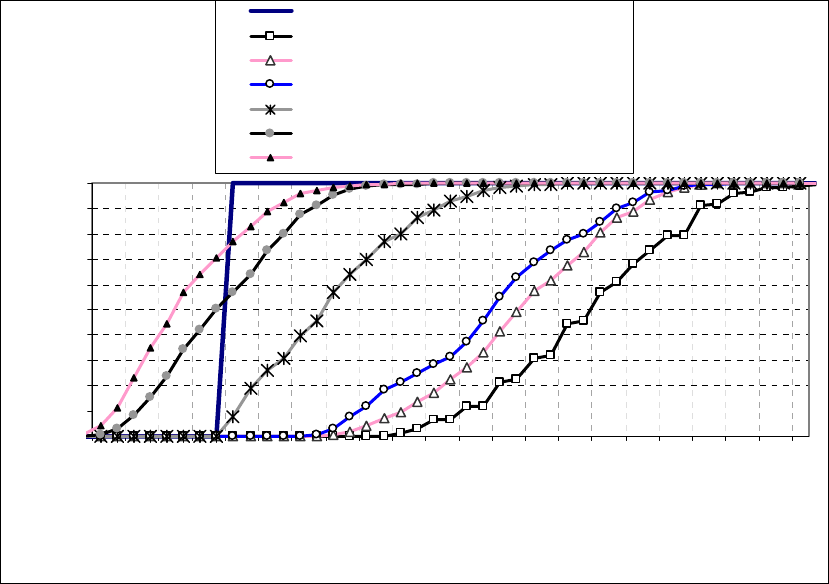

analysis focuses on (1) understanding the project completion distribution function and (2)

determining the probability that any given task is on the critical path.
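The stochastic CPM procedure described above can be sketched in a few dozen lines of Python. This is an illustration, not the procedure of any cited author. The four-activity network, the triangular (low, most likely, high) estimates, and the single risk event (probability 0.2, 5-day delay) are hypothetical. Each replication samples durations, applies the event, performs a forward pass, and traces the critical path back from the last activity to finish. The two outputs match those named in the text: an empirical completion CDF and a criticality index for each task.

```python
import random
from bisect import bisect_right

# Hypothetical activity-on-node network, listed in topological order:
# name -> ((low, most likely, high) duration in days, predecessors)
NETWORK = {
    "A": ((4, 6, 10), []),
    "B": ((3, 5, 9), ["A"]),
    "C": ((6, 7, 12), ["A"]),
    "D": ((2, 3, 5), ["B", "C"]),
}
# Hypothetical risk event: (affected activity, probability, delay in days if it occurs)
EVENTS = [("C", 0.2, 5.0)]

def one_replication(rng):
    # Sample each duration; note random.triangular takes (low, high, mode).
    dur = {n: rng.triangular(lo, hi, mode) for n, ((lo, mode, hi), _) in NETWORK.items()}
    # Apply each discrete event with its stated probability.
    for name, prob, delay in EVENTS:
        if rng.random() < prob:
            dur[name] += delay
    finish, driver = {}, {}
    for name, (_, preds) in NETWORK.items():
        # An activity starts when its latest-finishing predecessor finishes.
        driver[name] = max(preds, key=finish.get) if preds else None
        start = finish[driver[name]] if preds else 0.0
        finish[name] = start + dur[name]
    # Walk back from the last-finishing activity along the driving predecessors.
    node = max(finish, key=finish.get)
    end, critical = finish[node], set()
    while node is not None:
        critical.add(node)
        node = driver[node]
    return end, critical

def stochastic_cpm(n=10000, seed=42):
    """Returns sorted completion times and each activity's criticality index."""
    rng = random.Random(seed)
    times, hits = [], dict.fromkeys(NETWORK, 0)
    for _ in range(n):
        end, critical = one_replication(rng)
        times.append(end)
        for name in critical:
            hits[name] += 1
    times.sort()
    return times, {name: count / n for name, count in hits.items()}

def prob_complete_by(sorted_times, day):
    """Empirical CDF: share of replications finishing on or before `day`."""
    return bisect_right(sorted_times, day) / len(sorted_times)

times, criticality = stochastic_cpm()
print(prob_complete_by(times, 25.0), criticality)
```

In this toy network, A and D are always critical. The criticality indexes of the parallel activities B and C sum to one because exactly one of them drives D in each replication.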

Cumulative frequency distributions are the graphic of choice for presenting the completion time of the project. Vose (1996), Bedford and Cooke (2001), and Roberts et al (2003) all use cumulative distribution functions to represent the project completion time results from the Monte Carlo simulation. However, none of these sources discusses a confidence interval or band for the CDF.
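As an illustration only (this technique is not drawn from the sources above), one standard way to attach a band to a simulated CDF uses the Dvoretzky-Kiefer-Wolfowitz (DKW) inequality. With n independent replications, the true CDF lies within plus or minus sqrt(ln(2/alpha)/(2n)) of the empirical CDF at every point simultaneously, with probability at least 1 - alpha. The sketch below assumes the replications are independent. The sample completion times are hypothetical.

```python
import math
import random
from bisect import bisect_right

def ecdf_with_dkw_band(sorted_samples, x, alpha=0.05):
    """Empirical CDF at x plus a simultaneous (1 - alpha) DKW confidence band.

    Assumes the samples are independent replications of the completion time.
    """
    n = len(sorted_samples)
    f_hat = bisect_right(sorted_samples, x) / n
    eps = math.sqrt(math.log(2.0 / alpha) / (2.0 * n))  # DKW half-width
    return max(0.0, f_hat - eps), f_hat, min(1.0, f_hat + eps)

# Usage with hypothetical simulated completion times (days).
rng = random.Random(0)
completions = sorted(rng.triangular(300, 420, 340) for _ in range(1000))
print(ecdf_with_dkw_band(completions, 365.0))
```

Because the half-width shrinks only as 1/sqrt(n), quadrupling the number of replications halves the width of the band.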

There is also typically little or no information presented on how one goes about managing such an analysis in a real-world environment. Galway emphasized the lack of literature on the actual use of this technique.


The striking lack in the textbook literature is that there is little literature cited

on the use of the techniques. That is, there are no pointers to critical literature

about the techniques such as when they are useful, or if there are any projects

or project characteristics that would make it difficult to apply these methods.

There are also few or no sets of case studies that would illustrate when the

methods worked or failed.


Galway (2004) expresses concern that the literature examples of simulation

assessments for project completion times were typically anecdotal. For example, Roberts

et al (2003) cited success in using project completion distribution functions provided via

Monte Carlo simulation but did not go into detail.

Monte Carlo Simulation, as opposed to Discrete Event Simulation (DES), seems to dominate the project management literature. This may stem from the manner in which DES is taught. Foundational books on DES, such as Law and Kelton’s Simulation Modeling and Analysis, Kelton, Sadowski, and Sadowski’s Simulation with Arena, and Banks et al’s Discrete-Event System Simulation, do not discuss using DES for project management. Nonetheless, DES seems ideally suited for modeling projects and analyzing when project completion may occur. First, DES can handle stochastic input and output. Second, the basic constructs of commercially available DES software, e.g., Arena by Rockwell Software, are similar to PERT-CPM constructs, i.e., activity-on-node network diagrams. DES software also allows one to include multiple resource constraints, to which all projects are subject at least to some degree.
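To show why resource constraints fit naturally into DES, the following Python code is a minimal sketch. It is a hand-written event loop, not Arena or any commercial package. A hypothetical five-activity network is simulated, with each activity holding one crew from a limited pool. A future-event list (a heap of completion times) advances the clock. Durations are deterministic here for clarity. In a full analysis, they would be sampled in each replication.

```python
import heapq

# Hypothetical activity-on-node network: name -> (duration in days, predecessors).
TASKS = {
    "A": (3.0, []),
    "B": (4.0, ["A"]),
    "C": (2.0, ["A"]),
    "D": (5.0, ["A"]),
    "E": (1.0, ["B", "C", "D"]),
}

def simulate(tasks, crews):
    """Event-driven simulation; each task needs one of `crews` (>= 1) identical crews."""
    remaining_preds = {t: set(p) for t, (_, p) in tasks.items()}
    successors = {t: [] for t in tasks}
    for t, (_, preds) in tasks.items():
        for p in preds:
            successors[p].append(t)
    ready = sorted(t for t, p in remaining_preds.items() if not p)  # FIFO queue
    events = []                      # future event list: (finish_time, task)
    free, now, finish = crews, 0.0, {}
    while ready or events:
        # Start as many ready tasks as there are free crews.
        while ready and free > 0:
            task = ready.pop(0)
            free -= 1
            heapq.heappush(events, (now + tasks[task][0], task))
        # Advance the clock to the next completion event and release its crew.
        now, task = heapq.heappop(events)
        finish[task] = now
        free += 1
        for s in successors[task]:
            remaining_preds[s].discard(task)
            if not remaining_preds[s]:
                ready.append(s)
    return finish

for crews in (1, 2, 3):
    print(crews, simulate(TASKS, crews)["E"])
```

With three crews, the project finishes at its unconstrained critical path length. With fewer crews, the parallel activities B, C, and D must queue for a crew, which lengthens the project. A pure network calculation without resources would not capture this effect.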


There are more recent examples of DES being suggested for use in project

management. Ottjes and Veeke (2000) and Simmons (2002) used discrete event

simulation to model PERT/CPM project networks so as to determine the completion time

distribution of example projects.

Williams (1995), in his classified bibliography of recent research on project risk

management, stated that no research had been done on analytical techniques for project

networks having resource constraints. He noted that simulation and modeling of projects

with either resource constraints or complex uncertainties was the advised technique. He

cited research by Kidd (1991) as an example.

Kidd (1991) demonstrated how a manager can receive a different message about the risk of completing a project on time depending on which tool is used, i.e., CPM, PERT, or VERT. In Kidd’s example, the project manager would have to pay a penalty if the project duration was greater than 52 days. With deterministic CPM, the project completed on time by definition. The same project modeled using the PERT method showed a high probability of completing in 52 days. With the simulation-based method titled VERT (Venture Evaluation and Review Technique), the project had a low probability of completing in 52 days. Also of note here is that the VERT results were presented as a project completion time distribution.


Kidd proposed that, as a rule, the simulation-based VERT should be used for projects having uncertainty and stochastic branching. Kidd acknowledged that VERT was more expensive than PERT, which was in turn more expensive than CPM. Nonetheless, VERT was warranted for complex projects where the costs of failure were high.

While VERT has not grown into a widely used project management tool, the capacity to conduct simulation modeling of projects has increased. Vose (1996) lists several products that enable Monte Carlo modeling of projects. These include @RISK (an add-on to Microsoft Excel and Microsoft Project) by Palisade; Monte Carlo by Euro Log Limited; OPERA by Welcom Software Technology UK; PREDICT! by Risk Decisions UK; RISK 7000 by Chaucer Group Ltd UK; and RISK+ by Program Management Solutions, Inc.

The Project Management Institute, in the 2000 edition of the Project Management Body of Knowledge (PMBOK®), identifies Monte Carlo simulation, along with decision analysis, as a viable tool for conducting quantitative risk analysis. “A project simulation uses a model that translates the uncertainties specified at a detailed level into their potential impact on objectives that are expressed at the level of the total project. Project simulations are typically performed using the Monte Carlo technique.” Examples of outputs from quantitative risk analysis include forecasts of possible completion dates and their associated confidence levels, and the probability of completing the project on schedule. As the project progresses, and assuming the quantitative analysis is repeated, trends can also be identified.


Project Management Tools

Morris (1994, 1997) provides an excellent in-depth history of the evolution of the management of projects in general. He includes discussion and references for project management tools, including those directly related to schedule risk. Galway (2004), with acknowledgement to Morris, presents a more narrowly focused discussion on the history, evolution, and current state of quantitative risk analysis tools and methods for project management.

The development of twentieth-century project management tools began in the early 1900s with the establishment of Gantt charts. In the late 1950s, two analytical methodologies for project management were developed independently: the Critical Path Method (CPM) and the Program Evaluation and Review Technique (PERT). CPM consists of precedence network diagrams that map or connect the activities required to complete the project. PERT also consists of similar diagrams. Since their independent development in the late 1950s and early 1960s, these tools have become synonymous and are sometimes referred to as PERT-CPM. While PERT and CPM are similar in form, they differ in functionality. Development of the GERT and VERT tools followed shortly after the PERT/CPM tools became well known.


Gantt Charts

In 1908 Henry Lawrence Gantt of the Philadelphia Naval Shipyard developed milestone charts that displayed transatlantic shipping schedules (Gantt, 1919; Rathe, 1961; Devaux, 1999). These charts were later adapted for use on any project. A Gantt chart displays the tasks to be done in a project in a waterfall fashion, along with the duration of each task. The typically cited weakness of Gantt charts is their inability to show the interrelationships between tasks (Morris, 1997). This is especially true for complex projects (Galway, 2004). Nonetheless, Gantt charts, which are sometimes referred to as “bar charts,” are an excellent visual tool and are still widely used (Devaux, 1999).


CPM

Critical Path Method (CPM) was developed in the 1956-1959 timeframe for plant

maintenance and construction projects by the du Pont Company and Remington Rand

Univac (Walker and Sayer 1959). Kelly (1961) described the mathematical basis for

CPM. Moder et al (1983) stated that a prime focus of the CPM developers was to create

a method for quantifying the tradeoffs between project completion time and project cost.
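The deterministic calculation at the heart of CPM is commonly presented as a forward pass, which gives the earliest start and finish times, and a backward pass, which gives the latest start times. Total slack is the difference between latest and earliest start, and zero-slack activities form the critical path. The following Python code is a minimal sketch of that textbook calculation, applied to a hypothetical five-activity network. It is not a reproduction of Kelly’s formulation.

```python
# Hypothetical activity-on-node network in topological order:
# name -> (duration in days, predecessors)
ACTIVITIES = {
    "A": (3, []),
    "B": (4, ["A"]),
    "C": (2, ["A"]),
    "D": (5, ["B", "C"]),
    "E": (1, ["C"]),
}

def cpm(activities):
    """Deterministic CPM: forward pass, backward pass, total slack, critical activities."""
    early_start, early_finish = {}, {}
    for name, (dur, preds) in activities.items():  # forward pass
        early_start[name] = max((early_finish[p] for p in preds), default=0)
        early_finish[name] = early_start[name] + dur
    project_end = max(early_finish.values())

    successors = {name: [] for name in activities}
    for name, (_, preds) in activities.items():
        for p in preds:
            successors[p].append(name)

    late_start = {}
    for name in reversed(list(activities)):  # backward pass
        late_finish = min((late_start[s] for s in successors[name]), default=project_end)
        late_start[name] = late_finish - activities[name][0]

    slack = {name: late_start[name] - early_start[name] for name in activities}
    critical = [name for name in activities if slack[name] == 0]
    return project_end, slack, critical

print(cpm(ACTIVITIES))
```

Here the project takes 12 days, and the critical path is A, B, D. Activities C and E have 2 and 6 days of slack, respectively.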


CPM is adept at handling activity cost variability with respect to normal versus “crash” activity durations. An activity is considered crashed when its duration has been minimized by applying additional resources, such as people, machines, or overtime. Using CPM, one can calculate a deterministic range of completion dates based upon how much money is spent on “crashing,” or reducing, activity durations. CPM allows an analyst to determine the optimal use of resources for various completion dates. However, CPM techniques and methodologies do not allow for consideration of the stochastic nature of activity durations and project completion dates.
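The time-cost tradeoff can be illustrated with a minimal sketch that makes two simplifying assumptions: each activity’s crash cost is linear per day saved, and the project is a single chain, so every activity is critical. Under those assumptions, the cheapest way to shorten the project by one day is to crash the activity with the lowest remaining cost slope. All data below are hypothetical. In a real network, crashing must target the current critical path and re-evaluate it as parallel paths become critical, which this sketch deliberately sidesteps.

```python
# Hypothetical serial project (every activity is on the single critical path):
# (name, normal_days, crash_days, normal_cost, crash_cost)
CHAIN = [
    ("design", 10, 7, 50_000, 65_000),
    ("build", 20, 15, 200_000, 240_000),
    ("test", 6, 5, 30_000, 33_000),
]

def time_cost_curve(chain):
    """Crash one day at a time, always choosing the cheapest remaining cost slope.

    Returns (project duration, total cost) pairs from all-normal to all-crashed.
    Assumes linear cost slopes and a single chain of activities.
    """
    duration = {name: normal for name, normal, _, _, _ in chain}
    floor = {name: crash for name, _, crash, _, _ in chain}
    slope = {name: (cc - nc) / (normal - crash) for name, normal, crash, nc, cc in chain}
    cost = sum(nc for _, _, _, nc, _ in chain)
    curve = [(sum(duration.values()), cost)]
    while True:
        crashable = [n for n in duration if duration[n] > floor[n]]
        if not crashable:
            return curve
        cheapest = min(crashable, key=slope.get)
        duration[cheapest] -= 1
        cost += slope[cheapest]
        curve.append((sum(duration.values()), cost))

for days, cost in time_cost_curve(CHAIN):
    print(days, cost)
```

Each point on the resulting curve is one deterministic completion date and its cost, which is the kind of range the text describes.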

PERT

Malcolm et al (1959) described the basics of PERT as well as its development

history. PERT (Program Evaluation and Review Technique) was developed by a joint

government/industry team consisting of the U.S. Navy, Lockheed Aircraft Corporation,

and the consulting firm of Booz, Allen & Hamilton.

PERT was developed in support of the Navy’s Polaris project, an effort to build the first submarine-launched, nuclear-armed ballistic missile. The Polaris project involved 250 prime contractors and approximately 9,000 subcontractors. According to the Navy, through using PERT, the Polaris project was completed two years ahead of the original estimated schedule completion date.


Sapolsky (1972), however, writes that there was internal resistance to using PERT, that it was used by only a small portion of the Polaris team, and that its main benefit was in the manipulation of external stakeholders. The senior leadership of the Polaris program made a point of advertising the development and use of PERT. The public appearance that the Polaris program was being managed with a modern management methodology, i.e., PERT, helped deflect micromanagement attempts from higher Naval headquarters and Congress.

Levin and Kirkpatrick (1966) have indicated that a forerunner of PERT may have

been Gantt’s milestone charts. The networks that formed the basis of PERT represented a

considerable improvement upon Gantt charts. PERT networks could show more of the

interrelationships, were more adept at supporting large and complicated projects, and

employed probability theory when specifying task durations and overall project

completion.


Activity Duration Specification

A fundamental premise of PERT is that activity (i.e., task) durations can only be estimated, typically because the specific activity has not been done before. Note that PERT was developed for a research and development project in which much of the work was new. Consequently, Malcolm et al (1959) established a process by which competent engineers responsible for the specific activity would provide estimates of activity duration in the form of the most likely, most optimistic, and most pessimistic duration. PERT typically uses the notation a (optimistic), b (pessimistic), and m (most likely). This practice was intended to “disassociate the engineer from his built-in knowledge of the existing schedule and to provide more information concerning the inherent difficulties and variability in the activity being estimated” (Malcolm et al, 1959).


The expected activity duration (Te) is

derived by using the weighted average method calculation shown in Equation 1.

Te = (a + 4m + b)/6 (Equation 1)

This method was based upon a decision by the developers of PERT that the beta distribution was most appropriate for specifying the range of uncertainty in task durations. It was also assumed that the distance between the most optimistic and most pessimistic duration estimates would equal 6 standard deviations. Based upon this assumption, the standard deviation of each estimated task duration can be calculated in accordance with Equation 2.

Standard Deviation = (b - a)/6 (Equation 2)

The expected project completion date is obtained by summing the Te values of the activities on the critical path. The activity standard deviations can then be combined, by summing their variances, to create a normal curve about that date.
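The PERT calculation can be sketched in Python as follows. The expected time uses (a + 4m + b)/6, and the standard deviation is taken as (b - a)/6, one sixth of the optimistic-to-pessimistic range. Along the critical path, the expected times and the variances (not the standard deviations) are summed. The probability of meeting a deadline is then read from the normal distribution. The critical path and its three-point estimates are hypothetical, and the critical path is assumed to be known in advance.

```python
import math

def pert_estimate(a, m, b):
    """Three-point PERT estimate: returns (Te, standard deviation)."""
    te = (a + 4 * m + b) / 6
    sd = (b - a) / 6
    return te, sd

def pert_probability(critical_path, deadline):
    """P(project duration <= deadline) under PERT's normal approximation.

    Path mean = sum of Te values; path variance = sum of activity variances.
    """
    mean = sum(pert_estimate(*est)[0] for est in critical_path)
    var = sum(pert_estimate(*est)[1] ** 2 for est in critical_path)
    z = (deadline - mean) / math.sqrt(var)
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))  # standard normal CDF at z

# Hypothetical critical path of two activities, each given as (a, m, b) in days.
path = [(3, 6, 9), (1, 4, 13)]
print(pert_probability(path, 13))
```

For this path, Te is 6 + 5 = 11 days and the variance is 1 + 4 = 5. A deadline of 11 days therefore gives exactly a 50 percent chance, regardless of how skewed the individual estimates are.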

Levin and Kirkpatrick (1966) noted that the need to use the single value of the weighted average arose because PERT could not deal with continuous distributions, or even with three different times simultaneously. They also stated that in PERT, “a probability of .6 or better of finishing on time is considered good.”


It is also noteworthy that the three-estimate basis for activity durations has proved difficult in practice. For example, in the early 1960s NASA used PERT but abandoned the use of three estimates (Morris 1997).

Estimating Project Completion Times

PERT provides the capability to estimate a project’s completion time distribution function. However, that estimate is based upon simplifying assumptions that may not be warranted for a particular project. Additionally, the PERT estimate is known to be optimistically biased.

After a PERT network of a project has been created, an expected completion date can be calculated by summing the weighted averages for each of the project activities along the critical path. With this method, the stochastic nature of project completion time is essentially reduced to a deterministic estimate. However, it is possible to create a normal curve about the calculated project completion date by summing the variances (squared standard deviations) of the critical path activities. This implies that projects have an equal probability of completing before or after the calculated completion date. For most projects, however, it is far easier for projects to be delayed than to be completed early.

The PERT method is also subject to a “merge-event bias problem,” whereby project completion times are underestimated. Following the introduction of PERT in the late 1950s, it was soon recognized that the PERT methodology for determining the completion time distribution function of a project was optimistically biased because of a number of issues. MacCrimmon and Ryanec (1962 and 1965) discussed these bias-causing factors, which included the use of the beta distribution for activity durations, the methodology for calculating activity duration mean and variance, the assumed independence of activities, and the assumption that the completion time for the project is normally distributed. For projects having multiple paths, with one identified as the critical path and several others identified as non-critical, there was some probability that one of the non-critical paths would grow to become the critical path. This probability increased when non-critical paths were close to being critical. Ultimately, the issue of PERT providing optimistic completion time estimates became known as the ‘merge-event bias problem’ or the ‘PERT problem’.
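The merge effect can be isolated with a short Monte Carlo sketch. This is an illustration under stated assumptions, not a reproduction of MacCrimmon and Ryanec’s analysis. Several independent parallel paths, each normally distributed with the same hypothetical mean and standard deviation, merge at the project finish. A calculation that follows a single critical path reports the path mean. The project actually finishes when the longest path finishes, and the expected value of that maximum is larger. For two such paths, the expected maximum is known in closed form: mean + sd/sqrt(pi).

```python
import math
import random
import statistics

def simulated_merge_mean(n_paths=2, mean=10.0, sd=2.0, n=50000, seed=7):
    """Mean finish time when `n_paths` independent parallel paths, each
    Normal(mean, sd), merge at the project finish (finish = longest path)."""
    rng = random.Random(seed)
    return statistics.mean(
        max(rng.gauss(mean, sd) for _ in range(n_paths)) for _ in range(n)
    )

def exact_two_path_mean(mean, sd):
    """E[max of two independent, identically distributed normals] = mean + sd / sqrt(pi)."""
    return mean + sd / math.sqrt(math.pi)

# A single-critical-path (PERT-style) calculation would report 10.0 here.
print(simulated_merge_mean(), exact_two_path_mean(10.0, 2.0))
```

With two equal paths, the bias is already about 1.1 days on a 10-day path. The bias grows as more near-critical paths merge into the same event.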

Beginning in the early 1960s, several methods for mitigating the merge-event bias problem were studied. One of these was Monte Carlo simulation. Van Slyke (1963) showed that Monte Carlo techniques could be used to analyze PERT networks. He noted that Monte Carlo offered great flexibility, in that any distribution could be used for the activity durations. More importantly, he showed that these techniques provided an accurate and unbiased estimate of the mean duration of a project. Van Slyke also considered the project-duration cumulative distribution function (c.d.f.).

In the 1963 time frame, generating random numbers for Monte Carlo analysis required excessive time. To reduce this problem, Van Slyke suggested discarding activities that are rarely or never on the critical path, thereby reducing the size of the model. To go along with this technique, he suggested two heuristic methods to identify such non-critical activities.

In the first method, min-max path deletion, one sets all the activity durations at their minimum and then identifies the critical path. All activities not on the critical path then have their durations set to their maximum. All paths, and their corresponding activities, that do not take longer than the critical path can be discarded from the analysis because they can never be on the critical path. In the second method, statistical path deletion, one models the entire network and then runs a small (“relatively few”) initial set of replications. All activities that were not on the critical path in any of these initial replications are eliminated. The simulation is then run for a full set of replications.
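Min-max path deletion can be sketched for a small network whose start-to-finish paths are enumerated explicitly. This is feasible only for small examples, because the number of paths can grow exponentially. The activity ranges and paths below are hypothetical. The test is sound because activities shared between a path and the critical path contribute equally to both. A path can therefore outrun the critical path only if its own activities, at their maximum, exceed the critical path’s activities at their minimum.

```python
# Hypothetical activity ranges (min_days, max_days) and enumerated start-to-finish paths.
RANGES = {"A": (2, 4), "B": (5, 9), "C": (1, 2), "D": (3, 10), "E": (1, 3), "F": (4, 5)}
PATHS = [["A", "B", "F"], ["A", "C", "F"], ["A", "C", "E"], ["D", "E"]]

def min_max_path_deletion(ranges, paths):
    """Return (paths that could still be critical, activities safe to drop)."""
    minimum = {a: r[0] for a, r in ranges.items()}
    length_at_min = [sum(minimum[a] for a in p) for p in paths]
    crit_idx = max(range(len(paths)), key=length_at_min.__getitem__)
    crit, threshold = set(paths[crit_idx]), length_at_min[crit_idx]
    # Critical-path activities stay at their minimum; all others go to their maximum.
    mixed = {a: (r[0] if a in crit else r[1]) for a, r in ranges.items()}
    kept = [p for i, p in enumerate(paths)
            if i == crit_idx or sum(mixed[a] for a in p) > threshold]
    kept_activities = {a for p in kept for a in p}
    return kept, sorted(set(ranges) - kept_activities)

print(min_max_path_deletion(RANGES, PATHS))
```

Here only path D-E can ever overtake A-B-F, so activity C, which appears only on the discarded paths, can be removed from the simulation model.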

Cook and Jennings (1979) added a third heuristic method, called dynamic shut-off, to further increase simulation efficiency by reducing the total number of replications required. “After each one hundred iterations the cumulative density function of project completion time is compared to the c.d.f from one hundred iterations earlier. If, using a standard Kolmogorov-Smirnov test, there is no significant difference between two successive c.d.f’s at the 0.05 level, the simulation terminates.” After comparing the three heuristics, Cook and Jennings concluded that the path deletion methods provided the most benefit.

Because Discrete Event Simulation running on modern desktop computers is so much faster, the need to identify non-critical tasks through the methods proposed by Van Slyke and by Cook and Jennings has been reduced.

Researchers have also proposed non-Monte Carlo techniques for analyzing PERT


networks. Some of these researchers validated their proposed techniques by comparing

them to the results of Monte Carlo simulation. All of these techniques are limited to

acyclic networks in which there are no resource-constraints.

Martin (1965) proposed a generic method for transforming a directed acyclic network to series-parallel form so as to accommodate series-parallel reduction to a single polynomial that represents the time through the network. The advantage of this approach is that its accuracy is limited only by the approximation of activity durations with polynomials. However, Robillard and Trahan (1977) noted that a drawback is the large amount of calculation required to implement the method.
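The two reduction steps can be illustrated with a discrete analogue. This is offered as an assumption-laden sketch, not a reproduction of Martin’s procedure, which works with polynomial approximations of continuous durations. Each duration is represented as a probability mass function over whole days. Two activities in series combine by convolution, which is the same as multiplying their probability generating polynomials. Two independent parallel branches that merge combine by taking the maximum, whose CDF is the product of the branch CDFs. A network containing a Wheatstone bridge cannot be reduced by these two operations alone, which is why a transformation step is needed first.

```python
def series(p, q):
    """Two independent activities in sequence: convolution of their pmfs
    (equivalently, multiplying their probability generating polynomials)."""
    out = [0.0] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        for j, qj in enumerate(q):
            out[i + j] += pi * qj
    return out

def parallel(p, q):
    """Two independent parallel branches merging: the max of the durations,
    whose CDF is the product of the two CDFs."""
    n = max(len(p), len(q))
    p = p + [0.0] * (n - len(p))
    q = q + [0.0] * (n - len(q))
    out, cp, cq, prev = [], 0.0, 0.0, 0.0
    for pi, qi in zip(p, q):
        cp += pi
        cq += qi
        cdf = cp * cq
        out.append(cdf - prev)
        prev = cdf
    return out

# Hypothetical pmfs indexed by day: A takes 1 or 2 days, B takes exactly 2 days.
A = [0.0, 0.5, 0.5]
B = [0.0, 0.0, 1.0]
print(parallel(series(A, B), series(B, A)))  # two identical A-then-B branches merging
```

Even in this tiny case, merging two identical branches shifts probability toward the later finish, which is the merge-event effect discussed earlier.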

Hartley and Wortham (1966) presented integral operators for non-crossed networks and for networks containing a Wheatstone bridge. Ringer (1969) developed integral operators for more complex crossed networks, i.e., networks that could be described as containing double Wheatstone bridges or crisscross networks.

Lower- and/or upper-bound analysis has been used to estimate the completion time distribution of PERT networks. Kleindorfer (1971) used distributions to bound the activity completion distribution function. Robillard and Trahan (1977) developed a method that uses a lower bound approximation. Dodin (1985) further developed techniques to reduce PERT networks to a single equivalent activity and then to estimate the bounds of the completion distribution.

Sculli (1983) proposed an approximation algorithm based on the assumptions that

the activity durations are normally distributed and the paths in the network are

independent. Kamburoski built upon Sculli’s work by adding upper and lower bounds.


Adlakha and Kulkarni (1989) published a classified bibliography of research on

stochastic PERT networks. Of particular interest to stochastic PERT networks were the

distribution of the project completion time along with the mean and variance of the

completion time. Also of interest were the criticality indexes for any particular path or

activity. The bibliography was organized into sections including exact analysis,

approximation and bounds, and Monte Carlo sampling.

Precedence Diagramming

John W. Fondahl of Stanford University, in doing research for the Navy Bureau

of Yards and Docks developed precedence matrices, along with lag values, for projects

(Fondahl 1961). He also developed and advocated the use of activity on circle a.k.a. node

notation rather than activity on arrow notation for network development. Creation of

activity on node networks was found to be faster, less prone to error, and easier to revise

than activity on arrow diagrams (Fondahl 1962). Fondahl is largely credited with the

development of Precedence Diagramming (Morris, 1997).
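To show how lag values enter an activity-on-node forward pass, here is a minimal sketch. It assumes the three link types common in present-day precedence diagramming: finish-to-start (FS), start-to-start (SS), and finish-to-finish (FF). The text does not say which link types Fondahl’s original matrices used. The activities, durations, and lags are hypothetical.

```python
# Hypothetical activities: name -> duration in days, listed in topological order.
DURATIONS = {"dig": 4, "pour": 3, "cure": 5}
# Hypothetical precedence links: (predecessor, successor, link type, lag in days)
LINKS = [
    ("dig", "pour", "SS", 2),   # pour may start 2 days after digging starts
    ("dig", "pour", "FF", 2),   # pour may finish no earlier than 2 days after digging finishes
    ("pour", "cure", "FS", 0),  # cure starts when pouring finishes
]

def forward_pass(durations, links):
    """Earliest (start, finish) for each activity under FS/SS/FF links with lags."""
    es = {}
    for name, d in durations.items():
        start = 0
        for pred, succ, kind, lag in links:
            if succ != name:
                continue
            if kind == "FS":
                start = max(start, es[pred] + durations[pred] + lag)
            elif kind == "SS":
                start = max(start, es[pred] + lag)
            elif kind == "FF":
                start = max(start, es[pred] + durations[pred] + lag - d)
            else:
                raise ValueError(f"unknown link type {kind}")
        es[name] = start
    return {n: (es[n], es[n] + durations[n]) for n in durations}

print(forward_pass(DURATIONS, LINKS))
```

In this example, the finish-to-finish link, not the start-to-start link, controls when pouring can begin. This kind of overlap between activities is awkward to express with activity-on-arrow diagrams.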

Merging of PERT, CPM, and Precedence Diagramming

In 1964 Moder and Phillips published Project Management with CPM and PERT. This book, and its subsequent editions, is the standard textbook for project network scheduling (Morris 1994, 1997). Moder and Phillips recognized the similarities between CPM and PERT with respect to the creation of a project network. Both CPM and PERT methodologies started with a high-level Gantt chart, then developed more detailed bar charts for lower-level activities, and finally created project network diagrams to indicate activity relationships. Moder and Phillips described the generic network component of CPM and PERT as ‘activity on arrow,’ since both represented activities by arrows. The difference between CPM and PERT with respect to activity durations, i.e., deterministic versus stochastic, was a function of the information their developers had. In the projects of interest to Du Pont, there was a relatively good understanding of how long activities could be expected to take because similar activities had been done in the past. By contrast, the content and duration of activities were not well understood in the Navy’s research, development, and activation program to develop the first-ever submarine-based ICBM. The merging of PERT and CPM into PERT/CPM was enabled by the assumption that either stochastic or deterministic inputs can be used for activity durations.

The subsequent merging of PERT/CPM with Precedence Diagramming is related to the change from activity on arrow to the ‘activity on node’ convention of Precedence Diagramming. Showing complex project activity relationships is easier with the activity on node convention. In 1965, Engineering News Record reported that private industry was shifting to Precedence Diagramming. Morris (1994, 1997) indicates that by the latter half of the 1970s, Activity on Node had become more popular than the original PERT/CPM convention of Activity on Arrow. Signifying the merging of PERT/CPM with Precedence Diagramming, in 1983 Moder and Phillips incorporated Precedence Diagramming into the title of their third edition, Project Management with CPM, PERT, and Precedence Diagramming.

Performance Measurement and Earned Value

In 1963 Brigadier General Phillips developed a simple project tracking metric for PERT/COST that was part of his Minuteman Contractor Performance Measurement System (Morris, 1997). This metric, a comparison of actual versus planned progress, was known as the Earned Value system. The system defined the measures of Budgeted Cost of Work Scheduled (BCWS), Budgeted Cost of Work Performed (BCWP), and Actual Cost of Work Performed (ACWP). These measures can be used to estimate, in a deterministic fashion, when a project is likely to finish and how much it will ultimately cost. Performance Measurement is often used synonymously with Earned Value (Morris, 1997).
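The text does not give the formulas that turn these measures into forecasts. The sketch below assumes the commonly taught earned value relationships (schedule variance = BCWP - BCWS, cost variance = BCWP - ACWP, SPI = BCWP/BCWS, CPI = BCWP/ACWP) and the simple forecast EAC = BAC/CPI. The budget at completion (BAC) and the planned duration are inputs not defined in the text, and dividing the planned duration by SPI is only one crude time forecast. Each result is a single point value with no spread, which is what makes such estimates deterministic.

```python
from dataclasses import dataclass

@dataclass
class EarnedValueStatus:
    """Earned value measures at a status date (all in the same currency units)."""
    bcws: float  # Budgeted Cost of Work Scheduled (planned value to date)
    bcwp: float  # Budgeted Cost of Work Performed (earned value to date)
    acwp: float  # Actual Cost of Work Performed (actual cost to date)

    @property
    def schedule_variance(self) -> float:
        return self.bcwp - self.bcws      # negative => behind schedule

    @property
    def cost_variance(self) -> float:
        return self.bcwp - self.acwp      # negative => over budget

    @property
    def spi(self) -> float:
        return self.bcwp / self.bcws      # schedule performance index

    @property
    def cpi(self) -> float:
        return self.bcwp / self.acwp      # cost performance index

    def estimate_at_completion(self, bac: float) -> float:
        # Assumes the cost efficiency to date persists: EAC = BAC / CPI
        return bac / self.cpi

    def estimate_duration(self, planned_duration: float) -> float:
        # Crude assumption: the schedule efficiency to date persists
        return planned_duration / self.spi

status = EarnedValueStatus(bcws=500.0, bcwp=400.0, acwp=450.0)
print(status.schedule_variance, status.cost_variance)   # -100.0 -50.0
print(status.estimate_at_completion(bac=1000.0))         # about 1125
print(status.estimate_duration(planned_duration=10.0))   # about 12.5
```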

GERT and Q-GERT

Pritsker developed the Graphical Evaluation and Review Technique (GERT) for analyzing stochastic networks (Pritsker 1966, 1968). Supporting Pritsker in the development of GERT were Happ and Whitehouse (Pritsker and Happ 1966; Pritsker and Whitehouse 1966). In PERT and CPM, all branches of the network are performed during the project. In GERT, however, probabilities could be assigned to the various branches to indicate that a branch might or might not actually be performed during the project. GERT also allowed looping back in a project network so as to repeat earlier activities. Activity durations were not limited to deterministic estimates (CPM) or the beta distribution (PERT), but could instead be represented by continuous or discrete random variables.
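The branching and looping described above can be sketched as a small Monte Carlo model. The network, its durations, and the 0.7 pass probability are hypothetical: activity A is followed by a test T, and a failed test sends the project back to repeat A and T before the final activity B. Because the number of loops is random, the completion time has a spread that a single PERT or CPM path cannot represent.

```python
import random
import statistics

def simulate_gert_once(rng: random.Random, p_pass: float = 0.7) -> float:
    """One realization of a hypothetical GERT-style network:
    A (build) -> T (test) -> with probability p_pass continue to B (deliver);
    otherwise loop back and repeat A and T."""
    t = 0.0
    while True:
        t += rng.uniform(2.0, 4.0)      # activity A: uniform duration
        t += 1.0                        # activity T: deterministic duration
        if rng.random() < p_pass:       # probabilistic branch out of the test
            break                       # pass: leave the loop
    t += rng.triangular(1.0, 5.0, 2.0)  # activity B: triangular(low, high, mode)
    return t

def simulate_gert(n: int = 100_000, seed: int = 1) -> list[float]:
    rng = random.Random(seed)           # seeded for repeatable runs
    return [simulate_gert_once(rng) for _ in range(n)]

times = simulate_gert()
print(f"mean = {statistics.mean(times):.2f}")
print(f"90th percentile = {statistics.quantiles(times, n=10)[-1]:.2f}")
```

With these values the expected completion time is 4/0.7 + 8/3, about 8.38 time units (a geometric number of A-plus-T cycles averaging 4 units each, plus the triangular mean of B), which the simulated mean should approach.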

Analysis of the network proceeded by first determining the moment generating function (MGF) of each activity duration variable. The MGF of each activity duration was then multiplied by the probability that the activity occurs. This product, the W-function, was used to form a system of linearly independent equations that could then be reduced to one equation. As originally presented, the GERT procedure could determine the mean and variance of the time to complete a network, while the completion time distribution function could be determined by Monte Carlo simulation. It was noted that further work was required to be able to determine confidence intervals.
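The W-function reduction can be sketched numerically. This sketch assumes the standard GERT reduction rules, where W(s) = p·M(s) for a branch taken with probability p: branches in series multiply, mutually exclusive parallel branches add, and a self-loop W_loop on a node with outgoing branch W_out reduces to W_out/(1 - W_loop). For the equivalent function W_E, the probability of realizing the network is W_E(0) and the MGF of completion time is M_E(s) = W_E(s)/W_E(0). The mean and variance follow from the first two derivatives at s = 0, approximated here by finite differences rather than symbolic differentiation. The network and its parameters are hypothetical.

```python
def branch(prob, mgf):
    """W(s) = p * M(s): branch probability times the MGF of its duration."""
    return lambda s: prob * mgf(s)

def series(w1, w2):
    """Two branches in series: W-functions multiply."""
    return lambda s: w1(s) * w2(s)

def parallel(w1, w2):
    """Mutually exclusive branches between the same nodes: W-functions add."""
    return lambda s: w1(s) + w2(s)

def self_loop(w_loop, w_out):
    """Self-loop w_loop on a node followed by outgoing branch w_out."""
    return lambda s: w_out(s) / (1.0 - w_loop(s))

def moments(w_eq, h=1e-4):
    """(probability of realization, mean, variance) from an equivalent W-function."""
    p_e = w_eq(0.0)                                # W_E(0)
    m = lambda s: w_eq(s) / p_e                    # M_E(s) = W_E(s) / W_E(0)
    mean = (m(h) - m(-h)) / (2 * h)                # first derivative at s = 0
    second = (m(h) - 2 * m(0.0) + m(-h)) / h**2    # second derivative at s = 0
    return p_e, mean, second - mean**2

# Hypothetical network: activity A has an Exponential(lam) duration; afterward
# the work passes with probability p (zero-time branch to the end) or loops
# back with probability 1 - p to repeat A. (parallel() is unused here.)
lam, p = 0.5, 0.8
mgf_a = lambda s: lam / (lam - s)   # MGF of Exponential(lam), valid for s < lam
mgf_zero = lambda s: 1.0            # MGF of a zero-duration branch
w_a = branch(1.0, mgf_a)
w_equiv = self_loop(series(w_a, branch(1 - p, mgf_zero)),  # loop: A, then fail
                    series(w_a, branch(p, mgf_zero)))      # exit: A, then pass
print(moments(w_equiv))
```

For this network the number of attempts at A is geometric, so the completion time is exponential with rate λp = 0.4. The sketch should therefore report a realization probability of about 1, a mean of about 2.5, and a variance of about 6.25.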

GERT was subsequently enhanced with a cost processing module (Arisawa and Elmaghraby 1972) and the ability to model resource constraints (Hebert 1975). In 1977, Pritsker’s book Modeling and Analysis Using Q-GERT Networks presented the updated version of GERT that contained these and other improvements.

After its development and through the 1980s, GERT was not widely used (Morris, 1997) because of its computational complexity, its high cost, and the limited performance of computers. In the 1990s, advances in statistics and improved desktop computing power increased interest in the kind of risk analysis that probabilistic network schedules such as GERT make possible.

VERT

The Venture Evaluation and Review Technique (VERT) was developed to assess the risks involved with new ventures. Moeller (1972) introduced VERT, and it was later updated by Moeller and Digman (1981) and by Lee, Moeller, and Digman (1982). VERT is similar to PERT/CPM in that it is structured as a network. However, each activity is characterized by the cost it incurs and the performance it generates in addition to the time it consumes. VERT was based on the premise that time, cost, and performance are related, so a manager using VERT could obtain a more integrated analysis of a venture or project. Thirteen statistical distributions, including uniform, triangular, normal, and lognormal, are available for direct use in VERT, and a histogram input capability supports other distributions. A VERT network is analyzed via simulation, which yields information on completion time, cost, and performance; for example, distributions for completion time and completion cost are produced.
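A VERT-style analysis can be sketched by sampling each activity's duration and letting its cost depend on the time it consumes, so that one simulation run produces both a completion time and a completion cost. This is not VERT itself: the three activities, the triangular parameters, and the linear fixed-plus-rate cost model are hypothetical, and VERT's performance dimension is omitted.

```python
import random
import statistics

# Hypothetical activities: ((low, mode, high) duration in weeks,
# fixed cost, cost per week of duration). All values are illustrative.
ACTIVITIES = {
    "A": ((3.0, 4.0, 8.0), 20.0, 5.0),
    "B": ((2.0, 5.0, 7.0), 15.0, 4.0),
    "C": ((1.0, 2.0, 4.0), 10.0, 6.0),
}

def simulate_once(rng):
    durations, cost = {}, 0.0
    for name, ((low, mode, high), fixed, rate) in ACTIVITIES.items():
        d = rng.triangular(low, high, mode)   # note argument order: low, high, mode
        durations[name] = d
        cost += fixed + rate * d              # cost accrues with time consumed
    # A and B run in parallel; C starts after both have finished
    finish = max(durations["A"], durations["B"]) + durations["C"]
    return finish, cost

def simulate(n=50_000, seed=7):
    rng = random.Random(seed)
    results = [simulate_once(rng) for _ in range(n)]
    return [t for t, _ in results], [c for _, c in results]

times, costs = simulate()
for label, xs in (("completion time", times), ("completion cost", costs)):
    deciles = statistics.quantiles(xs, n=10)
    print(f"{label}: mean={statistics.mean(xs):.2f}, "
          f"P10={deciles[0]:.2f}, P90={deciles[-1]:.2f}")
```

Because cost is tied to duration, runs with long completion times also tend to be the expensive ones, which is the kind of time-cost relationship VERT was designed to expose.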

The VERT method was found to provide accurate estimates of the time required to complete projects (Kidd, 1987), and Kidd advocated its use by project managers. He noted that creating a VERT-based model of the project would be time consuming, but that the resulting ability to pose ‘what-if’ questions through simulation would be beneficial. However, VERT has not become a widely used tool like PERT/CPM; it is used even less often than GERT (Morris 1994, 1997).

The Project Management Institute

In 1969 the Project Management Institute (PMI) was founded by a group of

project managers in the United States. PMI is dedicated to providing global leadership in

developing standards for the practice of project management. This institute has over

100,000 members worldwide. PMI publishes three periodicals—PM Network (a monthly

magazine), Project Management Journal (a quarterly journal), and PMI Today (a

monthly newsletter). The Project Management Institute also publishes the PMBOK® Guide (A Guide to the Project Management Body of Knowledge). As the title implies, this book covers a wide variety of topics pertaining to project management.

Chapter 6 (project time management) addresses activity definition, sequencing, and duration estimating, along with schedule development and control. The Precedence Diagramming Method (PDM), also called Activity-On-Node (AON), is presented as the method for activity sequencing. The diagrams created by PDM are commonly called PERT charts. “Finish-to-Start” is the recommended precedence relationship for project activities; under that relationship, a successor activity can start only after its predecessor activity has finished. The PMBOK® Guide notes that PDM does not allow loops or conditional branching. GERT and System Dynamics models are listed as examples of project network diagramming tools that allow loops.
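Finish-to-Start sequencing on an activity-on-node network can be sketched as a forward pass that computes each activity's early start and early finish. This is a minimal illustration with hypothetical activity names and durations. It uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) to order the activities; that class also rejects loops by raising `CycleError` (a subclass of `ValueError`), consistent with PDM not allowing loops.

```python
from graphlib import TopologicalSorter

def forward_pass(durations, predecessors):
    """Early start (ES) and early finish (EF) times on an activity-on-node
    network in which every link is Finish-to-Start with zero lag."""
    # graphlib expects {node: iterable of predecessor nodes}
    graph = {act: tuple(predecessors.get(act, ())) for act in durations}
    early_start, early_finish = {}, {}
    # static_order() yields each activity after all of its predecessors and
    # raises graphlib.CycleError if the network contains a loop.
    for act in TopologicalSorter(graph).static_order():
        # Finish-to-Start: start only once every predecessor has finished
        early_start[act] = max((early_finish[p] for p in graph[act]), default=0)
        early_finish[act] = early_start[act] + durations[act]
    return early_start, early_finish

# Hypothetical four-activity network
durations = {"A": 3, "B": 2, "C": 4, "D": 1}
predecessors = {"B": ["A"], "C": ["A"], "D": ["B", "C"]}
es, ef = forward_pass(durations, predecessors)
print(es)
print(max(ef.values()))  # 8
```

Activity D cannot start until both B and C have finished, so the longer branch through C determines the eight-unit project duration.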

Forrester (1961) described System Dynamics and its development in the late

1950s. System Dynamics is a simulation approach to modeling complex problems.

Forrester defined it as “the study of the information-feedback characteristics of industrial

activity to show how organization structure, amplification and time delays interact to

influence the success of the enterprise.” Sterman (1992) states that System Dynamics is

widely used in project management. Howick (2002) describes its frequent use to support

litigation ensuing from large projects that have time and cost overruns. However, the fact that System Dynamics receives no mention in Morris’s book The Management of Projects suggests that System Dynamics is not a mainstream project management tool on par with PERT/CPM.

According to the PMBOK® Guide, activity duration estimates are based upon project scope and resources, and are typically provided by those most familiar with the specific activity. Relevant historical information is often available from previous project files, commercial databases, and the memories of project team members, although the latter source is said to be “far less reliable” than documented results. Along with historical information, expert judgment, analogous estimating, and quantitative (rate-based) calculation methods are recommended.

The PMBOK® Guide also states that reserve time (a.k.a. contingency or buffer) may be added into the activity duration or elsewhere in the project network. No quantitative guidance is provided for how big the reserve should be.

CPM, GERT, and PERT are listed as the most widely used mathematical analysis tools for supporting the schedule development process. The PMBOK® Guide acknowledges that these tools do not consider resources.

Under the heading of resource leveling heuristics, critical chain is mentioned as “a

technique that modifies the project schedule to account for limited resources.”

Simulation is described as a tool for analyzing project schedules and alternatives; according to the PMBOK® Guide, the most common simulation technique is Monte Carlo Analysis.
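A Monte Carlo analysis of a schedule can be sketched by repeatedly sampling activity durations, recomputing the finish-to-start forward pass each time, and then reading probabilities and percentiles from the resulting completion times. The network and the triangular (low, mode, high) durations below are hypothetical, and triangular distributions are an assumed choice rather than one prescribed by the PMBOK® Guide.

```python
import random
import statistics

# Hypothetical network: activity -> (predecessors, (low, mode, high) duration).
# Listed so that every predecessor appears before its successors.
NETWORK = {
    "A": ((), (2.0, 3.0, 6.0)),
    "B": (("A",), (1.0, 2.0, 4.0)),
    "C": (("A",), (3.0, 4.0, 8.0)),
    "D": (("B", "C"), (0.5, 1.0, 2.0)),
}

def sample_completion(rng):
    """One Monte Carlo trial: sample durations, then run a forward pass."""
    finish = {}
    for act, (preds, (low, mode, high)) in NETWORK.items():
        start = max((finish[p] for p in preds), default=0.0)  # finish-to-start
        finish[act] = start + rng.triangular(low, high, mode)
    return max(finish.values())

def monte_carlo(n=20_000, seed=42):
    rng = random.Random(seed)
    return [sample_completion(rng) for _ in range(n)]

samples = monte_carlo()
deterministic = 3.0 + 4.0 + 1.0   # most-likely durations on the longest path A-C-D
p_on_time = sum(t <= deterministic for t in samples) / len(samples)
p80 = statistics.quantiles(samples, n=100)[79]   # 80th percentile
print(f"P(finish <= {deterministic}) = {p_on_time:.2f}; 80th percentile = {p80:.2f}")
```

Because the hypothetical durations are skewed to the right, the probability of finishing by the 8-unit total of the most-likely durations on path A-C-D comes out well below one half, and the 80th percentile lies above that total.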

Critical Chain Project Management

Goldratt (1997) proposed new project management methods in his “business novel” Critical Chain. He noted that current project management methods and tools were ineffective in keeping projects on time and within budget. A common problem was found to be uncertainty: ‘uncertainties embedded in projects are the major causes of mismanagement.’¹³

The new methods proposed by Goldratt were in part an extension of his earlier work on the Theory of Constraints (TOC), which had been described in his 1984 book The Goal. Under the tenets of TOC, a production system is limited by a constraint (i.e., a bottleneck). To maximize system output, a feeding buffer is placed in front of the bottleneck so that the bottleneck operates continuously at full capacity. The rate at which the bottleneck can operate represents the drumbeat to which the rest of the system operates. As applied to project management, the constraint or bottleneck is analogous to the critical path. In addition, however, the critical path must account for the added constraint of critical resources. When resources are taken into account, the critical path may change; the resulting resource-constrained path is called the critical chain.
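The effect of a resource constraint on the longest path can be sketched with a small activity-on-node example. Activities A, B, and C and their durations are hypothetical, and the resource conflict is modeled simply by assuming that B must wait for A because both need the same single resource. How to choose that sequencing is not addressed here, and this is not Goldratt's full procedure.

```python
from graphlib import TopologicalSorter

def longest_chain(durations, links):
    """Return (project length, activities on the longest chain) for an
    activity-on-node network whose links are all finish-to-start."""
    graph = {act: tuple(links.get(act, ())) for act in durations}
    finish, driver = {}, {}
    for act in TopologicalSorter(graph).static_order():
        start, driver[act] = 0, None
        for pred in graph[act]:
            if finish[pred] > start:   # latest-finishing predecessor drives the start
                start, driver[act] = finish[pred], pred
        finish[act] = start + durations[act]
    # Walk back from the activity that finishes last
    act = max(finish, key=finish.get)
    chain = []
    while act is not None:
        chain.append(act)
        act = driver[act]
    return max(finish.values()), chain[::-1]

durations = {"A": 4, "B": 5, "C": 3}
precedence = {"C": ["A"]}                  # A -> C; B is independent
print(longest_chain(durations, precedence))     # (7, ['A', 'C'])

# Hypothetical resource link: A and B share one resource, so B waits for A
with_resource = {"C": ["A"], "B": ["A"]}
print(longest_chain(durations, with_resource))  # (9, ['A', 'B'])
```

Without the resource link the critical path is A-C with length 7; once B must follow A, the longest chain becomes A-B with length 9 and crosses what were originally independent paths.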

Interestingly, Goldratt noted that the lateness of a project could be far more financially devastating to a company than the project’s cost overrun, because revenues, or payback, from a late project are delayed and potentially reduced or even eliminated. Consequently, the Critical Chain methods tend to focus on improving project completion timeliness.

The Critical Chain methods for project management included identifying the true critical path, i.e., the critical chain, which includes resource considerations, and strategically placing safety time in the project network. These strategic locations are at the end of the project, called a “project buffer,” and at the nodes where non-critical activities feed into the critical chain, called “feeding buffers.” Goldratt also stresses limiting safety time in all other areas. Because of the relationship between theory of constraints

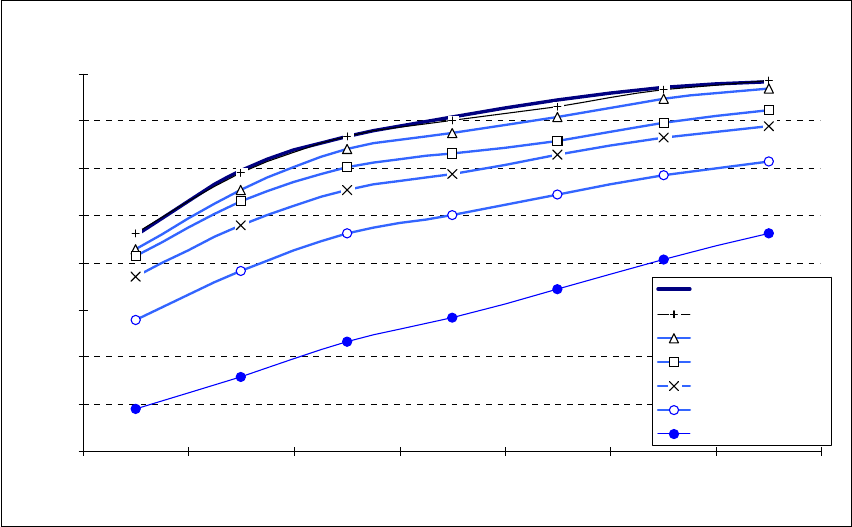

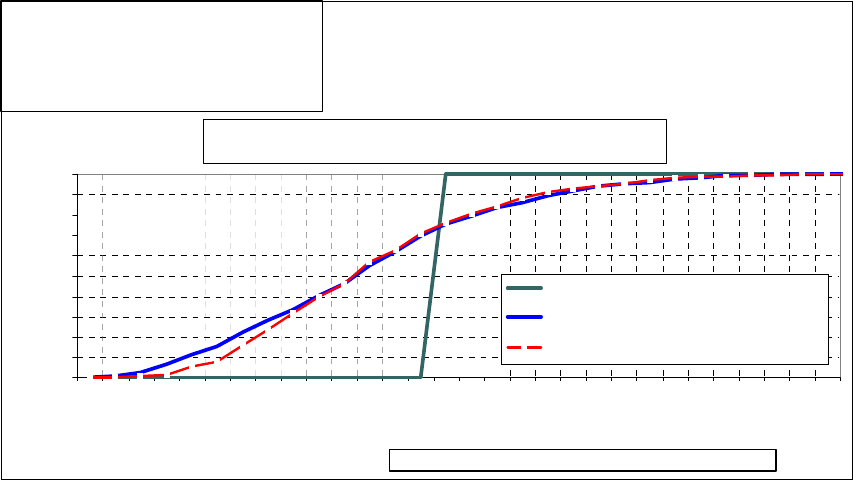

and the critical chain methods the new project management methods have become known