AMBIENT AIR MONITORING AND QUALITY ASSURANCE/QUALITY CONTROL GUIDELINES

National Air Pollution Surveillance Program

PN 1599

ISBN 978-1-77202-056-4 PDF

© Canadian Council of Ministers of the Environment, 2019

NOTE TO READERS

The Canadian Council of Ministers of the Environment (CCME) is the primary minister-led intergovernmental forum for collective action on environmental issues of national and international concern.

This document contains guidance related to air quality monitoring procedures at National Air Pollution Surveillance (NAPS) sites across the country and replaces the Ambient Air Monitoring Protocol for PM2.5 and Ozone: Canada-wide Standards for Particulate Matter and Ozone (CCME 2011) and the manual titled National Air Pollution Surveillance Network Quality Assurance and Quality Control Guidelines (EC 2004). It provides recommendations and establishes minimum requirements to ensure that data collected by the NAPS Program are of known quality, defensible, and comparable across Canada.

This document was developed for the Air Management Committee by staff of the Analysis and Air Quality Section of Environment and Climate Change Canada. CCME would like to thank all individuals who participated in completing this document, and more specifically the following working group members representing provincial and territorial governments: Melynda Bitzos and Mike Noble (Ontario), Eric Blanchard (New Brunswick), Fran Di Cesare (Nova Scotia), Chris Gray (Saskatchewan), Jany McKinnon (Québec) and Ryan Wiederick (British Columbia), as well as Christian Vezina for his contribution as an external reviewer.

TABLE OF CONTENTS

NOTE TO READERS

LIST OF TABLES

LIST OF FIGURES

ACRONYMS AND ABBREVIATIONS

GLOSSARY

1.0 INTRODUCTION

2.0 NAPS PROGRAM AND MONITORING OBJECTIVES

2.1 NAPS Monitoring Objectives

3.0 NAPS PROGRAM ORGANIZATION

3.1 NAPS Program Operation

Environment and Climate Change Canada

Provincial and Territorial Governments, Metro Vancouver and Montréal

4.0 DATA QUALITY OBJECTIVES (DQO)

5.0 TRAINING

6.0 DOCUMENTATION AND RECORDS

6.1 Network Quality Assurance Plan (NQAP)

6.2 Site Classification

6.2.1 Class 1: Urbanization

6.2.2 Class 2: Neighbourhood Population

6.2.3 Class 3: Local Land Use

6.2.4 Class 4: Site Type

6.3 Equipment Information and Inventory

6.4 Standard Operating Procedures (SOP) for the NAPS Program

7.0 NETWORK DESIGN AND SITE LOCATION

7.1 Network Design

7.1.1 Core Sites

7.1.2 Program-specific Sites

7.1.3 Pollutant-specific Sites

7.2 Site Location Selection Process

7.2.1 Prioritizing Monitoring Objectives

7.2.2 Spatial Scales of Representativeness

7.2.3 Site Types

7.2.4 Population Density

7.2.5 Local Land Use

8.0 MONITORING STATION DESIGN

8.1 Station Design

8.2 Sampling Inlet System Design

8.2.1 Sampling Inlet Placement

8.3 Manifold Design

8.3.1 Residence Time (Rt) Calculations

8.3.2 Pressure Drop Measurements

9.0 MONITORING, SAMPLING AND ANALYTICAL METHODS

9.1 Continuous Methods

9.2 Integrated Methods

9.3 New Instrument Pre-deployment Testing and Inspection

9.3.1 Continuous Gas Analyzers

9.3.2 PM Instruments

10.0 ROUTINE OPERATION

10.1 Routine Inspection and Maintenance Checks

10.1.1 Integrated Samples: Specific Requirements

10.2 Quarterly and Semi-annual Station Visits

10.3 Site and Station Logs

10.4 Instrument Maintenance Records

11.0 VERIFICATION AND CALIBRATION

11.1 Gas Analyzers

11.1.1 QC Checks for Gas Analyzers

11.1.2 Verification and Calibration

11.1.3 Tolerance Levels and Acceptance Criteria

11.1.4 Multi-Point Verification and Calibration Considerations

11.1.5 Automatic Zero or Span Adjustments

11.2 PM Instruments

11.2.1 PM Instrument Tolerance Levels and Acceptance Criteria

11.2.2 PM Instrument Verification and Calibration Considerations

11.3 Verification and Calibration Documentation

11.4 Integrated VOC, Carbonyls and PAH Samplers

11.5 Traceability of Calibration and Standards

11.5.1 Traceability

11.5.2 Reference and Transfer Standards

12.0 DATA COLLECTION AND VALIDATION: CONTINUOUS DATA

12.1 Data Collection

12.1.1 Sample Rates and Averaging Intervals

12.1.2 Datalogger Reading Verification

12.2 Data Validation Process

12.3 Data Flags and Validation Logs

12.4 Level 0 Verification

12.5 Level 1 Validation

12.5.1 Review of Field Records

12.5.2 Review of Operational and Instrument Parameters

12.5.3 Review of Multi-point Verification Results

12.5.4 Over-range Values

12.5.5 Review of Automatic Zero Adjustments

12.5.6 Baseline Adjustments

12.5.7 Below Zero Adjustments

12.5.8 Derived Parameter Relationship of NO/NO2/NOx

12.6 Level 2 Validation

12.7 Level 3 Validation

12.8 Post Validation

13.0 DATA COLLECTION AND VALIDATION: INTEGRATED DATA

13.1 Integrated Sample Metadata

13.2 Sample Collection

13.3 Level 0 Verification

13.4 Level 1 Validation

13.5 Level 2 Validation

13.6 Level 3 Validation

13.7 Post Validation

14.0 REPORTING REQUIREMENTS

14.1 Continuous Data Reporting

14.1.1 Real-time Reporting of Continuous Data

14.1.2 Continuous Data Reporting to the CWAQD

14.1.3 Posting Continuous Data to the NAPS Data Portal

14.2 Integrated Data Reporting

14.2.1 Integrated Data Reporting to the CWAQD

14.2.2 Integrated Data Reporting to the NAPS Data Portal

14.3 Other NAPS Data Reporting Requirements

14.3.1 Data Reported to the Canadian Environmental Sustainability Indicators (CESI)

14.3.2 Ozone Annex to the 1991 Canada-US Air Quality Agreement

14.3.3 Air Quality Management System (AQMS)

14.3.4 NAPS Annual Data Summary Reports

15.0 ASSESSMENTS AND CORRECTIVE ACTION

15.1 Performance and Systems Audits

15.1.1 Performance Audit

15.1.2 Systems Audit

15.1.3 Audit Response

15.2 Inter-Agency Measurement Study

15.3 Data Quality Assessments (DQA)

REFERENCES

APPENDIX A – AMBIENT AIR MONITORING EQUIPMENT

APPENDIX B – NAPS METHOD AND SOP REFERENCE LIST

APPENDIX C – TECHNICAL SYSTEMS AUDIT QUESTIONNAIRES

APPENDIX D – PERFORMANCE AUDIT QUESTIONNAIRE

LIST OF TABLES

Table 4-1 NAPS Program DQO criteria

Table 4-2 NAPS Program DQO and relevant determination methodologies

Table 6-1 Recommended documentation and records

Table 6-2 NAPS site classification system

Table 7-1 Parameters monitored at core

Table 7-2 Monitoring objective priorities by pollutant and spatial scales of representativeness

Table 7-3 Pollutants and relevant spatial scale of representativeness

Table 7-4 Site types and spatial scale of representativeness

Table 8-1 Sampling inlet material and Residence Time (Rt)

Table 8-2 Minimum separation distance between roadways and sampling inlets for neighbourhood and urban scale sites

Table 8-3 Specifications for sampling inlet siting

Table 9-1 NAPS minimum performance specifications and operating ranges for continuous methods

Table 9-2 Principles of operation for NAPS continuous methods

Table 9-3 Principles of sampling and analysis for NAPS integrated methods

Table 11-1 Gas analyzer QC, verification and calibration activity frequencies

Table 11-2 Multi-point verification and calibration ranges

Table 11-3 QC checks tolerance levels for gas analyzers

Table 11-4a Multi-point verification: Zero-point tolerance levels for gas analyzers

Table 11-4b Multi-point verification: Upscale points acceptance criteria for gas analyzers

Table 11-6 QC check tolerance and acceptance criteria for PM instruments

Table 11-7 Transfer standards certification frequency

Table 12-1 Data verification and validation review

Table 12-2 Multi-point verification: Zero-point tolerance levels for gas analyzers

Table 12-3 Zero adjustment criteria

Table 13-1 Data verification and validation review

Table 14-0-1 Required significant figures and units by parameter

Table 15-1 ECCC audit and assessment schedule

LIST OF FIGURES

Figure 1-1 NAPS Network.............................................................................................................. 1

Figure 1-2 Air quality parameters currently measured by NAPS ................................................... 1

Figure 3-1 NAPS MOU parties....................................................................................................... 3

Figure 6-1 Elements of an NQAP ................................................................................................... 9

Figure 6-2 Urbanization ................................................................................................................ 11

Figure 6-3 Neighbourhood Population ......................................................................................... 12

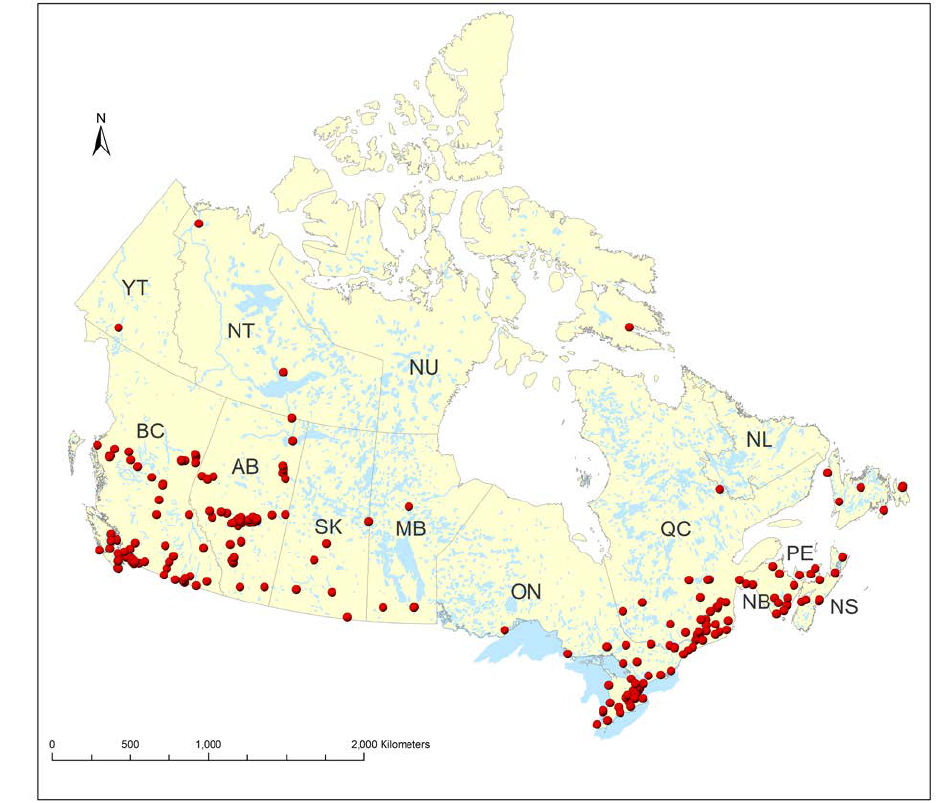

Figure 7-1

NAPS sites ................................................................................................................... 18

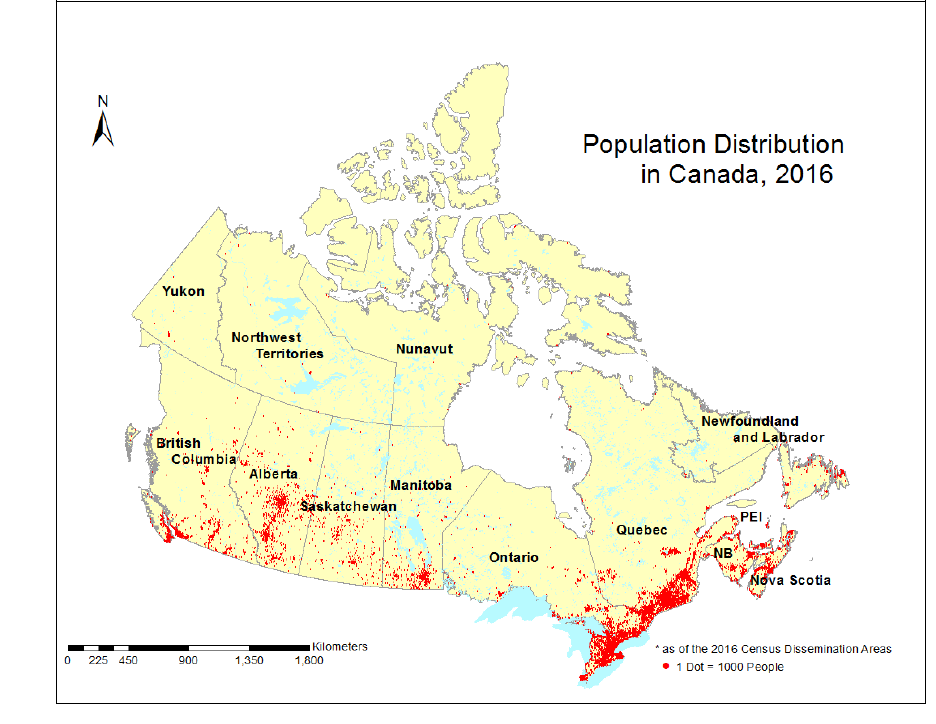

Figure 7-2 Population distribution in Canada, 2016 ..................................................................... 19

Figure 7-3 Graphical concept of core sites ................................................................................... 22

Figure 7-4 NAPS site hierarchy .................................................................................................... 23

Figure 7-5 AQMS air zones .......................................................................................................... 24

Figure 7-6 Design requirements of the monitoring network and the selection of sites ................ 27

Figure 7-7

Spatial scales ............................................................................................................... 28

Figure 8-1 Example of a conventional 1-, 2- and 4-inch manifold system (which can be configured

horizontally or vertically) ..................................................................................................... 36

Figure 8-2 Example of octopus-style manifold system ................................................................ 36

Figure 11-1 Multi-point verification ............................................................................................. 48

Figure 11-2 Allowable levels of traceability from reference standard to station gas analyzers

(excluding ozone).................................................................................................................. 55

Figure 11-3 Allowable levels between calibrations from the reference standard to station ozone

analyzers ............................................................................................................................... 56

Figure 12-1 Data collection and management .............................................................................. 58

Figure 12-2 Continuous data validation flow chart ...................................................................... 61

Figure 12-3 Zero drift ................................................................................................................... 65

Figure 12-4 PM

2.5

data of two buddy sites ................................................................................... 68

Figure 13-1 Data process flow chart for integrated samples ........................................................ 70

Figure 13-2 Reconstructed PM

2.5

mass by major component for the 10 highest mass days (2012–

2015) ..................................................................................................................................... 74

Figure 13-3 Cobalt concentrations, 2010–2016 ............................................................................ 75

Figure 14-1 Continuous data flow ................................................................................................ 76

Figure 14-2 Calculation of Daily Maximum 8-hour Ozone ......................................................... 78

Figure 14-3 Integrated data flow ................................................................................................... 79

vii

Figure 14-4 Air Quality Management System .............................................................................. 82

Figure 15-1 Collocated PM

2.5

continuous monitor vs NAPS RM measurements ........................ 86

ACRONYMS AND ABBREVIATIONS

µg/m³ microgram per cubic metre

µm micron/micrometre

AADT annual average daily traffic

AAQS Analysis and Air Quality Section

AQHI Air Quality Health Index

AQMS Air Quality Management System

CA census agglomeration

CAAQS Canadian Ambient Air Quality Standards

CAPS cavity attenuated phase shift

CCME Canadian Council of Ministers of the Environment

CEPA Canadian Environmental Protection Act 1999

CESI Canadian Environmental Sustainability Indicators

CFR Code of Federal Regulations

CO carbon monoxide

CMA census metropolitan area

CWAQD Canada-wide Air Quality Database

DNPH 2,4-dinitrophenylhydrazine

DQA data quality assessment

DQO data quality objective

ECCC Environment and Climate Change Canada

ED-XRF energy-dispersive X-ray fluorescence

FEM federal equivalent method (US)

FRM federal reference method (US)

HEPA high efficiency particulate air

ICP-MS inductively coupled plasma mass spectrometry

IR infrared

MOU memorandum of understanding

NALC North American Landscape Characterization

NAPS National Air Pollution Surveillance Program

NAPS RM NAPS reference method

NIST National Institute of Standards and Technology (United States)

NO nitric oxide

NO2 nitrogen dioxide

NOx oxides of nitrogen

NQAP Network Quality Assurance Plan

O3 ozone

OC/EC organic carbon/elemental carbon

PAH polycyclic aromatic hydrocarbon

PC population centre

PM particulate matter

PM2.5 particulate matter ≤2.5 µm (fine)

PM2.5–10 particulate matter ≤10 µm and ≥2.5 µm (coarse)

PM10 particulate matter ≤10 µm

ppb parts per billion

ppm parts per million

QA/QC quality assurance/quality control

RM reference method

RMS root mean square

Rt residence time

SCC sharp cut cyclone

SO2 sulphur dioxide

SOP standard operating procedure

SRM standard reference material

SRP standard reference photometer

TEOM tapered element oscillating microbalance

TRAP traffic-related air pollution

US EPA United States Environmental Protection Agency

UV ultraviolet

VOC volatile organic compound

VSCC very sharp cut cyclone

VSL Dutch National Metrology Institute

WHMIS Workplace Hazardous Materials Information System

GLOSSARY

Accuracy: The comparison of a measurement to a known value. Accuracy can include measures of agreement among repeated measurements (precision) and measurements of positive or negative systematic errors (bias).

Actual conditions: The ambient temperature and pressure of a gas during the time its volume (or volumetric flow rate) is measured.

Buddy sites: Sites that are in close proximity or that would be expected to measure similar concentrations.

Calibration: Adjustment of an instrument or firmware that establishes the relationship between instrument response and expected concentration. It compares values delivered by a device under testing with those of a calibration standard of known accuracy (traceable).

Calibration range: Scale used for multi-point verification and calibration.

Comparability: A qualitative term that expresses the confidence that data sets or methods can be compared with those at other sites for common interpretation and analysis. Data comparability is achieved via uniform procedures and methods.

Completeness: Comparison of the valid data collected versus the total number of data points expected for the measurement frequency (e.g., hourly, daily, seasonally, annually). Completeness confirms whether enough information is being collected to ensure confidence in the conclusion or decisions made with the resulting data.

Continuous Data: Data that are collected using continuous monitoring equipment.

Data flag: Metadata applied to each continuous data record during data collection; can be modified during the validation process.

Datalogger/data acquisition system: Device that collects data and other information from instruments at the monitoring site.

Data qualifier code: Metadata applied to each integrated data record during the validation process.

Data validation: Process of examining objective evidence to confirm that the data are fit for purpose.

Detection limit: The lowest value that a method can report with confidence.

Integrated Data: Data that are integrated from chemical and gravimetric analysis by the NAPS Laboratory on integrated samples.

Multi-point verification: Establishes and subsequently verifies the accuracy and linearity of the instrument at regular intervals to ensure data validity. It must include a pre- and post-zero and at least three upscale points (100%, 60% and 30% of calibration range) in recommended ranges.

Outlier: Data point that is statistically separate from the rest of the data set.

Performance audit: A quantitative evaluation of a measurement system by an independent auditor to determine if criteria are meeting specifications.

Quality assurance (QA): An integrated system that involves determination of monitoring and data quality objectives, network design, site selection, equipment evaluation and training to ensure measurements meet defined standards of quality.

Quality control (QC): Operational procedures and checks used to assess equipment performance relative to desirable or specified criteria. QC is also a check or comparison performed during data validation for the purpose of identifying data that may be invalid, suspect or in need of adjustment.

Reference standard: The standard used by the monitoring organization to which all other gas mixtures or instruments are compared. It can be a standard reference material (SRM) or a transfer standard but not both.

Representativeness: The degree to which data accurately and precisely represent the pollutant concentration of an air parcel surrounding the site for a specific averaging period.

Residence time: The amount of time in seconds that it takes for a sample of air to travel from the

sampling inlet to the instrument.

Rise/fall time: The time interval between initial response (the first observable change in analyzer

output) and a level of signal output that is 95% of the steady state output after a step

increase (rise) or decrease (fall) in input concentration.

Sampling inlet: An opening through which air enters the sampling system before continuing to an

analyzer, monitor or sampler.

Span check: The introduction of a known concentration of a gas near the calibration range. The

span check point is compared to a reference span value established at the time of multi-

point verification or calibration.

Span drift: The percent change in analyzer response to a constant upscale pollutant concentration

over a certain number of hours of unadjusted continuous operation.

Standard reference material: A material or gas mixture whose composition is known and is taken

as the standard to which all other gas mixtures are compared. In the NAPS Network, this

refers to the NIST or VSL materials and the NIST Standard Reference Photometer (SRP).

Suspect data: Data that do not follow expected behaviour (e.g., statistical, historical trend, temporal

or spatial). They can also be data that do not have the required documentation or the

supporting QC checks.

Tolerance levels: Levels at which calibrations or repair should be initiated to address issues before

acceptance criteria are exceeded and data becomes invalid.

Traceability: An unbroken chain of calibrations linked to national/international standards such as

NIST, VSL, National Research Council of Canada’s Measurement Science and Standards

Research Centre (NRC-MSS), and Innovation, Science and Economic Development

Canada.

Transfer standard: A gas mixture of known concentration or an instrument of known accuracy

verified against a reference standard. It is used in the field for comparison and analytical

purposes.

Ultra-fine particles: Particulate matter of size less than 0.1μm (100 nm) in diameter.

Zero air/zero gas: A gas mixture that is free of contaminants to a concentration below the detection

limit of the analyzer.

Zero drift: The absolute change in analyzer response to a constant zero air input over a certain

number of hours of unadjusted continuous operation.

Zero noise: Measure of the deviations from zero while sampling constant zero air. The noise is

measured as the root mean square (RMS) of the deviations from zero.

Zero check: Pollutant-free air introduced to measure responses below the analyzer’s detection

limit.
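
The noise and drift definitions above can be illustrated with a short calculation. The following Python sketch is illustrative only and is not part of the NAPS procedures. The function names are hypothetical, the zero drift is reported as a signed difference in concentration units (an assumption; take its magnitude if an unsigned value is wanted), and the span drift is assumed to be expressed relative to the initial span response.

```python
import math


def zero_noise_rms(zero_air_readings):
    """Root mean square (RMS) of the deviations from zero while sampling zero air."""
    if not zero_air_readings:
        raise ValueError("at least one reading is required")
    return math.sqrt(sum(r * r for r in zero_air_readings) / len(zero_air_readings))


def zero_drift(initial_response, final_response):
    """Change in response to constant zero air over the unadjusted period.

    Assumption: returned as a signed difference in concentration units.
    """
    return final_response - initial_response


def span_drift_percent(initial_response, final_response):
    """Percent change in response to a constant upscale concentration.

    Assumption: the change is expressed relative to the initial response.
    """
    if initial_response == 0:
        raise ValueError("initial span response must be non-zero")
    return 100.0 * (final_response - initial_response) / initial_response
```

For example, an analyzer that reads 400.0 ppb at the start of the period and 404.0 ppb at the end, for the same span gas, has a span drift of 1% under these assumptions.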

1.0 INTRODUCTION

The National Air Pollution Surveillance (NAPS) Program is managed by provincial and territorial

governments across Canada in cooperation with Environment and Climate Change Canada

(ECCC) (Figure 1-1).

In existence since 1969, NAPS was established

to facilitate the collection of air quality data

primarily in urban areas (Figure 1-2). Program

goals include providing a long-term air quality

data record that conforms to quality standards

designed to ensure data are reliable, defensible

and easily accessible.

The quality of monitoring data is contingent

upon the entire air quality monitoring system:

station siting, instrumentation selection and

performance, and data collection, validation and

dissemination. The NAPS Ambient Air

Monitoring and Quality Assurance/Quality

Control (QA/QC) Guidelines (hereafter referred

to as the “Guidance”) are intended to assist air

monitoring networks reporting data to the

Canada-wide Air Quality Database (hereafter

referred to as “Networks”) to develop and

implement quality systems to meet, at a

minimum, the NAPS data quality objectives

(DQO).

This Guidance is designed to be both practical

and achievable by the participating Networks.

2.0 NAPS PROGRAM AND

MONITORING OBJECTIVES

The NAPS Program was established to facilitate

and coordinate the collection of ambient air quality

data that is representative of populated areas

across the country. NAPS is the primary source of

air monitoring information in Canada, with nearly

260 stations located in approximately 150

communities reporting to the Canada-wide Air

Quality Database (CWAQD). Ambient air quality

monitoring is an essential component of Canada’s air pollution management and research program. Air quality data collected by the NAPS Program are used by governments to assess air quality, produce reports, and develop air quality monitoring programs.

Figure 1-1 NAPS Network

Figure 1-2 Air quality parameters currently measured by NAPS

Air quality data are currently collected from nearly 300 stations Canada-wide, including:

Continuous measurements:

• ground-level ozone (O3)

• oxides of nitrogen (NO/NO2/NOX)

• sulphur dioxide (SO2)

• carbon monoxide (CO)

• PM2.5

Integrated measurements:

• particulate matter (PM2.5/PM2.5–10)

• volatile organic compounds (VOC) including carbonyls

• polycyclic aromatic hydrocarbons (PAH)

The resulting data support government policies, programs and research studies.

The NAPS Program:

• supports the air quality data needs of the Air Quality Management System (AQMS); the

Canada-United States Air Quality Agreement; the Canadian Air Quality Prediction

Program; the Canadian Environmental Sustainability Indicators (CESI) Program; and

other regional, Canada-wide and international air quality initiatives that may arise

• provides common guidance on collection, measurement, validation and transmission of

data from participating monitoring networks across Canada

• provides centralized laboratory facilities to ensure common analysis techniques that

meet or exceed Canadian laboratory standards

• provides a centralized data repository to facilitate access to information

• provides Canada-wide data summary reports that highlight spatial patterns and regional

assessments of air quality conditions and long-term trends

• performs technical and scientific research to identify additional potential pollutants of

concern and to evaluate appropriate monitoring methods

• shares information and experiences among Networks on air quality monitoring.

2.1 NAPS Monitoring Objectives

The most important consideration in designing or implementing any monitoring system is defining

its monitoring objectives. Monitoring objectives are statements that clarify the purpose for

monitoring and ensure that the data collected are fit for the intended use. The following are the

primary and secondary monitoring objectives of the NAPS Program.

Primary objectives:

• track and report on progress for achieving air quality objectives or standards

• measure representative pollutant concentrations in populated areas across Canada and

determine long-term trends in air quality

• provide air quality information to the public.

Secondary objectives (not in order of priority):

• support the development of air management strategies

• support regional, Canada-wide and international air quality agreements and initiatives

• support research studies to assess air pollution impacts on both health and ecosystems

• verify and validate emissions inventories, models, mapping applications, and support

forecast and advisory programs

• support development and evaluation of new monitoring technologies and their

application in the NAPS Program

• measure the highest representative pollutant concentrations in populated areas

• measure the upwind and downwind air pollution affecting urban areas

• measure regional background concentrations and transport of pollutants from regional

sources (including transboundary sources).

3.0 NAPS PROGRAM ORGANIZATION

Participation in the NAPS Program is formalized through a memorandum of understanding (MOU)

between the federal, provincial and territorial governments (Figure 3-1).

All parties and NAPS sites are specified in the MOU, which outlines the general terms and

conditions of cooperation among the parties that participate in overall management and support of

the NAPS Program.

Figure 3-1 NAPS MOU parties

3.1 NAPS Program Operation

The NAPS Program is operated by ECCC, provincial and territorial governments as well as Metro

Vancouver Regional District and Ville de Montréal.

Their respective roles are defined below.

Environment and Climate Change Canada

The Analysis and Air Quality Section (AAQS) of the Air Quality Research Division within the

Science and Technology Branch of ECCC oversees the NAPS Program.

The roles of NAPS Operations with respect to this Guidance are to:

• direct and coordinate a Canada-wide quality assurance/quality control (QA/QC) program

(including monitoring performance and system audits, as well as inter-agency testing

programs)

• provide laboratory calibration services and field transfer standards

• provide technical training and support to participating Networks.

The roles of NAPS Laboratory are to:

• provide sampling media for manual or integrated samplers (including filters, canisters

and cartridges) and to receive samples

• analyze integrated samples for particulate matter (PM) components, polycyclic aromatic

hydrocarbons (PAH) and volatile organic compounds (VOC)

• conduct research and special studies in air quality monitoring and coordinate method

development with participating Networks.

The roles of NAPS Data Management are to:

• maintain the CWAQD and the NAPS Data Products portal

• manage NAPS ambient air quality data and information requests

• report validated NAPS laboratory results to the NAPS Data Products portal

• report validated continuous data received from provinces and territories to the NAPS

Data Products portal

• analyze and report on ambient air quality.

Provincial and Territorial Governments, Metro Vancouver and Montréal

Provincial and territorial governments as well as Metro Vancouver Regional District and Ville de

Montréal contribute to the NAPS Program.

In accordance with this Guidance, they should:

• prepare and submit a Network Quality Assurance Plan (NQAP)

• select, maintain, calibrate and operate NAPS sites, analyzers, monitors and samplers

• validate and archive continuous data collected at NAPS sites

• inform ECCC of any change or updates to monitoring sites and equipment

• report final validated continuous data to NAPS Data Management.

4.0 DATA QUALITY OBJECTIVES (DQO)

DQO are qualitative and quantitative statements that define the tolerable level of data uncertainty.

The DQO of the NAPS Program ensure that the data collected and reported by monitoring sites

are of acceptable quality to meet Program objectives (as described in Section 2.0). This Guidance

has been designed to help ensure that uncertainties associated with the DQO are controlled through

appropriate planning, implementation and assessment.

Controlling data quality to achieve the DQO requires identifying both the appropriate criteria and

the methodologies that can be used to achieve DQO.

Important DQO elements for the NAPS Program are:

• Accuracy: The comparison of a measurement to a known value. Accuracy can include

measures of agreement among repeated measurements (precision) and measures of

positive or negative systematic errors (bias).

• Comparability: A measure of confidence that one data set or method can be compared

with those at other NAPS sites.

• Completeness: Comparison of the valid data collected versus the total number of data

points expected for the measurement frequency. This assessment confirms whether

enough information is being collected to ensure confidence in the conclusion or decisions

made with the resulting data.

• Detection limit: Detection limit is the lowest value that the method can report with

confidence.

• Representativeness: The degree to which data accurately and precisely represent the

pollutant concentration of an air parcel surrounding the site for a specific averaging

period. A monitoring site may also be representative of surrounding environments and

other influences (e.g., exposure of the general population or the impacts of emissions

from traffic). These sites can be used for grouping, interpreting and extrapolating NAPS

data.

The NAPS Program has defined specific criteria for some of the DQO, listed in Table 4-1.

Table 4-1 NAPS Program DQO criteria

| Pollutant | Sampling frequency | Averaging period | Accuracy | Completeness¹ |
|---|---|---|---|---|
| O3, NO/NO2/NOX, SO2, CO | continuous | 1 hour | 15% | 75% |
| PM2.5 | continuous/semi-continuous | 24 hours | 15% | 75% |
| Speciated particulate compounds | 1 per 3 or 6 days² | 24 hours | see note 3 | 75% |
| VOC/carbonyls/PAH | 1 per 6 days² | 24 hours | see note 3 | 75% |

¹ Completeness refers to the amount of valid data represented for the indicated averaging period (e.g., 45 valid one-minute averages within an hour, or 18 valid one-hour averages in a 24-hour period).

² Varies by location.

³ Accuracy values are pollutant-specific and compound-specific, as indicated in specific analytical laboratory standard operating procedures (SOP) and methods.
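
The completeness criterion in Table 4-1 reduces to simple arithmetic: 45 of 60 one-minute averages and 18 of 24 one-hour averages both equal 75%. The following Python sketch illustrates that check. The function names are hypothetical and the code is an illustration only, not a NAPS validation tool; the 75% default is taken from Table 4-1.

```python
def completeness_percent(valid_count, expected_count):
    """Percentage of expected data points that are valid for an averaging period."""
    if expected_count <= 0:
        raise ValueError("expected_count must be positive")
    if not 0 <= valid_count <= expected_count:
        raise ValueError("valid_count must be between 0 and expected_count")
    return 100.0 * valid_count / expected_count


def meets_completeness(valid_count, expected_count, criterion_percent=75.0):
    """True if the averaging period meets the completeness criterion."""
    return completeness_percent(valid_count, expected_count) >= criterion_percent


# One-hour average built from one-minute averages (60 expected).
hour_ok = meets_completeness(45, 60)
# 24-hour average built from one-hour averages (24 expected).
day_ok = meets_completeness(18, 24)
```

An hour with only 44 valid one-minute averages (73.3%) would not meet the criterion under this check.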

Table 4-2 identifies the DQO elements and their associated determination methodology as

provided in this Guidance. If methods follow guidelines and meet the quality acceptance criteria

described in the Guidance, the NAPS DQO should be met. Adherence to this Guidance should be

verified as described in Assessments and Corrective Action (Section 15.0). If deficiencies are

noted, the assessments can help inform either the need for corrective action or a reassessment of

DQO (as well as associated methods and measurement objectives for future use).

Table 4-2 NAPS Program DQO and relevant determination methodologies

DQO

Determination methodologies

Accuracy

Accuracy is assessed through comparisons to certified, traceable reference standards or methods. The relevant sections addressing accuracy are:

• use of analyzers, samplers and methods with appropriate performance specifications, including method-detection limits, as described in:

– Section 9.0, Monitoring, Sampling and Analytical Methods

• calibration and audit checks against certified, traceable reference standards, as described in:

– Section 11.0, Verification and Calibration

– Section 15.0, Assessments and Corrective Action

• use of QC checks and performance acceptance criteria to invalidate data that do not meet data quality objectives (DQO) related to data accuracy, as described in:

– Section 11.0, Verification and Calibration

– Section 12.0, Data Collection and Validation – Continuous Data

– Section 13.0, Data Collection and Validation – Integrated Data

• collection of collocated and duplicate samples, as described in:

– Section 13.0, Data Collection and Validation – Integrated Data

Comparability

Comparability is the consistency of measurements throughout the NAPS Program. The relevant sections addressing comparability are:

• consistent site design, as described in:

– Section 7.0, Network Design and Site Location

– Section 8.0, Monitoring Station Design

• use of monitoring and analysis methods that meet defined minimum performance specifications, as described in:

– Section 9.0, Monitoring, Sampling and Analytical Methods

• consistent operation and implementation of monitoring and analysis methods, as described in:

– Section 10.0, Routine Operation

– Section 11.0, Verification and Calibration

• consistent data validation techniques, as described in:

– Section 12.0, Data Collection and Validation – Continuous Data

– Section 13.0, Data Collection and Validation – Integrated Data

Detection limit

• use of analyzers, samplers and methods with appropriate performance specifications, including method-detection limits, as described in Section 9.0, Monitoring, Sampling and Analytical Methods

Completeness

Meeting this requirement means minimizing data collection issues that could result in missing or invalid data. The relevant sections addressing completeness are:

• ensuring adequate education and training for field and data personnel, as described in Section 5.0, Training

• ensuring sufficient documentation exists to provide procedure references, track issues and reduce errors, as described in Section 6.0, Documentation and Records

• verifying the safe unattended operation of the monitoring station and equipment, and appropriate preventative maintenance to improve system reliability, as described in Section 10.0, Routine Operation

Representativeness

Representativeness relates to the temporal and spatial scale of pollutant concentrations, along with methods that are able to appropriately represent the pollutants measured. The relevant sections addressing representativeness are:

• sufficient sampling frequency, as listed in Table 4-1

• site classification, as defined in Section 6.2, Site Classification

• appropriate site location to represent target populations, as described in Section 7.0, Network Design and Site Location

• station design, as described in Section 8.0, Monitoring Station Design

• selection of appropriate sampling/analytical methods, as described in Section 9.0, Monitoring, Sampling and Analytical Methods

5.0 TRAINING

Relevant education and training are essential for the ability of a monitoring program to achieve

network objectives. Networks are responsible for ensuring that appropriate training is available to

employees supporting the NAPS Program, commensurate with functions and activities listed in

this Guidance. The Networks’ NQAP and SOP are important references for training purposes.

Often, more experienced staff will provide training to newer staff, but a number of additional

training opportunities are available. Networks should maintain records of personnel qualifications

and training that are accessible for review during audit activities.

NAPS Operations will facilitate training related to instrument operation, maintenance and repair;

station set-up; and QA/QC. NAPS Program managers will also facilitate training that may be

offered by equipment manufacturers.

Furthermore, a number of courses have been developed for personnel involved with ambient air

monitoring. These courses are offered through government and professional organizations such as

the Air & Waste Management Association and the United States Environmental Protection Agency

(US EPA) Air Pollution Training Institute. Many of these training opportunities are offered online

and in person. In addition to training related to air quality monitoring, it is recommended that

Networks implement a health and safety training program.

Field technicians who work at NAPS sites to install, troubleshoot and repair electrical equipment

should have training related to:

• workplace electrical safety (CSA Group)

• Workplace Hazardous Materials Information System (WHMIS)

• working alone

• working at heights and ladder safety

• proper lifting techniques

• safe driving techniques

• first aid.

Transportation of Dangerous Goods training is required for any staff who handle, ship or offer

for shipping dangerous goods.

6.0 DOCUMENTATION AND RECORDS

Maintaining appropriate documentation and records is an essential component to ensure that

objectives are met and that defensible data are collected and reported. Table 6-1 outlines the

recommended documentation for several categories of air quality monitoring activities.

Components of NQAP are also described in this section.

All documentation should be easily accessible and retained for a minimum of five years. Most

documentation referred to in this section can be in electronic form, enabling ease of accessibility

and longer-term storage.

Responsibility for identifying, preparing and supervising quality management documents and

records lies with the Network that created these documents or records. Networks may work with

NAPS Operations to incorporate documents into a control system, either as a new document or as

part of an existing document (e.g., NQAP, SOP, calibrations). Previous versions of documents

should be archived if no longer in use. Effective document management includes a system for

generating, updating, maintaining and disseminating quality management–related documents and

records. Actual, ongoing and completed records are to be maintained as part of the Network’s

overall record management system and should be available to NAPS Operations upon request.

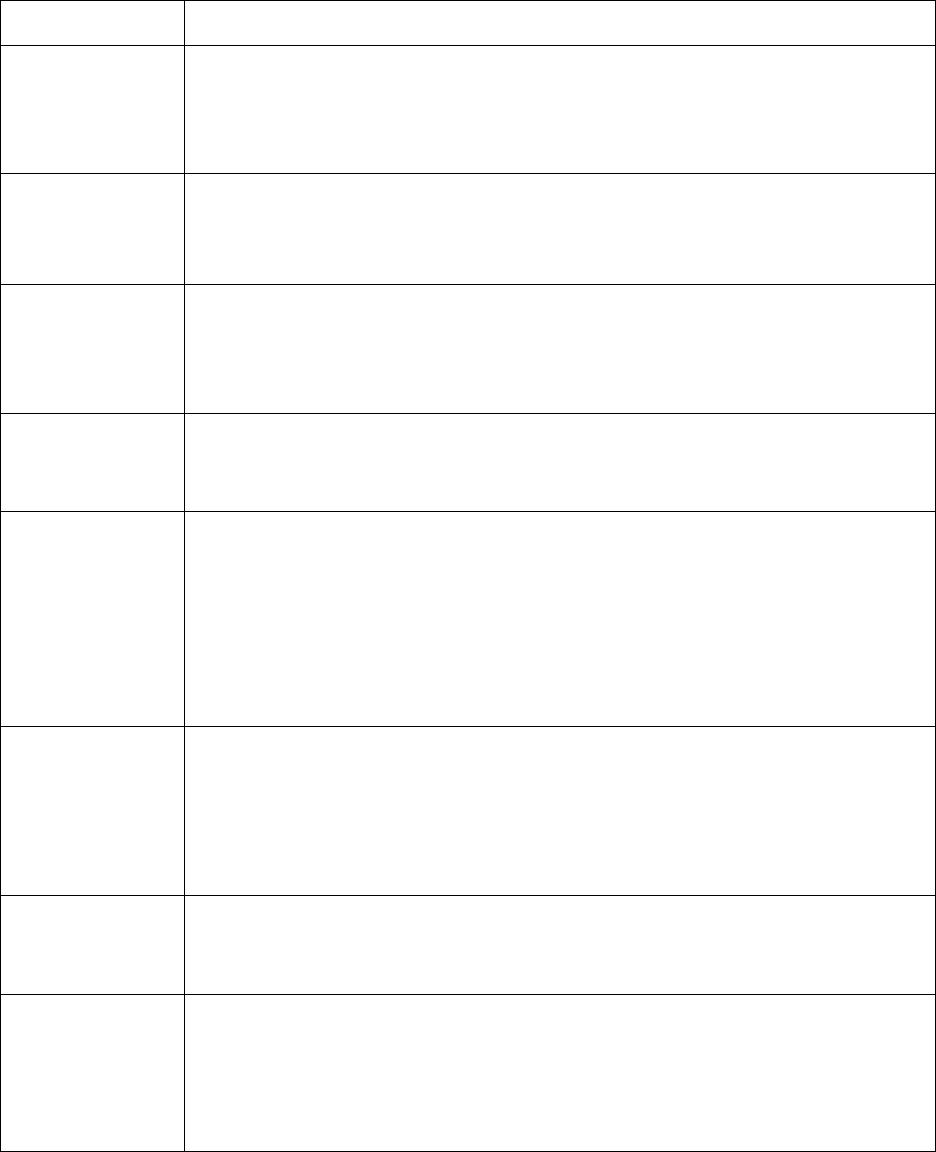

Table 6-1 Recommended documentation and records

| Documentation | Relevant section |
|---|---|
| NQAP; site information; equipment inventory; SOP | Section 6.0, Documentation and Records |
| Instrument maintenance records; sample handling records; station logs | Section 10.0, Routine Operation |
| Calibration documentation | Section 11.0, Verification and Calibration |
| Raw data; validation logs; validated data | Section 12.0, Data Collection and Management: Continuous Data |
| Integrated field data sheets; integrated validated data | Section 13.0, Data Collection and Management: Integrated Data |
| Audit records; audit reports | Section 15.0, Assessments and Corrective Action |

6.1 Network Quality Assurance Plan (NQAP)

It is important to note that while this Guidance provides QA criteria and recommended procedures

to meet QA goals, specific procedures to meet these goals should be determined by the Network

performing the actual monitoring and reporting.

The NAPS Program is initiating a process that requests that all participating Networks and ECCC

develop an NQAP. The NQAP will describe how Networks are implementing the Guidance in order

to meet the DQO. These plans should be completed by 2021 and reviewed annually thereafter. An

NQAP facilitates communication among data users, staff, Networks and external users of the data.

An NQAP can relate to a single site or a group of sites operated by the same Network using similar

methodologies.

NAPS Program managers will submit their

respective NQAP and subsequent updates to

ECCC prior to the annual management

meeting. The NQAP will be available to

Networks on a document-sharing website with

restricted access.

Figure 6-1 and the rest of this section describe

proposed elements of the NQAP as related to

this Guidance. Networks should indicate

criteria that were not met and what corrective

actions will be implemented to address these.

Monitoring Program Management

Provide a revision history of the NQAP, along with the following:

• program organization, including a list of individuals and Networks involved with the program that identifies their roles and responsibilities (an organizational chart showing the relationships and lines of communication among project personnel is also helpful)

• special training/certifications (Section 5.0)

• DQO (Section 4.0)

• documentation and records, including a list of types of records generated (Table 6-1).

Routine Operation (Section 10.0)

• Provide schedule for routine operations, including site visits and verification/calibration

checks

• provide references to any inspection/maintenance requirements in instrument or method-

specific SOP

• describe any instrument testing, inspection and maintenance checklists and schedules

• describe corrective action to be taken if issues are noted.

Figure 6-1 Elements of an NQAP:

• Monitoring program management

• Routine operation

• Verification and calibration

• Data collection and validation for continuous data

• Sample collection for integrated data

• Data reporting

• Assessments and corrective action

• Site and equipment information

Verification and Calibration (Section 11.0)

• List verification/calibration frequency for each instrument type requiring verification

checks and calibrations

• provide references to any calibration procedures in instrument-specific SOP (Note that

ECCC has developed a number of NAPS SOP, but these should be modified to

accurately reflect the specific methods and procedures used by a monitoring Network)

• describe methods for calibration and example forms

• provide references to QC check acceptance criteria used (Section 11.0)

• describe methods for tracking traceability/frequency and certification for calibration

standards.

Data Collection and Validation for Continuous Data (Section 12.0)

• Describe any software used and procedures for data collection, handling and storage

• identify data flags used to screen or invalidate data

• identify “on the fly” rules for flagging and correcting data from sites

• identify levels of validation and individuals responsible for each level

• list any criteria used to accept, reject or qualify data (at a minimum, performance-check

acceptance criteria listed in Section 10.0 should be used)

• describe analysis methods used for identification and treatment of outliers.

Sample Collection for Integrated Data (Section 13.0)

• Describe handling of integrated samples (see method-specific SOP)

• describe or provide procedures for inspection/acceptance of sample supplies and media

• outline procedures for filling out and submitting field data sheets for sample-tracking

purposes

• describe corrective action to be taken if problems arise.

Data Reporting (Section 14.0)

• Describe methods and schedules for data reporting (e.g., how data are submitted, format,

hour-ending or hour-beginning)

• describe any Network-specific reports

• provide schedule and level of validation for data reporting to the NAPS CWAQD.

Assessments and Corrective Action (Section 15.0)

• Provide an approximate schedule for any internal or external assessments

• include procedures for assessment review and responses.

Site and Equipment Information

Gathering and maintaining accurate site information is a vital part of records management for air

monitoring networks. Site information records should include, but are not limited to:

• the unique NAPS ID for each site

• site name and location, including geographical coordinates (lat/long, elevation above sea

level), postal code and street address (if available)

• spatial scale of representativeness (Section 7.2)

• monitoring start date (and stop date, if applicable)

• site photographs in each cardinal direction

• site map or satellite image of the area (e.g., Google Earth image)

• sampling inlet placement (Section 8.2.1), including height above ground (metres),

distances from local pollutant sources (e.g., roads) and flow obstructions (e.g., trees)

• site diagrams, sketches or photos (e.g., manifold flow diagram, service lines

(electrical/communication), equipment configurations)

• instrumentation, sampling and analysis method for each parameter at each site (Section

9.0)

• averaging time for continuous measurements and sampling frequency for

integrated samples (see minimum requirements in Section 4.0).

Note that a number of these site details are required metadata when reporting to the CWAQD.
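
A Network that keeps site information electronically might hold each site as a structured record with basic consistency checks. The Python sketch below is one possible arrangement, offered as an illustration only. The class, field names and checks are hypothetical and cover only part of the list above; they are not the CWAQD metadata schema.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class SiteRecord:
    """Subset of the site information listed above (hypothetical field names)."""
    naps_id: str
    site_name: str
    latitude: float          # decimal degrees
    longitude: float         # decimal degrees
    elevation_m: float       # metres above sea level
    spatial_scale: str       # spatial scale of representativeness
    monitoring_start: date
    inlet_height_m: float    # sampling inlet height above ground, in metres
    monitoring_stop: Optional[date] = None
    postal_code: Optional[str] = None
    street_address: Optional[str] = None


def validate_site(record: SiteRecord) -> List[str]:
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    if not record.naps_id.strip():
        problems.append("NAPS ID is empty")
    if not -90.0 <= record.latitude <= 90.0:
        problems.append("latitude is outside -90 to 90 degrees")
    if not -180.0 <= record.longitude <= 180.0:
        problems.append("longitude is outside -180 to 180 degrees")
    if record.inlet_height_m <= 0:
        problems.append("inlet height must be greater than zero")
    if record.monitoring_stop is not None and record.monitoring_stop < record.monitoring_start:
        problems.append("stop date is earlier than start date")
    return problems
```

Photographs, maps and diagrams would normally be stored as files referenced from such a record rather than inside it.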

6.2 Site Classification

For site classification, the NAPS Program has adopted a hierarchical classification system (Table

6-2) based on work by University of British Columbia researchers (Brauer et al. 2011; Brauer et

al. 2013; Brauer and Hystad 2012). This classification system includes variables derived in a

geographic information system (GIS) and captures urbanization, neighbourhood population, local

land use and site type characteristics as described below. This system provides important metadata

information that can be used for grouping, interpreting and extrapolating NAPS measurement data.

6.2.1 Class 1: Urbanization

Class 1 identifies the degree of urbanization

around the monitoring site (Figure 6-2). The

Statistics Canada census population centre

(PC) classification (Statistics Canada 2017a,

Dictionary) is used to define levels of

urbanization. A PC is a populated place or a

cluster of interrelated populated places having

a population of at least 1,000 people and a

density of no fewer than 400 people per km²,

based on the latest census. All areas outside

PCs are classified as non-urban (rural) areas.

Taken together, PCs and non-urban areas cover

all of Canada.

A 250 m buffer was used to capture sites that are adjacent to but not contained within the PC boundaries.

Figure 6-2 Urbanization

6.2.2 Class 2: Neighbourhood Population

Class 2 summarizes the size of residential populations residing within 4 km of NAPS monitoring

sites (Figure 6-3). A distance of 4 km represents the maximum distance associated with a

neighbourhood scale of spatial representativeness (Section 7.2). Census “block-face” population

(the smallest geographic area for which

census information is collected) was

used to determine the residential

population within 4 km.

Six population size categories have

been selected to maximize the

differences between sites. They are

meant to aid in the grouping of similar

sites and provide insights into emission

source strengths, such as domestic

heating. The neighbourhood population

provides further information to inform

population exposure assessment.

6.2.3 Class 3: Local Land Use

Class 3 represents the dominant land use category within a 400 m radius around each site (Figure

6-4). A radius of 400 m was selected because this distance represents the middle scale of spatial

representativeness (Section 7.2) and corresponds to the US Air Quality System metadata, which

summarizes land use within a quarter-mile radius of sites.

Land use data sets are used to assess this

classification. The DMTI Spatial© data set,

at a spatial resolution of 30 m, covers

exclusively urban areas and includes

residential, industrial, commercial,

government and institutional, open, parks,

and waterbody classifications. The

government and institutional classifications

are not included in the NAPS classification,

as this category captures a diverse mix of

land use types.

The forested and agriculture land use classifications come from the 2010 North American Landscape Characterization (NALC) data set, which covers all areas of Canada at a spatial resolution of 250 m, since these classifications are not available in the DMTI Spatial© data set. The higher spatial resolution (30 m) 2010 land use data set produced by Agriculture and Agri-Food Canada was also used in conjunction with the NALC for the current version update in 2017. Similar to NALC, this data set covers the entirety of Canada and includes the classifications of forest, cropland, settlement, wetland and waterbody.

Figure 6-3 Neighbourhood Population

Figure 6-4 Local land use

Aerial imagery is required to classify monitoring sites that meet one of the following criteria:

• have incomplete DMTI Spatial© data within the 400 m buffers

• are under DMTI Spatial©’s government and institutional classification

• are under DMTI Spatial©’s open area category and have a NALC urban classification

• are under DMTI Spatial©’s resource and industrial classification.

Eight categories are used to describe the land use surrounding NAPS sites (Table 6-2).

6.2.4 Class 4: Site Type

Class 4 characterizes sites in terms of source influences. These include general population

exposure, regional background and local source–influenced (transportation and point source).

General Population Exposure

General population exposure sites measure urban background conditions where concentration

gradients are usually small, so measurements tend to be quite representative of larger areas and

thus are suitable to assess community-wide or neighbourhood-wide population exposure. This site

type is the most common in the NAPS Program (Figure 6-5).

Figure 6-5 Site Types

Regional Background

Regional background sites are sited outside of urban areas to measure:

• air pollutants flowing into an urban area from distant sources, including transboundary

sources

• air pollutants flowing out of an urban area

• background concentrations.

These sites are used to determine the contribution of local sources versus distant sources to air

pollutant concentrations. They may also be sited to extend the spatial coverage of monitoring for

use in air quality forecasting, mapping, modelling and remote sensing applications.

Local Source–Influenced

Local source–influenced sites tend to be pollutant-specific and include both transportation-

influenced and point source–influenced sites. They are sited to represent air pollutant exposure to

populations residing within the influence of the source(s).

Transportation-influenced sites

These sites are located in areas significantly impacted by transportation emissions (defined as

within 100 m of a major roadway). A distance of 100 m was selected based on a review conducted

by the Health Effects Institute (2010) on traffic-related air pollution (TRAP) gradients and health

effects. Major roadways are classified as having volumes greater than 15,000 annual average daily

traffic (AADT) counts. Only sites within 100 m of major roadways in large urban or medium urban

areas are classified as transportation-influenced based on a review of the traffic volumes in

urbanization classes.

Other types of transportation-influenced sites (off-road vehicles and engines, rail, marine and

aviation) are classified according to their proximity to these sources and based on an analysis of

the air quality data.
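
The road-based criteria above can be expressed as a simple rule. The Python sketch below is illustrative only. The function name and arguments are hypothetical, "within 100 m" is assumed to include exactly 100 m, and the rule does not cover off-road, rail, marine or aviation sources, which are classified using proximity and an analysis of the air quality data.

```python
MAJOR_ROAD_AADT = 15_000        # major roadway: AADT greater than this value
MAX_ROAD_DISTANCE_M = 100       # distance criterion, in metres
ELIGIBLE_URBANIZATION = {"LU", "MU"}  # large urban and medium urban areas


def is_road_transportation_influenced(distance_to_road_m, aadt, urbanization_code):
    """True if a site meets the road-based transportation-influenced criteria."""
    return (
        distance_to_road_m <= MAX_ROAD_DISTANCE_M
        and aadt > MAJOR_ROAD_AADT
        and urbanization_code in ELIGIBLE_URBANIZATION
    )
```

For instance, a site 80 m from a road carrying 20,000 AADT in a large urban area meets the criteria, whereas the same site in a small urban area does not.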

Point source–influenced sites

These sites are located in populated areas close to (typically within 10 km of) a major stationary emissions source of VOC or SO2 (for SO2, ~1 kt or greater per year). An analysis of point source sites indicated much higher levels of SO2 or VOC compared with transportation and general population exposure site types, confirming that the point source sites are being significantly influenced by these sources.

Site classification should be documented in the Networks’ central databases as metadata records

and will help end-users and analysts with data interpretation and reporting.

Table 6-2 NAPS site classification system

| Site class | Variables | Definition | Code |
|---|---|---|---|
| Urbanization | large urban area | large PC¹ (population ≥100,000) | LU |
| | medium urban area | medium PC¹ (population between 30,000 and 99,999) | MU |
| | small urban area | small PC¹ (population between 1,000 and 29,999) | SU |
| | non-urban (rural) area | non-urban area¹ (population <1,000) | NU |
| Neighbourhood population | <500 | categories of residential population within 4 km of site | P1 |
| | 500–9,999 | | P2 |
| | 10,000–49,999 | | P3 |
| | 50,000–99,999 | | P4 |
| | 100,000–149,999 | | P5 |
| | ≥150,000 | | P6 |
| Local land use | residential | the dominant land use category within a 400 m radius | R |
| | commercial | | C |
| | industrial | | I |
| | parks | | P |
| | water | | W |
| | agriculture | | A |
| | forested | | F |
| | open | | O |
| Site type | general population exposure | site located in an urban area where populations live, work, shop, play, and that are not classified as transportation or point sources | PE |
| | regional backgrounds | site outside urban area | RB |
| | transportation source–influenced | site within 100 m of a major road² or influenced by off-road vehicles and engines, rail, marine or aviation sources located in an urban area | T |
| | point source–influenced | site near (< ~10 km) a major stationary emissions source³ located in an urban area; classification based on VOC and SO₂⁴ ambient measurement data | PS |

¹ A population centre (PC) is defined as having a minimum population concentration of 1,000 people and a population density of at least 400 people per square km. All areas outside PCs are classified as non-urban (rural areas) (Statistics Canada 2017a).

² All freeways, highways, and arterial and collector roads with an AADT >15,000 (US EPA 2018).

³ Stationary sources include: industrial facilities, power generation, incinerators and waste-treatment plants.

⁴ SO₂ emissions greater than ~1,000 tonnes per year (RWDI 2016).
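The population-based codes in Table 6-2 lend themselves to a simple lookup. The sketch below is illustrative only: the function names are hypothetical, and it takes a population count as given. It does not check the density criterion (at least 400 people per square km) that is part of the population centre definition in footnote 1.

```python
from bisect import bisect_right

# Lower bounds of each class, taken from Table 6-2.
_URBAN_BOUNDS = [1_000, 30_000, 100_000]
_URBAN_CODES = ["NU", "SU", "MU", "LU"]

_NEIGHBOURHOOD_BOUNDS = [500, 10_000, 50_000, 100_000, 150_000]
_NEIGHBOURHOOD_CODES = ["P1", "P2", "P3", "P4", "P5", "P6"]


def urbanization_code(pc_population: int) -> str:
    """Map a population centre's population to LU, MU, SU or NU."""
    if pc_population < 0:
        raise ValueError("population cannot be negative")
    # bisect_right counts how many lower bounds the population has reached.
    return _URBAN_CODES[bisect_right(_URBAN_BOUNDS, pc_population)]


def neighbourhood_population_code(population_within_4km: int) -> str:
    """Map the residential population within 4 km of a site to P1 through P6."""
    if population_within_4km < 0:
        raise ValueError("population cannot be negative")
    return _NEIGHBOURHOOD_CODES[bisect_right(_NEIGHBOURHOOD_BOUNDS, population_within_4km)]
```

Keeping the class boundaries in two short lists, rather than in chained comparisons, makes it easier to compare the code against the table if the thresholds are ever revised.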


6.3 Equipment Information and Inventory

Along with station documentation, each Network should maintain an up-to-date inventory of all

monitoring equipment in use. This inventory should include:

• type of equipment used

• ownership information

• purchase price and date of receipt

• equipment description (name and manufacturer)

• equipment identification number (where applicable: e.g., model and serial number)

• equipment location and history (e.g., date of installation).
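One way a Network might hold these inventory fields is as a structured record. The sketch below is an assumption-laden illustration, not a prescribed NAPS schema: the class name, field names and the `log_event` helper are all hypothetical and simply mirror the bullet list above.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional, Tuple


@dataclass
class EquipmentRecord:
    """One inventory entry; field names are illustrative, not a NAPS standard."""
    equipment_type: str                    # type of equipment used
    owner: str                             # ownership information
    purchase_price: float                  # purchase price
    date_received: date                    # date of receipt
    name: str                              # equipment description: name
    manufacturer: str                      # equipment description: manufacturer
    model: Optional[str] = None            # identification, where applicable
    serial_number: Optional[str] = None    # identification, where applicable
    location: Optional[str] = None         # current equipment location
    history: List[Tuple[date, str]] = field(default_factory=list)

    def log_event(self, when: date, description: str,
                  new_location: Optional[str] = None) -> None:
        """Append a dated history entry and optionally update the location."""
        self.history.append((when, description))
        if new_location is not None:
            self.location = new_location
```

Recording an installation as a history event, rather than overwriting a single date field, keeps the full movement record of an instrument available for later data interpretation.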

6.4 Standard Operating Procedures (SOP) for the NAPS Program

SOP are task-specific or method-specific documents that detail the method for an operation,

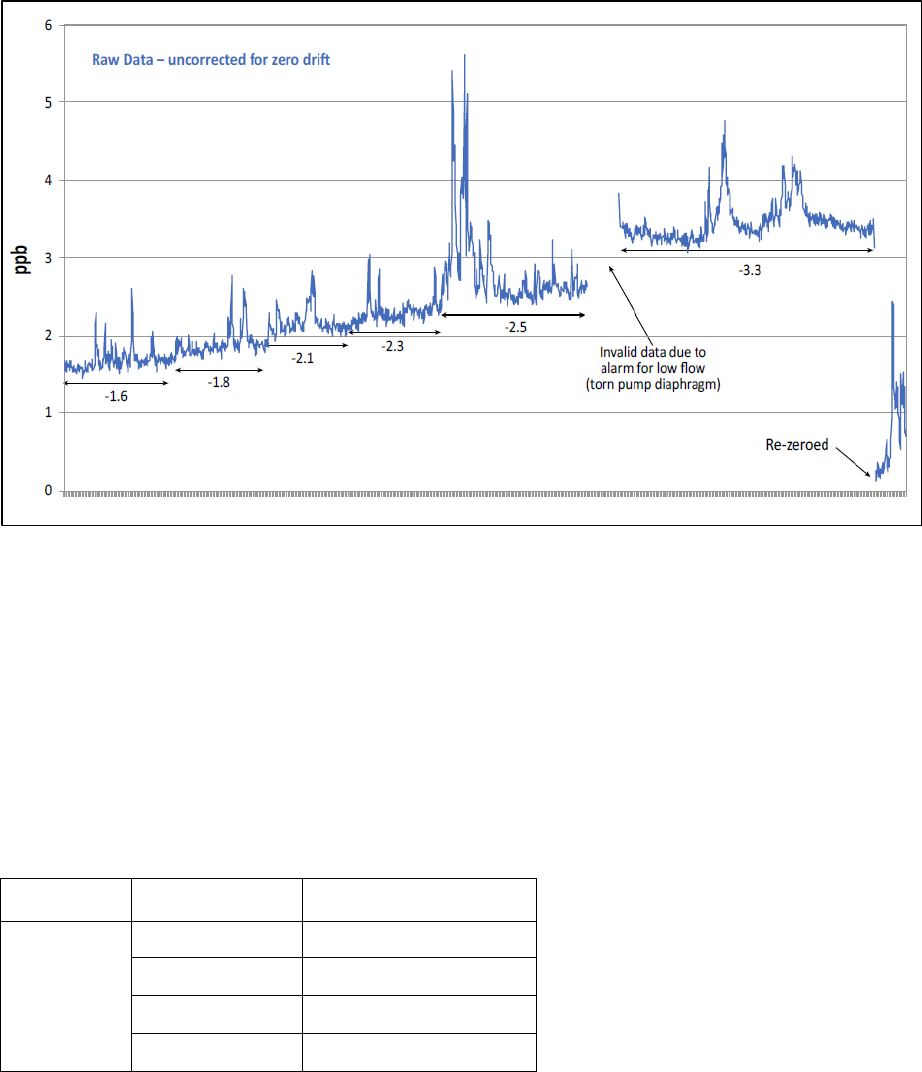

analysis or action with thoroughly prescribed steps. SOP can help ensure consistent performance

with organizational practices. They can also serve as training aids, provide ready reference and

documentation of proper procedures, reduce error occurrences in data, and improve data

comparability, credibility and defensibility. Networks should reference relevant SOP in their

NQAP and also ensure that applicable SOP and all manufacturer operation and maintenance

manuals are accessible for reference on site.

NAPS Operations has developed a number of technical SOP and forms. NAPS SOP related to

continuous analyzers and monitors, procedures for collecting integrated samples, data collection,

management and reporting processes are referenced in Appendix A.

These SOP contain most of the information needed for a given method or procedure, but users

should modify them to accurately reflect the methods and procedures implemented.

7.0 NETWORK DESIGN AND SITE LOCATION

The NAPS Program was established in 1969 to monitor and assess the quality of ambient air in

the populated regions of Canada. The primary purpose of the Program was to support the

development of Canada-wide air quality objectives for criteria air contaminants and subsequently

to track progress towards achieving these objectives. The scope of the Program has evolved to

include monitoring the precursors and components of air pollutants, identifying the sources and

regions that contribute to air pollutant levels, and providing timely air quality information to the

public. However, characterizing air quality for the achievement of Canada-wide ambient standards

and objectives continues to be the primary focus of the NAPS Program.


Figure 7-1 NAPS sites

Human health is the key driver for the establishment of the Canadian Ambient Air Quality

Standards (CAAQS). As such, locating NAPS sites (Figure 7-1) in populated areas is the highest

priority. Canada has a small population living in a large land area, leading to a low population

density compared to other countries. The Canadian population, however, is highly concentrated

geographically (Figure 7-2). In 2016, two out of three people (66%) lived within 100 km of the

southern Canada–United States border, an area that represents about 4% of Canada’s territory

(Statistics Canada 2017b).


Figure 7-2 Population distribution in Canada, 2016

The network design will depend on human health effects as well as the magnitude and distribution of pollutant concentrations within a defined area or region. Monitoring of air pollutants for which there is no safe level for health effects (e.g., NO₂, O₃, PM₂.₅) should focus on sites located within densely populated areas.

All census metropolitan areas (CMA) and census agglomerations (CA) with a population greater

than 100,000 should have at least one monitoring site. There are 41 CMA and CA in Canada with

a population greater than 100,000 (2016 Census; see Statistics Canada 2017a). These account for

73% of the total population. However, regionally important urban areas and communities with air

quality concerns should also be considered as a priority for air quality monitoring sites.
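The coverage guideline above (at least one site in every CMA or CA with a population greater than 100,000) can be checked against a site list with a few lines of code. This is a minimal sketch under stated assumptions: the function name and inputs are hypothetical, and the area names in the usage note are placeholders rather than real census data.

```python
from typing import Dict, Iterable, List

POPULATION_THRESHOLD = 100_000  # CMA/CA population above which a site is expected


def areas_missing_sites(area_populations: Dict[str, int],
                        site_areas: Iterable[str]) -> List[str]:
    """List CMAs/CAs above the threshold that have no monitoring site.

    area_populations maps a CMA/CA name to its census population.
    site_areas gives the CMA/CA name for each existing monitoring site.
    """
    covered = set(site_areas)
    return sorted(
        name
        for name, population in area_populations.items()
        if population > POPULATION_THRESHOLD and name not in covered
    )
```

For example, with placeholder inputs `{"Area A": 250_000, "Area B": 120_000, "Area C": 40_000}` and sites located only in `"Area A"`, the function returns `["Area B"]`. Smaller communities with air quality concerns would still need to be assessed separately, as the text notes.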

Within urban areas, Networks must determine the number of sites to be deployed and their

distribution (for many communities there will only be one site). The typical approach to monitoring

in urban areas involves placing sites at carefully selected representative locations, chosen to meet

the desired monitoring objectives and considering the emission and dispersion patterns of the

pollutants being monitored. The representativeness of a site will depend on the spatial variability

of air pollutant concentrations across a defined area. These variations occur as a result of several

factors, including emission characteristics, atmospheric conditions, topography, urban effects,

chemical transformations, and natural removal processes. Modelling and other assessment


techniques may be used to assess how representative a monitoring site is of a community or

neighbourhood.

Sites for monitoring pollutants associated with local sources (e.g., CO, PAH, SO₂, VOC) should be optimally sited for measurement data to be useful. Data from these sites may also be used to identify sources or regions (as tracers for certain emission types) that contribute to poor air quality and to assess long-term trends in air quality.

Another consideration for network design is the extent of the air pollution coverage. If the air pollutant is mostly of local origin, then sites will be focused in urban areas. If there is a substantial regional contribution to the pollutant concentrations, additional sites may be located outside of urban areas to measure the portion that comes from regional transport or background sources (e.g., O₃, PM₂.₅, VOC).

There has been and continues to be a very large monetary investment in air quality monitoring in

Canada. This investment and the importance of air quality data demand strategic planning and

design to ensure that the network provides adequate coverage to characterize air quality conditions

that address NAPS monitoring objectives. Selecting sites that satisfy multiple objectives will

reduce costs and maximize the efficiency of the network. Wherever feasible, air pollutants that

share similar characteristics (e.g., spatial variability, common sources, health or environmental

impacts) should be monitored at the same site.

Networks should recognize that air monitoring networks are dynamic and should adapt to changes

in air pollution patterns, as well as address new and emerging air quality issues. Each monitoring

network should be evaluated periodically to assess whether its objectives are being met. Networks

may relocate sites to meet changes in requirements, as warranted. However, sites with long trend

records should not be moved unless continued operation is no longer possible. In such a case, every

effort should be made to ensure that the new location measures comparable data. This will allow

for analysis of long-term air quality trends.

7.1 Network Design

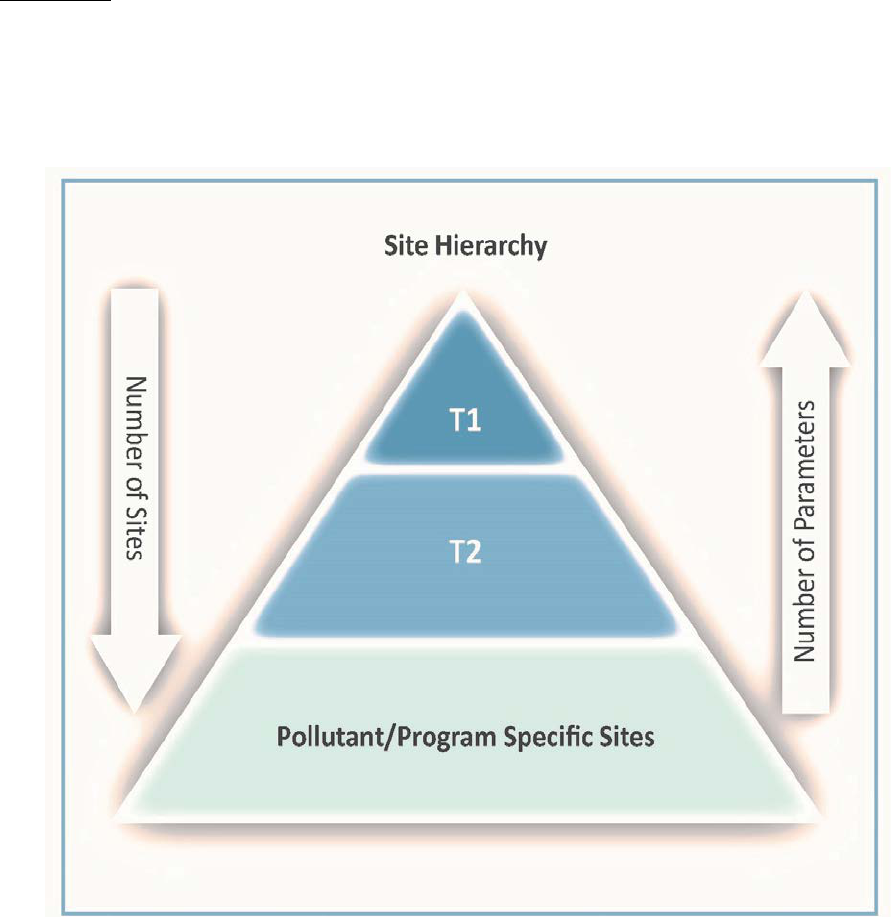

The NAPS Network has, as its foundation, a subset of “core” monitoring sites that measure a

comprehensive set of air pollutant parameters. These sites satisfy many monitoring objectives and

provide the basis for multi-pollutant characterizations across a range of site types. The core sites

operate in addition to the other NAPS sites, which are designed specifically to meet various

program and pollutant-specific requirements (Figure 7-3 and Figure 7-4).

7.1.1 Core Sites

Core sites include a comprehensive set of measurements at a select number of representative

locations across Canada that addresses multiple monitoring objectives.


The integrated NAPS PM

2.5

reference method

(RM) sampling sites form the basis of the core

sites. In addition, continuous PM

2.5

, O

3

and

NO

2

parameters should be included, as a

minimum. Additional parameters (e.g., CO,

PAH, integrated PM

10-2.5,

SO

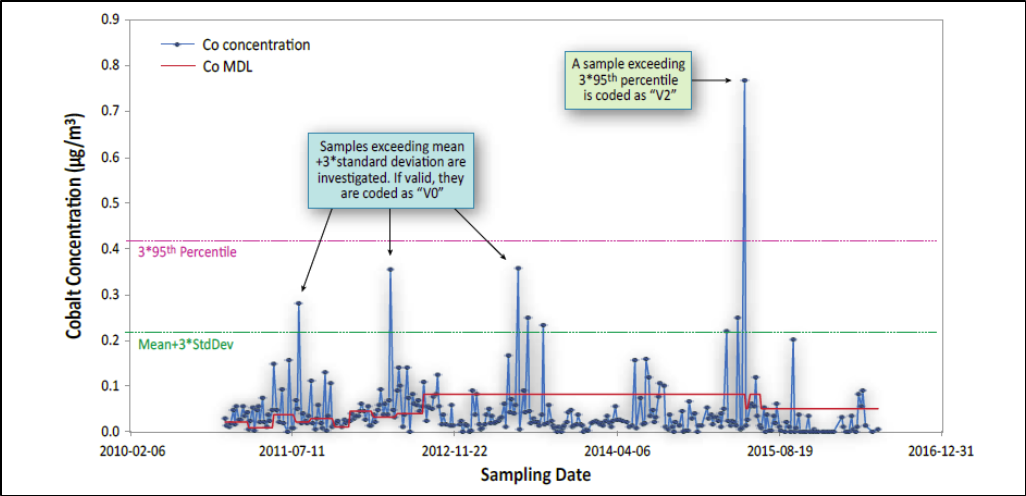

2

, VOC) are

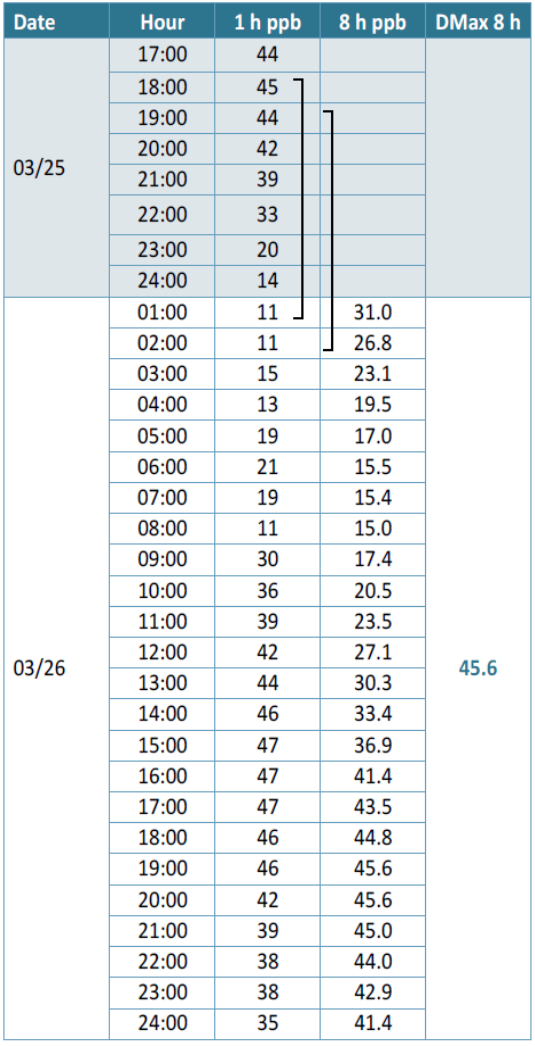

measured at a subset of core sites.

Factors that are to be considered for locating

core sites include:

• Population (including regional

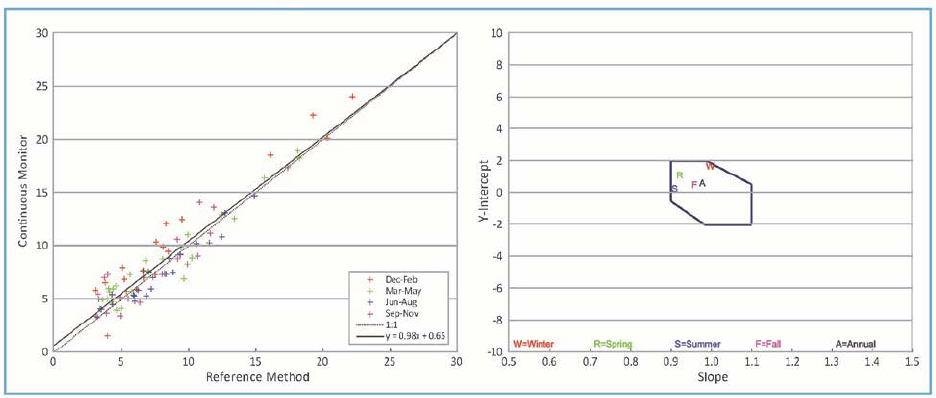

population centres)

• geographical and spatial

representativeness

• areas with known or suspected high

pollutant concentrations

• areas influenced by local emission

sources.

It is not feasible or necessary to measure all