Strategies for a Semantified Uniform Access to

Large and Heterogeneous Data Sources

Dissertation

for the

award of the doctoral degree (Dr. rer. nat.)

of the

Faculty of Mathematics and Natural Sciences

of the

Rheinische Friedrich-Wilhelms-Universität Bonn

by

Mohamed Nadjib Mami

from

Médéa, Algeria

Bonn, 20.07.2020

This research report was accepted as a dissertation by the Faculty of Mathematics and Natural Sciences

of the University of Bonn and is published electronically on the university publication server of the ULB

Bonn at https://nbn-resolving.org/urn:nbn:de:hbz:5-61180.

1st Reviewer: Prof. Dr. Sören Auer

2nd Reviewer: Prof. Dr. Jens Lehmann

Date of the doctoral examination: 28.01.2021

Year of publication: 2021

Acknowledgments

"Who does not thank people does not

thank God."

Prophet Muhammad PBUH

To the two humans who brought me into existence and made life’s most severe sacrifices so

I can stand today in dignity and pride, to my dear mother Yamina and my father Zakariya.

There is no amount of words that can suffice to thank you. I am eternally in your debt. To

my two heroines, my two sisters Sarah and Mounira, I cannot imagine living my life without

you, thank you for the enormous unconditional love that you overwhelmed me with. To my

wife, to my self, Soumeya, to the pure soul that held me straight and high during my tough

times. You were and continue to be a great source of hope and regeneration. To my non-

biological brother and life-mate Oussama Mokeddem, it has been such a formidable journey

together. To my other brother, Mohamed Amine Mouhoub, I’ve lost count of your favors to

me in easing the transition to the European continent. I am so grateful for having met both of you.

To the person who gave me the opportunity that changed the course of my life. The person

who provided me with every possible facility so I can pursue this lifelong dream. From the wisdom

of both your words and your silence, I learned so much; to you my supervisor Prof. Dr. Sören

Auer, a thousand Thank You! To my co-supervisor who supported me from the very first day to

the very last, Dr. Simon Scerri. You are the person who set me on the research journey and

showed me its right path. You will be the person who I will miss the most. I so much appreciate

everything you did for me! To my other co-supervisor, Dr. Damien Graux, my journey flourished

during your presence; a pure coincidence?! No. You are a person who knows how to get the

most and best out of people. You are a planning master. Merci infiniment!

Thank you Prof. Dr. Maria-Esther Vidal, it is no mere compliment to say that the energy

of working with you is incomparable. You have built and continue to build generations of

researchers. The Semantic Web community will always be thankful for having you among its

members. Thank you Prof. Dr. Jens Lehmann, I had the immense pleasure of collaborating with

you and having your name on my papers. Working on your project SANSA marked my journey

and gave it a special flavor. Thank you Dr. Hajira Jabeen, I am so grateful for your advice and

guidance; you are the person with whom I wish I could have worked more. Thanks, Dr. Christoph

Lange and Dr. Steffen Lohmann, you were always there and supportive in difficult situations.

To Lavdim, Fathoni, Kemele, Irlan, and many others, the journey was much nicer with your

companionship. To my respected former teachers at Algerian universities, who equipped me with

the necessary fundamentals: to you goes all the credit. Thank you!

To this great country Germany, "Das Land der Ideen" (the country of ideas). Danke!

Abstract

The remarkable advances achieved in both research and development of Data Management as

well as the prevalence of high-speed Internet and technology in the last few decades have caused

an unprecedented data avalanche. Large volumes of data manifested in a multitude of types and

formats are being generated and becoming the new norm. In this context, it is crucial to both

leverage existing approaches and propose novel ones to overcome this data size and complexity,

and thus facilitate data exploitation. In this thesis, we investigate two major approaches to

addressing this challenge: Physical Data Integration and Logical Data Integration. The specific

problem tackled is to enable querying large and heterogeneous data sources in an ad hoc manner.

In Physical Data Integration, data is physically and wholly transformed into a unique canonical

format, which can then be directly and uniformly queried. In Logical Data Integration,

data remains in its original format and form, and a middleware is placed on top of the data that

maps the various schema elements to a high-level unifying formal model. The latter enables

the querying of the underlying original data in an ad hoc and uniform way, a framework that

we call the Semantic Data Lake (SDL). Both approaches have their advantages and disadvantages.

For example, in the former, a significant effort and cost are devoted to pre-processing and

transforming the data to the unified canonical format. In the latter, the cost is shifted to the

query processing phases, e.g., query analysis, relevant source detection and results reconciliation.

In this thesis we investigate both directions and study their strengths and weaknesses. For

each direction, we propose a set of approaches and demonstrate their feasibility via a proposed

implementation. In both directions, we appeal to Semantic Web technologies, which provide

a set of time-proven techniques and standards that are dedicated to Data Integration. In the

Physical Integration, we suggest an end-to-end blueprint for the semantification of large and

heterogeneous data sources, i.e., physically transforming the data to the Semantic Web data

standard RDF (Resource Description Framework). A unified data representation, storage and

query interface over the data are suggested. In the Logical Integration, we provide a description

of the SDL architecture, which allows querying data sources directly in their original form and

format without requiring prior transformation and centralization. For a number of reasons

that we detail, we put more emphasis on the logical approach. We present the effort behind an

extensible implementation of the SDL, called Squerall, which leverages state-of-the-art Semantic

and Big Data technologies, e.g., RML (RDF Mapping Language) mappings, the FnO (Function

Ontology), and Apache Spark. A series of evaluations is conducted to assess the

implementation along various metrics and input data scales. In particular, we describe an

industrial real-world use case using our SDL implementation. In a preparation phase, we conduct

a survey of Query Translation methods in order to back some of our design choices.

Contents

1 Introduction
1.1 Motivation
1.2 Problem Definition and Challenges
1.3 Research Questions
1.4 Thesis Overview
1.4.1 Contributions
1.4.2 List of Publications
1.5 Thesis Structure

2 Background and Preliminaries
2.1 Semantic Technologies
2.1.1 Semantic Web
2.1.2 Resource Description Framework
2.1.3 Ontology
2.1.4 SPARQL Query Language
2.2 Big Data Management
2.2.1 BDM Definitions
2.2.2 BDM Challenges
2.2.3 BDM Concepts and Principles
2.2.4 BDM Technology Landscape
2.3 Data Integration
2.3.1 Physical Data Integration
2.3.2 Logical Data Integration
2.3.3 Mediator/Wrapper Architecture
2.3.4 Semantic Data Integration
2.3.5 Scalable Semantic Storage and Processing

3 Related Work
3.1 Semantic-based Physical Integration
3.1.1 MapReduce-based RDF Processing
3.1.2 SQL Query Engine for RDF Processing
3.1.3 NoSQL-based RDF Processing
3.1.4 Discussion
3.2 Semantic-based Logical Data Integration
3.2.1 Semantic-based Access
3.2.2 Non-Semantic Access
3.2.3 Discussion

4 Overview on Query Translation Approaches
4.1 Considered Query Languages
4.1.1 Relational Query Language
4.1.2 Graph Query Languages
4.1.3 Hierarchical Query Languages
4.1.4 Document Query Languages
4.2 Query Translation Paths
4.2.1 SQL ↔ XML Languages
4.2.2 SQL ↔ SPARQL
4.2.3 SQL ↔ Document-based
4.2.4 SQL ↔ Graph-based
4.2.5 SPARQL ↔ XML Languages
4.2.6 SPARQL ↔ Graph-based
4.3 Survey Methodology
4.4 Criteria-based Classification
4.5 Discussions
4.6 Summary

5 Physical Big Data Integration
5.1 Semantified Big Data Architecture
5.1.1 Motivating Example
5.1.2 SBDA Requirements
5.1.3 SBDA Blueprint
5.2 SeBiDA: A Proof-of-Concept Implementation
5.2.1 Schema Extractor
5.2.2 Semantic Lifter
5.2.3 Data Loader
5.2.4 Data Server
5.3 Experimental Study
5.3.1 Experimental Setup
5.3.2 Results and Discussions
5.3.3 Centralized vs. Distributed Triple Stores
5.4 Summary

6 Virtual Big Data Integration - Semantic Data Lake
6.1 Semantic Data Lake
6.1.1 Motivating Example
6.1.2 SDL Requirements
6.1.3 SDL Building Blocks
6.1.4 SDL Architecture
6.2 Query Processing in SDL
6.2.1 Formation of and Interaction with ParSets
6.2.2 ParSet Querying
6.3 Summary

7 Semantic Data Lake Implementation: Squerall
7.1 Implementation
7.2 Mapping Declaration
7.2.1 Data Mappings
7.2.2 Transformation Mappings
7.2.3 Data Wrapping and Querying
7.2.4 User Interfaces
7.3 Squerall Extensibility
7.3.1 Supporting More Query Engines
7.3.2 Supporting More Data Sources
7.3.3 Supporting a New Data Source: the Case of RDF Data
7.4 SANSA Stack Integration
7.5 Summary

8 Squerall Evaluation and Use Cases
8.1 Evaluation Over Syntactic Data
8.1.1 Experimental Setup
8.1.2 Results and Discussions
8.2 Evaluation Over Real-World Data
8.2.1 Use Case Description
8.2.2 Squerall Usage
8.2.3 Evaluation
8.3 Summary

9 Conclusion
9.1 Revisiting the Research Questions
9.2 Limitations and Future Work
9.2.1 Semantic-based Physical Integration
9.2.2 Semantic-based Logical Integration
9.3 Closing Remark

Bibliography

List of Figures

List of Tables

CHAPTER 1

Introduction

"You must be the change you wish to see

in the world."

Mahatma Gandhi

The technology wheel has turned remarkably quickly in the last few decades. Advances

in computation, storage and network technologies have led to the extensively digitalized era

we are living in today. At the center of the computation-storage-network triangle lies data.

Data, which is encoded information at its simplest, has become one of the most valuable

human assets, one that governments and industries alike are mobilized to exploit. Mathematician

Clive Humby once said: "data is the new oil". Like oil, the exploitation of data faces

many challenges, of which we emphasize the following categories. First, the large sizes of data

render simple computational tasks prohibitively time- and resource-consuming. Second, modern

high-speed and connected devices, sensors and engines generate data at a very quick pace.

Third, data comes in diverse formats that result from the diversification of data models and the

proliferation of data management and storage systems. These three challenges, denoted Volume,

Velocity and Variety, respectively, form the 3-D Data Management Challenges put forward by

Doug Laney [1], which were later adapted to the 3-V Big Data Challenges. Beyond the 3-V

model, a few other challenges have also been deemed significant and have thus been incorporated,

such as Veracity and Value. The former is raised when data is collected en masse with little to

no attention dedicated to its quality and provenance. The latter is raised when a lot of data is

collected but with no plausible added value to its stakeholders or external users.

Nowadays, every agent, be it human or mechanical, is a contributor to the Big Data phenomenon.

Individuals produce data through the connected handheld devices that they use to

perform many of their daily life activities, for example communicating with friends, surfing

the Internet, partaking in large social media discourses, playing video games, traveling using

GPS-empowered maps, and even counting steps and heartbeats. Industries are contributors

through, e.g., analyzing and monitoring the functionality of their manufactured products; health

and ecological institutions through caring for environmental and general human health;

governments through providing for inhabitants' safety and well-being; etc.

Traditionally, data was stored and managed under the Relational Model [2]. Relational Data

Management is a well-established paradigm under which a large body of research, a wide array

of market offerings and broad standardization efforts have emerged. Relational Data

Management offers a great deal of system integrity, data consistency, and access uniformity, all

the while reducing the cost of system maintainability and providing advanced access control

mechanisms. However, the advent of extremely large-scale Big Data applications revealed the

weakness of Relational Data Management at dynamically and horizontally scaling the storage

and querying of massive amounts of data. To overcome these limitations and extend application

possibilities, a new breed of data management approaches has been suggested, in particular

under the so-called NoSQL (Not only SQL) family. With the opportunities it brings, this

paradigm shift contributes to broadening the Variety challenge.

Therefore, out of the five Big Data challenges, our main focus in this thesis is Variety in

the presence of variably large volumes of data. Unlike the Volume and Velocity problems, which can

be addressed in a once-and-for-all fashion, the Variety problem space is dynamic, owing to the

dynamicity of the data management and storage technologies it has to deal with. For example, data

can be stored under a relational, hierarchical, or graph model; it can also be stored in plain

text, in more structured file formats, or under the various modern NoSQL models. Such data

management and storage paradigms are in continuous development, especially under the NoSQL

umbrella that does not cease to attract significant attention to this very day.

1.1 Motivation

Amid a dynamic, complex and large data-driven environment, enabling data stakeholders,

analysts and users to maintain uniform access to the data is a major challenge. A typical

starting point for the exploration and analysis of a large repository of heterogeneous data

is the Data Lake. The Data Lake is named by analogy with a natural lake. A lake is a large

pool of water in its purest form, which different people frequent for various purposes.

Professionals come to fish or mine, scuba-divers to explore, governments to extract water, etc.

By analogy, the digital Data Lake is a pool of data in its original form, accessible for

various exploration and analysis purposes. This includes Querying, Search, Machine Learning,

etc. Data Lake management is an integral part of many Cloud providers such as Amazon AWS,

Microsoft Azure, and Google Cloud.

In the Querying use case, data in the Data Lake can be accessed directly, thus maintaining data

originality and freshness. Otherwise, data can be taken out of the Data Lake and transformed

into another unique format, which then can be queried in a more uniform and straightforward

way. Both approaches, direct and transformed access, have their merits and reasons to exist.

The former (direct) is needed when data freshness is a non-negotiable requirement, or if, for

privacy or technical reasons, data cannot be transformed and moved to another environment.

The latter (transformed) is needed if the previous requirements are not posed, if the query

latency is the highest priority, or if there is a specifically desired quality in a certain data model

or management system, in which case data is exhaustively transformed to that data model and

management system.

In this thesis, we focus on enabling a uniform interface to access heterogeneous data sources.

To this end, data has to be homogenized either physically or on demand. Physical homogenization,

commonly called Physical Integration, transforms data into a unique format,

which can then be naturally queried in a uniform manner. On-demand homogenization,

commonly called Logical (or Virtual) Integration, retrieves pieces of data from various sources and

combines them in response to a query. Exploring the two paths, their approaches and methods,

and the contexts in which one can be superior to the other is what motivates the core of this thesis.

Figure 1.1 illustrates the differences between Virtual and Physical Integration. The latter

accesses all the data after it has been thoroughly transformed, while the former directly accesses the

original data sources. Query execution details are hidden from the figure; they are to be found

within the two big boxes of the figure, gray (left) and colored (right).

[Figure 1.1, two panels. Logical Integration (left): QUERY, 1. Access Original Data, 2. Output, Answer. Physical Integration (right): 1. Transform All, QUERY, 2. Access Transformed Data, 3. Output, Answer.]

Figure 1.1: General overview. For Physical Integration (right side), all data is transformed then queried.

For Virtual Integration (left side), data is not transformed; the query accesses (only) the relevant original

data sources.

1.2 Problem Definition and Challenges

As stated earlier in this chapter, the Variety challenge is still less adequately addressed

in comparison to the other main Big Data dimensions, Volume and Velocity. Further, observing

the efforts that try to address the Variety challenge, cross-accessing large heterogeneous data

sources is one of the least tackled sub-challenges (see Related Work, Chapter 3). On the other

hand, traditional Data Integration systems fall short in embracing the new generation of data

models and management systems. The latter are based on substantially different design principles

and assumptions. For example, traditional Data Integration assumes a certain level of model

uniformity that new Data Management systems do not comply with. Modern distributed database

systems (e.g., NoSQL stores) opened the floor to more flexible models such as the document

or wide-column models. Additionally, traditional Data Integration also assumes the centralized

nature of all the accessed data, while modern Data Management systems are decentralized and

distributed. For example, distributed file systems (e.g., HDFS [5]) invalidate the traditional

assumption that data has to fit in memory or storage of a single machine in order for the various

retrieval mechanisms (e.g., indexes) to be operational and effective. Such assumptions hinder

the capability of traditional Data Integration systems to cater for the large scale and various

types of the new Data Management paradigms.

Therefore, the general problem that this thesis addresses is providing uniform access to

heterogeneous data, with a focus on the large-scale distributed space of data sources. The

specific challenges that the thesis targets are the following:

Challenge 1: Uniform querying of heterogeneous data sources

There are numerous approaches to access and interact with a data source, which we can

categorize into APIs and query languages. APIs, for Application Programming Interfaces, expose

programmatic methods allowing users to write, manipulate and read data using programming

languages. A popular example is JDBC (Java Database Connectivity), which offers a standardized

way to access databases. Programmatic methods can also be triggered remotely via a Web

protocol (HTTP), turning an API into a Web API. There are two main approaches by which

Web APIs receive commands from users. The first is by providing the method name to execute

remotely, an approach called RPC (Remote Procedure Call). The second is by issuing more

structured requests ultimately following a specific standard; an example of a prominent approach

is REST (Representational State Transfer). As these methods are effectively executed by a Data

Management system, they can only access its underlying data.

The second approach of interacting with a data source is via query languages. The range of

options here is wider than APIs. Examples include SQL for accessing relational databases (e.g.,

SQL Server) or tabular data in a more general sense (e.g., Parquet files), SQL-derived languages

(e.g., Cassandra Query Language, CQL), XPath/XQuery for XML data, JSON-based operations

for Document stores, Gremlin and Cypher for graph data, etc. An access method that falls in

between the two categories is GraphQL, which allows running queries via a Web API. However,

similarly to APIs, queries in these languages are only posed against a specific data source to

interact with its underlying data. On the other hand, a few modern frameworks (e.g., Presto,

Drill) have used SQL syntax to allow the explicit reference to heterogeneous data sources in the

course of a single query, and then to retrieve the data from heterogeneous sources accordingly.

However, the challenge is providing a method that accesses several sources at the same time

in an ad hoc manner without having to explicitly reference the data sources, as if the user were

querying a single database.

Challenge 2: Querying original data without prior data transformation

As mentioned earlier in this chapter, in order to realize a uniform access to heterogeneous data

sources, data needs to be either physically or virtually integrated. Unlike Physical Integration,

which unifies the data model in a pre-processing ingestion phase, Virtual Integration requires

posing queries directly against the original data without prior transformation. The challenge

here is incorporating an intermediate phase that plays three roles: (1) interpreter, which analyzes

and translates the input query into requests over data sources, (2) explorer, which leverages

metadata information to find the sub-set of data sources containing the needed sub-results, and

(3) combiner, which collects and reconciles the sub-results forming the final query answer. As

we are dealing with a dispersed, distributed data environment, an orthogonal challenge to the

three-role middleware is to ensure the accuracy of the collected results. If it issues an incorrect

request as interpreter, it will not detect the correct sources (false positives) as explorer, and,

thus, will not return the correct sub-results as combiner. Similarly, if it detects incorrect links

between query parts as interpreter, it will perform a join yielding no results as combiner.
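
The three roles can be illustrated with a minimal sketch. It is not the architecture proposed in this thesis: the in-memory sources, the catalog and all function names below are hypothetical and serve only to show how interpreter, explorer and combiner hand work to one another.

```python
# Hypothetical in-memory "data sources"; a real system would wrap databases and files.
SOURCES = {
    "customers_csv": [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Alan"}],
    "orders_docs": [{"customer": 1, "item": "book"}, {"customer": 1, "item": "pen"}],
}

# Hypothetical metadata: which source holds the data of which abstract entity.
CATALOG = {"Customer": "customers_csv", "Order": "orders_docs"}


def interpret(query):
    """Interpreter: split the query into requested entities and a join condition."""
    return query["entities"], query["join"]


def explore(entities):
    """Explorer: use the metadata to find the sources holding the needed sub-results."""
    missing = [entity for entity in entities if entity not in CATALOG]
    if missing:
        raise LookupError(f"no source known for: {missing}")
    return {entity: SOURCES[CATALOG[entity]] for entity in entities}


def combine(sub_results, join):
    """Combiner: reconcile the sub-results with a simple nested-loop join."""
    (left_entity, left_key), (right_entity, right_key) = join
    return [
        {**left_row, **right_row}
        for left_row in sub_results[left_entity]
        for right_row in sub_results[right_entity]
        if left_row[left_key] == right_row[right_key]
    ]


def answer(query):
    entities, join = interpret(query)
    return combine(explore(entities), join)


query = {
    "entities": ["Customer", "Order"],
    "join": (("Customer", "id"), ("Order", "customer")),
}
result = answer(query)
```

In this sketch, a join condition that links the wrong attributes, e.g., `("Customer", "name")` with `("Order", "customer")`, makes the combiner return an empty answer, which mirrors the accuracy concern described above.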

Challenge 3: Coping with the lack of schema and semantics in data lakes

By definition, a Data Lake is a schema-less repository of original data sources. In the absence of

a general schema or any mechanism that allows a deterministic transition from a query to

a data source, there is no way to inter-operate, link and query data over heterogeneous data

sources in a uniform manner. For example, a database or a CSV file may use a column name that carries

no meaning, e.g., col0 storing first names of customers. Also, different data sources can use

different naming formats and conventions to denote the same schema concept. For example, a

document database may use a field called fname to store customers' first names. The challenge is

then to incorporate a mechanism that makes it possible to (1) abstract away the semantic and syntactic

differences found across the schemata of heterogeneous data sources, and (2) maintain a set of

association links that allow detecting a data source given a query or a part of a query. The

former mechanism relies on a type of domain definition containing abstract terms, which are to

be used by the query. The latter mechanism maintains the links between these schema abstract

terms and the actual attributes in the data sources. These mechanisms can be used to fully

convert the data in a Physical Integration or query the original data on-the-fly in a Virtual

Integration.
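
The two mechanisms can be sketched as a small lookup structure. The attribute names col0 and fname come from the example above; the abstract terms (firstName, city), the source names and the attribute town are hypothetical and chosen only for illustration.

```python
# Hypothetical abstract terms (the domain definition) used by queries.
# Each term is linked to the concrete attribute that stores it in each source.
MAPPINGS = {
    "firstName": {"customers_csv": "col0", "customers_docs": "fname"},
    "city": {"customers_docs": "town"},
}


def sources_for(terms):
    """Return the sources that provide every requested abstract term."""
    candidate_sets = [set(MAPPINGS.get(term, {})) for term in terms]
    return set.intersection(*candidate_sets) if candidate_sets else set()


def to_source_attributes(terms, source):
    """Rewrite abstract terms into the attribute names used by one source."""
    return [MAPPINGS[term][source] for term in terms]
```

A query that mentions only firstName can thus be routed to both sources and rewritten to col0 or fname respectively, whereas a query that also mentions city is routed to the document source alone.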

Challenge 4: Scalable processing of expensive query operations

As the scope of our work is mostly dealing with distributed heterogeneous data sources, the

challenge is to provide a scalable query execution environment that can accommodate the

expensive operations involving those data sources. The environment is typically based on the

main memory of multiple compute nodes of a cluster. A query can be unselective, i.e., it requests

a large set of results without filters, or with filters that do not significantly reduce the size

of the results. For example, an online retailer is interested in knowing the age and gender of

all customers who have purchased at least three items during November’s promotional week

of the last three years. Although we filtered on the year, month and number of purchased

items, the result set is still expected to be large because promotional weeks are very attractive

shopping periods. A query can also join data coming from multiple data sources, which is typical

in heterogeneous environments such as the Data Lake. For example, the retailer asks to get

the logistics company that shipped the orders of all the customers who purchased fewer items

this year in comparison to the previous year and who left negative ratings about the shipping

experience. This query aims at detecting which logistics companies have caused an inconvenient

shipping experience, making certain customers rely less on the online retailer and, thus, shop less

there. To do so, the query has to join Logistics, Customers, Orders, and Ratings,

which is expected to generate large intermediate results that can only be held and processed in a

distributed manner.

Challenge 5: Enabling joinability of heterogeneous data sources

When joining various data sources, it is not guaranteed that the identifiers on which join queries

are posed match, and thus that the join succeeds and the query returns (the correct) results.

The identifiers can be of a different shape or lie in a different value range. For example, given

two data sources to join, values of the left join operand are in lowercase letters and values of the

right are in uppercase letters; or values of the left side are prefixed and contain an erroneous extra

character, while values of the right side are not prefixed and contain no erroneous characters.

The challenge is then to correct these syntactic value misrepresentations on the fly during query

execution, and not in a pre-processing phase generating a new version of the data, which then is

queried.
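The idea behind Challenge 5 can be illustrated with a minimal Python sketch. It is not the mechanism implemented in this thesis: the helper names (`normalize_key`, `join_on_the_fly`), the prefix `cust-`, the stray character `#` and the sample records are all assumptions made for illustration. The sketch normalizes the join keys of both sides while the join is being computed, so no corrected copy of the data is written beforehand.

```python
def normalize_key(value, prefix="", drop_chars=""):
    """Bring a join key to a canonical form (hypothetical helper)."""
    key = value.strip().lower()              # remove case differences
    if prefix and key.startswith(prefix.lower()):
        key = key[len(prefix):]              # drop a source-specific prefix
    for ch in drop_chars:                    # drop known erroneous characters
        key = key.replace(ch, "")
    return key


def join_on_the_fly(left, right, left_key, right_key, left_fix, right_fix):
    """Hash join that normalizes the keys of both sides while scanning."""
    index = {}
    for row in right:
        index.setdefault(right_fix(row[right_key]), []).append(row)
    return [
        {**l_row, **r_row}
        for l_row in left
        for r_row in index.get(left_fix(l_row[left_key]), [])
    ]


# Invented sample data: the left keys are prefixed and contain a stray '#',
# the right keys are upper case and unprefixed.
customers = [{"cid": "cust-ab#12", "age": 34}, {"cid": "cust-cd#34", "age": 51}]
orders = [{"customer": "AB12", "items": 3}, {"customer": "ZZ99", "items": 1}]

joined = join_on_the_fly(
    customers, orders, "cid", "customer",
    left_fix=lambda v: normalize_key(v, prefix="cust-", drop_chars="#"),
    right_fix=normalize_key,
)
print(joined)
```

Only the first customer finds a matching order here; the original records in both sources stay untouched, which is the point of correcting values during query execution rather than in a pre-processing phase.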

1.3 Research Questions

To solve the above challenges we appeal in this thesis to Semantic technologies, which provide

a set of primitives and strategies dedicated to facilitating Data Integration. Such primitives

include ontologies, a mapping language and a query language, with strategies for how these can

collectively be used to uniformly integrate and access disparate data. These primitives and

strategies make it possible to solve both Physical and Virtual Data Integration problems. In the Physical

Integration, data is fully converted under the Semantic Web data model, namely RDF [6]. In

the Virtual Integration, data is not physically converted but integrated on-demand and accessed

as-is. In both cases, the Semantic Web query language, SPARQL [7], is used to access the data.

We intend to start our study with a fully Physical Integration where data is exhaustively

transformed into RDF, then we perform the necessary steps to bypass the transformation and

directly access the original data. The advantage of this approach is twofold. First, it will reveal

the adaptations that are necessary to transition from a physical off-line to a virtual on-line

on-demand data access. Second, it will unveil the various trade-offs to make in each direction in

order to reap the benefit of each. Finally, and for the Virtual Integration in particular, data

remains in its source, where it is only effectively accessible using its native query language. Therefore, it is

necessary to either confirm the suitability of SPARQL as a unified access interface or determine whether a more efficient

language exists to which SPARQL should be translated. Having clarified the context, our thesis

work is based on the following research questions:

RQ1.

What are the criteria that lead to deciding which query language can be most

suitable as the basis for Data Integration?

As Data Integration entails a model conversion, either physical or virtual, it is important to

check the respective query language’s suitability to act as a universal access interface. Since we

build on the Semantic Web data model RDF (introduced later in chapter 2) at the conceptual

level, we default to using its query language SPARQL (also introduced in chapter 2) as the

unified query interface. However, at the physical level, a different data model may be more

suitable (e.g., in terms of space efficiency, query performance, technology-readiness, etc.), which requires the

translation of the SPARQL query to the data model’s query language. An example of this can

be adopting a tabular or JSON data model, in which case the SPARQL query needs to be translated

to SQL or a JSON-based query formalism, respectively. On the Virtual Data Integration side,

we may resort to another language if it has conversions to more query languages and thus enables

access to more data sources. Therefore, we start our thesis by reviewing the literature of query

translation approaches that exist between various query languages, including SPARQL.
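To make the notion of translating a SPARQL query to SQL more tangible, the following Python sketch translates a very restricted query shape: a set of triple patterns that share one subject (a "star") and target a single table. It is an illustration under assumptions, not one of the surveyed translation methods: the function `star_bgp_to_sql`, the table `Customer`, its columns and its rows are invented, and every predicate is assumed to map to a column of the same name. SQLite from the Python standard library is used only to show that the generated SQL runs.

```python
import sqlite3


def star_bgp_to_sql(table, patterns, projection):
    """Translate triple patterns sharing one subject into a single-table SELECT.

    patterns: list of (predicate, object). An object starting with '?' is a
    variable; any other object is a constant and becomes an equality filter.
    Identifiers are assumed to be trusted (no sanitization in this sketch).
    """
    var_to_column = {}
    conditions, params = [], []
    for predicate, obj in patterns:
        if isinstance(obj, str) and obj.startswith("?"):
            var_to_column[obj] = predicate
        else:
            conditions.append(f'"{predicate}" = ?')
            params.append(obj)
    columns = ", ".join(
        f'"{var_to_column[var]}" AS "{var[1:]}"' for var in projection
    )
    sql = f'SELECT {columns} FROM "{table}"'
    if conditions:
        sql += " WHERE " + " AND ".join(conditions)
    return sql, params


# Invented table and rows, used only to run the generated query.
conn = sqlite3.connect(":memory:")
conn.execute('CREATE TABLE "Customer" (id TEXT, gender TEXT, age INTEGER)')
conn.executemany(
    'INSERT INTO "Customer" VALUES (?, ?, ?)',
    [("c1", "female", 34), ("c2", "male", 51), ("c3", "female", 27)],
)

# Corresponds to: SELECT ?age WHERE { ?c ex:gender "female" . ?c ex:age ?age }
sql, params = star_bgp_to_sql(
    "Customer", [("gender", "female"), ("age", "?age")], ["?age"]
)
rows = conn.execute(sql, params).fetchall()
print(sql, rows)
```

Real translation methods additionally have to handle joins between stars, optional patterns, filters and the mapping between ontology terms and physical attributes, which is where the criteria studied under RQ1 come into play.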

RQ2.

Do Semantic Technologies provide ground and strategies for solving Physical

Integration in the context of Big Data Management, i.e., techniques for the representation,

storage and uniform querying of large and heterogeneous data sources?

To answer this, we investigate Semantic-based Data Integration techniques for ingesting and

querying heterogeneous data sources. In particular, we study the suitability of its data model in

capturing both the syntax and semantics found across the ingested data. We examine Semantic

techniques for the mapping between the data schema and the general schema that will guide

the Data Integration. One challenge in this regard is the heterogeneity of data models, e.g., tables

and attributes in relational and tabular data, documents and fields in document data, types and

properties in graph data, etc. We aim at forming a general reusable blueprint that is based

on Semantic Technologies and state-of-the-art Big Data Management technologies to enable

the scalable ingestion and processing of large volumes of data. Since data will be exhaustively

translated to the Semantic data model, we will dedicate special attention to optimizing the size

of the resulting ingested data, e.g., by using partitioning and clustering strategies.

RQ3.

Can Semantic Technologies be used to incorporate an intermediate middleware

that allows uniform, on-demand access to original large and heterogeneous data sources?

To address this question, we analyze the possibility of applying existing Semantic-based Data

Integration techniques to provide a virtual, uniform and ad hoc access to original heterogeneous

data. By original, we mean data as and where it was initially collected without requiring

prior physical model transformation. By uniform, we mean using only one method to access

all the heterogeneous data sources, even if the access method is based on a formalism that is

different from that of one or more of the data sources. By ad hoc, we mean that from the user’s

perspective, the results should be derived directly starting from the access request and not by

providing an extraction procedure. A uniform ad hoc access can be a query in a specific query

language, e.g., SQL, SPARQL, XPath, etc. The query should also be decoupled from the data

source specification, meaning that it should not explicitly state the data source(s) to access.

Given a query, we are interested in analyzing the different mechanisms and steps that lead to

forming the final results. This includes loading the relevant parts of the data into dedicated

optimized data structures where they are combined, sorted or grouped distributedly in parallel.
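The requirement that a query does not name the data sources it accesses implies that the relevant sources must be derived from the query itself. The Python sketch below shows one simple way to do this under stated assumptions; it is not the detection procedure of the thesis. The `MAPPINGS` dictionary, the source names and the `ex:` properties are invented: each source entity declares which ontology properties its attributes are mapped to, and a source is considered relevant if it covers all predicates used around one query subject.

```python
# Hypothetical mappings: ontology property -> attribute name, per source entity.
MAPPINGS = {
    "cassandra.customers": {"ex:age": "age", "ex:gender": "sex"},
    "mongodb.orders": {"ex:orderedBy": "customer_id", "ex:itemCount": "n_items"},
    "parquet.ratings": {"ex:ratedBy": "cust", "ex:score": "stars"},
}


def relevant_sources(query_predicates, mappings):
    """Return the sources whose mapped properties cover all given predicates."""
    wanted = set(query_predicates)
    return sorted(
        source for source, properties in mappings.items()
        if wanted <= set(properties)
    )


# Predicates attached to one subject variable of a query (invented example).
print(relevant_sources(["ex:age", "ex:gender"], MAPPINGS))
print(relevant_sources(["ex:score"], MAPPINGS))
```

The user only states ontology terms; which store is contacted follows from the mappings, so adding or moving a data source does not require rewriting the query.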

RQ4.

Can Virtual Semantic Data Integration principles and their implementation be

applied to both synthetic and real-world use cases?

To answer this, we evaluate the implementation of the previously presented principles and

strategies for Virtual Data Integration. We consider several metrics, namely query execution

time, results accuracy, and resource consumption. We use both an existing benchmark with

auto-generated data and queries, as well as a real-world use case and data. Such evaluations

allow us to learn lessons that help improve the various implementations, e.g., performance

pitfalls, recurrent SPARQL operations and fragments to enrich the supported fragment, new

demanded data sources, etc.

1.4 Thesis Overview

In order to prepare the reader for the rest of this document, we present here an overview of the

main contributions brought by the thesis, with references to the addressed research questions.

We conclude with a list of publications and a glance at the structure of the thesis.

1.4.1 Contributions

In a nutshell, this thesis looks at the techniques and strategies that enable the ad hoc and uniform

querying of large and heterogeneous data sources. Consequently, the thesis suggests multiple

methodologies and implementations that demonstrate its findings. In more detail, the

contributions are as follows:

1. Contribution 1:

A survey of Query Translation methods. Querying heterogeneous data in

a uniform manner requires a form of model adaptation, either permanently in the Physical

Integration or temporarily in the Virtual Integration of diverse data. For this reason, we

conduct a survey on Query Translation methods that exist in the literature and as

published tools. We propose eight criteria according to which we classify translation

methods from more than forty articles and published tools. We consider seven popular

query languages (SQL, XPath/XQuery, Document-based, SPARQL, Gremlin, and Cypher)

where popularity is judged based on a set of defined selection criteria. In order to facilitate

the reading of our findings vis-à-vis the various survey criteria, we provide a set of graphical

representations. For example, a historical timeline of the methods’ emergence years, or

star ratings to show the translation method’s degree of maturity. The survey allows us to

discover which translation paths are missing in the literature or that are not adequately

addressed. It also allows us to identify gaps and learn lessons to support further research

in the area. This contribution aims to answer RQ1.

2. Contribution 2:

A unified semantic-based architecture, data model and storage that are

adapted and optimized for the integration of both semantic and non-semantic data. We

present a unified architecture that enables the ingestion and querying of both semantic

and non-semantic data. The architecture presents a Semantic-based Physical Integration,

where all input data is physically converted into the RDF data model. We suggest a set of

requirements that the architecture needs to fulfill, such as the preservation of semantics

and efficient query processing of large-scale data. Moreover, we suggest a scalable tabular

data model and storage for the ingested data, which allows for efficient query processing.

Under the assumption that the ingestion of RDF data may not be as straightforward as

other flat models like relational or key-value, we suggest a partitioning scheme whereby

an RDF instance is saved into a table representing its class, with the ability to capture

also the other classes within the same class table if the RDF instance is multi-typed. We

physically store the loaded data into a state-of-the-art storage format that is suitable for

our tabular partitioning scheme, and that is queried in an efficient manner using

large-scale query engines. We conduct an evaluation study to examine the performance of our

implementation of the architecture and approaches. We also compare our implementation

against a state-of-the-art triple store to showcase the implementation’s ability to deal with

large-scale RDF data. This contribution aims to answer RQ2.

3. Contribution 3:

A virtual ontology-based data access to heterogeneous and large data

sources. We suggest an architecture for the Virtual Integration of heterogeneous and

large data sources, which we call Semantic Data Lake, SDL. It allows querying original

data sources without having to transform or materialize the data in a pre-processing

phase. In particular, it allows querying the data in a distributed manner, including

join and aggregation operations. We formalize the concepts that are underlying the

SDL. The suggested Semantic Data Lake builds on the principles of Ontology-Based Data

Access, OBDA [8], hence a mapping language and the SPARQL query language are cornerstones in the

architecture. Furthermore, we allow users to declaratively alter the data at query time

in order to enable two sources to join. This is done on two levels, the mappings and

SPARQL query. Finally, we suggest a set of requirements that SDL implementations

have to comply with. We implement the SDL architecture and its underlying mechanisms

using state-of-the-art Big Data technologies, Apache Spark and Presto. It supports several

popular data sources, including Cassandra, MongoDB, Parquet, Elasticsearch, CSV and

JDBC (experimented with MySQL, but many other data sources can be connected to via

JDBC in the same way). This contribution aims to answer RQ3.

4. Contribution 4:

Evaluating the implementation of the Semantic Data Lake through a

synthetic and a real-world industrial use case. We explore the performance of our

implementation in two use cases, one synthetic and one based on a real-world application from

the Industry 4.0 domain. We are interested in experimenting with queries that involve a

different number of data sources to assess (1) the ability to perform joins and (2) the effect

of increasing the number of joins on the overall query execution time. We break down

the query execution time into its constituent sub-phases: query analysis, relevant data

detection, and query execution time by the query processing engine (Apache Spark and

Presto). We also experiment with increasing sizes of the data to evaluate the

implementation’s scalability. Besides query-time performance, we measure results accuracy and we

collect resource consumption information. For the former, we ensure 100% accuracy of the

returned results; for the latter, we monitor data read/write, data transfer and memory

usage. This contribution aims to answer RQ4.
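The class-based partitioning scheme described under Contribution 2 above can be sketched in a few lines of Python. This is a simplified illustration, not the thesis implementation: the function `partition_by_class`, the column name `other_types`, the rule for choosing the table of a multi-typed instance (alphabetical order) and the class `ex:ElectricVehicle` are assumptions. Multi-valued properties and untyped instances are ignored for brevity.

```python
from collections import defaultdict

RDF_TYPE = "rdf:type"


def partition_by_class(triples):
    """Store each typed RDF instance as one row in the table of one of its classes."""
    properties = defaultdict(dict)   # subject -> {predicate: object}
    types = defaultdict(list)        # subject -> [class, ...]
    for s, p, o in triples:
        if p == RDF_TYPE:
            types[s].append(o)
        else:
            properties[s][p] = o     # simplification: last value wins

    tables = defaultdict(list)       # class -> list of rows
    for subject, classes in types.items():
        # Assumption: the home table is the alphabetically first class;
        # the remaining classes are kept in an extra column of the same row.
        home, *others = sorted(classes)
        row = {"id": subject, **properties.get(subject, {})}
        if others:
            row["other_types"] = others
        tables[home].append(row)
    return dict(tables)


triples = [
    ("ex:I8", RDF_TYPE, "ex:Car"),
    ("ex:I8", RDF_TYPE, "ex:ElectricVehicle"),   # hypothetical second type
    ("ex:I8", "ex:producer", "ex:BMW"),
    ("ex:I8", "ex:topSpeed", 250),
    ("ex:Germany", RDF_TYPE, "ex:Country"),
]
tables = partition_by_class(triples)
print(tables)
```

Each class table can then be written to a columnar file format and queried with SQL-like engines, which is the motivation for choosing a tabular layout over a single triple table.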

1.4.2 List of Publications

The content of this thesis is mostly based on scientific publications presented at international

conferences and published within their respective proceedings. The following is an exhaustive

list of these publications along with other publications that are not part of the thesis.

A comment is attached to the publications to which I made a partial contribution, and to one

publication (number 2) that involved another main author. For the other publications, I

made the major contribution, with the co-authors contributing mostly by reviewing the content

and providing supervision advice.

• Conference Papers:

3. Mohamed Nadjib Mami, Damien Graux, Simon Scerri, Hajira Jabeen, Sören Auer, Jens Lehmann. Uniform Access to Multiform Data Lakes Using Semantic Technologies. In Proceedings of the 21st International Conference on Information Integration and Web-based Applications & Services (iiWAS), 313-322, 2019. I conducted most of the study; co-author Damien Graux contributed to the performance evaluation (Obtaining and representing Resource Consumption figures, Chapter 8).

4. Mohamed Nadjib Mami, Damien Graux, Simon Scerri, Hajira Jabeen, Sören Auer, Jens Lehmann. Squerall: Virtual Ontology-Based Access to Heterogeneous and Large Data Sources. In Proceedings of the 18th International Semantic Web Conference (ISWC), 229-245, 2019.

5. Gezim Sejdiu, Ivan Ermilov, Jens Lehmann, Mohamed Nadjib Mami. Distlodstats: Distributed computation of RDF dataset statistics. In Proceedings of the 17th International Semantic Web Conference (ISWC), 206-222, 2018. This is a joint work with Gezim Sejdiu. I contributed to formulating and presenting the problem and solution, and in evaluating the complexity of the implemented statistical criteria.

6. Kemele M. Endris, Mikhail Galkin, Ioanna Lytra, Mohamed Nadjib Mami, Maria-Esther Vidal, Sören Auer. MULDER: querying the linked data web by bridging RDF molecule templates. In Proceedings of the 18th International Conference on Database and Expert Systems Applications (DEXA), 3-18, 2017. This is a joint work with Kemele M. Endris and Mikhail Galkin. I contributed to the experimentation, particularly preparing graph partitioning.

7. Farah Karim, Mohamed Nadjib Mami, Maria-Esther Vidal, Sören Auer. Large-scale storage and query processing for semantic sensor data. In Proceedings of the 7th International Conference on Web Intelligence, Mining and Semantics, 8, 2017. This is a joint work with Farah Karim. I contributed to describing, implementing and evaluating the tabular representation of RDF sensor data, including the translation of queries from SPARQL to SQL.

8. Sören Auer, Simon Scerri, Aad Versteden, Erika Pauwels, Mohamed Nadjib Mami, Angelos Charalambidis, et al. The BigDataEurope platform–supporting the variety dimension of big data. In Proceedings of the 17th International Conference on Web Engineering (ICWE), 41-59, 2017. This is a project outcome paper authored by its technical consortium. I was responsible for describing the foundations of the Semantic Data Lake concept, including its general architecture. I also contributed to building the platform’s high-level architecture.

9. Mohamed Nadjib Mami, Simon Scerri, Sören Auer, Maria-Esther Vidal. Towards Semantification of Big Data Technology. International Conference on Big Data Analytics and Knowledge Discovery (DaWaK), 376-390, 2016.

• Journal Articles:

1. Kemele M. Endris, Mikhail Galkin, Ioanna Lytra, Mohamed Nadjib Mami, Maria-Esther Vidal, Sören Auer. Querying interlinked data by bridging RDF molecule templates. In Proceedings of the 19th Transactions on Large-Scale Data- and Knowledge-Centered Systems, 1-42, 2018. This is a joint work with Kemele M. Endris and Mikhail Galkin. An extended version with the same contribution as conference paper number 6.

2. Mohamed Nadjib Mami, Damien Graux, Simon Scerri, Harsh Thakkar, Sören Auer, Jens Lehmann. The query translation landscape: a survey. In ArXiv, 2019. To be submitted to an adequate journal. I conducted most of this survey, including most of the review criteria and the collected content. Content I have not provided is the review involving Tinkerpop and partially Neo4J (attributed to Harsh Thakkar).

• Demonstration Papers:

12. Mohamed Nadjib Mami, Damien Graux, Simon Scerri, Hajira Jabeen, Sören Auer, Jens Lehmann. How to feed the Squerall with RDF and other data nuts?. In Proceedings of the 18th International Semantic Web Conference (ISWC), Demonstrations and Posters Track, 2019.

13. Mohamed Nadjib Mami, Damien Graux, Simon Scerri, Hajira Jabeen, Sören Auer. Querying Data Lakes using Spark and Presto. In Proceedings of the World Wide Web Conference (WWW), 3574-3578, Demonstrations Track, 2019.

14. Gezim Sejdiu, Ivan Ermilov, Mohamed Nadjib Mami, Jens Lehmann. STATisfy Me: What are my Stats?. In Proceedings of the 17th International Semantic Web Conference (ISWC), Demonstrations and Posters Track, 2018. This is a joint work with Gezim Sejdiu. I contributed to explaining the approach.

• Industry Papers:

1. Mohamed Nadjib Mami, Irlán Grangel-González, Damien Graux, Enkeleda Elezi, Felix Lösch. Semantic Data Integration for the SMT Manufacturing Process using SANSA Stack. I contributed to deploying, applying and evaluating SANSA-DataLake (Squerall), and partially establishing the connection between SANSA-DataLake and another component of the project (Visual Query Builder).

1.5 Thesis Structure

The thesis is structured in nine chapters outlined as follows:

• Chapter 1: Introduction. This chapter presents the main motivation behind the thesis, describes the general problem it tackles and lists its challenges and main contributions.

• Chapter 2: Background and Preliminaries. This chapter lays the foundations for the rest of the thesis, including the required background knowledge and preliminaries.

• Chapter 3: Related Work. This chapter examines the literature for related efforts in the topic of Big Data Integration. The reviewed efforts are classified into Physical Data Integration and Virtual Data Integration; each category is further divided into a set of sub-categories.

• Chapter 4: Overview on Query Translation Approaches. This chapter presents a literature review on the topic of Query Translation, with a special focus on the query languages involved in the thesis.

• Chapter 5: Physical Big Data Integration. This chapter describes the approach of Semantic-based Physical Data Integration, including a comparative performance evaluation.

• Chapter 6: Virtual Big Data Integration - Semantic Data Lake. This chapter describes the approach of Semantic-based Virtual Data Integration, also known as Semantic Data Lake.

• Chapter 7: Semantic Data Lake Implementation: Squerall. This chapter introduces an implementation of the Semantic-based Virtual Data Integration.

• Chapter 8: Squerall Evaluation and Use Cases. This chapter evaluates and showcases two applications of the SDL implementation, one synthetic and one from a real-world use case.

• Chapter 9: Conclusion and Future Directions. This chapter concludes the thesis by revisiting the research questions, discussing lessons learned and outlining a set of future research directions.

CHAPTER 2

Background and Preliminaries

"The world is changed by your example,

not by your opinion."

Paulo Coelho

In this chapter, we introduce the foundational concepts that are necessary to understand the

rest of the thesis. We divide these concepts into Semantic Technologies, Big Data Management

and Data Integration. We dedicate section 2.1 to Semantic Technologies concepts, including

the Semantic Web’s canonical data model RDF, its central concept of ontologies and its standard

query language SPARQL. In section 2.2, we define Big Data Management related concepts, in

particular concepts from distributed computing and from modern data storage and management

systems. Finally, Data Integration concepts are introduced in section 2.3.

2.1 Semantic Technologies

Semantic Technologies are a set of technologies that aim to realize Semantic Web principles, i.e.,

let machines understand the meaning of data and interoperate thereon. In the following, we

define the Semantic Web as well as its basic building blocks.

2.1.1 Semantic Web

The early proposal of the Web [9] as we know it today was defined as part of a Linked Information

Management system presented by Tim Berners-Lee to his employer CERN in 1989. The purpose

of the system was to keep track of the growing information collected within the company, e.g.,

code, documents, laboratories, etc. The system was imagined to be a ‘web’ or a ‘mesh’ of

information where a person can browse and traverse from one information source (e.g., reports,

notes, documentation) to another. The traversal used a previously known mechanism called

HyperText (1987) [10]. The proposal received encouragement, so Tim, together with colleague

Robert Cailliau, expanded on the key concepts and presented a reformulated proposal entitled:

"WorldWideWeb: Proposal for a HyperText project" [11] with a focus on the Web. Years later, in

2001, Tim Berners-Lee and colleagues suggested a transition from a Web of documents [12], as it

was conceived, to a Web of data where textual information inside a document is given a meaning,

e.g., athlete, journalist, city, etc. This meaning is specified using predefined vocabularies and

taxonomies in a standardized format, which machines can understand and inter-operate on.

[Figure 2.1: graph diagram. Upper box, Schema / Ontology: ex:Car, ex:Model, ex:Country, with the properties ex:producer and ex:assembly. Lower box, Data / Instances: ex:i8, ex:BMW, ex:Germany, ex:Butterfly and the literals “250” and “7.1”, connected by rdf:type, ex:producer, ex:assembly, ex:doorType, ex:topSpeed and ex:battery.]

Figure 2.1: An example of RDF triples forming an RDF graph. Resources are denoted by circles and literals are denoted by rectangles. Instance resource circles are located in the lower box, and Schema resources are located in the upper box.

The new Web, called the Semantic Web, has not only given meaning to previously plain textual

information but also promoted data sharing and interoperability across systems and applications.

2.1.2 Resource Description Framework

To express and capture that meaning, the Semantic Web made use of RDF, the Resource Description

Framework, introduced earlier in 1998 by the W3C [6]. RDF is a standard for

describing, linking and exchanging machine-understandable information on the Web. It denotes

the entities inside Web pages as resources and encodes them using a subject-predicate-object

triple model, similar to the subject-verb-object structure of an elementary sentence. An RDF triple can

formally be defined as follows (Definition 2.1):

Definition 2.1: RDF Triple [13]

Let $I$, $B$, $L$ be disjoint infinite sets of URIs, blank nodes, and literals, respectively. A tuple $(s, p, o) \in (I \cup B) \times I \times (I \cup B \cup L)$ is denominated an RDF triple, where $s$ is called the subject, $p$ the predicate, and $o$ the object.

Subject refers to a resource, object refers to a resource or a literal value, and predicate refers to a relationship between the subject and the object. Figure 2.1 shows an example of a set of RDF triples, collectively forming an RDF graph. We distinguish between data or instance resources and schema resources¹. Data resources are instances of schema resources. For example, in Figure 2.1, I8, BMW, Germany, and Butterfly are instances while Car, Model and Country are schema resources, also called classes. I8, BMW and Germany are instances of Car, Model and Country classes, respectively. All resources of the figure belong to the ontology of namespace ’ex’. In the same figure, "7.1" and "250" are literals of type float and integer respectively.

Resources are uniquely identified using URIs (Uniform Resource Identifiers). Unlike a URL (Uniform Resource Locator), which necessarily has to locate an existing Web page or a position therein, a URI is a universal pointer that uniquely identifies an abstract or a physical resource [14], which does not necessarily have (although it is recommended) a representative Web page. For example, <http://example.com/automobile/Butterfly> and ex:Butterfly are URIs. In the latter, the prefix ex denotes a namespace [14], which uniquely identifies the scheme under which all related resources are defined. Thanks to the use of prefixes, the car door type Butterfly of URI ex:Butterfly defined inside the scheme ex can be differentiated from the insect Butterfly of URI ins:Butterfly defined inside the scheme ins. The triples of the RDF graph of Figure 2.1 are presented in Listing 2.1.

@prefix ex: <https://examples.org/automobile/> .
@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> .
@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .

ex:Germany rdf:type ex:Country .
ex:BMW rdf:type ex:Model .
ex:I8 rdf:type ex:Car .
ex:I8 ex:assembly ex:Germany .
ex:I8 ex:producer ex:BMW .
ex:I8 ex:doorType ex:Butterfly .
ex:I8 ex:topSpeed "250"^^xsd:integer .
ex:I8 ex:battery "7.1"^^xsd:float .

Listing 2.1: RDF triples represented by the graph in Figure 2.1.
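The position constraints of Definition 2.1 can be made concrete with a small Python sketch. The classes `URI`, `BlankNode` and `Literal` and the function `is_rdf_triple` are names chosen for this illustration only and do not refer to any existing library. The check simply states that a subject is a URI or blank node, a predicate is a URI, and an object is a URI, blank node or literal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class URI:
    value: str


@dataclass(frozen=True)
class BlankNode:
    label: str


@dataclass(frozen=True)
class Literal:
    value: str
    datatype: str = "xsd:string"


def is_rdf_triple(s, p, o):
    """Check (s, p, o) against (I ∪ B) × I × (I ∪ B ∪ L) from Definition 2.1."""
    return (
        isinstance(s, (URI, BlankNode))
        and isinstance(p, URI)
        and isinstance(o, (URI, BlankNode, Literal))
    )


i8 = URI("ex:I8")
top_speed = URI("ex:topSpeed")
speed_value = Literal("250", "xsd:integer")

print(is_rdf_triple(i8, top_speed, speed_value))    # a literal as object is allowed
print(is_rdf_triple(speed_value, top_speed, i8))    # a literal as subject is not
```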

2.1.3 Ontology

Ontology is a specification of a common domain understanding that can be shared between people and applications. It promotes interoperability between systems and reusability of the shared understanding across disparate applications [15–17]. For example, ontologies define all the concepts underlying a medical or an ecological domain, enabling medical and ecological institutions to universally share and interoperate over the same data. By analogy, an ontology plays for RDF data, among others, the role that a schema plays for relational data. Ontologies, however, are encoded using the same model as the data, namely the subject-predicate-object triple model. Further, ontologies are much richer in expressing the semantic characteristics of the data. For example, they allow building hierarchies between classes (e.g., Car as sub-class of Vehicle) or between properties (e.g., topSpeed as sub-property of metric), or establishing relations between properties (e.g., inverse, equivalent, symmetric, transitive) by using a set of axioms. Axioms are partially similar to integrity constraints in relational databases, but with a richer expressivity. Ontologies can be shared with the community, in contrast to the relational schema and integrity constraints, both expressed using the SQL query language, that only live inside and concern a specific database. A major advantage of sharing ontologies is allowing the collaborative construction of universal schemata or models that outlive the data they were initially describing. This characteristic complies with one of the original Web of Data and Linked Open Data principles [18]. Last but not least, ontologies are the driver of one of the most prominent Semantic Data applications, namely Ontology-based Data Access (OBDA) [8]. An ontology can be formally defined as follows (Definition 2.2):

Definition 2.2: Ontology [19]

Let $C$ be a conceptualization, and $L$ a logical language with vocabulary $V$ and ontological commitment $K$. An ontology $O_K$ for $C$ with vocabulary $V$ and ontological commitment $K$ is a logical theory consisting of a set of formulas of $L$, designed so that the set of its models approximates as well as possible the set of intended models of $L$ according to $K$.

¹ Another common appellation is A-Box for instance resources and T-Box for schema resources.
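As a small illustration of what a class hierarchy adds over a plain schema, the Python sketch below derives the types an instance implicitly has when its class is a sub-class of other classes. It is a minimal sketch under assumptions: the dictionary `SUB_CLASS_OF`, the class `ex:Product` and the function names are invented, and only the transitivity of the sub-class relation is modeled, none of the other axiom kinds mentioned in this section.

```python
def superclasses(cls, sub_class_of):
    """Return all direct and indirect superclasses of `cls`."""
    seen, stack = set(), [cls]
    while stack:
        current = stack.pop()
        for parent in sub_class_of.get(current, ()):
            if parent not in seen:       # also guards against cycles
                seen.add(parent)
                stack.append(parent)
    return seen


def inferred_types(asserted_class, sub_class_of):
    """Types of an instance: its asserted class plus every superclass."""
    return {asserted_class} | superclasses(asserted_class, sub_class_of)


# Car as sub-class of Vehicle is taken from the text; ex:Product is hypothetical.
SUB_CLASS_OF = {"ex:Car": ["ex:Vehicle"], "ex:Vehicle": ["ex:Product"]}

print(inferred_types("ex:Car", SUB_CLASS_OF))
```

A query asking for all instances of Vehicle could thus also return I8, although the data only states that I8 is a Car.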

2.1.4 SPARQL Query Language

Both data and ontology are modeled after the triple model, RDF. Several query languages for

querying RDF have been suggested since its conception: RQL, SeRQL, eRQL, SquishQL, TriQL,

RDQL, SPARQL, TRIPLE, Versa [20, 21]. Among these languages, SPARQL has become a

W3C standard and recommendation [7, 22]. SPARQL, for SPARQL Protocol and RDF Query

Language, extracts data using a pattern matching technique, in particular graph pattern matching.

Namely, the query describes the data it needs to extract in the form of a set of patterns. These

patterns are triples with some of their terms (subject, predicate or object) being variables,

also called unbound terms. Triple patterns of a SPARQL query are organized into so-called Basic

Graph Patterns and are formally defined as follows (Definition 2.3):

Definition 2.3: Triple Pattern and Basic Graph Pattern [23]

Let $U$, $B$, $L$ be disjoint infinite sets of URIs, blank nodes, and literals, respectively. Let $V$ be a set of variables such that $V \cap (U \cup B \cup L) = \emptyset$. A triple pattern $tp$ is a member of the set $(U \cup V) \times (U \cup V) \times (U \cup L \cup V)$. Let $tp_1, tp_2, \ldots, tp_n$ be triple patterns. A Basic Graph Pattern (BGP) $B$ is the conjunction of triple patterns, i.e., $B = tp_1 \text{ AND } tp_2 \text{ AND } \ldots \text{ AND } tp_n$.
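To show how a Basic Graph Pattern is matched against data, the following Python sketch evaluates a BGP over some of the triples of Listing 2.1 by naive nested iteration. It is an illustration only and not how SPARQL engines are implemented: the functions `is_var`, `match_pattern` and `evaluate_bgp` are invented names, terms are plain strings (variables start with `?`), and the integer literal is represented as a Python `int`.

```python
def is_var(term):
    """A term is a variable if it is a string starting with '?'."""
    return isinstance(term, str) and term.startswith("?")


def match_pattern(pattern, triple, binding):
    """Extend `binding` so that `pattern` matches `triple`, or return None."""
    extended = dict(binding)
    for p_term, t_term in zip(pattern, triple):
        if is_var(p_term):
            # Bind the variable, or check consistency with an earlier binding.
            if extended.setdefault(p_term, t_term) != t_term:
                return None
        elif p_term != t_term:
            return None
    return extended


def evaluate_bgp(bgp, triples):
    """Return every variable binding that satisfies all triple patterns."""
    solutions = [{}]
    for pattern in bgp:
        solutions = [
            extended
            for binding in solutions
            for triple in triples
            if (extended := match_pattern(pattern, triple, binding)) is not None
        ]
    return solutions


triples = [
    ("ex:BMW", "rdf:type", "ex:Model"),
    ("ex:I8", "rdf:type", "ex:Car"),
    ("ex:I8", "ex:producer", "ex:BMW"),
    ("ex:I8", "ex:doorType", "ex:Butterfly"),
    ("ex:I8", "ex:topSpeed", 250),
]
bgp = [
    ("?car", "rdf:type", "ex:Car"),
    ("?car", "ex:producer", "ex:BMW"),
    ("?car", "ex:topSpeed", "?topSpeed"),
]
solutions = evaluate_bgp(bgp, triples)
fast_cars = [b for b in solutions if b["?topSpeed"] >= 180]
print(solutions, len(fast_cars))
```

The conjunction in Definition 2.3 corresponds to the loop over patterns: each pattern can only keep or narrow the bindings produced by the previous ones, and a shared variable such as `?car` acts as a join between patterns.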

For convenience, SPARQL shares numerous common query operations with other query languages. For example, the following are operations that have identical syntax to SQL: SELECT / WHERE for projection and selection, GROUP BY / HAVING for grouping, COUNT|SUM|AVG|MAX|MIN ... for aggregation, and ORDER BY ASC|DESC / DISTINCT / LIMIT / OFFSET as solution modifiers (SELECT is also a modifier). However, SPARQL contains query operations and functions that are specific to RDF, e.g., blank nodes, which are resources (subject or object) for which the URI is not specified, and functions such as isURI(), isLiteral(), etc. Additionally, SPARQL allows other forms of queries than the conventional SELECT queries. For example, CONSTRUCT is used to return the results (matching triple patterns) in the form of an RDF graph, instead of a table like in SELECT queries. ASK is used to verify whether (true) or not (false) triple patterns can be matched against the data. DESCRIBE is used to return an RDF graph that describes a resource given its URI. See Listing 2.2 for a SPARQL query example, which is used to count the number of cars that are produced by BMW, have butterfly doors and can reach a top speed of 180km/h or above.

PREFIX ex: <https://examples.org/automobile/>
PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>

SELECT (COUNT(?car) AS ?count)
WHERE {
  ?car rdf:type ex:Car .
  ?car ex:producer ex:BMW .
  ?car ex:doorType ex:Butterfly .
  ?car ex:topSpeed ?topSpeed .
  FILTER (?topSpeed >= 180)
}

Listing 2.2: Example SPARQL Query.

2.2 Big Data Management

Data Management is a fundamental topic in the Computer Science field. The first so-called Data
Management systems to appear in the literature date back to the sixties [24, 25]. Data Management
is a vast topic grouping all disciplines that deal with data. This ranges from data modeling,
ingestion, governance, integration, and curation to processing, and more. Recently, with the
emergence of the Big Data phenomenon, the concept of Big Data Management was suggested [26–29].
In the following, we define Big Data Management (BDM) and its underlying concepts.

2.2.1 BDM Definitions

Big Data can be seen as an umbrella term covering the numerous challenges that traditional
centralized Data Management techniques faced when dealing with large, dynamic and diverse
data. Big Data Management is a sub-set of Data Management addressing data management
problems caused by the collection of large and complex datasets. Techopedia² defines Big Data
Management as "The efficient handling, organization or use of large volumes of structured and
unstructured data belonging to an organization." TechTarget³ defines it as "the organization,
administration and governance of large volumes of both structured and unstructured data.
Corporations, government agencies and other organizations employ Big Data management
strategies to help them contend with fast-growing pools of data, typically involving many
terabytes or even petabytes of information saved in a variety of file formats." Datamation⁴
defines it as "A broad concept that encompasses the policies, procedures and technology used for
the collection, storage, governance, organization, administration and delivery of large repositories
of data. It can include data cleansing, migration, integration and preparation for use in reporting
and analytics."

2.2.2 BDM Challenges

The specificity of Big Data Management is, then, its ability to address the challenges that arise

from processing large, dynamic and complex data. Examples of such challenges include:

² https://www.techopedia.com/dictionary
³ https://whatis.techtarget.com
⁴ https://www.datamation.com/


• The user base of a mobile app has grown so significantly that the app started generating a
lot of activity data. The large amounts of data eventually exhausted the querying ability
of the underlying database and overflowed the available storage space.

• An electronics manufacturing company improved the performance of its automated electronic
mounting technology. The latter started to generate data at a very high pace, hampering
the monitoring system's ability to collect and analyze the data in a timely manner.

• The data is moderately large, but the type and complexity of the analytical queries, which
span a large number of tables, became a bottleneck in generating the necessary dashboards.

• New application needs of a company required incorporating new types of data management
and storage systems, e.g., graph, document and key-value, which do not (straightforwardly)
fit into the original system's data model.

These challenges are commonly observed in many traditional Database Management Systems,
e.g., relational ones (RDBMSs). The latter were the de facto data management paradigm for four
decades [30, 31]. However, the rapid growth of data in the last decade has revealed the weakness
of RDBMSs at accommodating large volumes of data. The witnessed performance degradation is the
product of their strict compliance with the ACID properties [32, 33], namely Atomicity,
Consistency, Isolation and Durability. In a nutshell, Atomicity means that a transaction has to
succeed as a whole or be rolled back, Consistency means that any transaction should leave the
database in a consistent state, Isolation means that transactions should not interfere with each
other, and Durability means that any change made has to persist. Although these properties
guarantee data integrity, they introduce a significant overhead when performing many transactions
over large data. For example, a write operation to a primary key attribute may be bottlenecked by
checking the uniqueness of the value to be inserted. Other examples include checking type
correctness, or checking whether the value to be inserted in a foreign key attribute already
exists as a primary key in the referenced table. These challenges are largely reduced by modern
Data Management systems, thanks to their schema flexibility and distributed nature.
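The integrity checks mentioned above can be observed with a small sketch that uses SQLite, through Python's standard sqlite3 module, as a stand-in for an RDBMS. The table and column names are hypothetical and chosen for illustration only; the point is that every insert is validated against the primary key and foreign key constraints before it is accepted, which is the work that becomes costly at scale.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is enabled.
conn.execute("PRAGMA foreign_keys = ON")
conn.execute("CREATE TABLE producer (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE car ("
    "id INTEGER PRIMARY KEY, "
    "producer_id INTEGER NOT NULL REFERENCES producer(id))"
)
conn.execute("INSERT INTO producer (id, name) VALUES (1, 'BMW')")
conn.execute("INSERT INTO car (id, producer_id) VALUES (10, 1)")

rejected = []
statements = [
    # Violates primary key uniqueness: id 1 already exists.
    ("duplicate primary key", "INSERT INTO producer (id, name) VALUES (1, 'Audi')"),
    # Violates referential integrity: there is no producer with id 99.
    ("dangling foreign key", "INSERT INTO car (id, producer_id) VALUES (11, 99)"),
]
for label, statement in statements:
    try:
        conn.execute(statement)
    except sqlite3.IntegrityError:
        rejected.append(label)

print(rejected)  # ['duplicate primary key', 'dangling foreign key']
```

Both invalid writes are rejected, and the database keeps exactly one producer and one car. Systems that relax such checks trade this guarantee for faster writes.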

2.2.3 BDM Concepts and Principles

To mitigate these issues, approaches in BDM were suggested, e.g., to relax the strict consistency
and integrity constraints of RDBMSs and, thus, accelerate reading and writing massive amounts
of data in a distributed manner. The following is a list of concepts that contribute to solving
the aforementioned challenges, most of which stem from the field of Distributed Computing:

• Distributed Indexing. An index in traditional RDBMSs may itself become a source of
overhead when indexing large volumes of data. In addition to the large storage space it
occupies, maintaining a large index under frequent updates and inserts becomes an expensive
process. Modern distributed databases cope with the large index size by distributing
it across multiple nodes. This is mostly the case for hashing-based indexes, which are
distribution-friendly. They only require an additional hash index that tells
on which node a requested indexed data item is located; the local index is then used
to reach the exact item location. This additional hash index is generally a lot smaller
than the data itself, and it can easily be distributed if needed. For example, an index
created for a file stored in HDFS (Hadoop [34] Distributed File System) [5] can be hash-indexed
to locate which file part is stored in which HDFS block (HDFS unit of storage


comprising the smallest split of a file, e.g., 128MB). Similarly, Cassandra, a scalable
database, maintains a hash table to locate in which partition (sub-set of the data) a tuple
should be written; the same hash value is used to retrieve that tuple given a query [35].
Traditional tree-based indexes, in contrast, are less used in BDM, as tree data structures are
less straightforward to split and distribute than hashing-based indexes. As with indexes in
conventional centralized databases, however, write operations (insert/update) can become
slower as the index grows. Moreover, the indexes in distributed databases are
generally not as sophisticated as their centralized counterparts, e.g., with respect to
secondary indexes [36]. In certain distributed frameworks, an index may have a slightly
different meaning, e.g., in the Elasticsearch search engine, an index is the data itself to be
queried [37].
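The two-level lookup described for hashing-based indexes can be sketched as follows. This is a deliberately simplified illustration, not how HDFS or Cassandra are implemented: the class `HashRoutedStore` is hypothetical, nodes are simulated by in-memory dictionaries, and a plain modulo over a CRC32 hash stands in for the partitioners that real systems use.

```python
import zlib
from typing import Dict, List, Optional


class HashRoutedStore:
    """Toy store: a hash function routes each key to a node, and each
    node keeps its own local index (here, a dictionary)."""

    def __init__(self, num_nodes: int) -> None:
        self.nodes: List[Dict[str, str]] = [{} for _ in range(num_nodes)]

    def node_for(self, key: str) -> int:
        # crc32 is stable across runs, unlike Python's built-in hash() for str.
        return zlib.crc32(key.encode("utf-8")) % len(self.nodes)

    def put(self, key: str, value: str) -> None:
        # The same hash value decides where the item is written ...
        self.nodes[self.node_for(key)][key] = value

    def get(self, key: str) -> Optional[str]:
        # ... and where it is looked up: only one node's local index is consulted.
        return self.nodes[self.node_for(key)].get(key)


store = HashRoutedStore(num_nodes=4)
store.put("car1", "BMW")
store.put("car2", "Audi")
store.put("car3", "BMW")
print(store.get("car1"), store.get("missing"))
```

The routing step needs no per-item bookkeeping, which is why this kind of index stays small relative to the data and is easy to distribute.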

• Distributed Join. Joining large volumes of data is a typical case where query performance
can deteriorate. To join large volumes of data, distributed frameworks⁵ typically try to
use distributed versions of hash join. Using a common hash-based partitioner, the system
tries to bring the two sides of a join onto the same node, so that the join can be partially
performed locally and thus less data is transferred between nodes. Another common type
of join in distributed frameworks is broadcast join, which is used when one side of the join
is known to be small and thus loadable into the memory of every cluster node. This join
type significantly minimizes data transfer, as all data needed from one side of the join is
guaranteed to be locally accessible. Sort-merge join is also used in cases where hash join
involves significant data transfer.
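The two join strategies above can be contrasted in a minimal single-process sketch, where each "node" is simulated by a Python list. The function names and the sample rows are hypothetical, and the code only illustrates the data movement pattern; it makes no claim about how Spark, Hive or similar engines implement their joins.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Tuple[str, str]                # (join key, payload)
Joined = Tuple[str, str, str]        # (join key, build payload, probe payload)


def partition(rows: List[Row], num_nodes: int) -> List[List[Row]]:
    """Send each row to the node chosen by hashing its join key."""
    parts: List[List[Row]] = [[] for _ in range(num_nodes)]
    for key, payload in rows:
        parts[zlib.crc32(key.encode("utf-8")) % num_nodes].append((key, payload))
    return parts


def local_hash_join(build: List[Row], probe: List[Row]) -> List[Joined]:
    """Classic build/probe hash join executed on a single node."""
    table: Dict[str, List[str]] = defaultdict(list)
    for key, payload in build:                       # build phase
        table[key].append(payload)
    return [                                         # probe phase
        (key, build_payload, probe_payload)
        for key, probe_payload in probe
        for build_payload in table.get(key, [])
    ]


def partitioned_hash_join(build: List[Row], probe: List[Row], num_nodes: int) -> List[Joined]:
    """Co-partition both sides with the same partitioner, then join per node."""
    result: List[Joined] = []
    for b_part, p_part in zip(partition(build, num_nodes), partition(probe, num_nodes)):
        result.extend(local_hash_join(b_part, p_part))
    return result


def broadcast_join(small: List[Row], large_parts: List[List[Row]]) -> List[Joined]:
    """Copy the small side to every node; the large side never moves."""
    result: List[Joined] = []
    for part in large_parts:
        result.extend(local_hash_join(small, part))
    return result


producers = [("bmw", "Germany"), ("audi", "Germany"), ("kia", "South Korea")]
cars = [("bmw", "car1"), ("audi", "car2"), ("bmw", "car3")]

by_partitioning = sorted(partitioned_hash_join(producers, cars, num_nodes=3))
by_broadcast = sorted(broadcast_join(producers, [cars[:1], cars[1:]]))
print(by_partitioning == by_broadcast)  # True
```

Both strategies return the same rows. They differ in what is moved: the partitioned join reshuffles both sides by key, whereas the broadcast join leaves the large side in place, however it happens to be split.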

• Distributed Processing. Performing computations, e.g., query processing, in a distributed
manner can be substantially different from doing so on a single machine. For example,
MapReduce [38] is a prominent distributed processing paradigm that solves computation
bottlenecks by providing two simple primitives that allow computational tasks to be executed
in parallel. The primitives are map, which converts an input data item to a (key, value)
pair, and reduce, which subsequently receives all the values attached to the same key
and performs a common operation thereon. This makes it possible to accomplish a whole range
of computations, from as simple as counting error messages in a log file to as complex
as implementing ETL integration pipelines. Alternative approaches are based on DAG
(Directed Acyclic Graph) [39, 40] processing models, where several tasks (e.g., map, reduce,
group by, order by, etc.) are chained and executed in parallel. These tasks operate on the
same chunk of data; data is only transferred across the cluster if the output of multiple
tasks needs to be combined (e.g., reduce, join, group by, etc.). Knowing the order of tasks
in the DAG, it is possible to recover from failure by resuming the failed task from the
previous step, instead of starting from the beginning.
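The map and reduce primitives described above can be illustrated with a minimal single-process sketch of the log-counting example. The helper `map_reduce`, the mapper and reducer functions, and the log format (a level followed by a message) are assumptions made for illustration; a real framework would run the map and reduce calls in parallel on different nodes and perform the grouping step over the network.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

Pair = Tuple[str, int]


def map_reduce(
    records: Iterable[str],
    mapper: Callable[[str], Iterator[Pair]],
    reducer: Callable[[str, List[int]], int],
) -> Dict[str, int]:
    # Map phase: every input item is converted into (key, value) pairs.
    pairs = [pair for record in records for pair in mapper(record)]
    # Shuffle phase: all values attached to the same key are grouped together.
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    # Reduce phase: a common operation is applied to each group of values.
    return {key: reducer(key, values) for key, values in groups.items()}


def level_mapper(line: str) -> Iterator[Pair]:
    """Emit (log level, 1) for each log line."""
    level = line.split(" ", 1)[0]
    yield (level, 1)


def sum_reducer(key: str, values: List[int]) -> int:
    return sum(values)


log = [
    "INFO service started",
    "ERROR disk full",
    "WARN slow response",
    "ERROR connection lost",
    "INFO service stopped",
]
counts = map_reduce(log, level_mapper, sum_reducer)
print(counts["ERROR"])  # 2
```

The mapper and reducer contain all of the application logic; grouping by key is generic, which is what allows a framework to parallelize and distribute it.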

• Partitioning. It is the operation of slicing large input data into disjoint sub-sets called
partitions. These partitions can be stored on several compute nodes and, thus, be operated
on in parallel. Partitions are typically replicated and stored on different compute nodes to
provide fault tolerance and improve system availability.

• Data Locality. It is a strategy, adopted by MapReduce and other frameworks, to run
compute tasks on the node where the needed data is located. It aims at optimizing
processing performance by reducing the amount of data transferred across nodes.

⁵ We mean here engines specialized in querying (e.g., Hive, Presto) or having query capabilities
(e.g., Spark, Flink). Many distributed databases (e.g., MongoDB, Cassandra, HBase) lack support
for joins.


• BASE Properties. A new set of database properties called BASE, as opposed to the

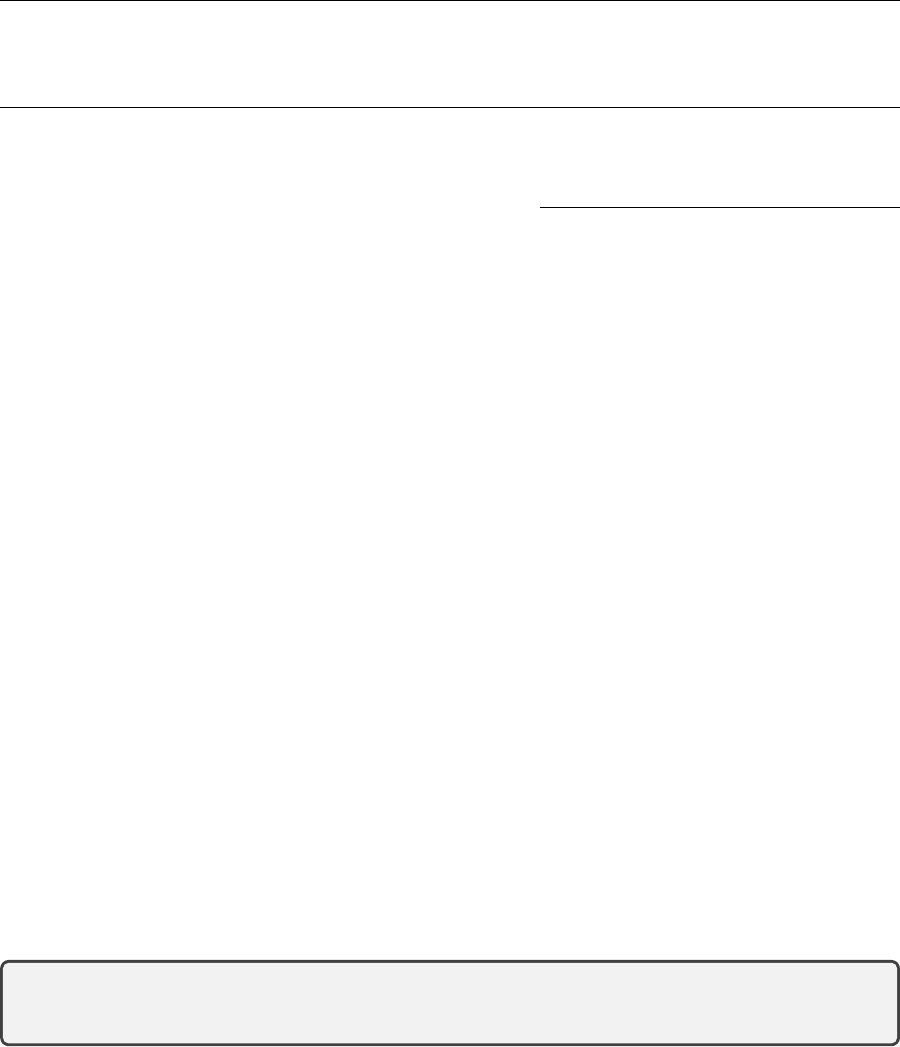

traditional ACID, has been suggested [41]. BASE stands for Basic Availability, Soft state,