SYSTEMS APPROACH TO TRAINING

(SAT) MANUAL

JUNE 2004

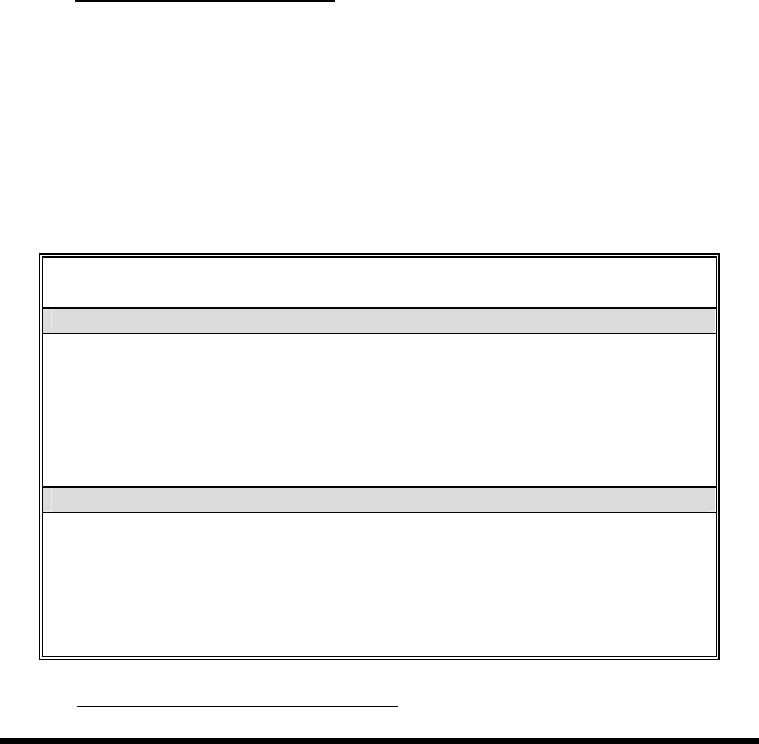

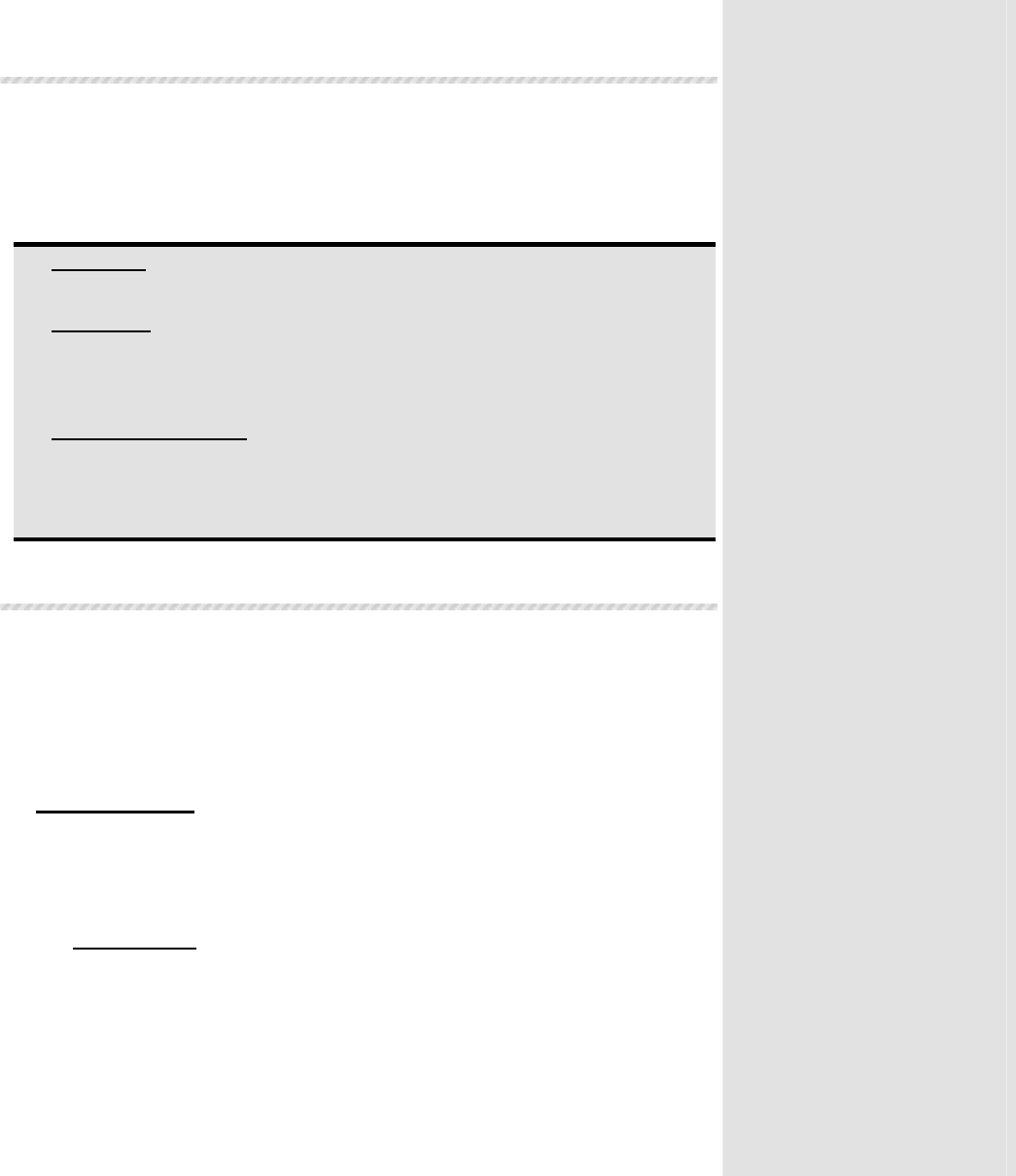

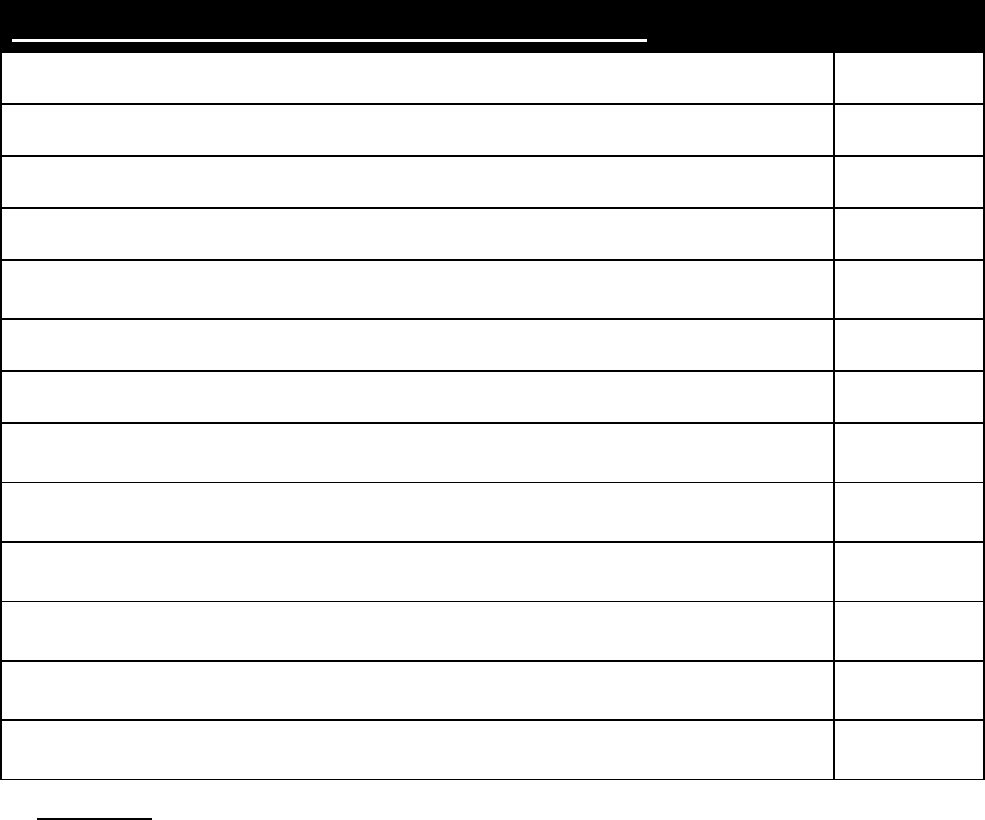

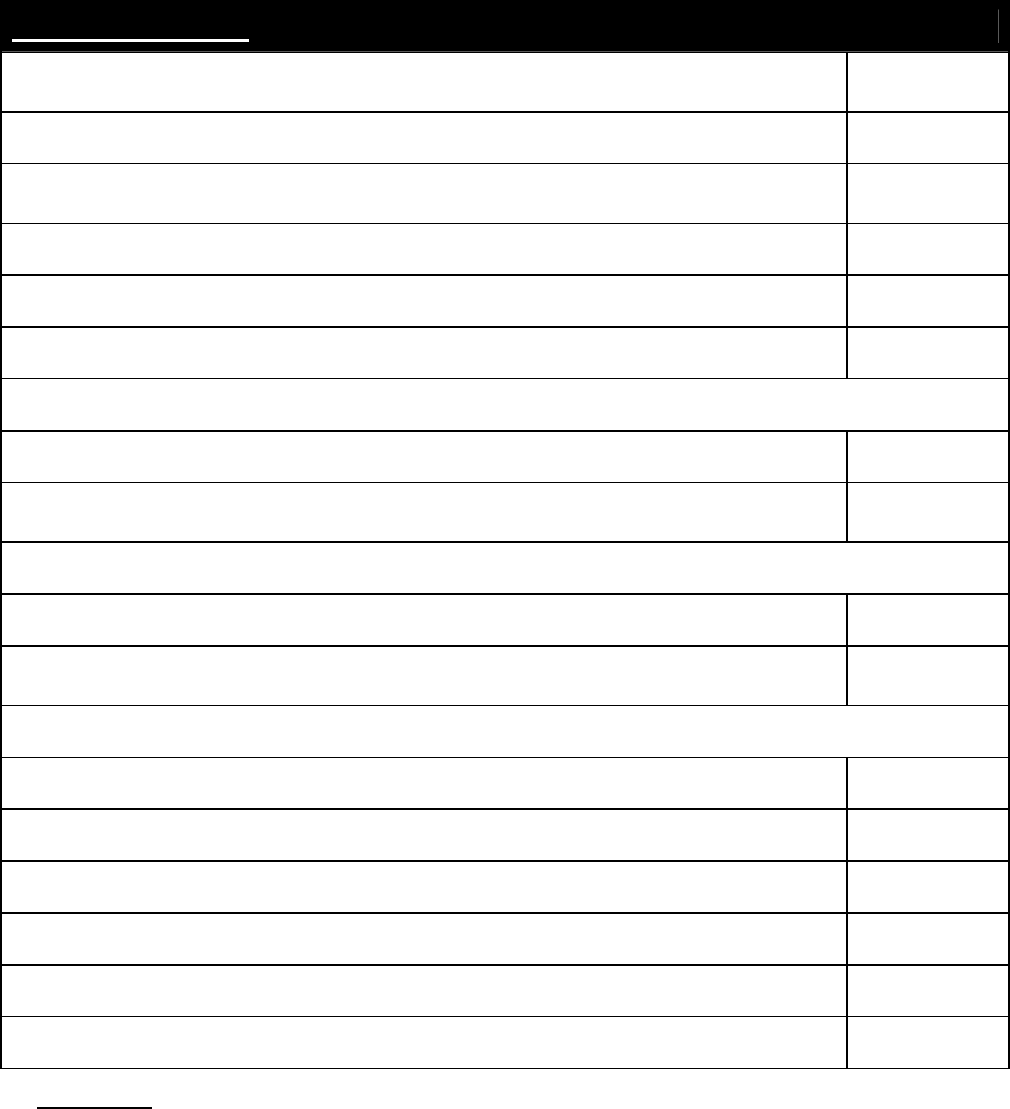

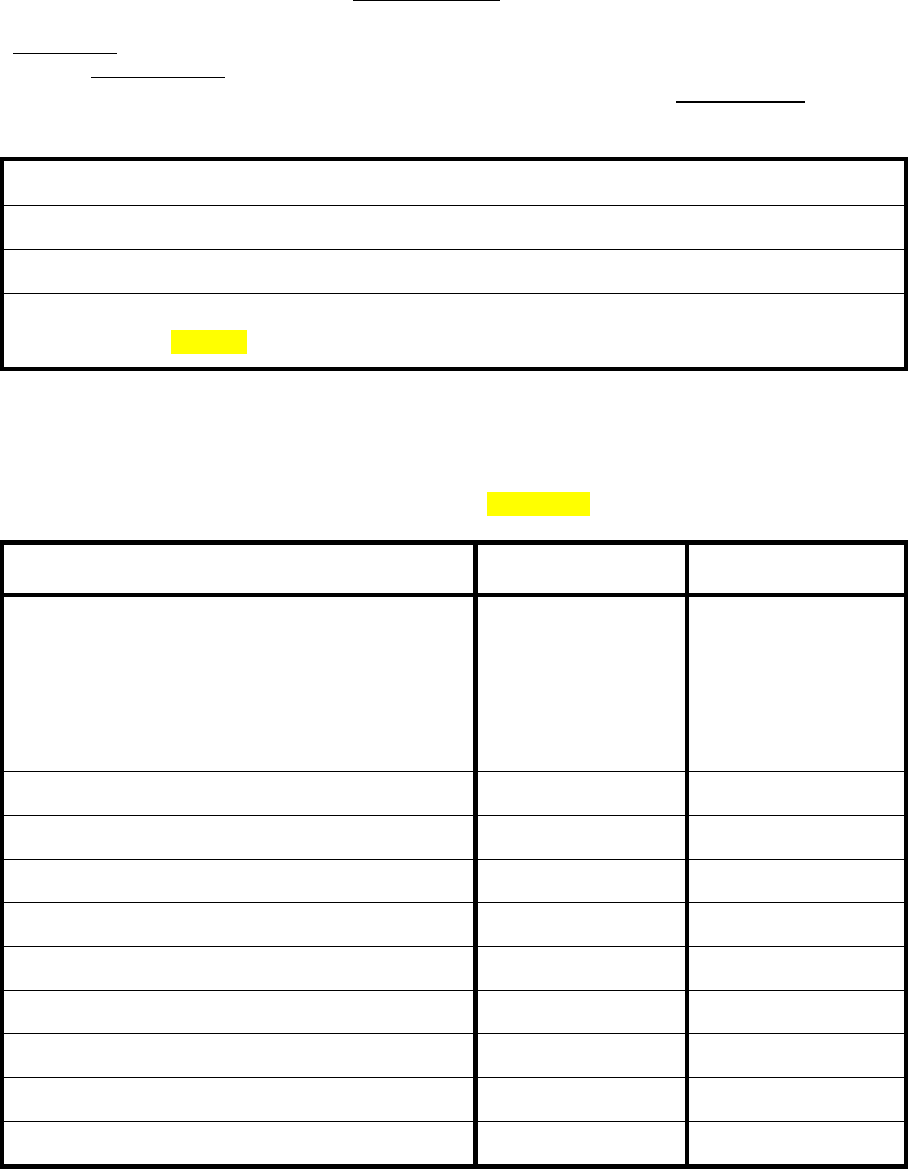

SUGGESTION FORM

From:

Subj: RECOMMENDATION FOR IMPROVEMENT TO THE SAT MANUAL

1. In accordance with the Foreword to the Systems Approach to

Training (SAT) Manual, which encourages commands to provide

suggestions for improving the publication, the following

unclassified recommendation is submitted:

______ __________ _________ _______________

Page Para. No. Line No. Figure/Appendix

Nature of Change: ___Add ___Delete ___Change ___Correct

2. Proposed New Text: (Verbatim, double-spaced; continue on

additional pages, if necessary.)

3. Justification/Rationale/Impact of Proposed Change: (Include

source; may be single spaced.)

PREFACE

The Systems Approach to Training (SAT) Manual was developed

to support Marine Corps training/education policy and Department

of Defense (DoD) military training program requirements. This

Manual serves as a primary source of information and guidance,

mainly for use by the formal school/training centers'

instructional staff, for instructional program development and

management.

The SAT Manual is divided into five chapters, each chapter

corresponding to a phase within the SAT model: Analyze, Design,

Develop, Implement, and Evaluate. In many of the sections

within each chapter, topic material is presented first, followed

by procedural steps for performing a task or function.

Throughout the Manual, hypothetical examples are provided to

illustrate a concept, topic, or procedure.

While the information contained in the SAT Manual is based

on and derived from accepted adult learning theories and current

instructional development practices, the Manual is designed as

an introduction to these topics. Additional research in

education-related fields is recommended for those personnel who

participate in the development or management of instruction.


EXECUTIVE SUMMARY

Overview. The mission of any instructional system is to

determine instructional needs and priorities, develop effective

and efficient solutions to achieving these needs, implement

these solutions in a competent manner, and assess the degree to

which the outcomes of the system meet the specified needs. To

achieve this in the most effective way possible, a systems

approach to the process and procedures of instruction was

developed. The resulting model, entitled Instructional Systems

Design (ISD), was later adopted by the Marine Corps as the

Systems Approach to Training (SAT). The model, whether it is

referred to as ISD or SAT, is a recognized standard governing

the instructional process in the private sector and within the

Department of Defense (DoD).

Goal of Instruction

The goal of Marine Corps instruction is to develop

performance-based, criterion-referenced instruction that

promotes student transfer of learning from the instructional

setting to the job. For a learning outcome to be achieved,

instruction must be effective and efficient. Instruction is

effective when it teaches learning objectives based on job

performance requirements and efficient when it makes the best

use of resources.

SAT is a comprehensive process that identifies what is

performed on the job, what should be instructed, and how this

instruction should be developed and conducted. This systematic

approach ensures that what is being instructed are those tasks

that are most critical to successful job performance. It also

ensures that the instructional approach chosen is the most time

and cost efficient. The SAT process further identifies

standards of performance and learning objectives. This ensures

that students are evaluated on their ability to meet these

objectives and that instructional courses are evaluated based on

whether or not they allow student mastery of these objectives.

Finally, the SAT identifies needed revisions to instruction and

allows these revisions to be made to improve instructional

program effectiveness and efficiency.


Intent of SAT

The SAT was created to manage the instructional process for

analyzing, designing, developing, implementing, and evaluating

instruction. The SAT serves as a blueprint for organizing or

structuring the instructional process. The SAT is a set of

comprehensive guidelines, tools, and techniques needed to close

the gap between current and desired job performance through

instructional intervention.

The Marine Corps originally targeted the SAT for use in

formal schools, but the comprehensive system applies to

unit/field training as well as to education. The SAT is a

flexible, outcome-oriented system based on the requirements

defined by education and training. Whether referred to as

education or training, the instructional process is the same; it

is the outcomes that are different. Therefore, in keeping with

the intention of the SAT model, throughout this SAT Manual, the

term instruction will be used to discuss both training and

education.

Benefits of SAT

The Systems Approach to Training is a dynamic, flexible

system for developing and implementing effective and efficient

instruction to meet current and projected needs. The SAT

process is flexible in that it accounts for individual

differences in ability, rate of learning, motivation, and

achievement to capitalize on the opportunity for increasing the

effectiveness and efficiency of instruction. The SAT process

reduces the number of school management decisions that have to

be made subjectively and, instead, allows decisions to be made

based on reasonable conclusions which are based on carefully

collected and analyzed data. More than one solution to an

instructional problem may be identified through the SAT; however,

the selection of the best solution is a goal of SAT.

The SAT is a continuous, cyclical process allowing any one

of the five phases, and their associated functions, to occur at

any time. In addition, each phase within SAT further builds

upon the previous phase, providing a system of checks and

balances to ensure all instructional data are accounted for and

that revisions to instructional materials are identified and

made.


It is not the intent of the SAT process to create an

excessive amount of paperwork, forms, and reporting requirements

that must be generated by each formal school/training center

conducting instruction. This would serve only to detract from

the instructional program. The SAT process does not provide a

specific procedure for every instructional situation that can be

encountered. Instead, it presents a generalized approach that

can be adapted to any instructional situation.

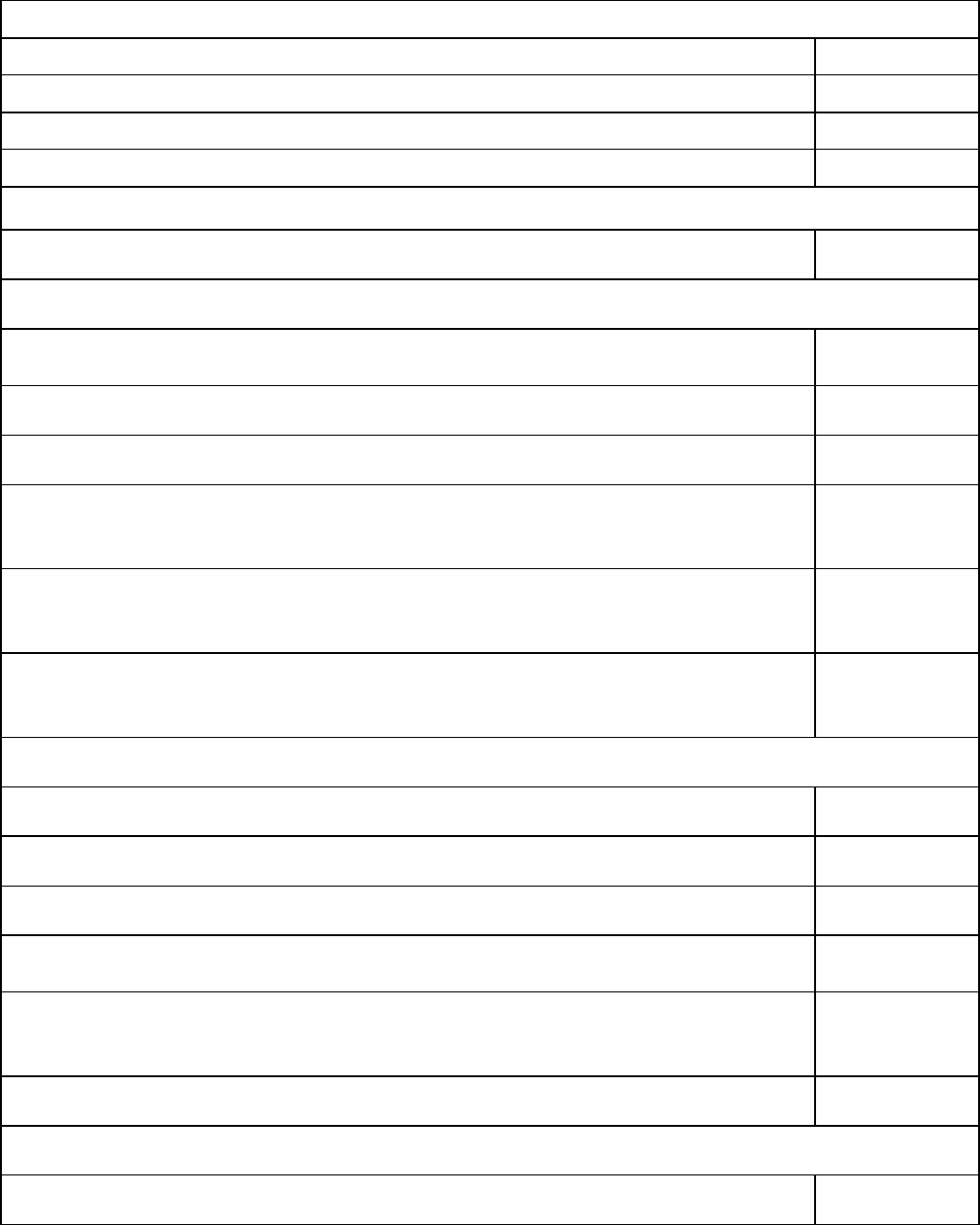

SAT Phases. The SAT model simplifies and standardizes the

instructional process into manageable subsets. The SAT process

is made up of five distinct phases, each serving a specific

purpose. The five phases are Analyze, Design, Develop,

Implement, and Evaluate. Each of these phases involves inputs,

a process, and outputs. The successive phases of the SAT build

upon the outcomes of the previous phase(s).

1. Analyze. During the Analyze Phase of SAT, a particular job

or Occupational Field/Military Occupational Specialty

(OccFld/MOS) is analyzed by Marine Corps Combat Development

Command (MCCDC, C 461) to determine what job holders perform on

the job, the order in which they perform it, and the standard of

performance necessary to adequately perform the job. The

result, or outcome, of the Analyze Phase is Individual Training

Standards (ITS) selected for instruction. ITSs are behavior

statements that define job performance in the Marine Corps and

serve as the basis for all Marine Corps instruction. The

elements of the Analyze Phase are:

Job Analysis. Job or occupational analysis is performed to

determine what the job holder must know or do on the job. Job

analysis results in a verified list of all duties and tasks

performed on the job.

Task Analysis. Task analysis (sometimes called Training

Analysis) is performed to determine the job performance

requirements for each task performed on the job. Job

performance requirements include a task statement, conditions,

standard, performance steps, administrative instructions, and

references. Job performance requirements in the Marine Corps

are defined by Individual Training Standards (ITSs). ITSs

define the measures of performance that are to be used in

diagnosing individual performance and evaluating instruction.


Selection of Tasks for Instruction. Current instructional needs

are determined by selecting tasks for instruction. Tasks are

selected based on data collected concerning several criteria

relating to each task. A by-product of this process is the

determination of the organization responsible for conducting the

instruction and the instructional setting assigned to each task.

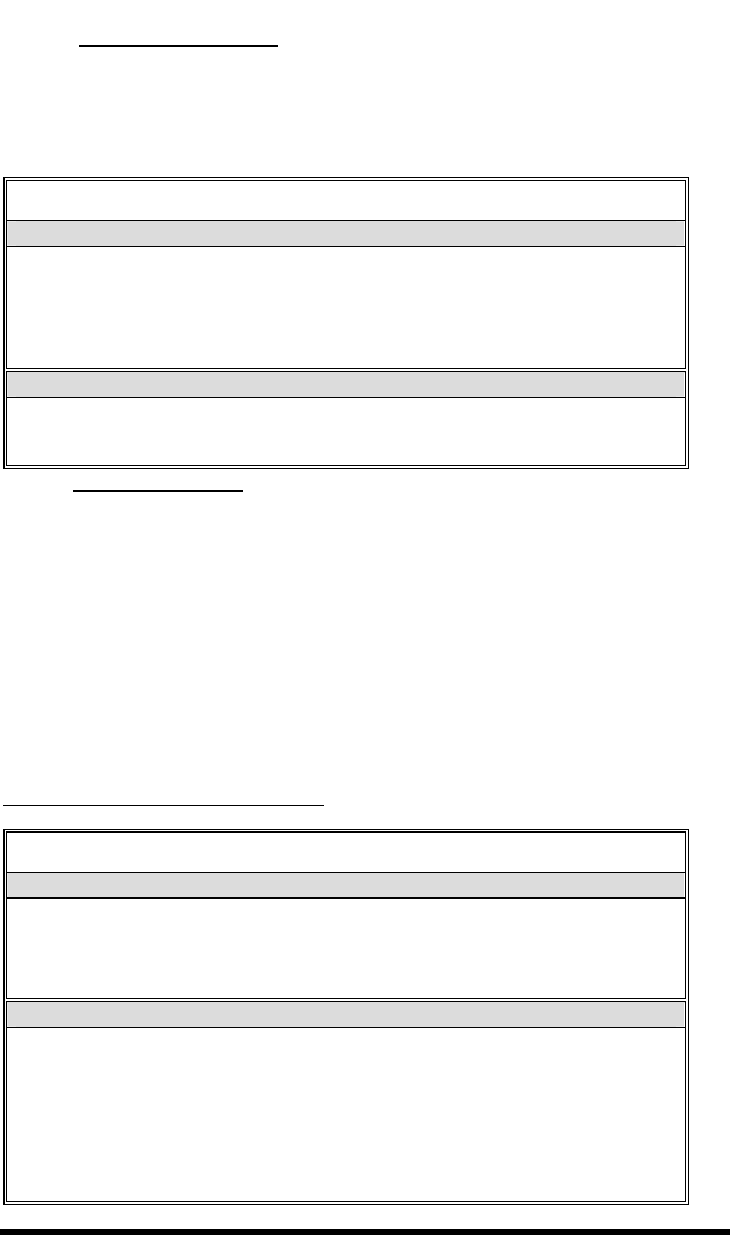

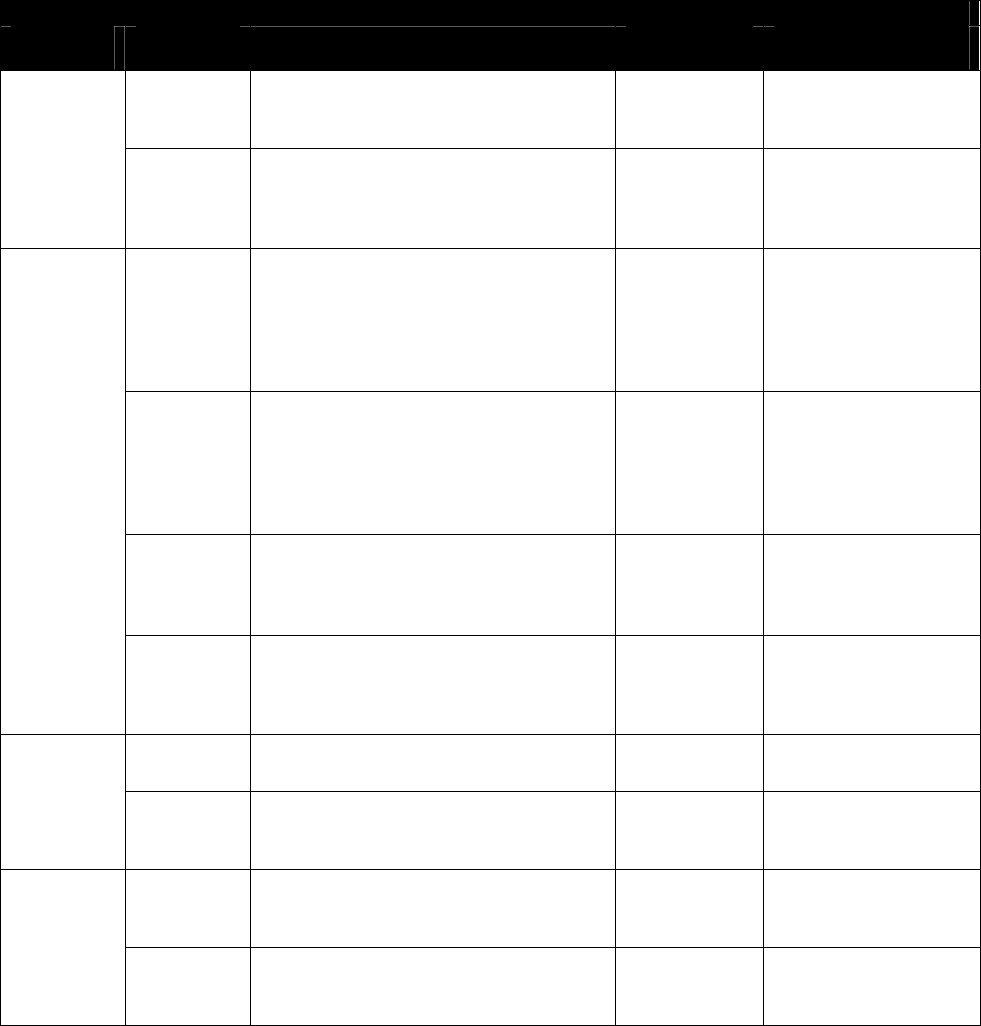

| Input | Process | Outcome |
|---|---|---|
| Job task data | Job analysis | Task List |
| | Task analysis | Individual Training Standards (ITS) |
| | | Instructional Setting |

2. Design. During the Design Phase of SAT, formal

school/training center instructional developers equate task

performance under job conditions (ITSs) to task performance

within the instructional setting (learning objectives). The

goal of this phase is to simulate as closely as possible the

real-world job conditions within the instructional environment.

The closer the instructional conditions are to those required in

the work setting, the more likely it is that the student will

transfer the learning to the job. The Design Phase is made up

of five separate sections, each of which has a specific purpose:

Write a Target Population Description (TPD). The TPD defines

the student population entering a course.

Conduct Learning Analysis. The learning analysis is conducted

to develop the learning objectives. The learning analysis

describes what the students will do during instruction.

Write Test Items. Test items are derived from the learning

objectives and are used to determine if the students have

mastered the learning objectives.

Select Delivery System. The delivery system is the primary

means by which the instruction is presented to the students.

Sequence Learning Objectives. Learning objectives are sequenced

to allow students to make logical transitions from one subject

to the next. Sequenced learning objectives provide efficient

instruction and serve as a draft course structure.


| Input | Process | Outcome |
|---|---|---|
| ITS | Define student population | Target Population Description (TPD) |
| | Conduct learning analysis | Learning Objectives |
| | Define evaluation | Test Items |
| | Select media and method | Delivery System |
| | Organize instruction | Sequenced Terminal Learning Objectives (TLO) |

3. Develop. The Develop Phase of SAT builds on the outcomes of

the Analyze and Design Phases. The Analyze Phase identifies

those tasks to be instructed and the desired standard to which

those tasks must be performed. The Design Phase outlines how to

reach the instructional goals determined in the Analyze Phase by

converting job tasks to tasks taught in the instructional

environment, and further builds the foundation for instruction.

During the Develop Phase, instructional developers from the

formal school/training center modify the instructional program

to fit the requirements identified in the Analyze and Design

Phases. The elements of the Develop Phase are:

Develop Course Schedule. The course schedule provides a

detailed structure for the course to include lesson times,

titles, designators, locations, and references to be used.

Develop Instruction. This section details the process for

developing the lesson plans and supporting course materials that

instructors will present during the Implement Phase. Maximizing

the transfer of learning is the goal of developing instruction.

Develop Media. This section takes the media selected during the

Design Phase and develops them into their final form for

presentation to the students. The purpose of media is to

enhance the instruction and the transfer of learning by

presenting lesson material in a manner that appeals to many

senses, complements student comprehension level, and stimulates

student interest.


Validate Instruction. The goal of validation is to determine

the effectiveness of instructional material and to make any

necessary revisions prior to implementation.

Develop Course Descriptive Data (CDD) and Program of Instruction

(POI). The CDD provides a detailed summary of the course

including instructional resources, class length, and curriculum

breakdown. The POI provides a detailed description including

structure, delivery system, length, learning objectives, and

evaluation procedures. A formal course of instruction must have

an approved POI.

| Input | Process | Outcome |
|---|---|---|
| Learning Objectives | Organize course | Course Schedule |
| TPD | Develop Instruction | Master Lesson Files (MLF) |
| Delivery System | Develop media | Media |
| Test Items | Validate Instruction | Revised instructional materials |
| | Develop supporting course materials | CDD/POI |

4. Implement. During the Implement Phase of SAT, instructors

within the formal school/training center prepare the class and

deliver the instruction. The purpose of the Implement Phase is

the effective and efficient delivery of instruction to promote

student understanding of material, to achieve student mastery of

learning objectives, and to ensure a transfer of student

knowledge from the instructional setting to the job. The

elements of the Implement Phase are:

Prepare for Instruction. Preparation involves all those

activities that instructors and support personnel must perform

to ready themselves for delivering the instruction.


Implement Instruction. Implementing instruction is the

culmination of the analysis, design, and development of

instructional materials. Although the instructional developer

designed and developed the instructional material so that it

maximizes transfer of learning, the way the instructor presents

the material will play a crucial part in determining whether

students learn and transfer that learning to the job.

Implementation is the instructor’s delivery of instruction to

the students in an effective and efficient manner.

| Input | Process | Outcome |
|---|---|---|
| Instructional Materials | Prepare for instruction | Delivery of instruction |
| | Implement instruction | Course data |

5. Evaluate. The Evaluate Phase of SAT measures instructional

program effectiveness and efficiency. Evaluation and revision

drive the SAT model. Evaluation consists of formative and

summative evaluation and management of data. Formative

evaluation involves validating instruction before it is

implemented and revising instruction to improve the

instructional program prior to its implementation. Formative

evaluation is ongoing at all times both within and between each

phase of the SAT model. Summative evaluation is conducted after

a course of instruction has been implemented. Summative

evaluation assesses the effectiveness of student performance,

course materials, instructor performance, and/or the

instructional environment. There are three parts to evaluation:

Plan and Conduct. The purpose of planning and conducting

evaluation is to develop and implement a strategy for

determining the effectiveness and efficiency of an instructional

program.

Analyze and Interpret. After the evaluation data have been

gathered during the conduct of evaluation, the results are

analyzed and interpreted to assess instructional program

effectiveness and efficiency.


Document and Report. Evaluation data is managed and the results

of evaluation are documented and reported so that instruction is

revised, if necessary.

| Input | Process | Outcome |
|---|---|---|
| Course Data | Conduct Formative Evaluation | Revisions to instruction |
| | Conduct Summative Evaluation | Data on instructional effectiveness |
| | Manage Data | Course Content Review Board (CCRB) |
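The way each phase builds on the outcomes of earlier phases can be sketched in code. The Python example below is illustrative only and is not part of the SAT process: the names `Phase`, `PHASES`, and `supplier` are hypothetical, and the inputs and outcomes are copied from the Input/Process/Outcome summaries in this Executive Summary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Phase:
    """One SAT phase with the inputs and outcomes listed in its summary table."""
    name: str
    inputs: tuple
    outputs: tuple


# Data copied from the Input/Process/Outcome summaries; the structure is an assumption.
PHASES = (
    Phase("Analyze", ("Job task data",),
          ("Task List", "Individual Training Standards (ITS)", "Instructional Setting")),
    Phase("Design", ("Individual Training Standards (ITS)",),
          ("Target Population Description (TPD)", "Learning Objectives", "Test Items",
           "Delivery System", "Sequenced Terminal Learning Objectives (TLO)")),
    Phase("Develop", ("Learning Objectives", "Target Population Description (TPD)",
                      "Delivery System", "Test Items"),
          ("Course Schedule", "Master Lesson Files (MLF)", "Media",
           "Revised instructional materials", "CDD/POI")),
    Phase("Implement", ("Instructional Materials",),
          ("Delivery of instruction", "Course data")),
    Phase("Evaluate", ("Course Data",),
          ("Revisions to instruction", "Data on instructional effectiveness",
           "Course Content Review Board (CCRB)")),
)


def supplier(phases, phase_name, needed):
    """Return the earlier phase whose outcomes include `needed`, or None."""
    for phase in phases:
        if phase.name == phase_name:
            return None  # reached the consuming phase without finding a supplier
        if needed.lower() in (outcome.lower() for outcome in phase.outputs):
            return phase.name
    return None


print(supplier(PHASES, "Design", "Individual Training Standards (ITS)"))  # Analyze
print(supplier(PHASES, "Evaluate", "Course Data"))                        # Implement
```

Implement's input is listed only under the collective label "Instructional Materials", so `supplier` returns `None` for it. Matching that label to specific Develop Phase outcomes would require a mapping that this summary does not state.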


SYSTEMS APPROACH TO TRAINING MANUAL

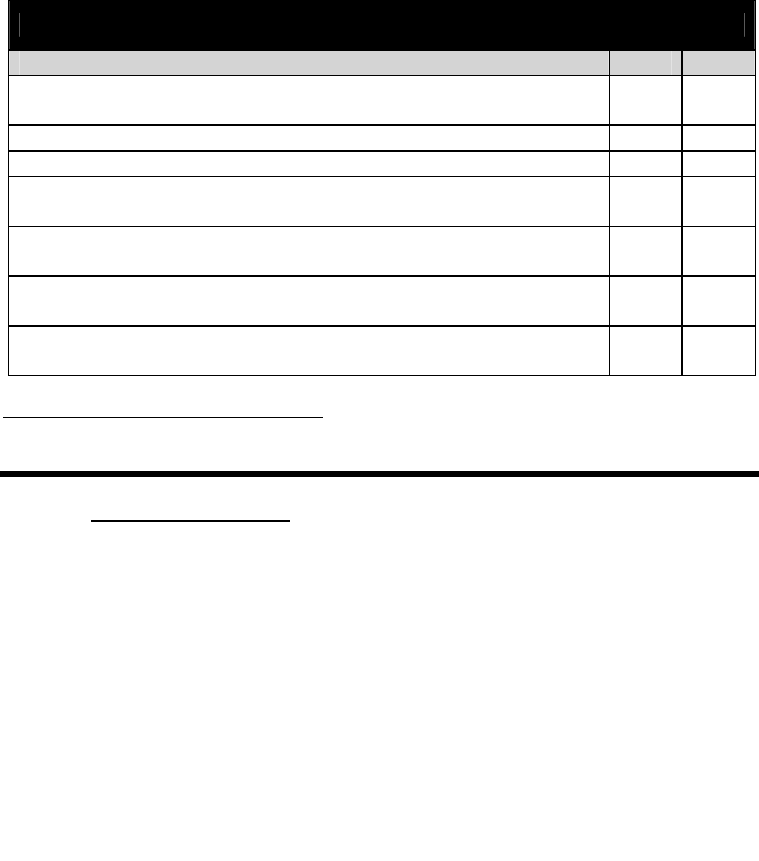

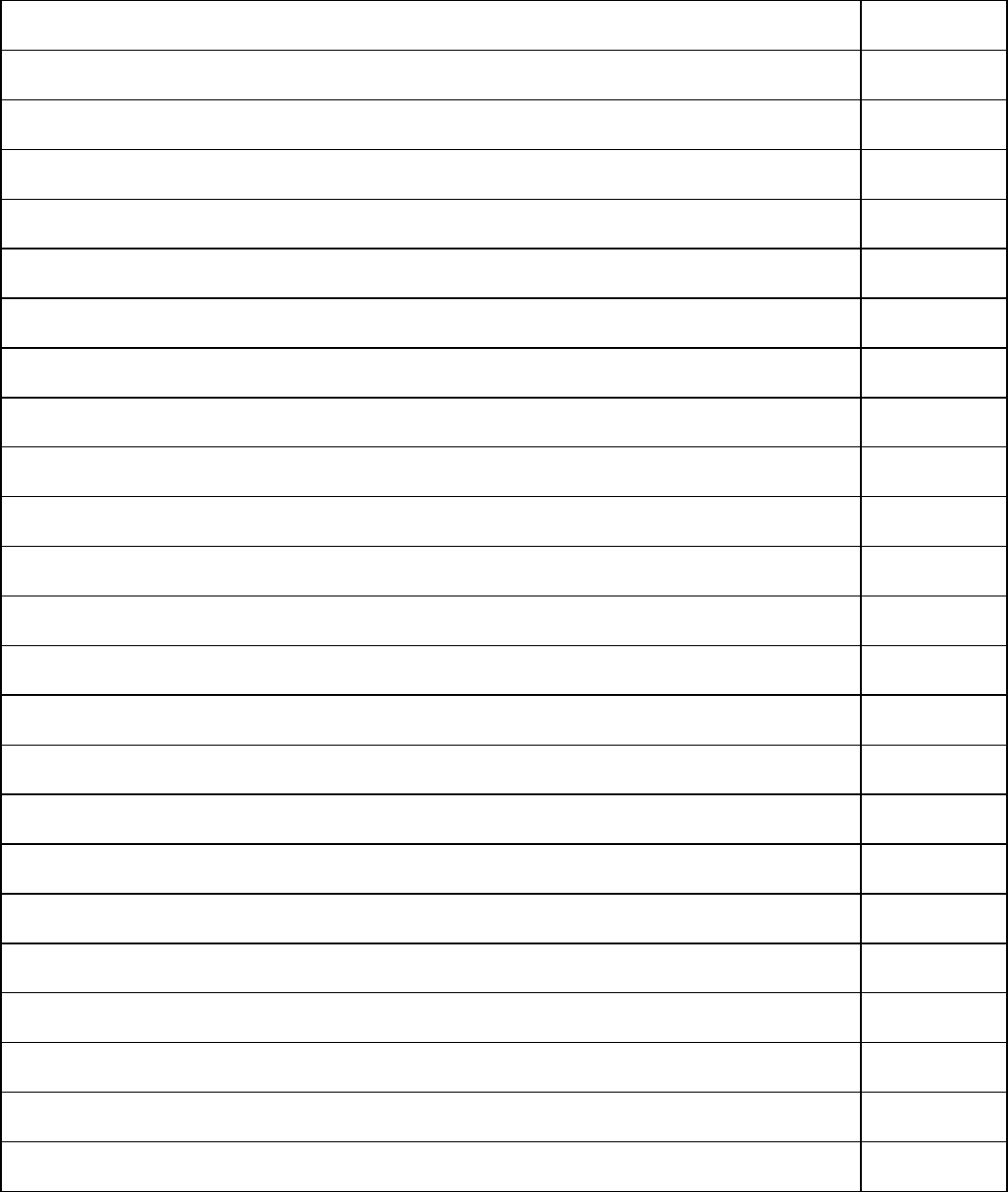

TABLE OF CONTENTS

CHAPTER

1 ANALYZE

2 DESIGN

3 DEVELOP

4 IMPLEMENT

5 EVALUATE

6 ADULT LEARNING

7 ADMINISTRATION

Systems Approach To Training Manual Analyze Phase

Chapter 1

ANALYZE PHASE

In Chapter 1:

1000 INTRODUCTION

1200 JOB ANALYSIS

Job Analysis Requirements

Task Criteria

Duty Areas

Initial Task List Development

Task List Verification

Refining the Task List

Identifying Tasks for Instruction

1300 TASK ANALYSIS

Purpose

Training Standard Development

ITS Components

T&R Components

ITS/T&R Staffing

1400 INSTRUCTIONAL SETTING

1500 ROLES AND RESPONSIBILITIES

TECOM Responsibilities

Formal School/Det Responsibilities

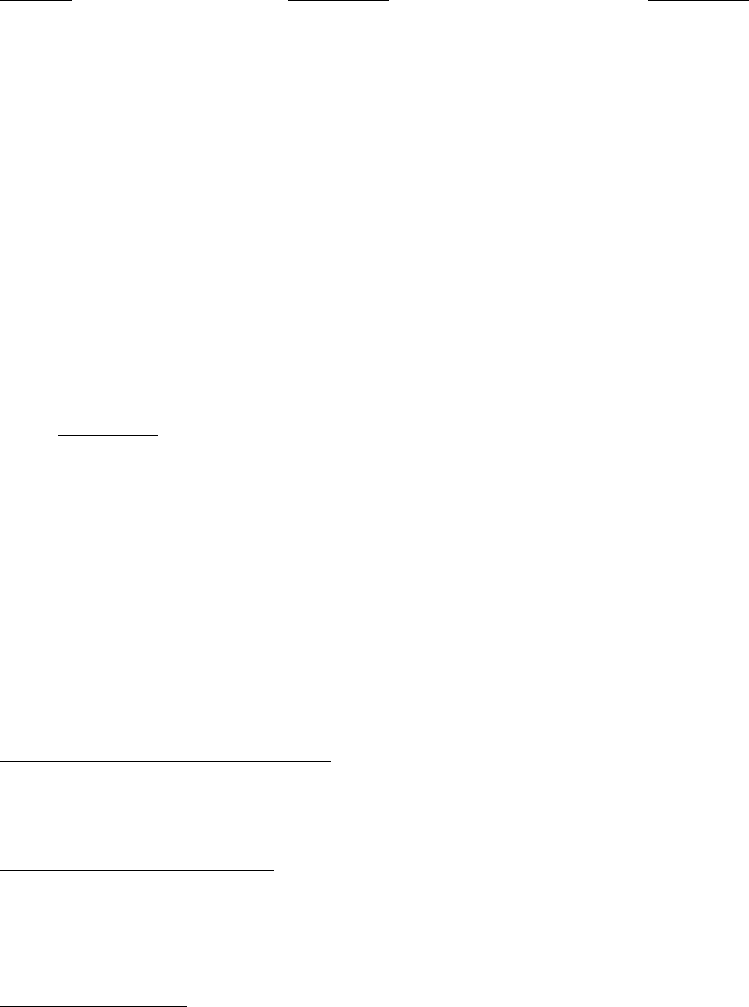

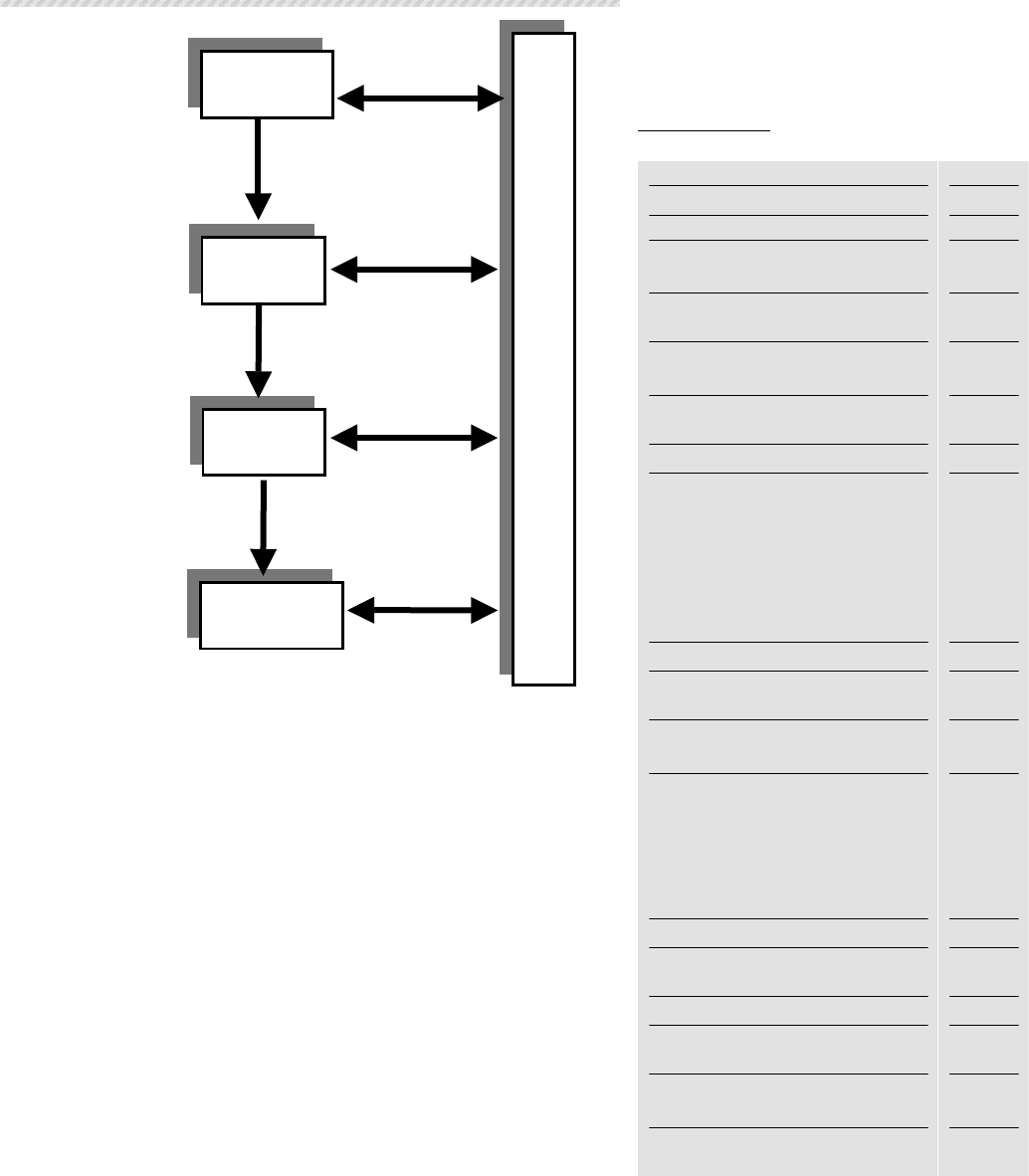

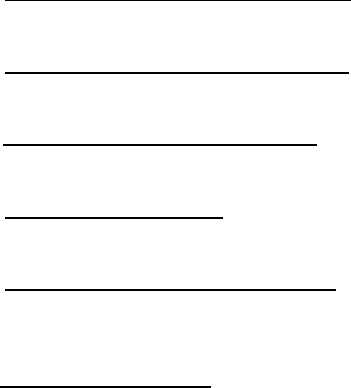

[Figure: SAT model showing the five phases (Analyze, Design, Develop, Implement, Evaluate). Analyze Phase elements: Job Analysis, Task Analysis, Determination of Instructional Setting.]


1000. INTRODUCTION

The Analyze Phase is a crucial phase in the Systems Approach to Training

(SAT) process. During this phase, job performance data is collected,

analyzed, and reported.

This analysis results in a comprehensive list of tasks

and performance requirements selected for instructional development. In

the Marine Corps, job performance requirements are defined as Individual

Training Standards (ITS) Orders and Training and Readiness (T&R) Manuals.

The Analyze Phase consists of three main processes: job analysis, task

analysis, and determining instructional setting.

This chapter has four separate sections. The first three cover the three

Analyze Phase processes and the fourth provides the administrative

responsibilities.

1. Job Analysis: “What are the job requirements?”

2. Task Analysis: “What are the tasks required to perform the job?”

3. Determine Instructional Setting: “Will the Marine receive job

training in a formal school/detachment setting or through MOJT?”

4. Requirements and Responsibilities in the Analyze Phase: “What

are the roles and responsibilities of each element in the training

establishment?”

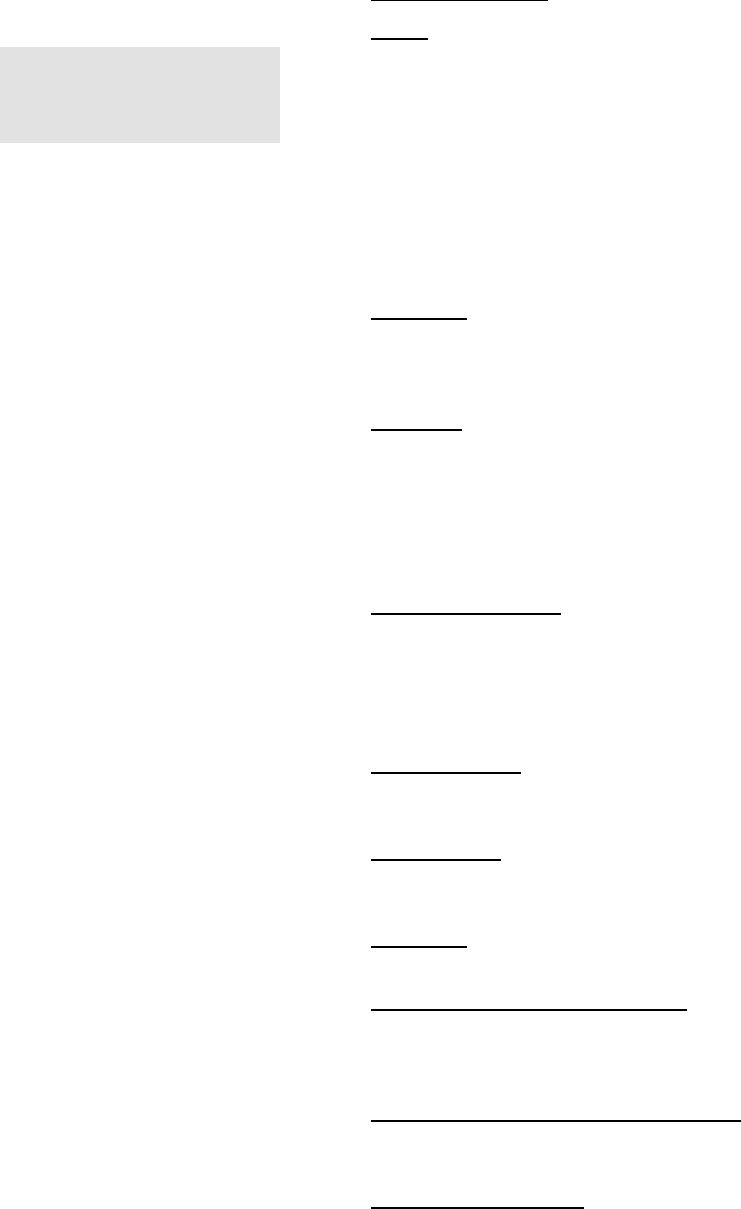

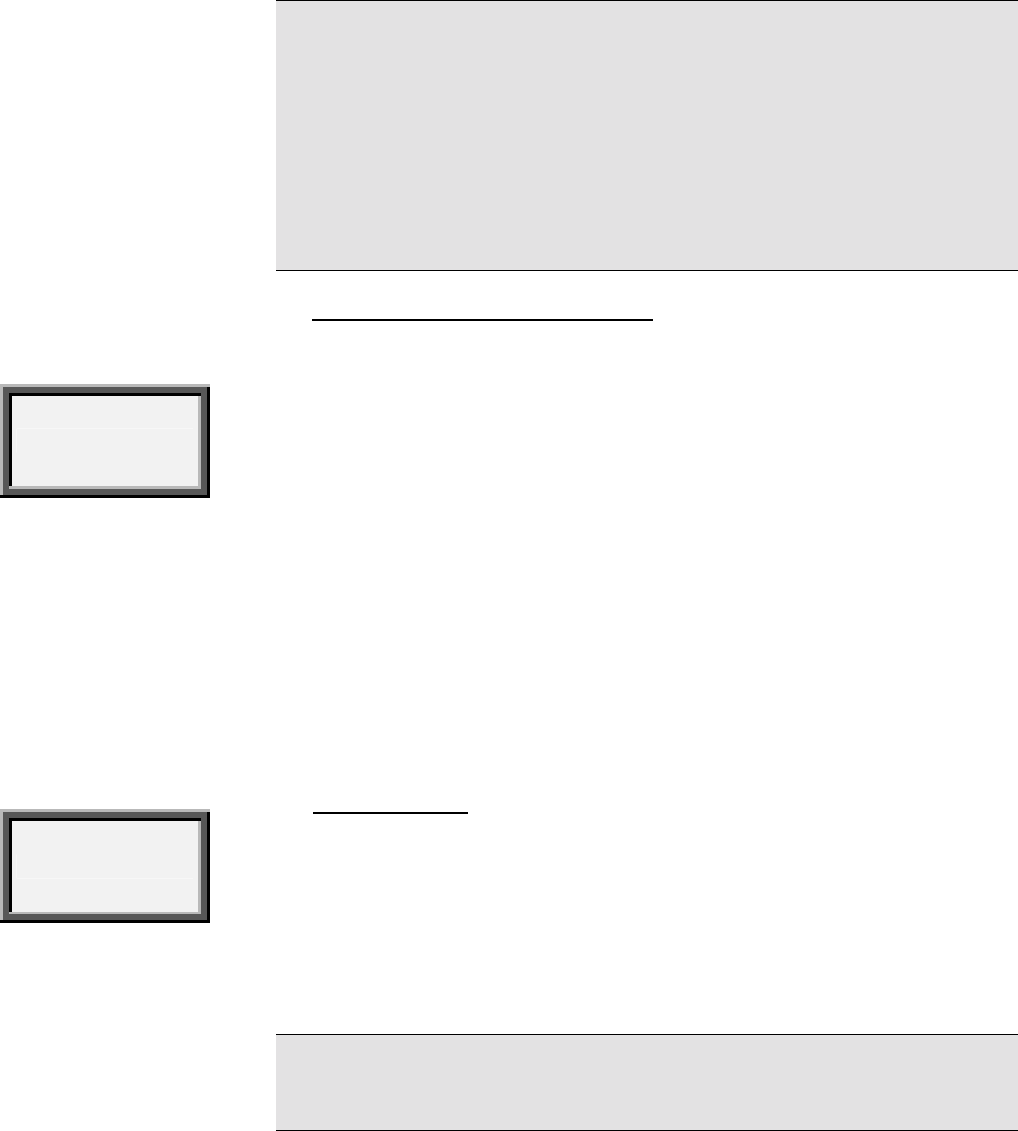

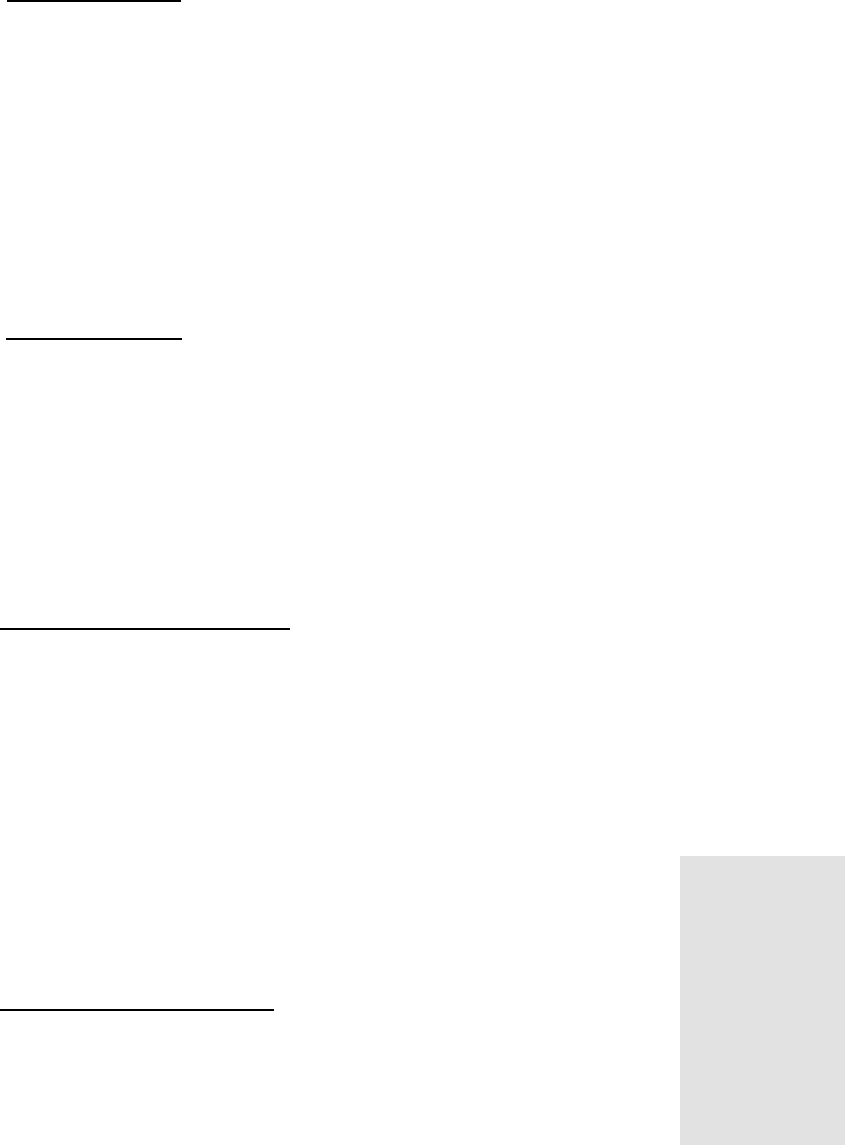

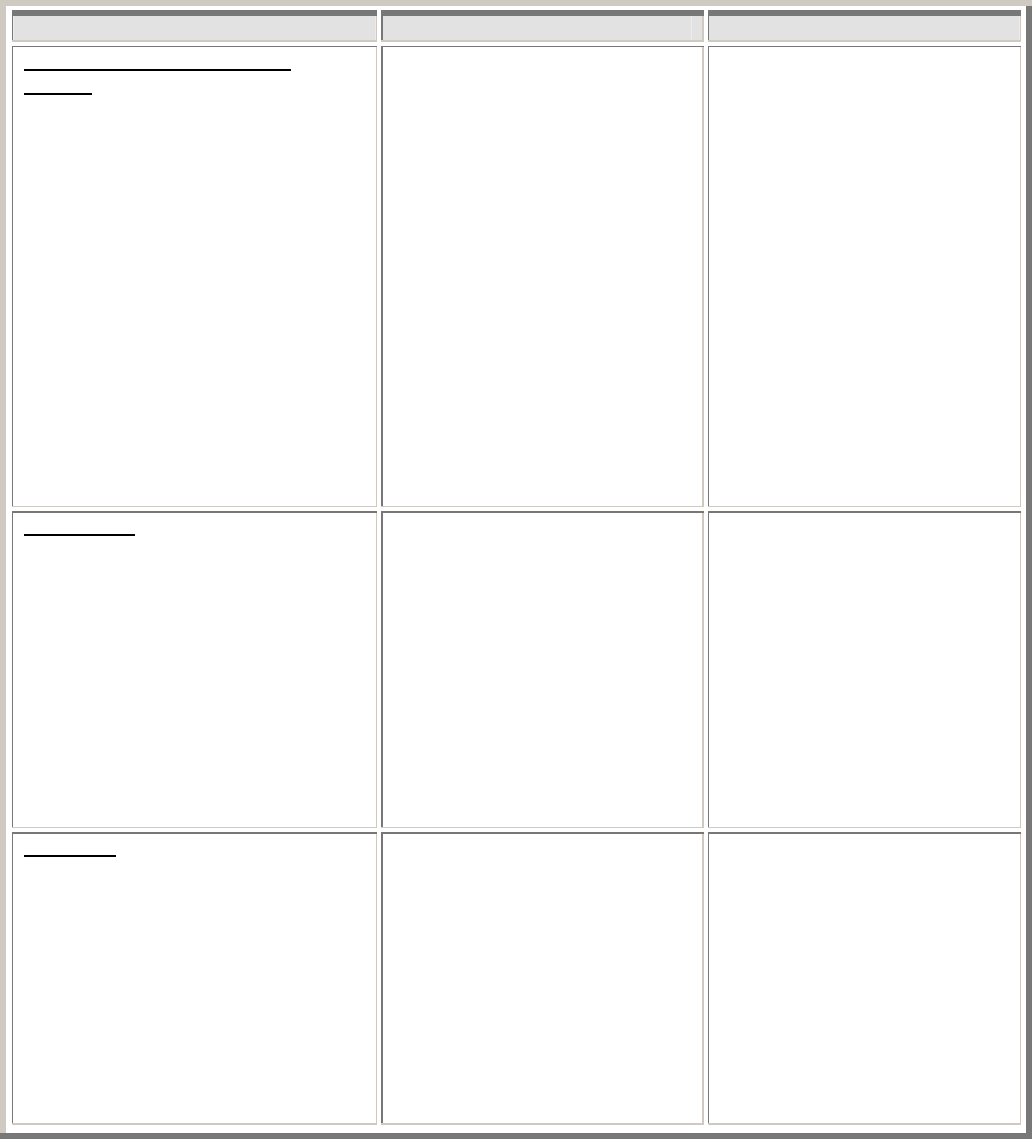

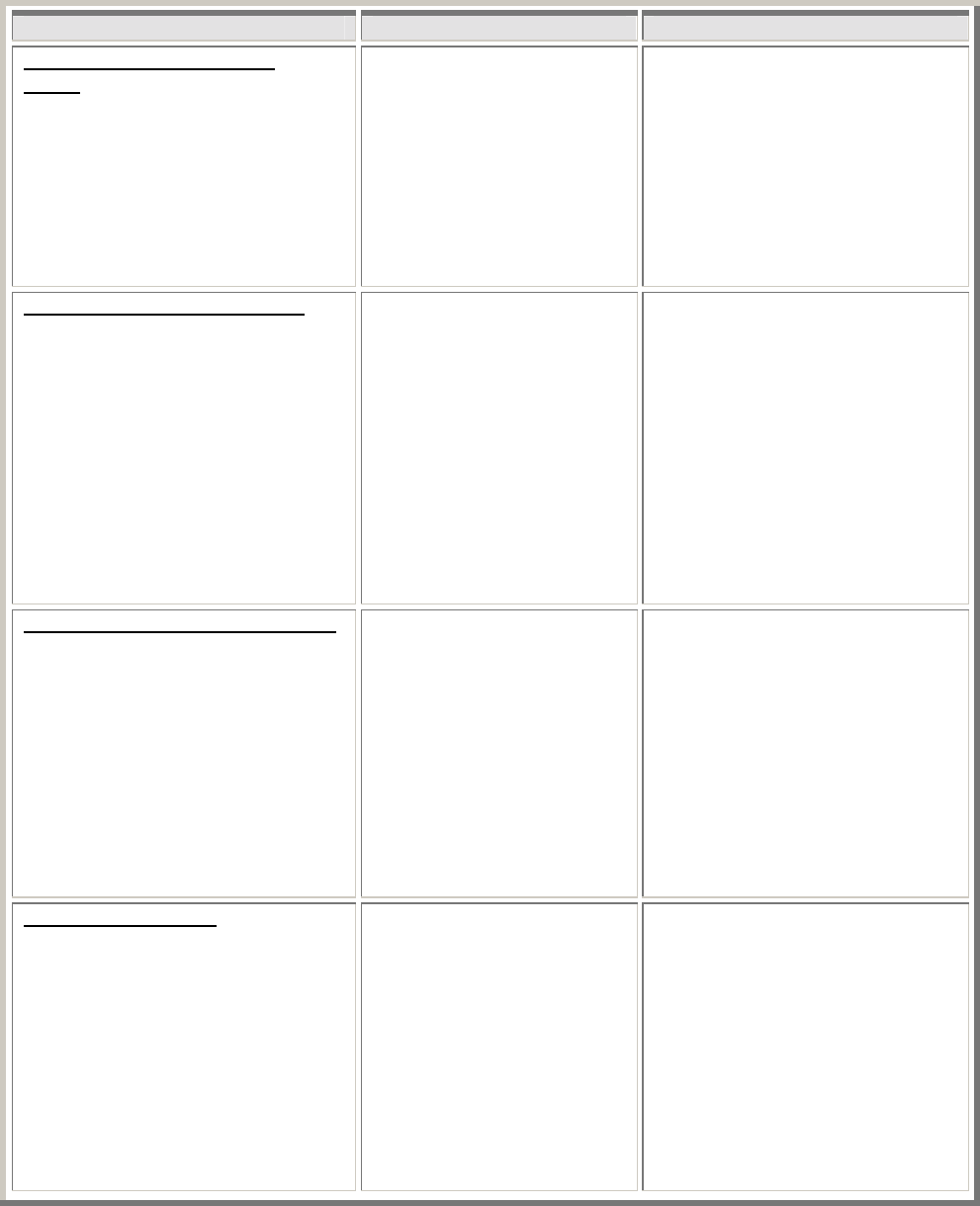

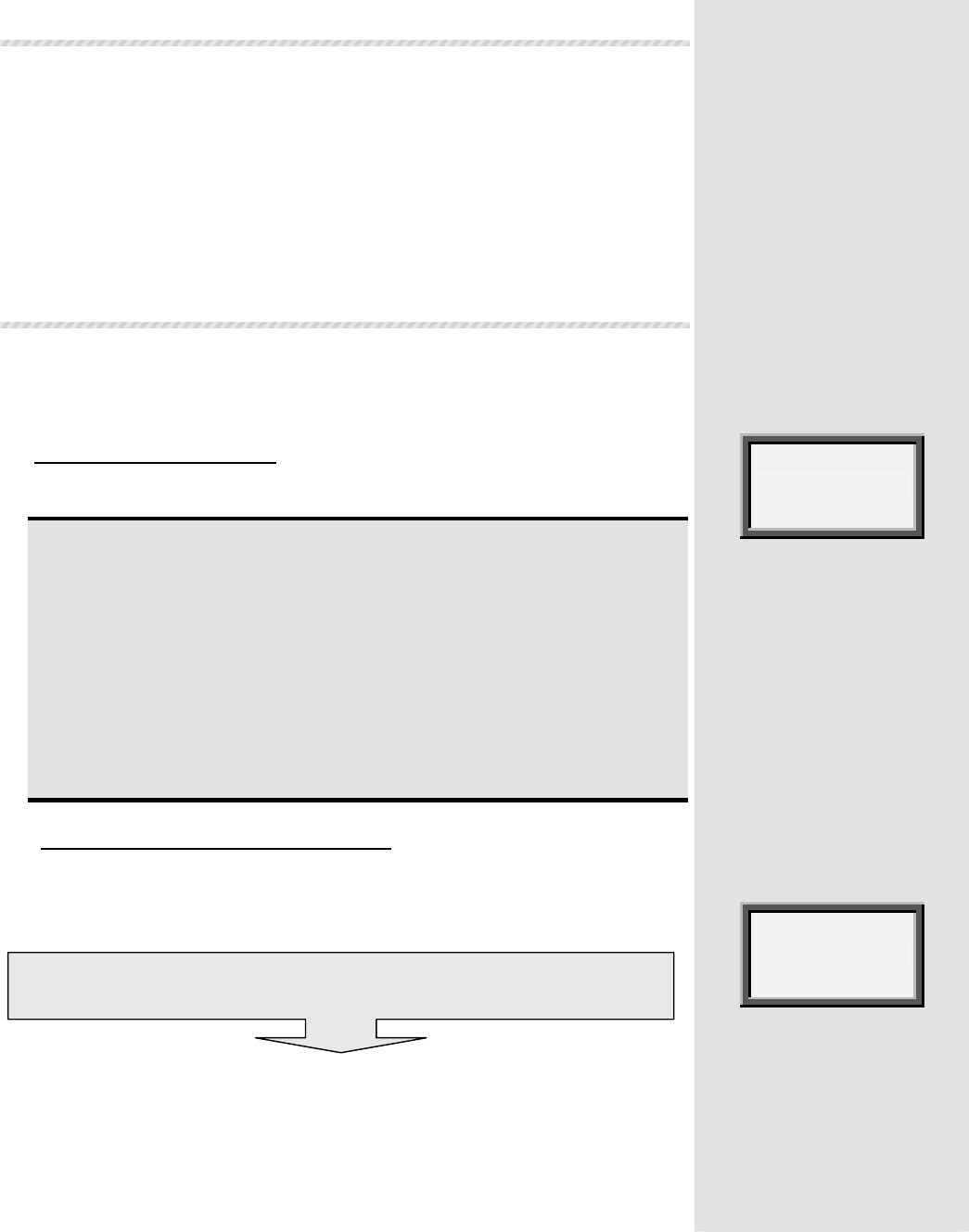

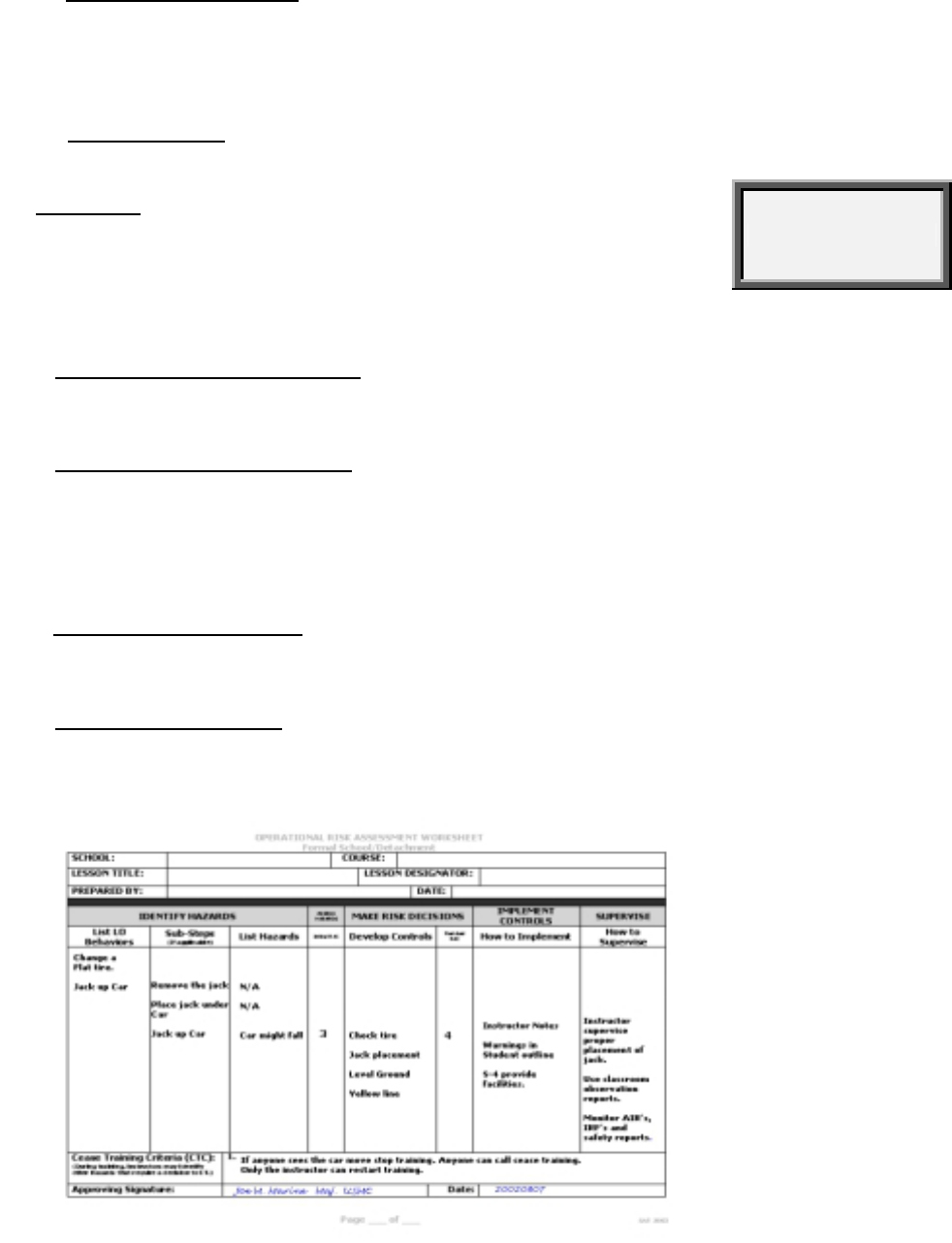

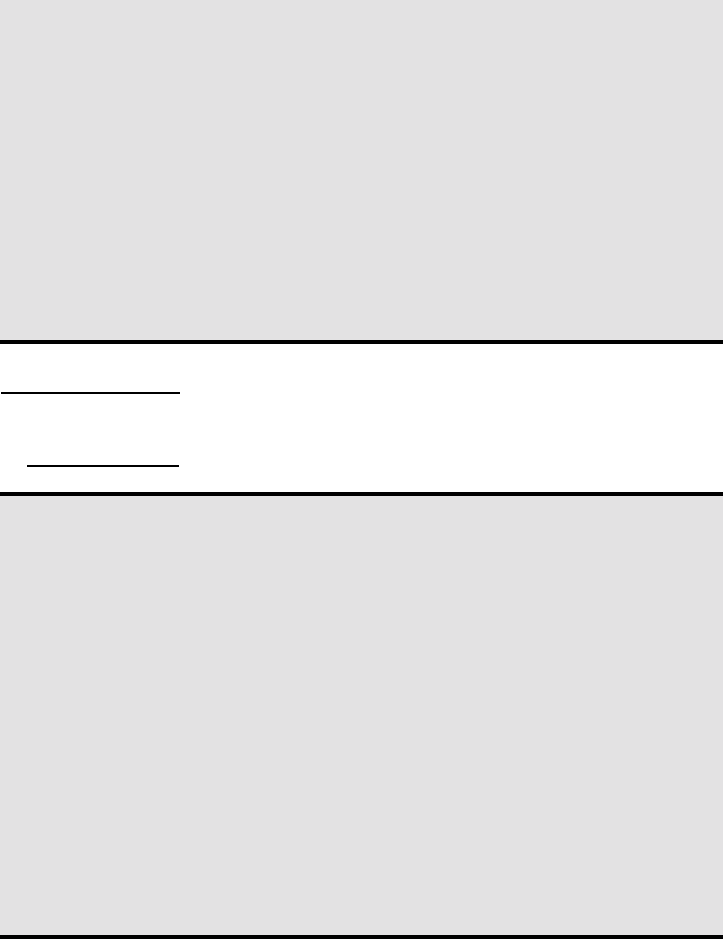

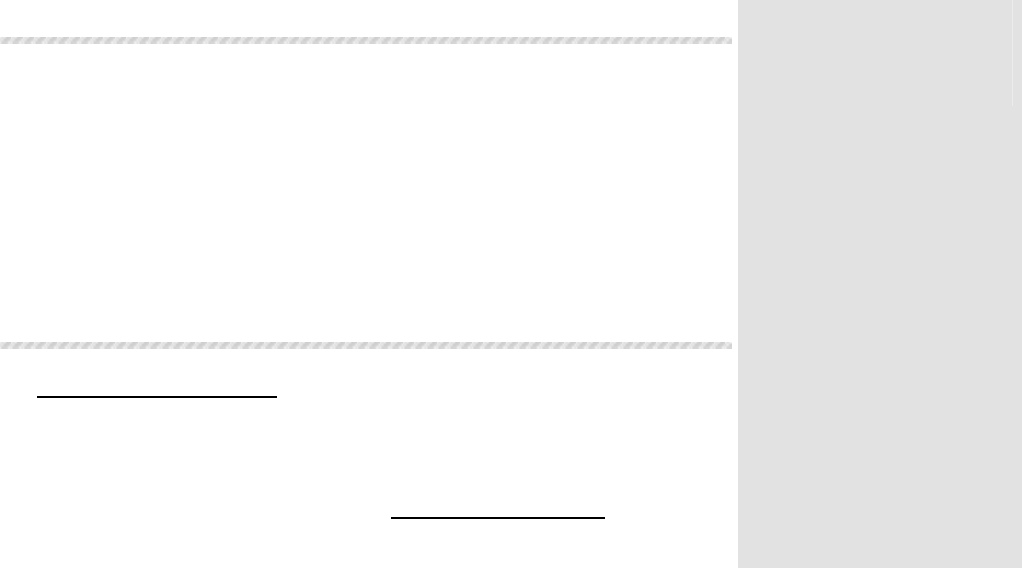

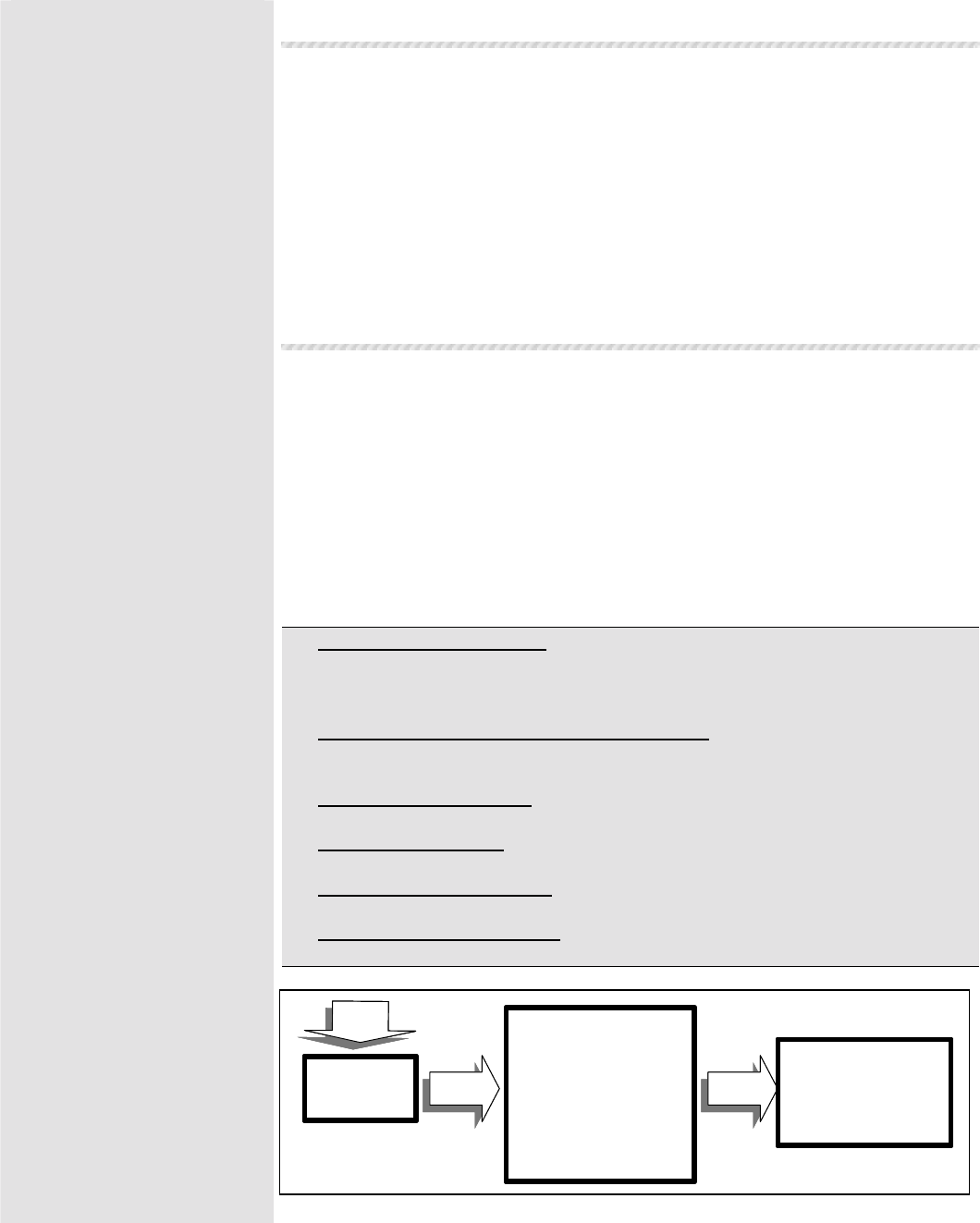

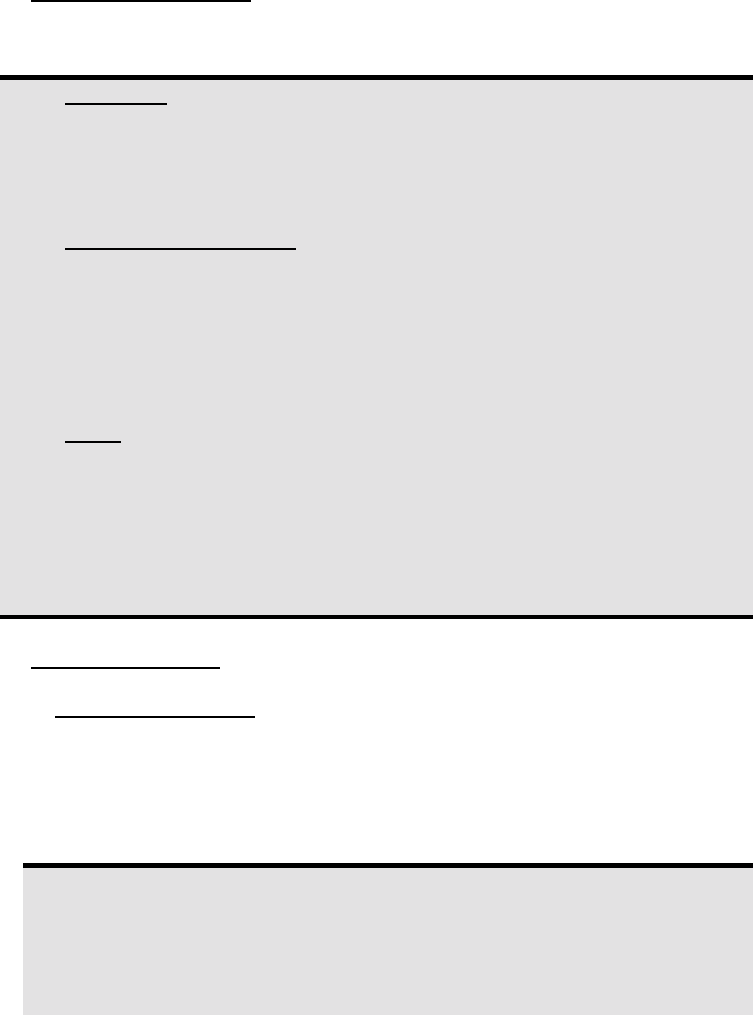

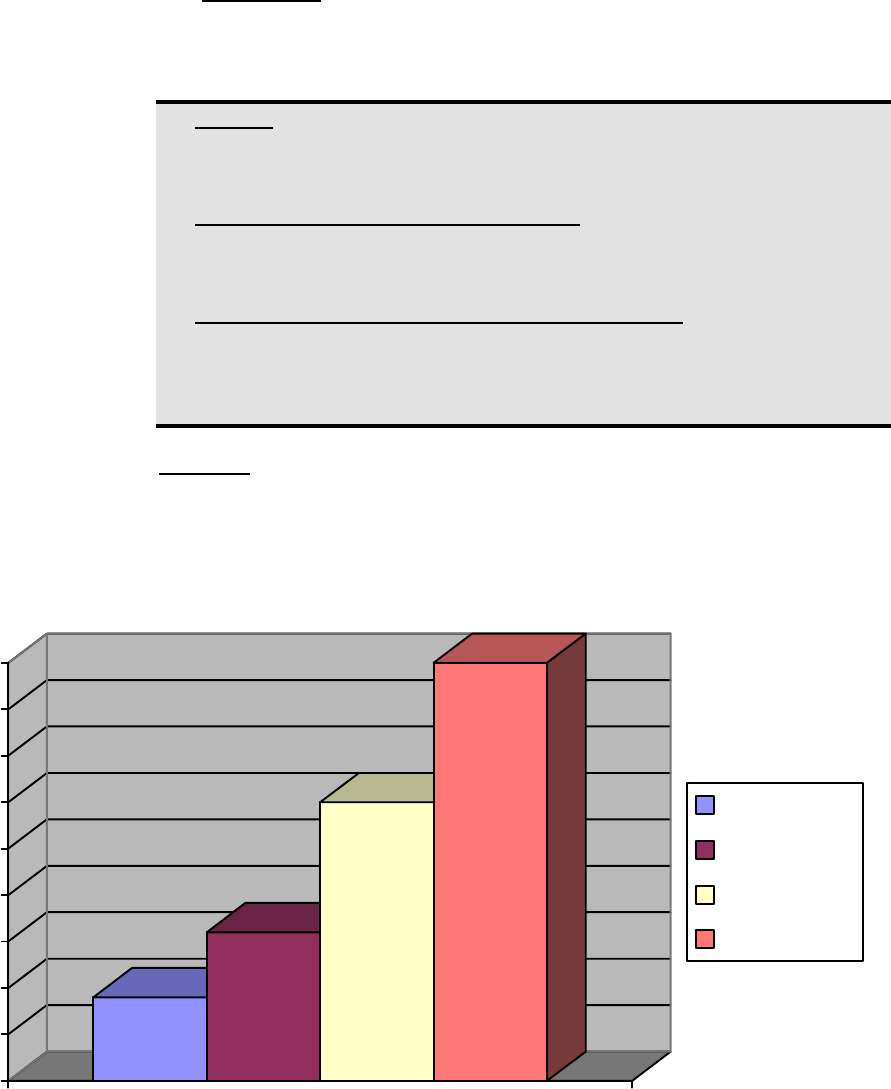

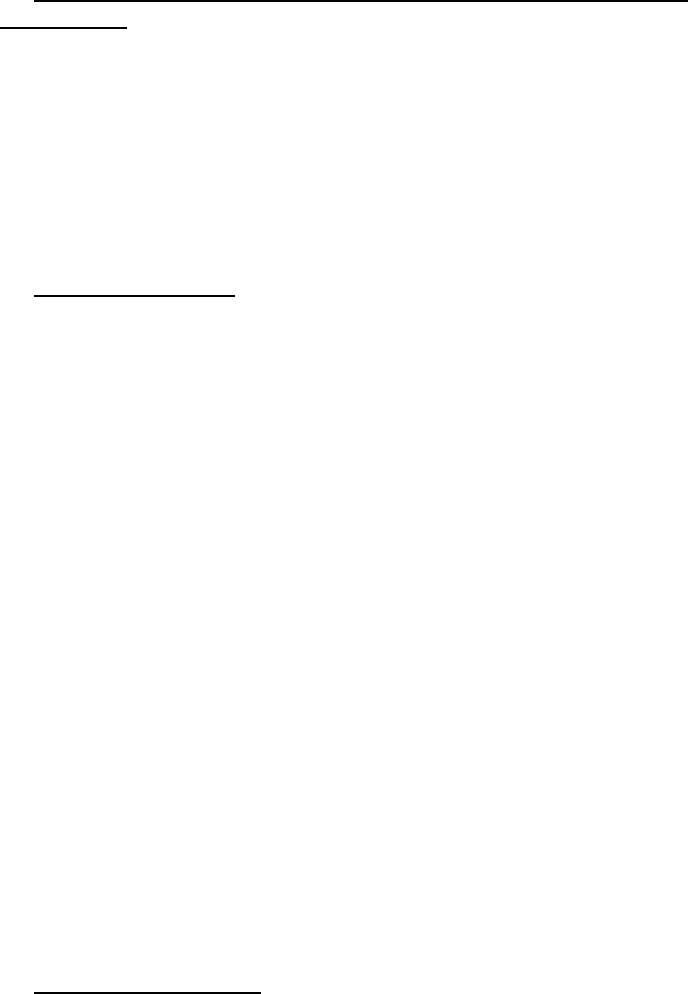

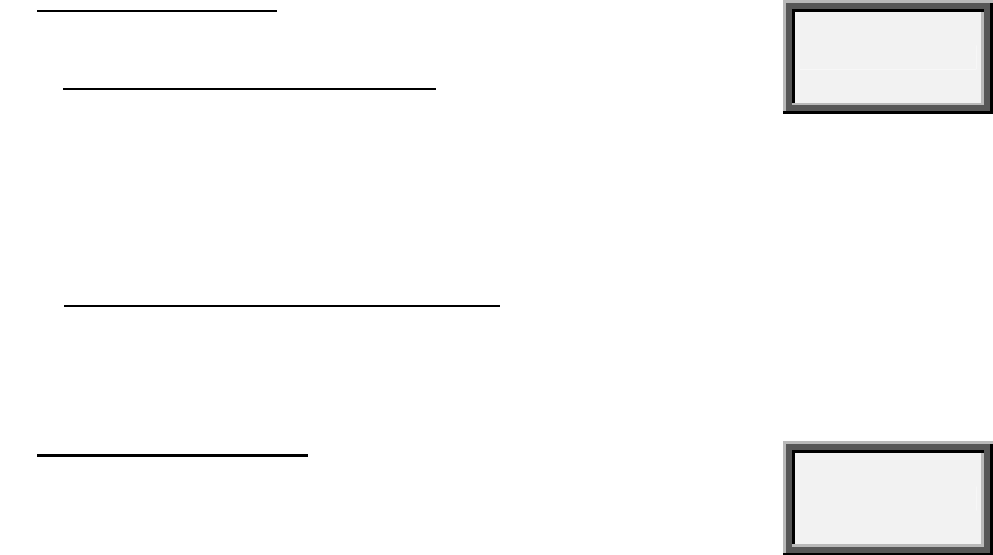

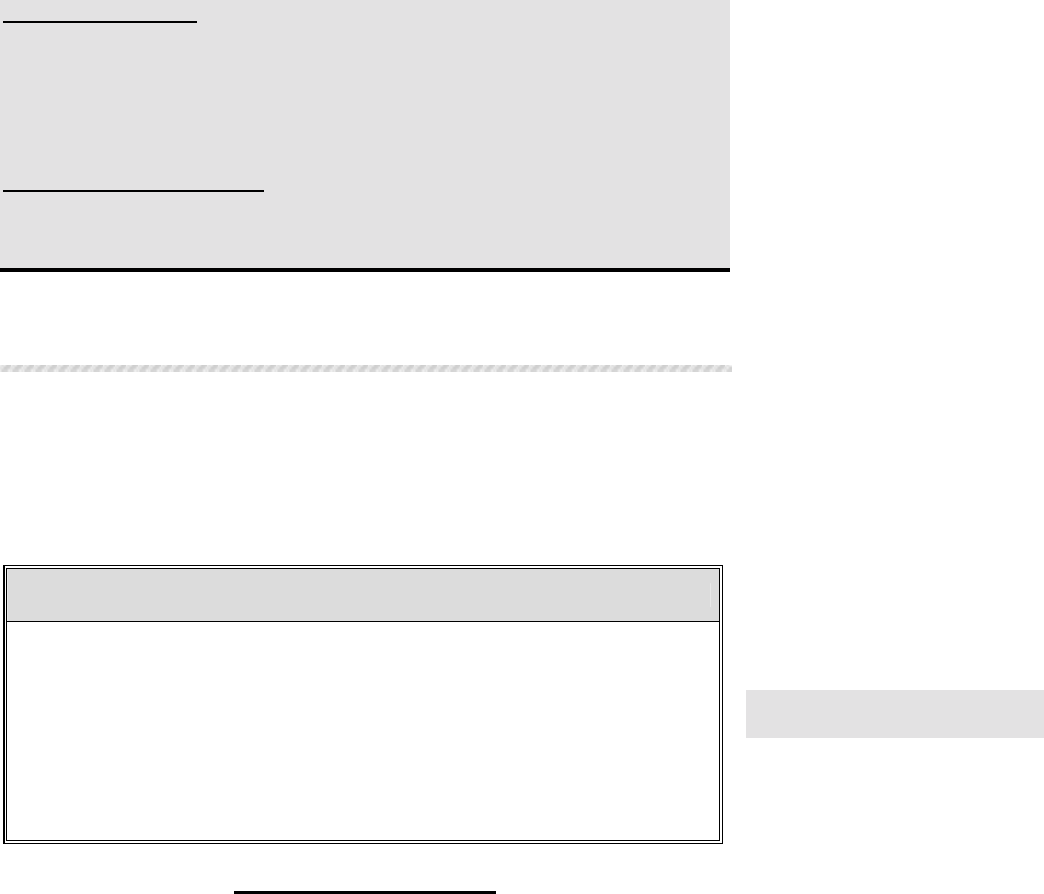

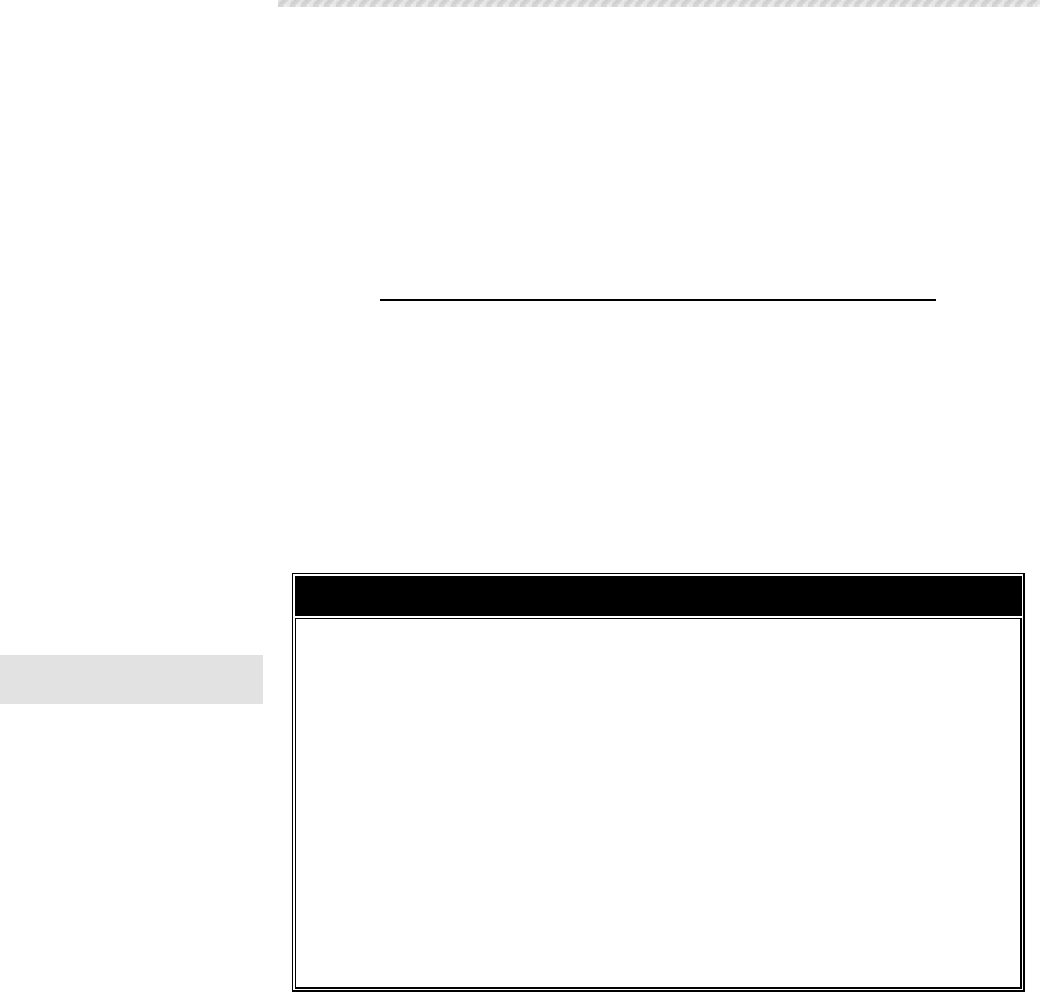

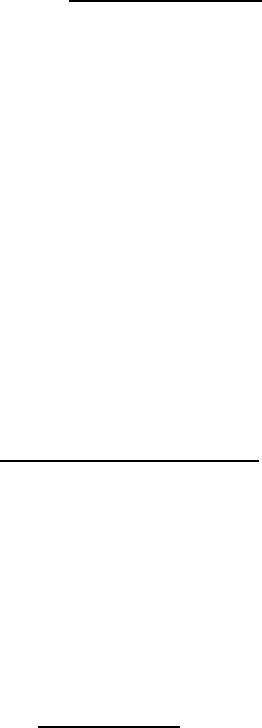

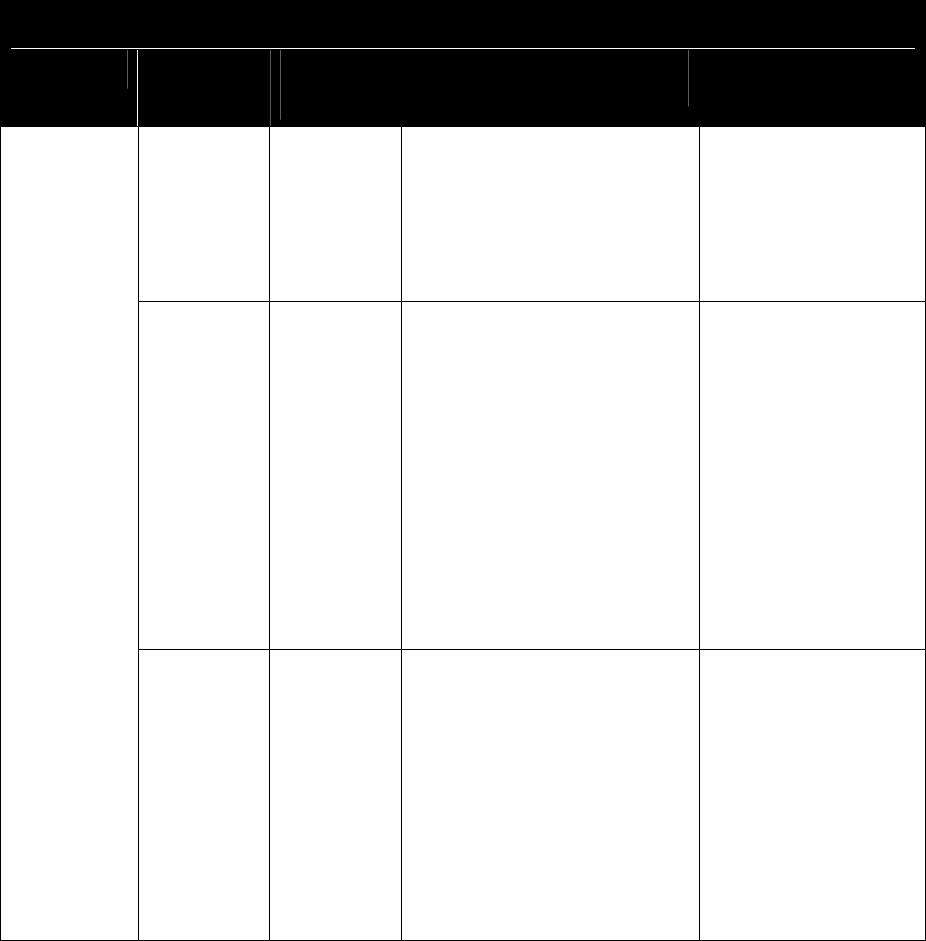

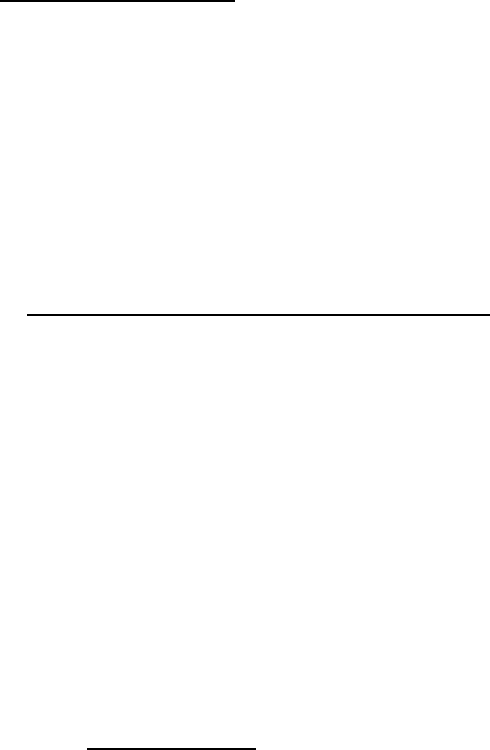

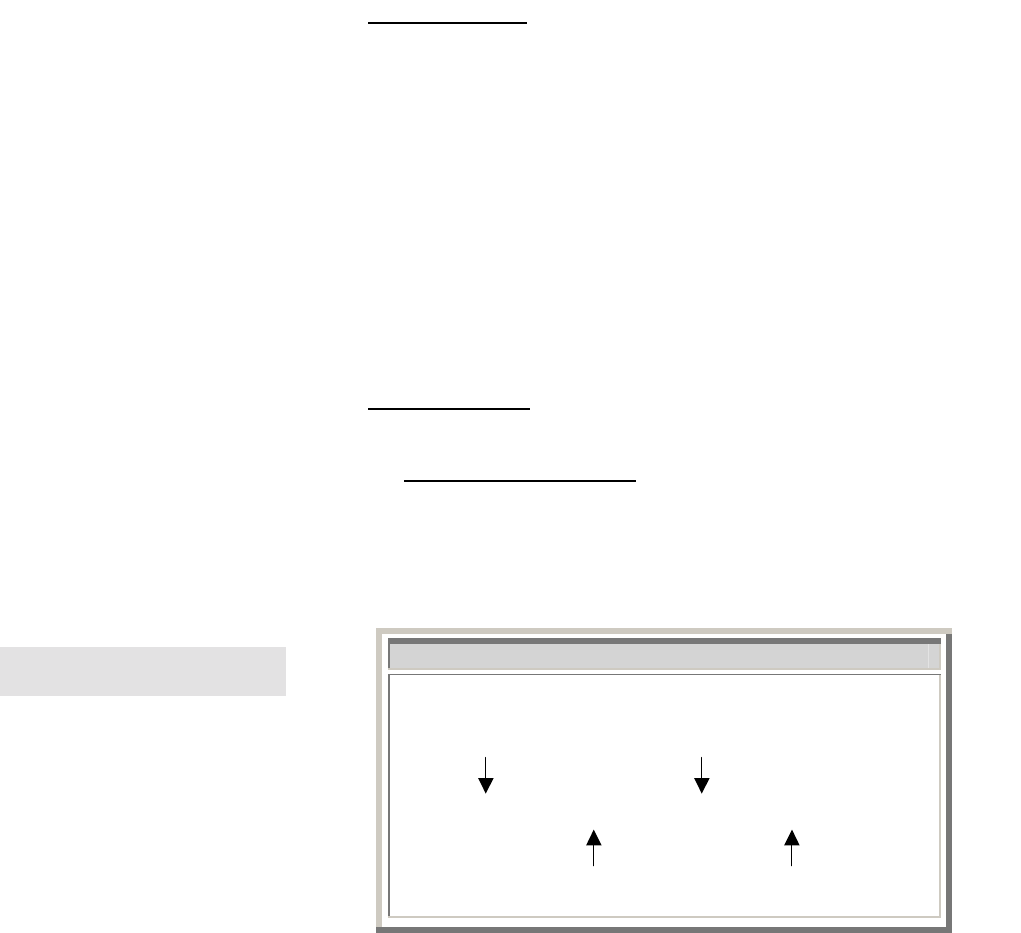

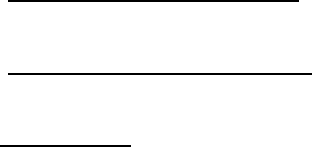

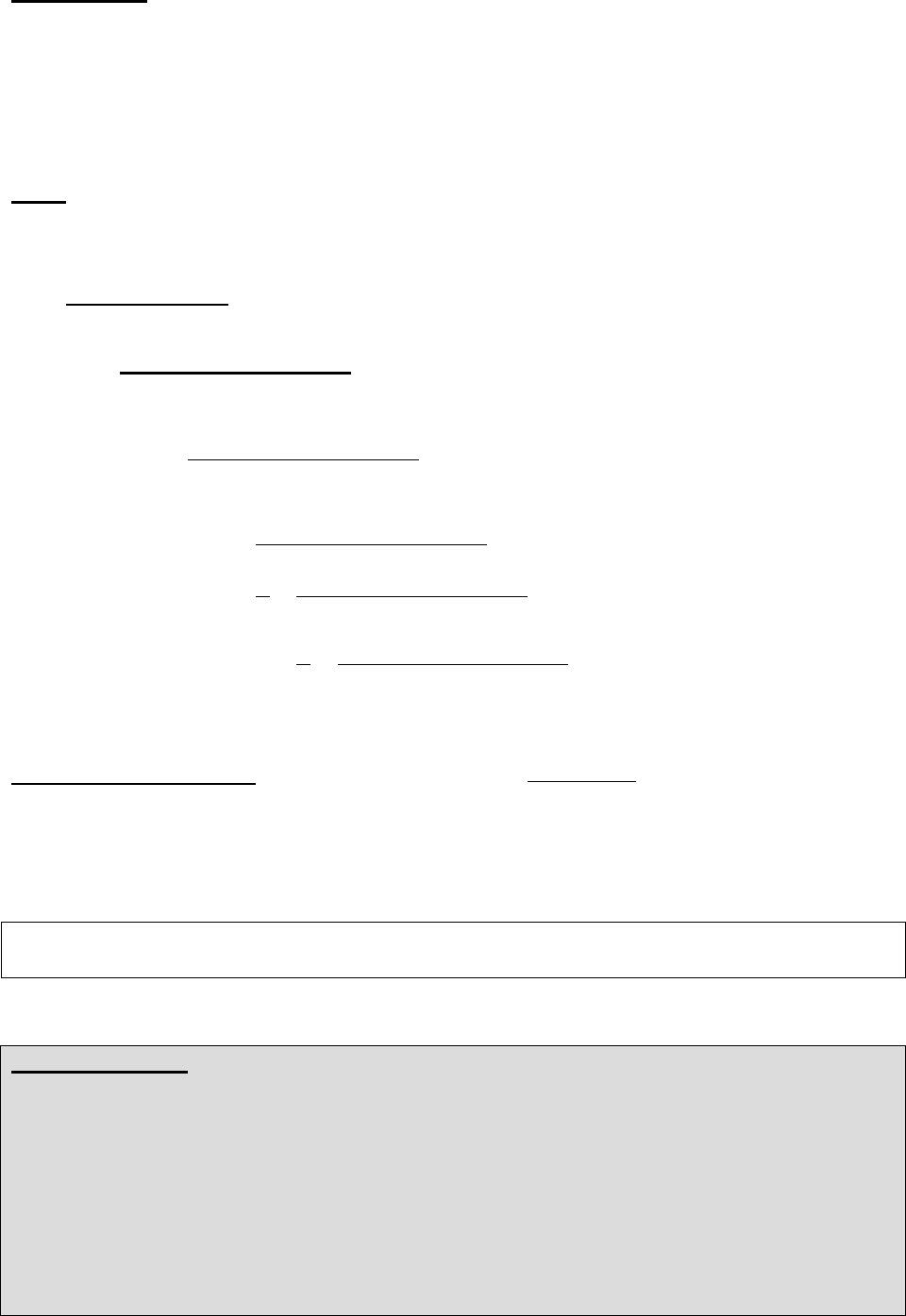

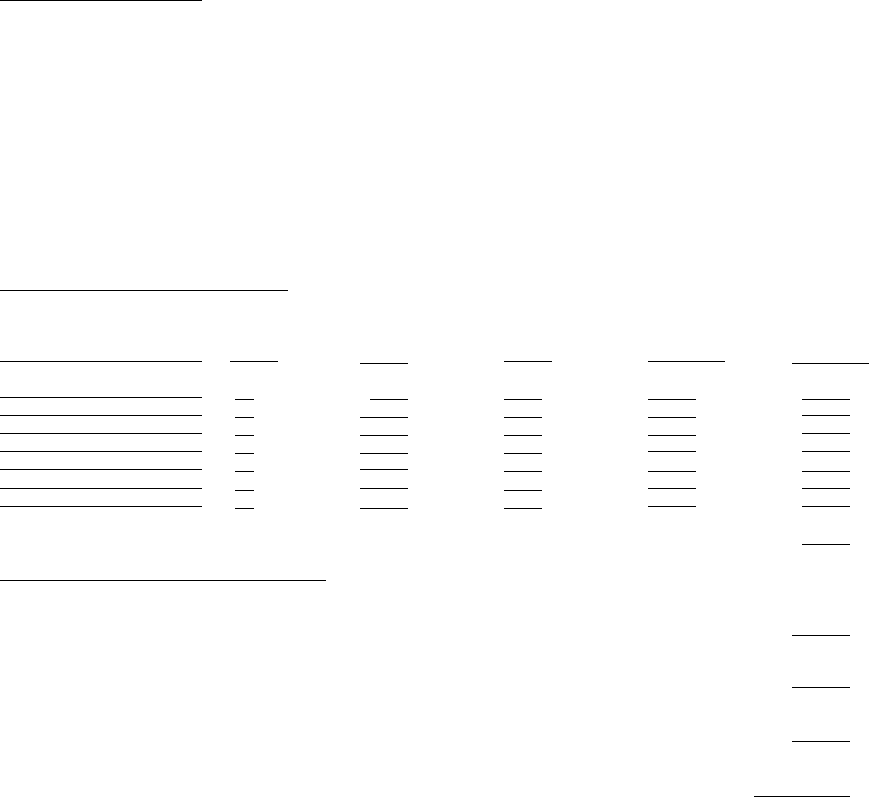

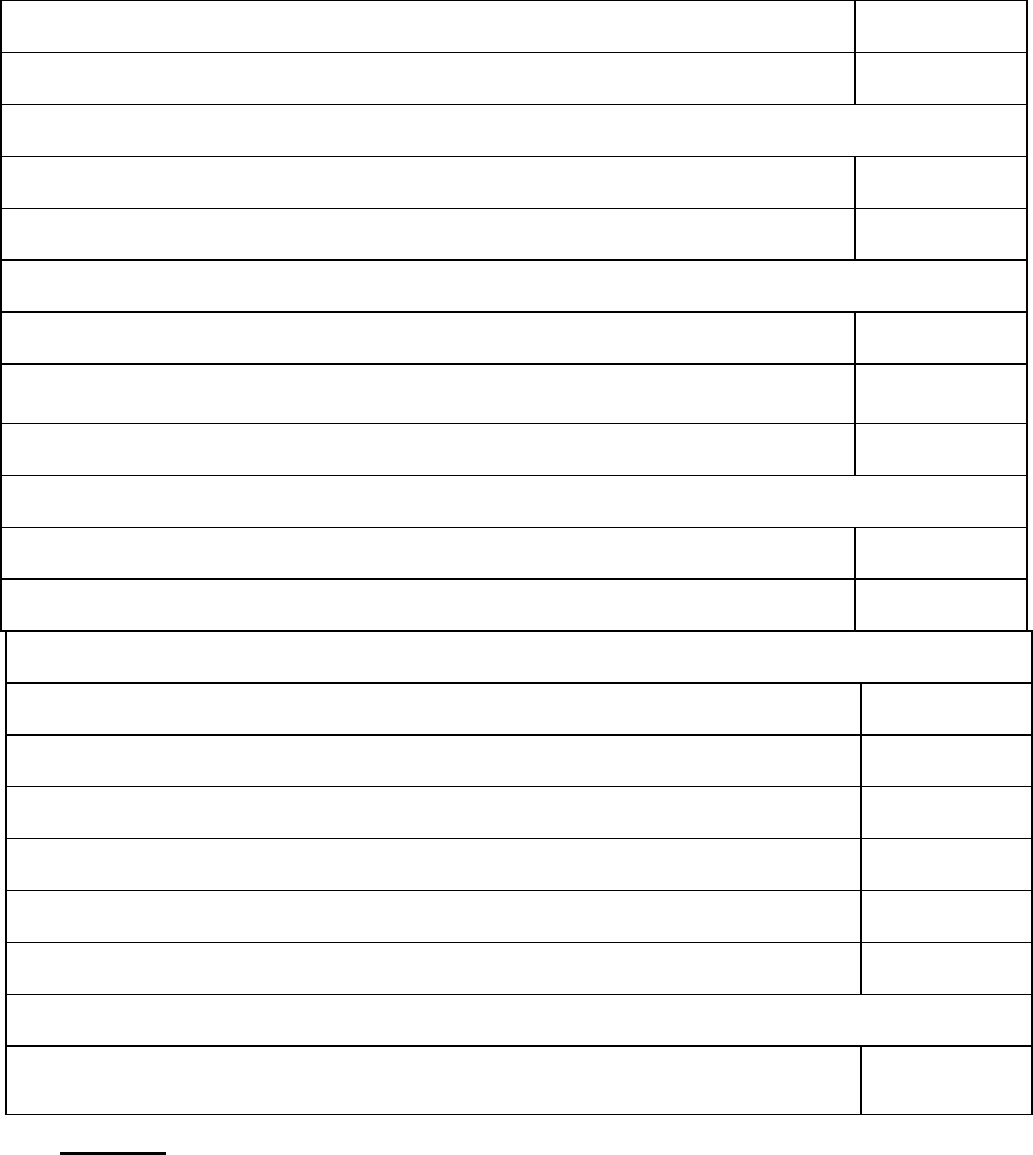

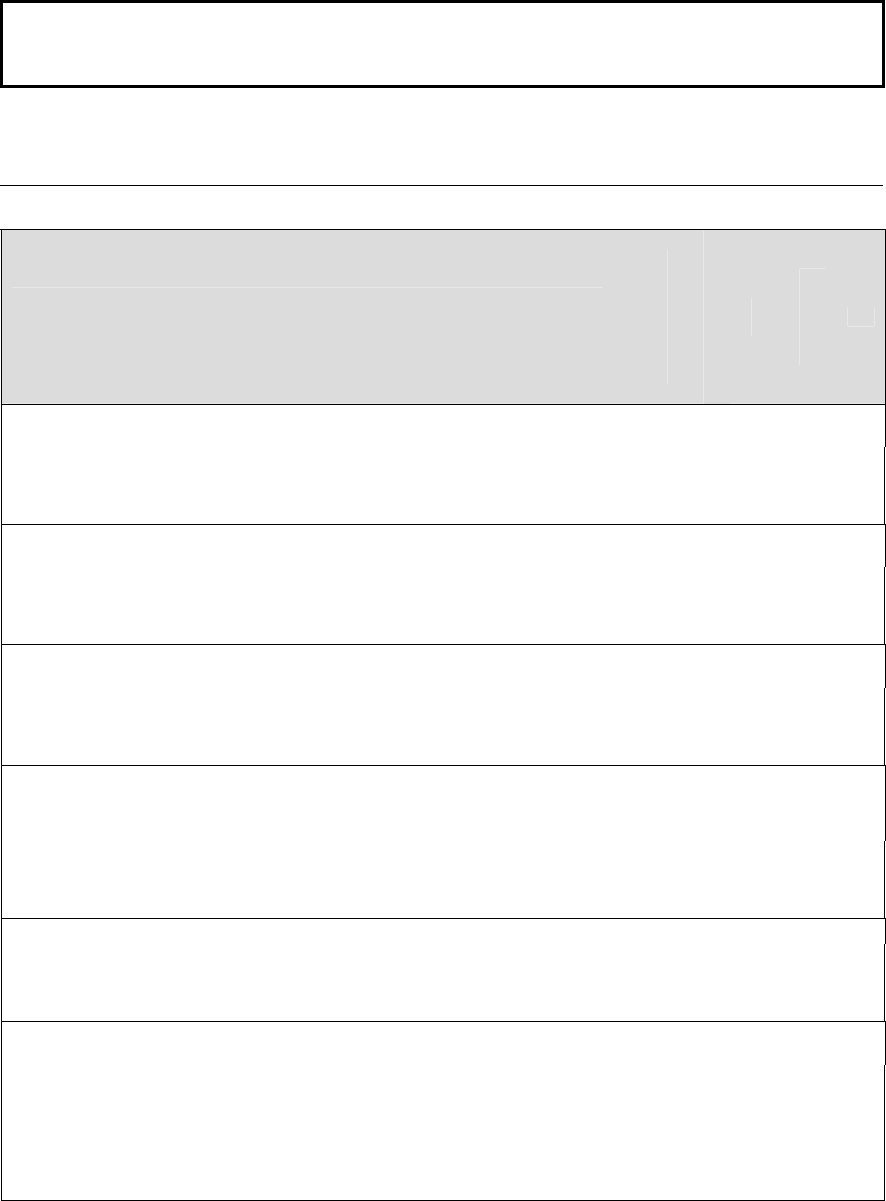

Figure 1-1. INPUT: New Doctrine, New Equipment, Manpower Reqs, OccFld Reorg. PROCESS: Job Analysis, Task Analysis, Determine Instructional Setting. OUTPUT: ITS Order or T&R Manual.


1100. PURPOSE

The purpose of the Analyze Phase is to accurately determine what the Marine

must know and do on the job. Job Analysis is done through a systematic

research process called the Front-End Analysis (FEA) to collect, collate, and report

job performance data. Task analysis is accomplished by convening a Subject

Matter Expert (SME) conference. This conference, attended by representatives

from the operating forces, formal school, occupational field sponsor, and TECOM,

reviews the results of the FEA and produces a draft ITS Order to describe training

standards. SMEs then determine the instructional setting for each task and finally

produce the draft Target Population Description (TPD). The draft ITS/T&R is

then staffed and, when final changes are made, it is published in the MCO 1510

or 3500 series.

The formal schools are responsible for reviewing the signed ITS/T&R to identify

those tasks/events that they are responsible for teaching. The curriculum

developers then enter the relevant tasks into MCAIMS and begin the development

of the Program of Instruction. To accelerate the design and development phases

of the SAT process, schools can begin the process of entering the tasks into

MCAIMS from the draft ITS/T&R that is published immediately following the SME

conference.

As part of instruction, formal schools and detachments design, develop,

implement, and evaluate their curricula based on existing ITS/T&R. The

development of ITS/T&R within the Analyze Phase is unique to TECOM, and is

normally performed under the guidance of Ground Training Branch (GTB) or

Aviation Training Branch (ATB). Formal schools/training detachments within the

Marine Corps will not attempt to develop ITS/T&R independently without prior

approval of TECOM (GTB/ATB).

1. When ITS/T&R already exist for an MOS, the school developing instruction for

that MOS does not have to analyze the job. However, the formal

school/detachment is responsible for reviewing the ITS/T&R for accuracy and

completeness, and for recommending changes to TECOM (GTB/ATB).

2. If the ITS/T&R is awaiting signature following an SME conference, the school

responsible for instruction should obtain authorization from CG, TECOM to

commence course design, development, and implementation based on the

draft training standards.

The results of this phase form the basis for the entire instructional process by

clearly defining the target population, what Marines are actually performing on

the job, what they will need to learn in the formal school, and what will be

learned through managed on-the-job training (MOJT). The Analyze Phase is

concerned with generating an inventory of job tasks, selecting tasks for

instruction, developing performance requirements, and analyzing tasks to

determine instructional setting.

Section 1

A Front-End Analysis is a process utilized to collect, collate, and report job performance.


Section 2

Job analysis involves finding out exactly what the Marine does on the job rather than what the Marine must know to perform the job.

1200. JOB ANALYSIS

The first step in the Analyze Phase is the completion of a Job Analysis that is

conducted through the FEA process. TECOM (GTB) collects, examines, and

synthesizes data regarding each Occupational Field/Military Occupational

Specialty (OccFld/MOS). This data may include time in grade and MOS, career

progression, tasks performed on the job, instructional location, level of

instruction, etc. Job analysis is the collection and organization of data that

results in a clearly defined description of duties, tasks, and indicative

behaviors that define that job. Job analysis involves finding out exactly what

the Marine does on the job rather than what the Marine must know to perform

the job. The product of job analysis is a verified list of all duties and tasks

performed on the job and the identification of those tasks that must be taught

in the formal school/detachment. Once the Job Analysis is complete, an FEA

Report is produced and serves as a key input to the Subject Matter Expert

(SME) conference held to define the training standards and determine

instructional setting.

Job Analysis Requirements

Job analysis begins once a requirement for training has been identified and

validated. Job analysis requirements are typically generated by:

1. The introduction of new or better weapons/support systems.

2. Organizational changes such as changes in MOS structure and career

field realignments.

3. Doctrinal changes required by new laws, Department of Defense (DoD)

requirements, and Marine Corps needs.

4. Evaluations indicating that a change in instruction is required.

5. Direction from higher headquarters.

Task Criteria

A task is a behavior performed on the job. A task is defined by specific criteria

and must:

1. Be a logical and necessary unit of work.

2. Be observable and measurable or produce an observable and

measurable result.

3. Have one action verb and one object.

4. Be a specific act done for its own sake.

5. Be independent of other actions.

6. Have a specific beginning and ending.

7. Occur over a short period of time.
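The seven criteria above can be recorded as a simple checklist when candidate task statements are reviewed. The Python sketch below is an illustration only, not a Marine Corps tool: the class `TaskCriteriaReview`, the function `unmet_criteria`, and the sample statement are hypothetical, and each True/False value stands for a reviewer's judgment rather than anything computed automatically.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class TaskCriteriaReview:
    """A reviewer's judgment of one candidate task statement against the seven criteria."""
    statement: str
    logical_and_necessary_unit_of_work: bool
    observable_and_measurable: bool
    one_action_verb_and_one_object: bool
    done_for_its_own_sake: bool
    independent_of_other_actions: bool
    specific_beginning_and_ending: bool
    short_duration: bool


def unmet_criteria(review):
    """List the criteria the reviewer marked as not met, in checklist order."""
    return [f.name for f in fields(review) if getattr(review, f.name) is False]


# Hypothetical example: a statement that reads more like a duty than a task.
review = TaskCriteriaReview(
    statement="Maintain communication equipment",
    logical_and_necessary_unit_of_work=True,
    observable_and_measurable=True,
    one_action_verb_and_one_object=True,
    done_for_its_own_sake=True,
    independent_of_other_actions=True,
    specific_beginning_and_ending=False,
    short_duration=False,
)

print(unmet_criteria(review))
```

A statement qualifies as a task only when `unmet_criteria` returns an empty list. Any criterion it names indicates that the statement should be reworded or split before it goes on the task list.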


Duty Areas

To facilitate survey of job incumbents and correlation of survey data, closely related

tasks within a task list are grouped by duty area for the purposes of job analysis. A

duty area is an organizer of data consisting of one or more tasks performed within

one functional area. Duties are generally very broad categories. One or more duties

make up a job. A duty may be defined by:

1. a system (e.g., Small Arms Weapons, Mines and Demolitions, Communication

Equipment).

2. a function (e.g., Administrative Functions, Patrolling Functions).

3. a level of responsibility (e.g., Train Logistics Personnel, Supervise Intelligence

Personnel).

1. Initial Task List Development. The first step in Job Analysis is the

development of an initial task list, conducted primarily by TECOM (GTB). This

process can include the initial identification of duties or functional areas in which the

tasks will be organized. An initial task list is developed by a combination of the

following means:

a. Reviewing technical documentation and references pertaining to the job. This

documentation might also be obtained from various sources outside the Marine

Corps. These sources may address similar jobs and tasks and have generated

materials that may be applicable for task list development. These sources include:

1) Other Service Schools. These include Navy, Army, Air Force, or Coast Guard

formal schools, such as U.S. Army Engineer School at Ft. Leonard Wood, MO,

U.S. Army Signal School at Ft. Gordon, GA, and Air Force Communications

Technical School at Lowry Air Force Base, CO.

2) Trade Organizations/Associations. Civilian or industry trade

organizations/associations, such as Society for Applied Learning Technology

(SALT) or Association of Naval Aviation can provide additional resources and

technical support.

3) Defense Technical Information Center (DTIC). DTIC offers training studies,

analyses, evaluations, technical articles and publications.

b. Convening a board of subject matter experts (SME) who can detail the

requirements of a specific job.

c. Conducting interviews with SMEs.

d. Soliciting input from Marine Corps formal schools/detachments and Centers of

Excellence (COE).

STEP 1: Develop an initial task list.


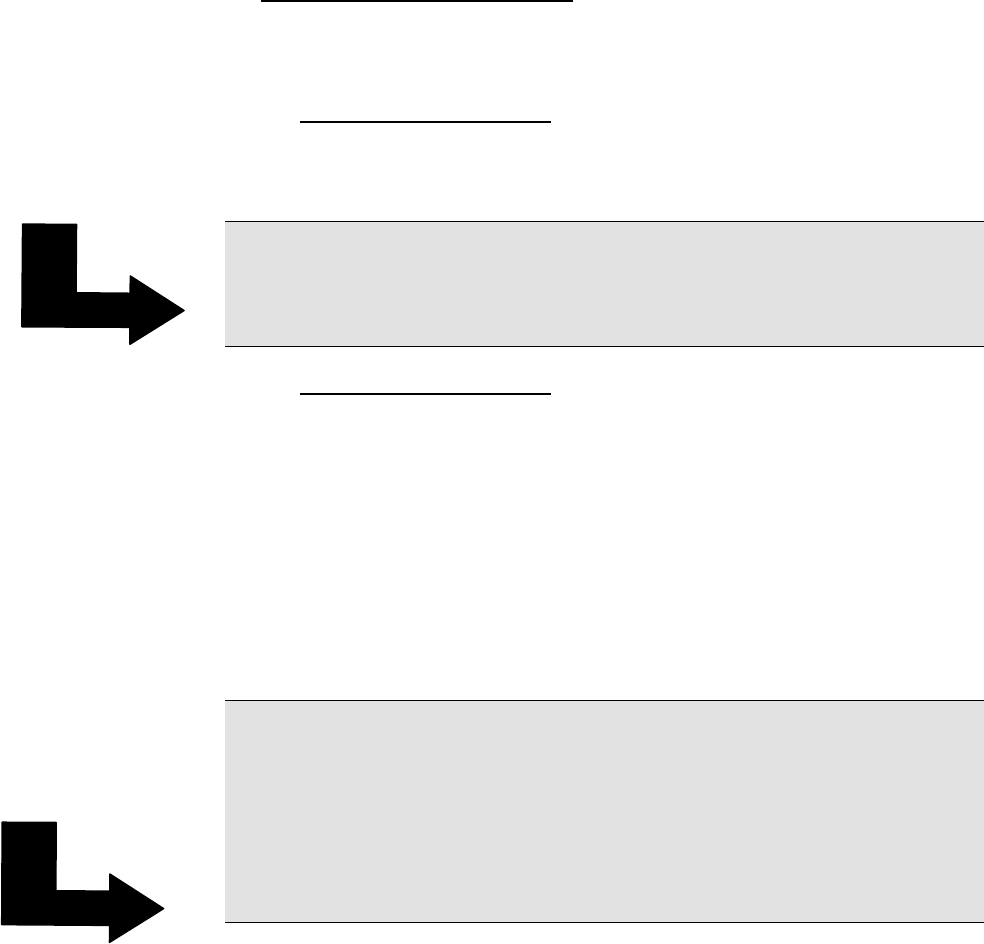

STEP 2: Verify the task list for accuracy and completeness.

STEP 3: Refine and consolidate the task list.

STEP 4: Identify tasks for formal instruction.

2. Task List Verification. The next step in Job Analysis involves

verifying the task list in terms of accuracy and completeness. Verification

ensures that the tasks on the list are actually those performed by members of

the OccFld or MOS. Task list verification is normally conducted by TECOM

(GTB) during the FEA by one or more of the following methods:

a. Administering survey questionnaires to job incumbents.

b. Conducting interviews with SMEs.

c. Observing actual job performance of tasks at the job site.

d. Convening a board of SMEs to review the task list.

3. Refining the Task List. After the data in the previous two steps have

been collected, the task list is refined and consolidated. A final review of the

task list should be made to ensure all tasks meet the criteria for a task

discussed previously in this Section.

4. Identifying Tasks for Instruction. The final step in job analysis

involves identifying specific tasks that may require formal instruction. Some

tasks may not be taught because they are relatively simple to perform, are

seldom performed, or only minimum job degradation would result if the tasks

were not performed. To properly select tasks for instruction, TECOM (GTB)

collects data on several criteria relating to each task. This is accomplished

through administration of a survey questionnaire sent to job incumbents and

SMEs. The data collected represents the judgments of a statistically valid

sample of job incumbents and SMEs who are familiar with the job. The

responses to the survey are analyzed using statistical analysis procedures. The

following criteria may be considered when selecting tasks for instruction and

are included in the survey questionnaire administered by TECOM (GTB).

a. Percentage of jobholders performing the task.

b. Percentage of time spent performing the task.

c. Criticality of the task to the job.

d. Frequency of task performance.

e. Probability of inadequate performance.

f. Task learning difficulty.

g. Time between job entry and task performance (task delay

tolerance).

h. Resource constraints at the schoolhouse.

Survey responses to each of these criteria are then analyzed and a Front-End

Analysis Report (FEAR) is produced that will assist in the task analysis and

determination of instructional setting.
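The kind of summary such an analysis might produce can be sketched as follows. This Python example rests on stated assumptions and is not the statistical procedure used by TECOM (GTB): the sample tasks, the 1-to-5 rating scale, the function names, and the cutoff values are all hypothetical. It averages survey ratings per task and criterion, then lists tasks whose averages meet the illustrative cutoffs.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical survey rows: (task, criterion, rating on an assumed 1-5 scale).
RESPONSES = [
    ("Install antenna", "criticality", 5),
    ("Install antenna", "criticality", 4),
    ("Install antenna", "learning_difficulty", 4),
    ("Install antenna", "learning_difficulty", 3),
    ("Clean work area", "criticality", 2),
    ("Clean work area", "criticality", 1),
    ("Clean work area", "learning_difficulty", 1),
    ("Clean work area", "learning_difficulty", 1),
]


def mean_ratings(responses):
    """Return {task: {criterion: mean rating}} from raw survey rows."""
    grouped = defaultdict(lambda: defaultdict(list))
    for task, criterion, rating in responses:
        grouped[task][criterion].append(rating)
    return {
        task: {criterion: mean(ratings) for criterion, ratings in by_criterion.items()}
        for task, by_criterion in grouped.items()
    }


def candidate_tasks(summary, min_criticality=3.0, min_difficulty=2.0):
    """Tasks whose mean ratings meet both illustrative cutoffs (assumed values)."""
    return sorted(
        task
        for task, scores in summary.items()
        if scores.get("criticality", 0) >= min_criticality
        and scores.get("learning_difficulty", 0) >= min_difficulty
    )


summary = mean_ratings(RESPONSES)
print(candidate_tasks(summary))  # ['Install antenna']
```

In practice the remaining criteria (percentage performing, frequency, task delay tolerance, resource constraints) would be summarized in the same way, and the resulting figures would inform SME judgment rather than replace it.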


1300. TASK ANALYSIS

The second step in the Analyze Phase is to conduct a Task Analysis that sequences

and describes observable, measurable behaviors involved in the performance of a

task or job. Task analysis is conducted by an SME conference. It involves the

systematic process of identifying specific tasks to be trained, and a detailed analysis

of each of those tasks in terms of frequency, difficulty, and importance.

The purpose of task analysis is to:

1. Define the task list based on SME input.

2. Develop ITS/T&R that identify the conditions, standards, and

performance steps necessary for the successful completion of a task.

3. Determine where the tasks will be instructed (formal school or via MOJT

at the unit level).

4. Produce a target population description that will guide the formal school

or unit in the preparation of instruction/training.

Below are questions to ask when performing a Task Analysis:

1. How difficult or complex is the task?

2. What behaviors are used in the performance of the job?

3. How frequently is the task performed?

4. How critical is the task to the performance of the job?

5. To what degree is the task performed individually, or to what degree is the

task part of a set of collective tasks?

6. If a subset of a set of collective tasks, what is the relationship between the

various tasks?

7. What is the consequence if the task is performed incorrectly or is not

performed at all?

8. To what extent can the task be trained on the job?

9. What level of task proficiency is expected following training?

10. How critical is the task?

11. What information is needed to perform the task? What is the source of

information?

12. What are the performance requirements?

13. Does execution of the task require coordination between other personnel

or with other tasks?

14. Are the demands (perceptual, cognitive, psychomotor or physical) imposed

by the task excessive?

15. How often is the task performed during a specified time-frame (e.g., daily,

weekly, monthly, yearly)?

16. How much time is needed to perform this task?

17. What prerequisite skills, knowledge, and abilities are required to perform

the task?

18. What are the current criteria for acceptable performance?

19. What are the desired criteria?

20. What behaviors distinguish good performers from poor performers?

21. What behaviors are critical to the performance of the task?

SECTION

3

Task Analysis

identifies specific

tasks to be trained,

and a detailed analysis

of each of those tasks

in terms of frequency,

difficulty, and

importance.

Systems Approach To Training Manual Analyze Phase

Chapter 1 1-7

1. Training Standard Development. Once the task list is finalized,

performance requirements must be developed for every task selected for

instruction. In the Marine Corps, performance requirements for all

occupational field specialties (OccFld) are defined by Individual Training

Standards (ITS). ITS published in either an ITS Order or a Training and

Readiness (T&R) Manual serve as the basis for all individual instruction in units

and in formal schools/detachments. Formal schools/detachments are

responsible for teaching the training standards designated for instruction in

the formal school. These ITS/T&R events appear as tasks in Appendix B of

the Course Descriptive Data (CDD) produced by the formal school (see

Chapter 3).

2. Development of ITS/T&R. Once tasks are verified and the task

lists are refined, ITS/T&R may be developed. Often, many elements of the

ITS (e.g., performance steps, conditions, standards) are collected while the

task list is being refined. This enables a better understanding of the task and

can serve as a check to ensure the tasks are actually performed on the job. A

working group conference composed of subject matter experts (SME) is

particularly effective for examining how a task is to be completed by

identifying the performance steps and the sequence of those performance

steps, conditions, and standards necessary to successfully accomplish the

task.

a. ITS Components

1) Task. The task describes what the job holder must do.

2) Condition(s). The conditions set forth the real-world

circumstances in which the tasks are to be performed. Conditions

describe the equipment and resources needed to perform the task

and the assistance, location, safety considerations, etc., that

relate to performance of the task.

3) Standard(s). Standards provide the proficiency level expected

when the task is performed. Standards can measure a product, a

process, or a combination of both. Standards must reflect a

description of how well the task must be performed. This standard

can cite a technical manual or doctrinal reference (e.g., ...in

accordance with FMFM 1-3), or the standard can be defined in

terms of completeness, time, and accuracy.

4) Performance Step(s). Performance steps specify the actions

required to accomplish a task. Performance steps follow a logical

progression.

5) Reference(s). References are doctrinal publications (e.g.,

technical manuals, field manuals, Marine Corps Orders) that

provide guidance in performing the task in accordance with the

given conditions and standards. References cited should be

current and readily available to the Marine.

6) Administrative Instructions. Administrative instructions provide the

instructor with special circumstances relating to the ITS, such as

simulation requirements and safety or real world limitations, which may

be a prerequisite to successful accomplishment of the ITS.

b. Composition of a T&R Event. A T&R event contains the following

components:

1) Event Code. The event code is a three-letter and three-digit

designator. The three-letter code is used for grouping events

according to their functional area. For collective events, these

groupings are derived directly from the community’s METs. The

three-digit code is used to arrange events in a progressive sequence.

The purpose of coding events is to provide Marines with a simplified

system for planning, tracking, and recording unit and individual

training accomplishments.

a) Functional Area Grouping. Categorizing events with the use of

a recognizable three-letter code makes the type of skill or

capability being referenced fairly obvious. Examples include DEF

(defensive tactics), MAN (maneuver), NBC (nuclear, biological, and

chemical), etc.

b) Sequencing. A numerical code is assigned to each training

event. The higher the number, the more advanced the capability

or skill being evaluated. For example, PAT-201 (patrolling) could

be patrolling conducted at the squad level, PAT-240 could be

patrolling at the platoon-level, PAT-301 could be patrolling at the

battalion level, etc.

2) Event Description. The event description is a narrative description

of the training event.

3) Tasks. This is a listing of the tasks that are done together to accomplish

the training event. Tasks are defined on page 1.3. There are

normally multiple training tasks contained in each event. Tasks may

or may not be completed sequentially.

4) Condition. Condition refers to the constraints that may affect event

performance in a real-world environment. It includes equipment,

tools, materials, environmental and safety constraints pertaining to

event completion.

5) Standard. The standard is the metric for evaluating the effectiveness

of the event performance. It identifies the proficiency level for the

event performance in terms of accuracy, speed, sequencing, and

adherence to procedural guidelines. It establishes the criteria of how

well the event is to be performed.

6) Performance Steps. Performance steps specify the actions required

to accomplish a task. Performance steps follow a logical progression,

and should be followed sequentially, unless otherwise stated.

Normally, performance steps are listed only for 100-level individual

T&R events (those that are taught in the entry-level MOS school), but

may be included in upper-level events when appropriate.

7) Prerequisite(s). Prerequisites are the listing of academic training

and/or T&R events that must be completed prior to attempting

completion of the event.

8) Reference(s). References are the listing of doctrinal or reference

publications that may assist the trainees in satisfying the performance

standards and the trainer in evaluating the performance of the event.

9) Ordnance. Each event will contain a listing of ordnance types

and quantities required to complete the event.

10) External Support Requirements. Each event will contain a

listing of the external support requirements needed for event

completion (e.g., range, support aircraft, targets, training

devices, other personnel, and non-organic equipment).

11) Combat Readiness Percentage (CRP). The CRP is a

numerical value used in calculating training readiness. The CRP

value for each event is determined by that event’s overall

importance within the training syllabus for that unit,

occupational specialty, or billet.

12) Sustainment Interval. The period, expressed in number of

months, between evaluation or retraining requirements to

refresh perishable skills and assure readiness. Skills and

capabilities acquired through the accomplishment of training

events are to be refreshed at pre-determined intervals. Those

intervals, known as sustainment intervals, are developed at the

respective T&R conference to standardize currency

requirements for Marines to maintain proficiency.
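
The event code and sustainment interval described above can be illustrated with a short Python sketch. It is not drawn from any T&R Manual. It assumes the hyphenated three-letter, three-digit layout shown in the PAT-201 example, and it assumes that an event comes due a whole number of calendar months after it was last completed. The names EventCode, parse_event_code, add_months, and is_due are hypothetical.

```python
import calendar
import re
from datetime import date
from typing import NamedTuple


class EventCode(NamedTuple):
    functional_area: str  # three-letter grouping, e.g. "PAT"
    sequence: int         # three-digit progressive sequence, e.g. 201


# Assumed layout: three letters, a hyphen, three digits (as in PAT-201).
_EVENT_CODE = re.compile(r"([A-Z]{3})-(\d{3})")


def parse_event_code(code: str) -> EventCode:
    """Split a code such as 'PAT-201' into functional area and sequence."""
    match = _EVENT_CODE.fullmatch(code.strip().upper())
    if match is None:
        raise ValueError(f"not a three-letter, three-digit event code: {code!r}")
    return EventCode(match.group(1), int(match.group(2)))


def add_months(start: date, months: int) -> date:
    """Return the date `months` calendar months after `start`.

    The day is clamped to the last day of the target month.
    """
    total = start.month - 1 + months
    year = start.year + total // 12
    month = total % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def is_due(last_completed: date, interval_months: int, today: date) -> bool:
    """True once the sustainment interval has elapsed (assumed rule)."""
    return today >= add_months(last_completed, interval_months)


# Events sort by functional area, then by increasing sequence number.
ordered = sorted(["PAT-301", "PAT-201", "PAT-240"], key=parse_event_code)
```

Sorting on the parsed code places lower-numbered events first, which mirrors the progression from less advanced to more advanced events described under Sequencing.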

3. ITS/T&R Staffing. ITS/T&R staffing involves soliciting comments

from affected individuals or organizations throughout the Marine Corps, and

then integrating those comments into the ITS/T&R document. The Operating

Forces, formal schools/training detachments, and OccFld sponsors (and

designated SMEs under special circumstances) will be included on the

ITS/T&R staffing distribution list. TECOM (GTB/ATB) will coordinate final

review, and will consolidate and reconcile all recommendations.

Upon completion of this process, necessary changes will be incorporated into

the final ITS/T&R draft Order for signature. ITS/T&R Manuals are forwarded

to CG, TECOM for approval and signature.

Once final approval and signature have been received, the training standards

are published as either a T&R Manual in the MCO 3500-series, or as an ITS

Order in the MCO 1510-series, and can then be distributed throughout the

Marine Corps.

1400. INSTRUCTIONAL SETTING

The third process in the Analyze Phase involves determining the instructional

setting for each individual training standard (ITS) task behavior. Instructional

setting is important because it defines who is responsible for instructing the task

and the level of proficiency the student must achieve when performing the task in

an instructional environment. TECOM is responsible for determining the

organization responsible for conducting the instruction and the level of instruction

assigned to each task. This is done during the SME Conference while ITS/T&R

events are being developed. When determining instructional setting, two guiding

factors must be used -- effectiveness and efficiency. The Marine Corps seeks the

best training possible within acceptable, affordable costs while meeting the

learning requirement.

1. Responsibility for Instruction. Once the job is defined and the

ITS/T&R events are developed, the job structure can be broken down into

organizations that will assume responsibility for instruction. The tasks must be

divided into four groups:

a. Those that are to be included in a formal learning program.

b. Those that are to be included in a Managed On-the-Job Training (MOJT)

program.

c. Those that can be covered via computer-based instruction or via

simulation.

d. Those for which no formal instruction or MOJT is needed (i.e., can be learned by using

job performance aids or self study packets).

2. Instructional Setting. The purpose of entry level formal school

instruction is twofold: to teach the minimum skills necessary to make the Marine

productive immediately upon arrival at his first duty station; and to provide the

Marine with the necessary prerequisites to continue instruction in an MOJT

program. Instructional setting refers to the extent of instruction assigned to each

Individual Training Standard (ITS) task behavior. Instructional setting is

generally determined by convening a board of job incumbents and SMEs to

discuss the extent of instruction required to adequately perform the task.

Instructional settings are published in the T&R Manual or ITS Order.

Instructional settings in T&R Manuals are prescribed only for entry-level training

by listing them as 100-level events. Enclosure (3) of the ITS System (MCO 1510-

series) prescribes instructional settings in the following manner:

a. Tasks taught to standard are indicated by an “S” in the FS/MOJT column.

b. Tasks taught as preliminary or introductory in the formal school setting

are depicted with a “P” in the FS/MOJT column. These tasks require follow-on

instruction at the unit through MOJT for the Marine to achieve the standard of

proficiency required.

c. Tasks that are not taught at the formal school have no designator in the

FS/MOJT column.
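
The three FS/MOJT column designators above amount to a small lookup table. The following Python sketch shows one way to encode them. The names FS_MOJT_MEANING, describe_setting, and requires_follow_on_mojt are hypothetical, and the sketch assumes a blank column is represented as an empty string.

```python
# Meaning of each FS/MOJT column entry, paraphrased from the text above.
# An empty string stands for "no designator" (an assumption of this sketch).
FS_MOJT_MEANING = {
    "S": "taught to standard at the formal school",
    "P": ("taught as preliminary or introductory at the formal school; "
          "follow-on MOJT at the unit is required"),
    "": "not taught at the formal school",
}


def describe_setting(designator: str) -> str:
    """Return the meaning of an FS/MOJT column entry."""
    key = designator.strip().upper()
    if key not in FS_MOJT_MEANING:
        raise ValueError(f"unknown FS/MOJT designator: {designator!r}")
    return FS_MOJT_MEANING[key]


def requires_follow_on_mojt(designator: str) -> bool:
    """True only for 'P' tasks, which need follow-on MOJT to reach standard."""
    describe_setting(designator)  # raises ValueError on unknown entries
    return designator.strip().upper() == "P"
```

Rejecting unknown entries, instead of treating them as blank, keeps a typing error in the column from being read as "not taught at the formal school".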

SECTION

4

SECTION

5

1500. REQUIREMENTS AND RESPONSIBILITIES

IN THE ANALYZE PHASE

1. Training and Education Command [TECOM (GTB/ATB)]

Responsibilities. A systematic approach to the design of instruction

requires an identification of the tasks performed on the job. Job performance

in the Marine Corps is defined and supported by training standards. Training

standards published in ITS orders or as individual events in T&R Manuals are

the primary source for the development of all Marine Corps instruction.

TECOM (GTB/ATB) is responsible for coordinating all the steps in the Analyze

Phase and for managing the FEA process. TECOM will coordinate the

development of ITS/T&R for military occupational fields (OccFld) and military

occupational specialties (MOS). The culmination of the Analyze Phase is an

approved set of training standards for an OccFld or MOS, published as a

Marine Corps Order (MCO) in the 1510 or 3500 series.

a. Job Analysis. As part of the FEA process, TECOM (GTB) is

responsible for conducting job analyses. Additionally, TECOM (GTB) will

collect supporting information that will assist in the identification and selection

of tasks for instruction. TECOM (GTB) publishes the analysis results in a Front-

End Analysis Report (FEAR).

b. Task Analysis. TECOM (GTB/ATB) is responsible for convening the

SME conference. The conference conducts formal task analysis and produces

the refined task list.

c. Determination of Instructional Setting. The SME conference

also determines where the tasks should be taught, either at the formal

school/detachment, or in the operating forces/supporting establishment. The

TECOM task analyst conducting the SME conference will publish the

instructional setting in the T&R Manual or ITS Order.

2. Formal School/Detachment Responsibilities. The formal

schools play important roles during the Analyze Phase.

a. Job Analysis. The formal school/detachment advises the task

analyst within TECOM (GTB/ATB) on the construction of task lists that will be

used to build FEA questionnaires. The school also sends key personnel to the

SME conference who can make decisions on behalf of the commander.

Formal school personnel actively participate in the final step of Job Analysis,

selection of the tasks for instruction, by making recommendations on

whether or not the task can be properly taught at the school. When the

requirements of the task exceed current resources, the SMEs make

recommendations for additional resources.

b. Task Analysis. Since task analysis involves determining the

condition, standard, performance steps, etc., having the resident experts from

the formal school participate in this process is critical.

c. Determination of Instructional Setting. The determination of where

the tasks should be taught, either at the formal school/detachment, as part of a

web-based course, or as part of an MOJT program in the operating forces is

essential. Formal school/detachment personnel provide key inputs to this step

during the SME conference.

The Determination of the Instructional Setting is the final process in the

Analyze Phase. The output of this phase is:

Individual Training Standards (ITS) Order or Training and Readiness

(T&R) Manual.

This output becomes the input to the Design Phase. The first step of

the Design Phase will be to write a Target Population Description (TPD)

for the course to be developed from the events/ITS identified during

the Analyze Phase.

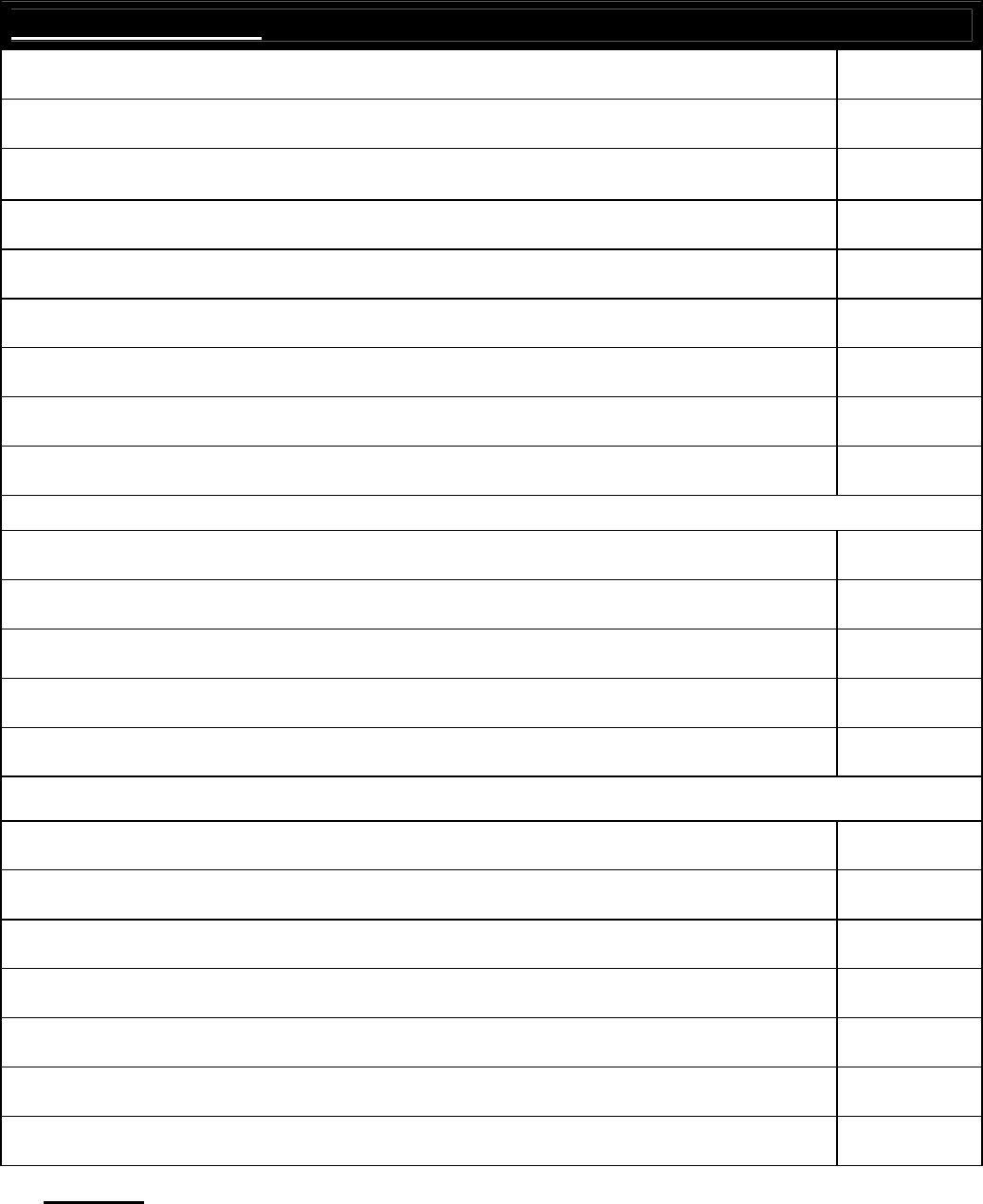

Systems Approach To Training Manual Design Phase

Chapter 2

DESIGN PHASE

In Chapter 2:

2000 INTRODUCTION

2100 WRITE THE TARGET

POPULATION DESCRIPTION

Purpose

Role of TPD in instruction

Steps in Writing the TPD

2200 CONDUCT LEARNING

ANALYSIS (LA)

Purpose

Steps to conduct a LA

Develop Learning Objectives

(LO)

Components of LOs

Record LOs

Writing LOs

Writing ELOs

Develop Test Items

Select Instructional Methods

Select Instructional Media

2300 SEQUENCE TERMINAL

LEARNING OBJECTIVES (TLO)

Purpose

Relationships between TLOs

Guidelines for sequencing TLOs

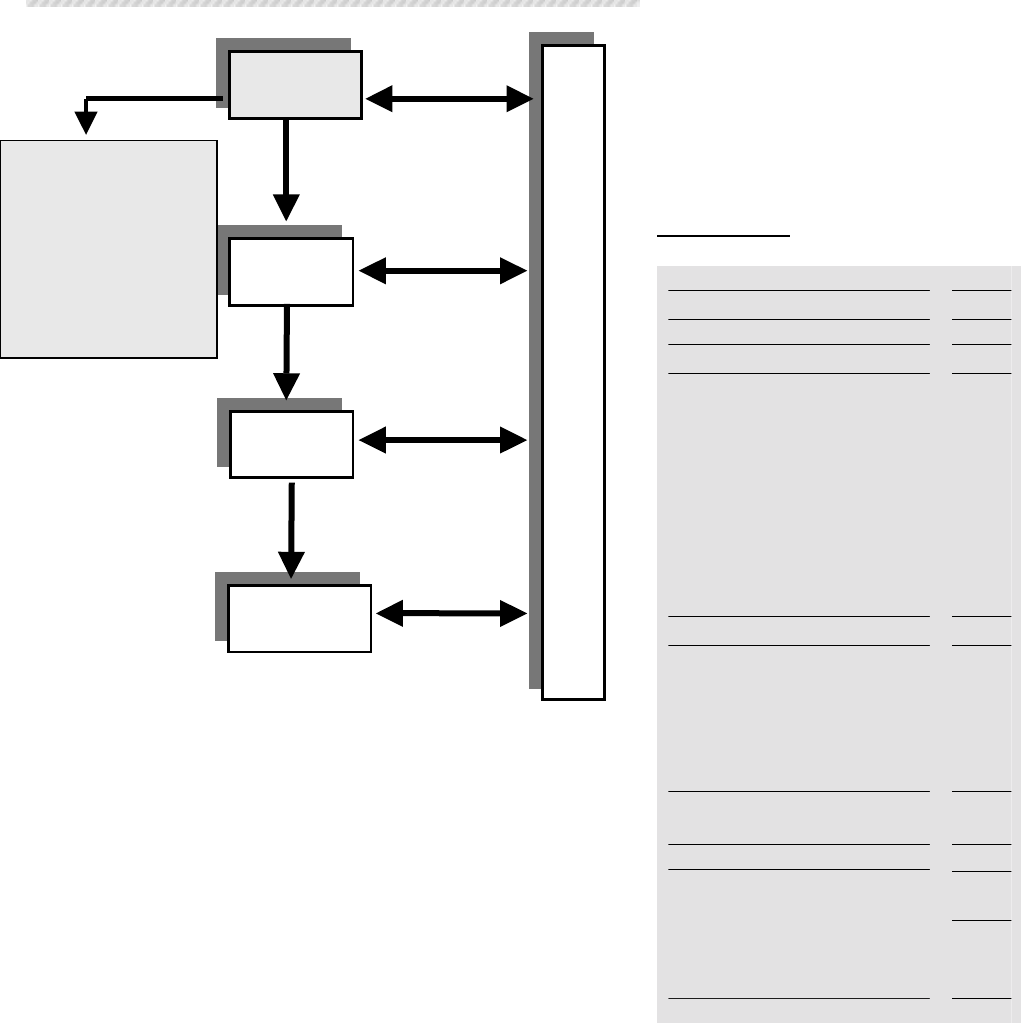

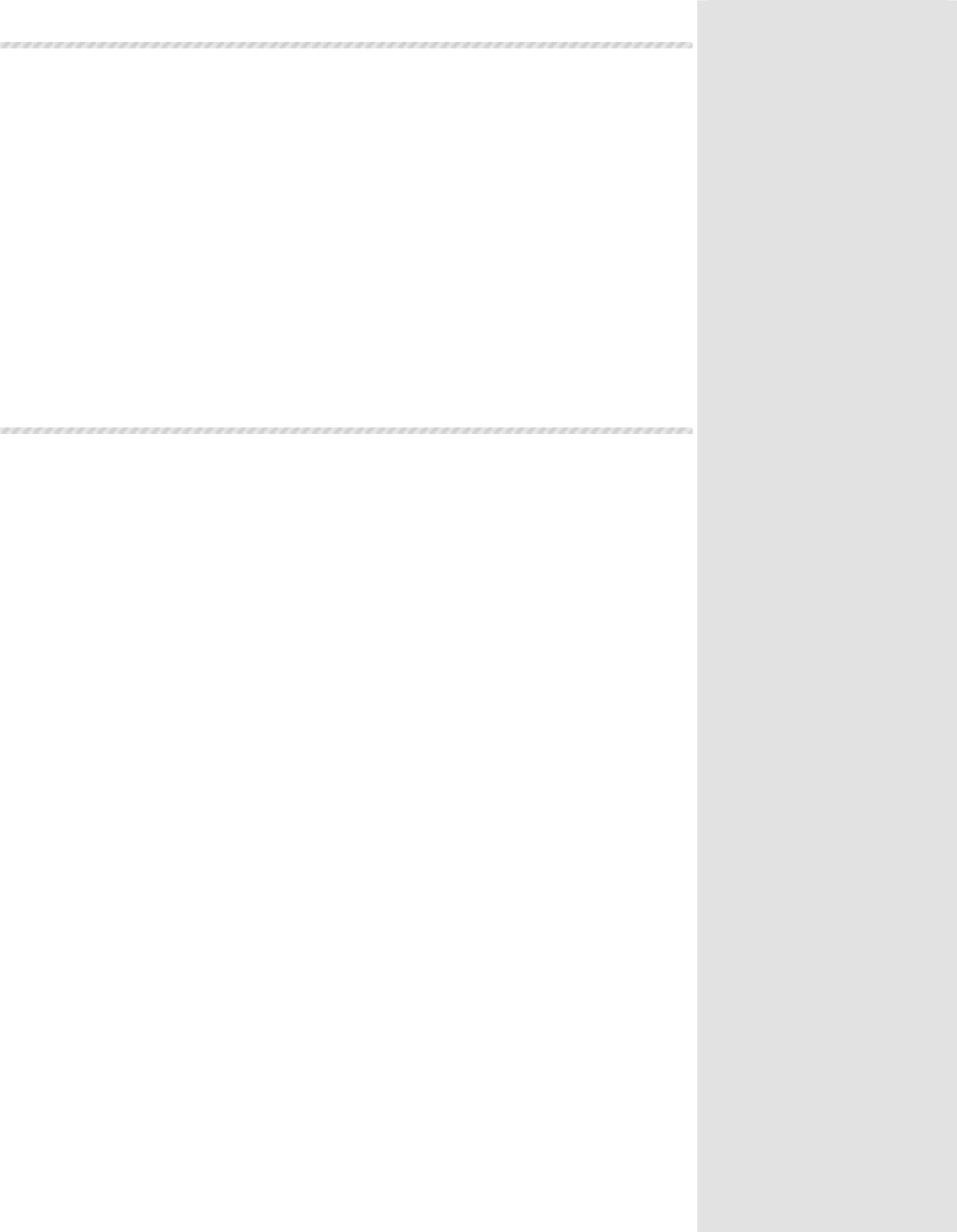

SAT model phases: Analyze, Design, Develop, Implement, Evaluate

Design Phase processes: Produce and Analyze TPD; Conduct a LA; Sequence LO

Chapter

2

Formal School/

Detachment is any MOS or

professional development

school in the Marine Corps.

2000. INTRODUCTION

The outputs of the Analyze Phase, the ITS Order or the T&R Manual, become the

inputs to the Design Phase. During the Design Phase, the curriculum developer

takes the ITS Tasks or T&R events designated to be taught at the formal

school/detachment, and attempts to simulate, as closely as possible, the real-

world job conditions within the instructional environment. The closer the

instruction is to real world job requirements, the more likely it is that the student

will transfer the learning to the job.

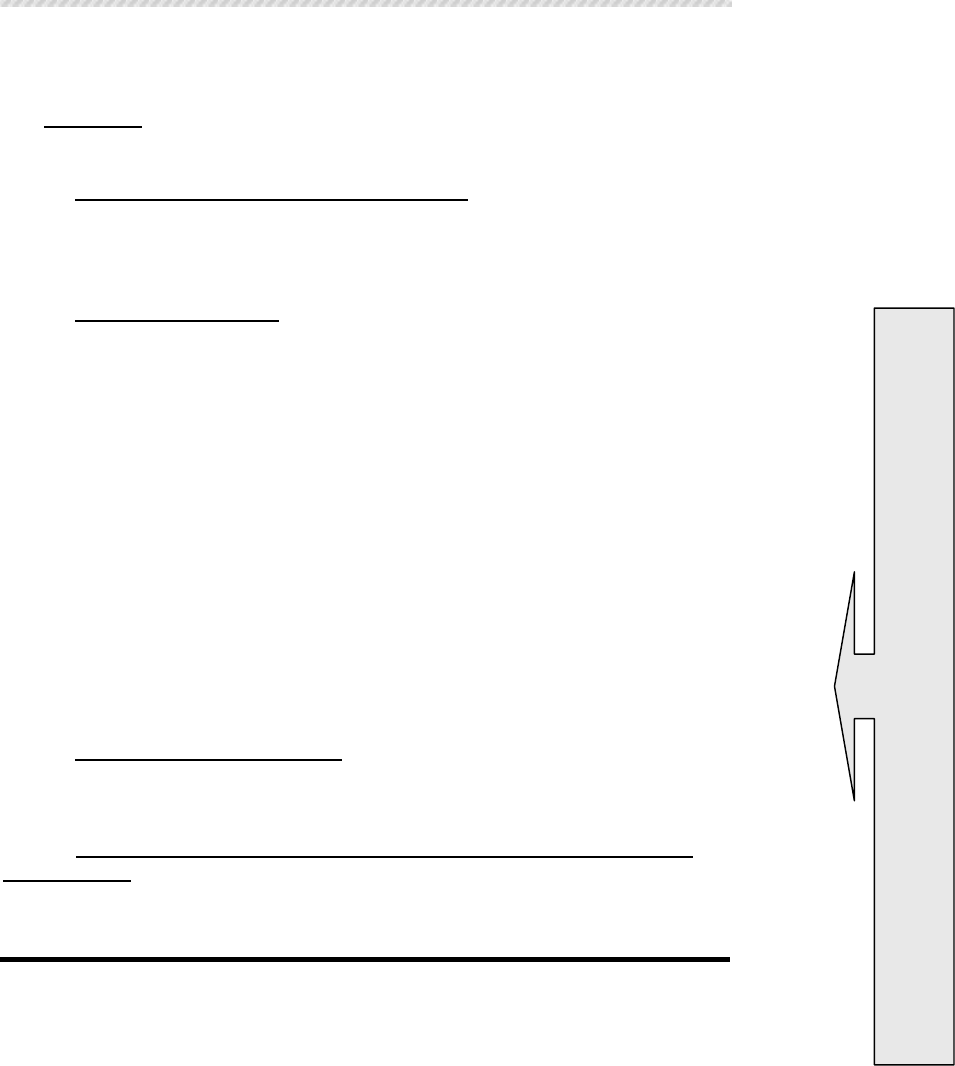

The Design Phase consists of these three processes:

1. Write the Target Population Description (TPD): “Who is coming for

instruction and what knowledge, skills, and attitudes (KSAs) must/will they bring

with them?”

2. Conduct a Learning Analysis: “What do I have to teach with?” and “What

will be taught, evaluated, and how?”

3. Sequence TLOs: “In what order will the instruction be taught to maximize

both resources and the transfer of learning?”

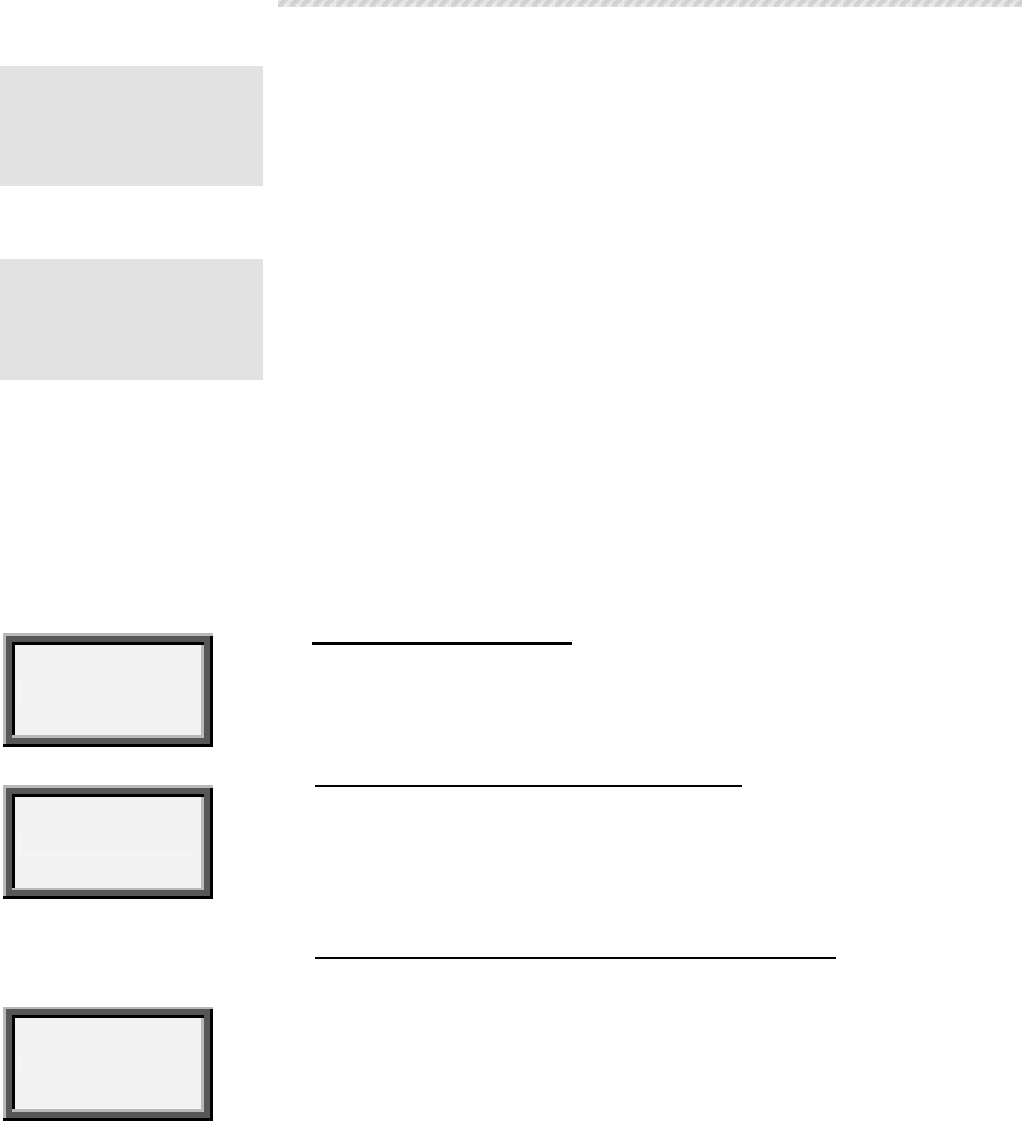

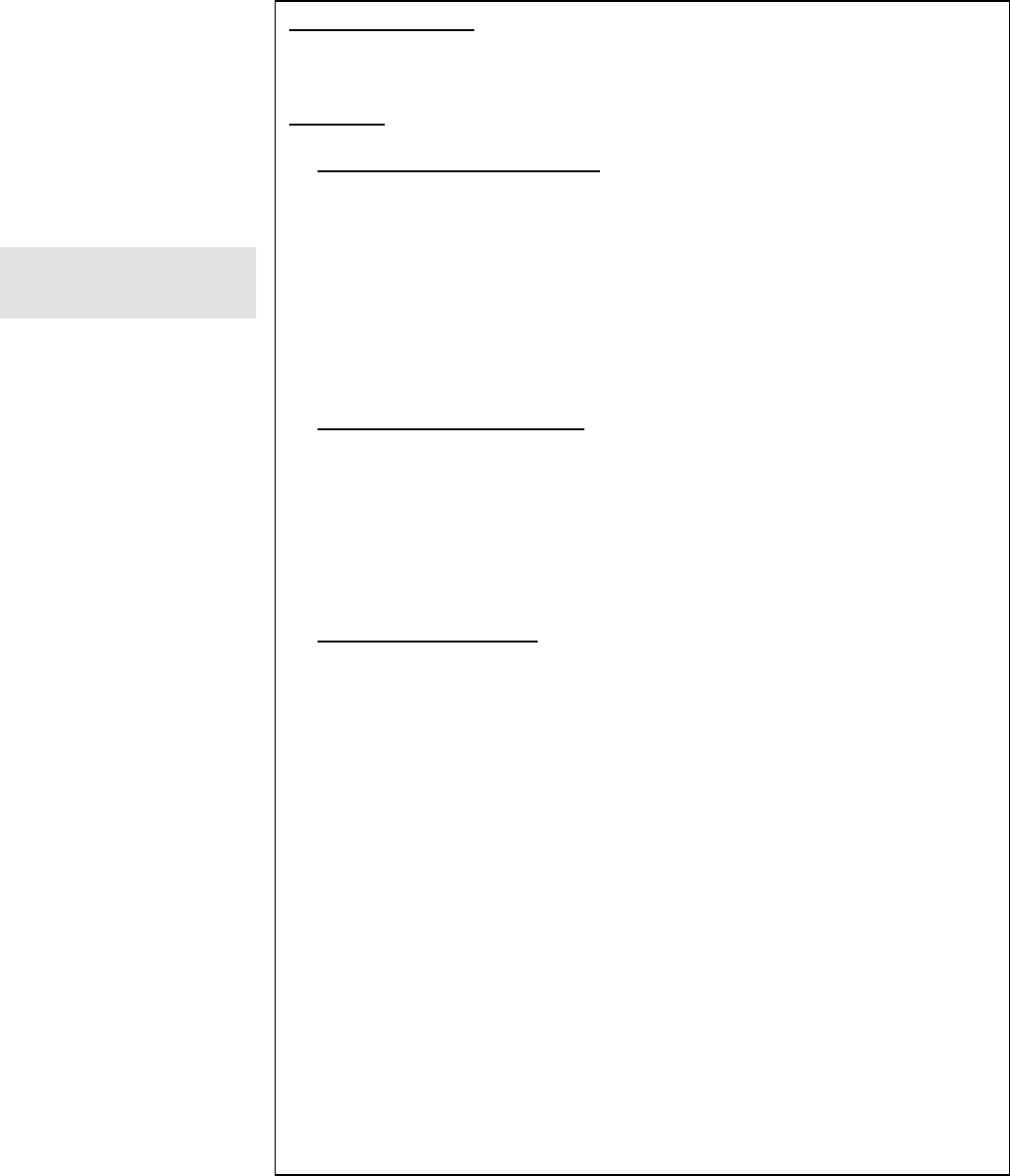

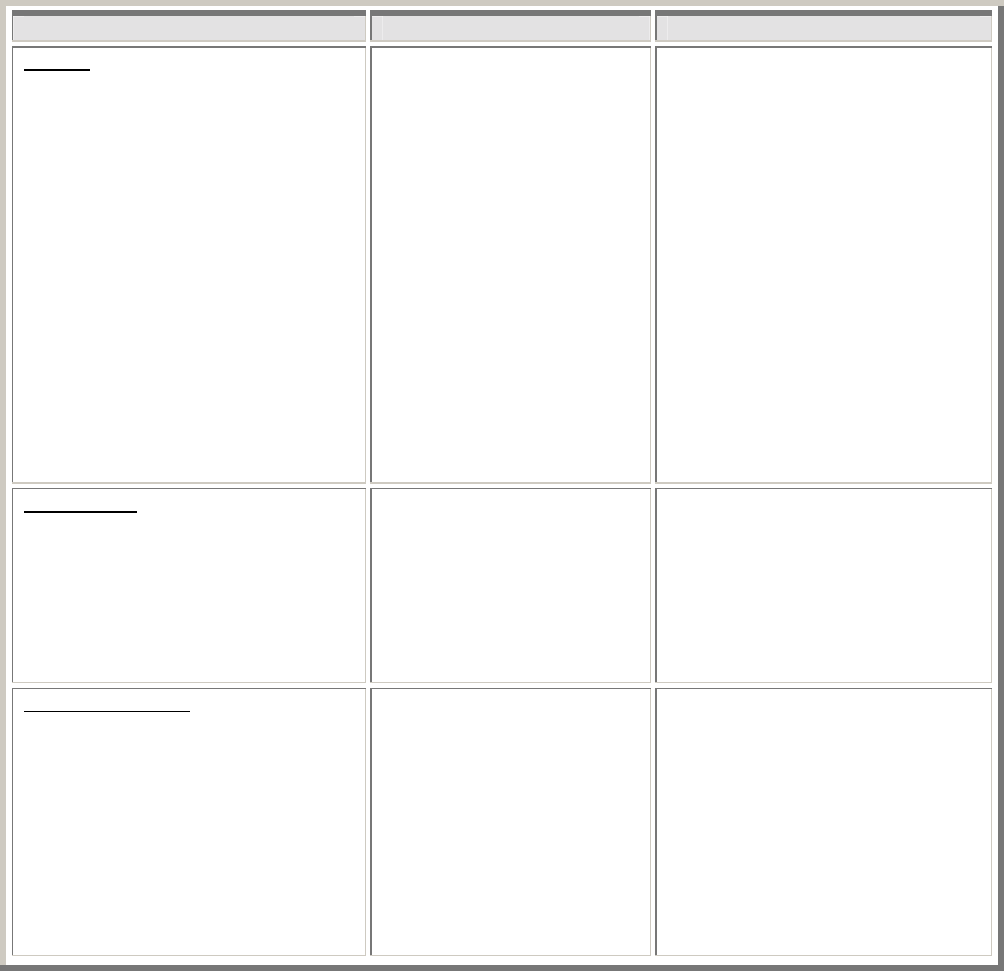

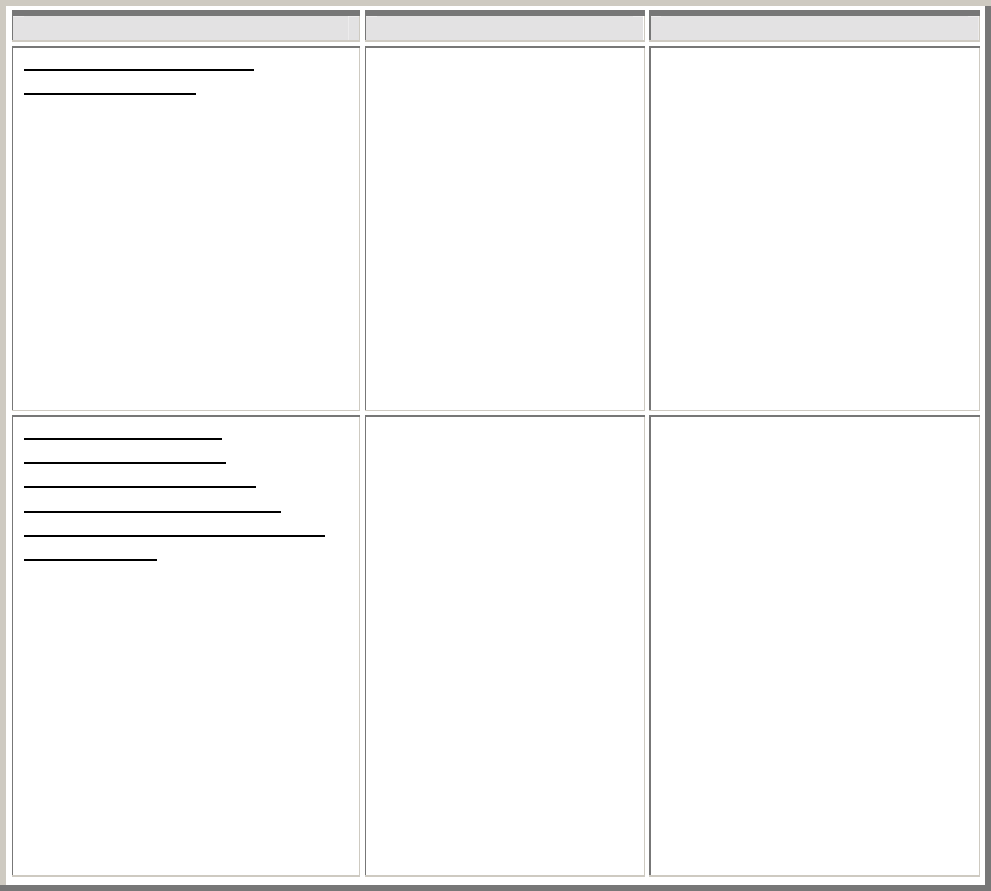

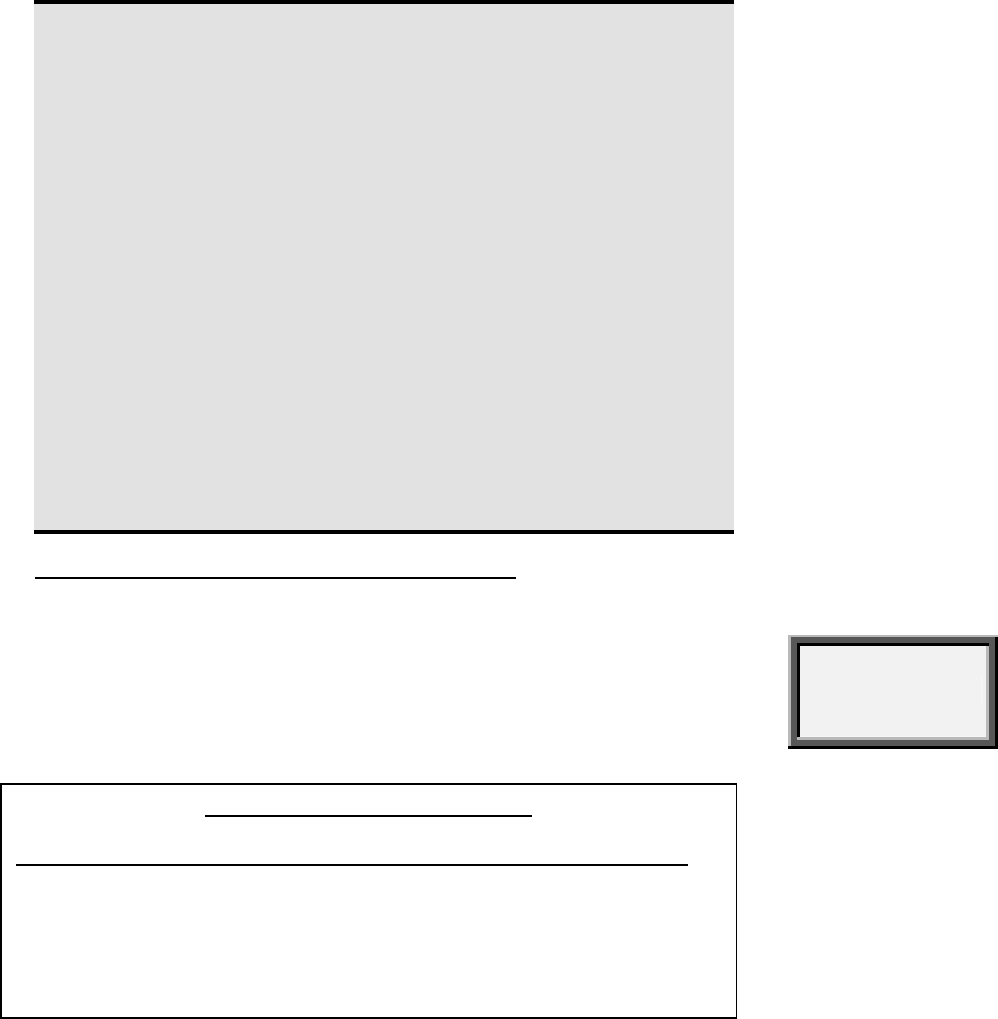

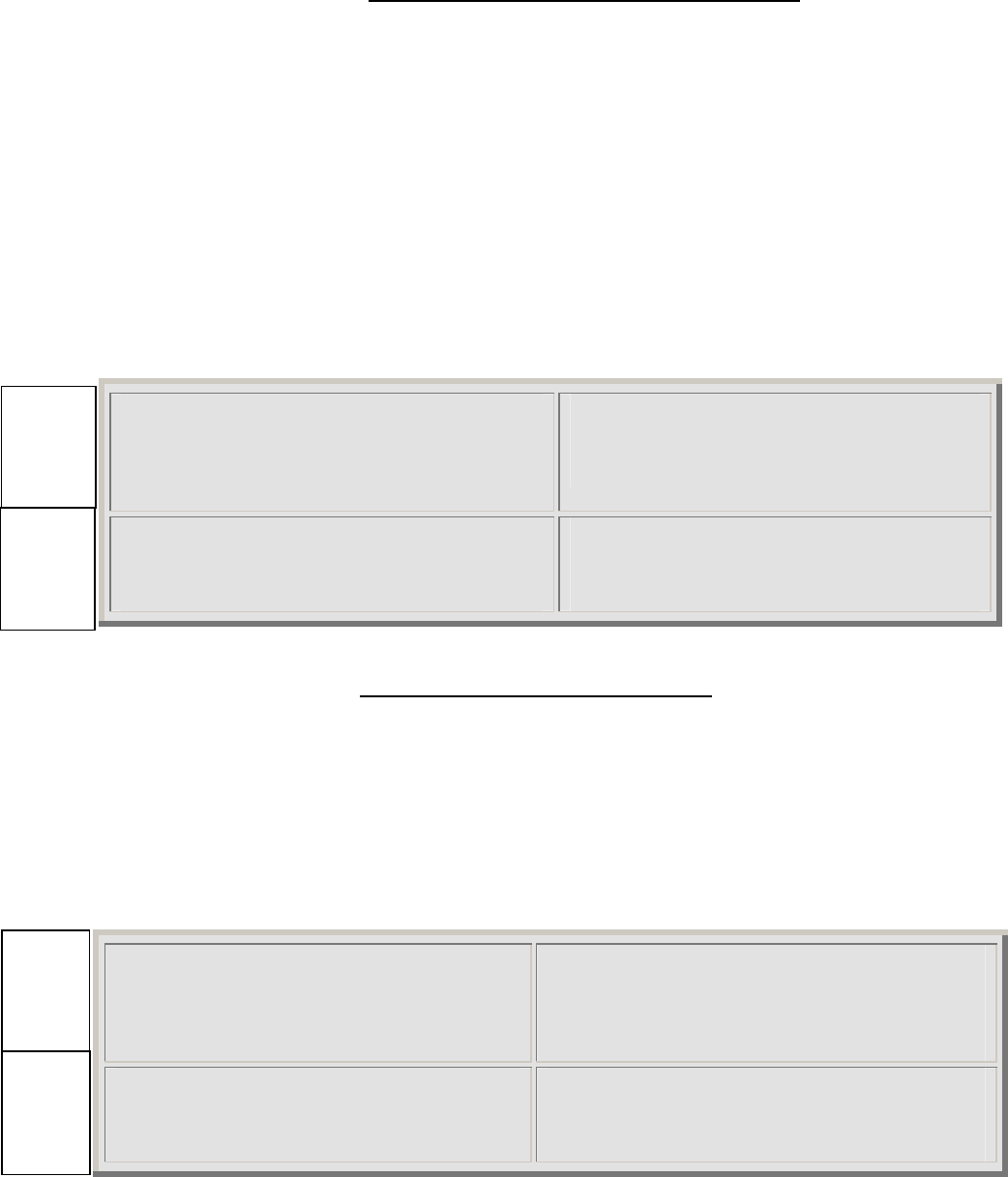

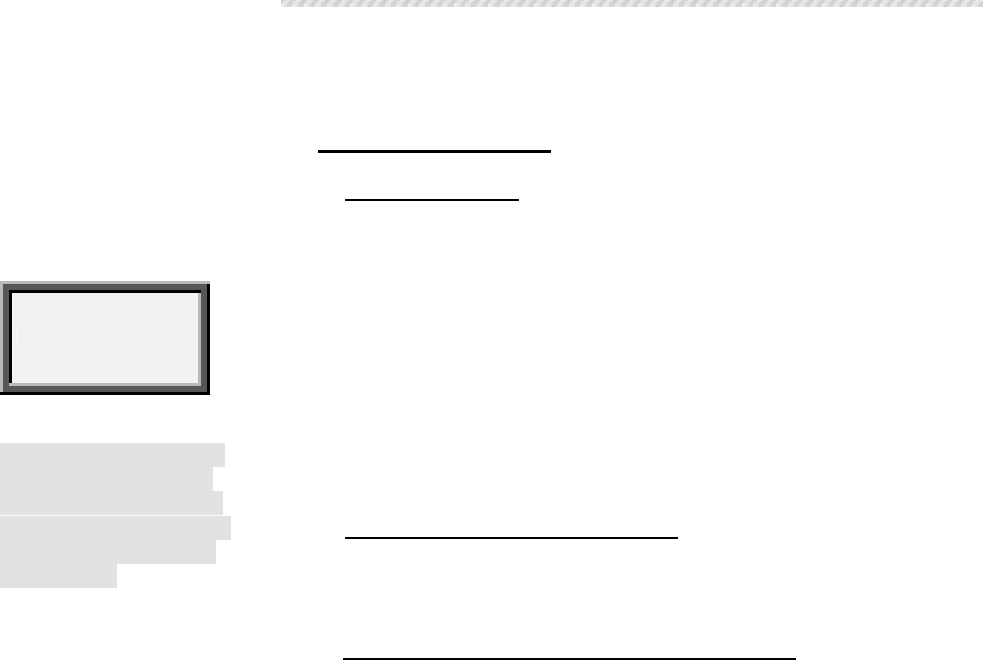

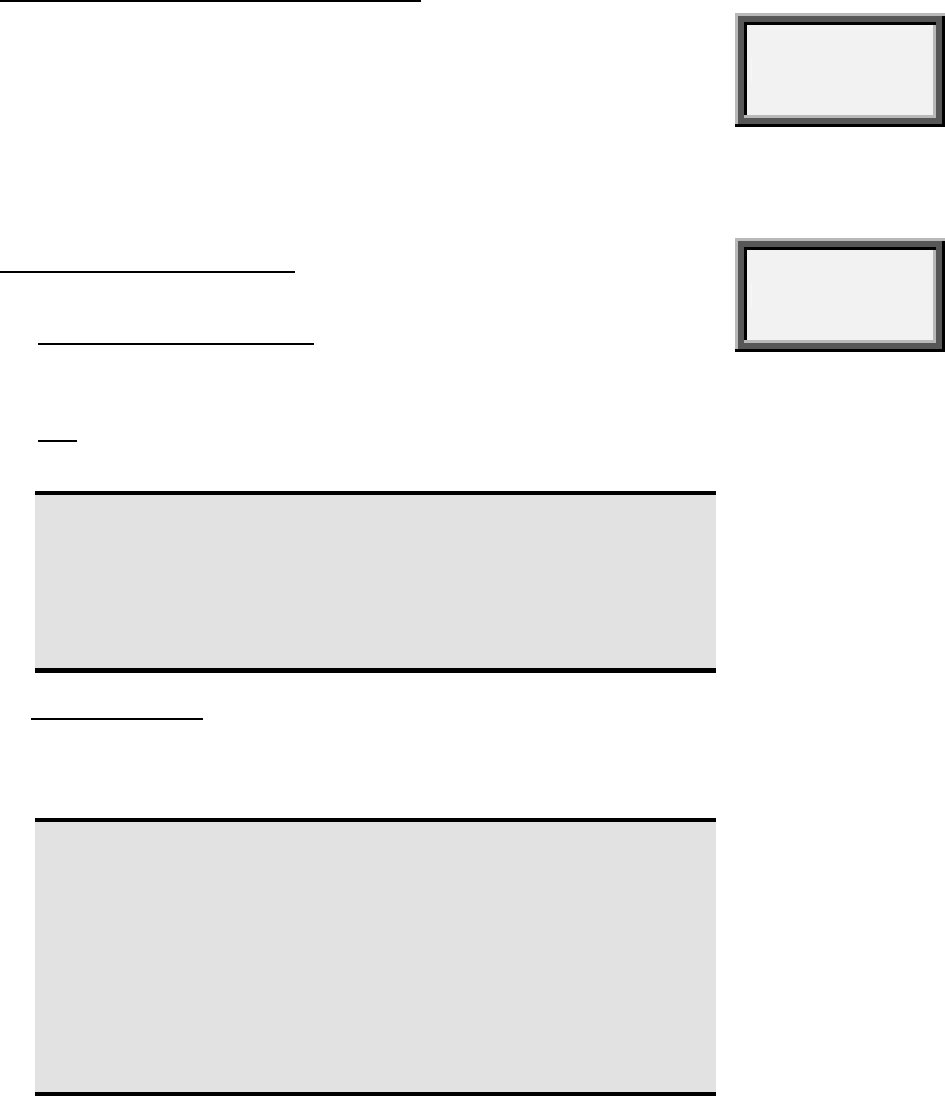

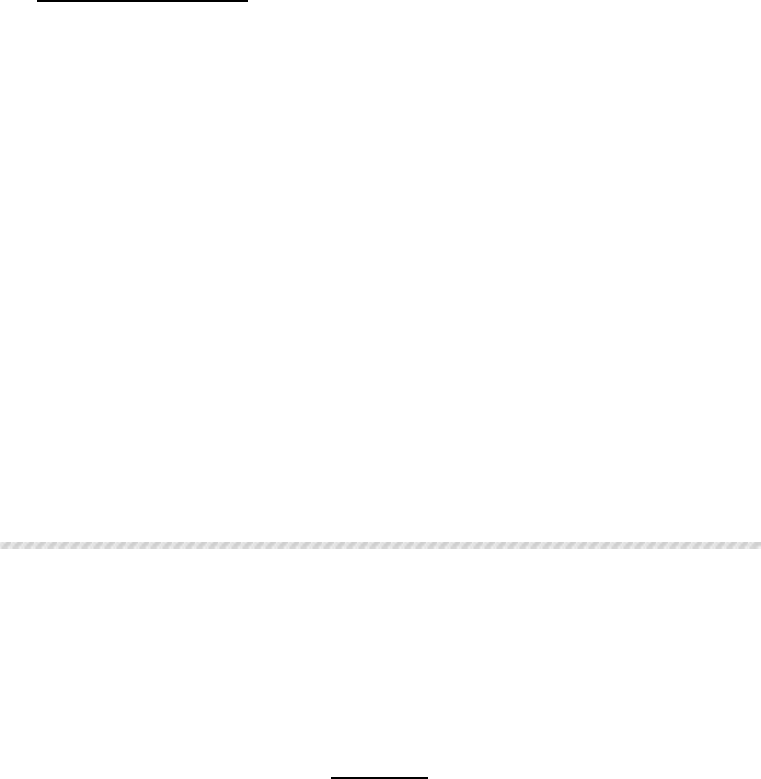

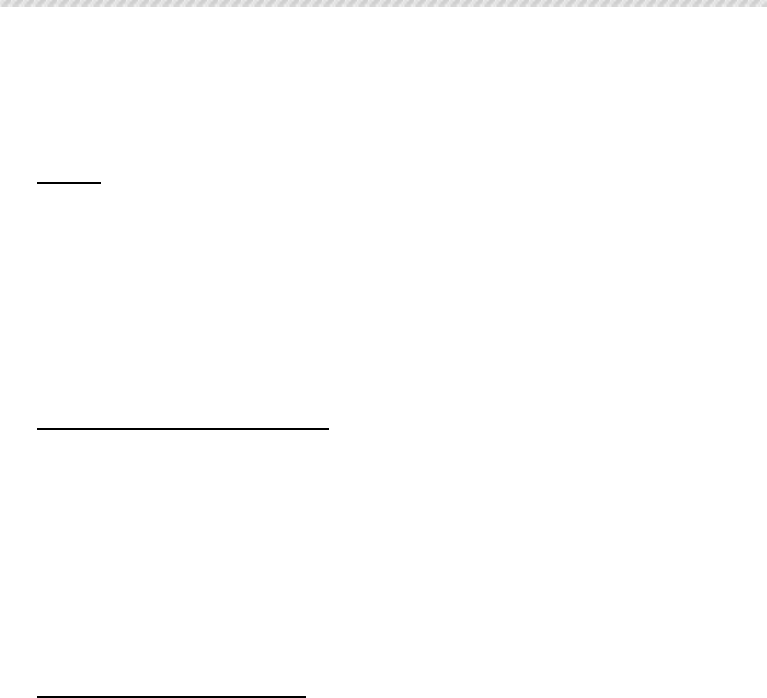

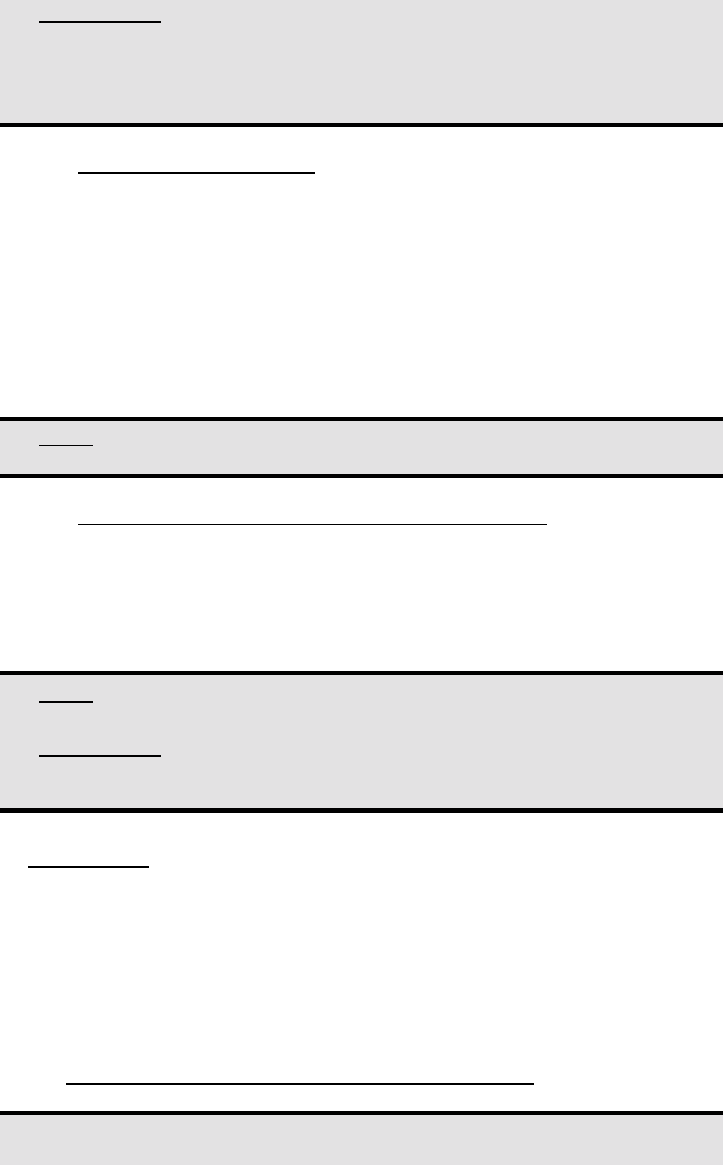

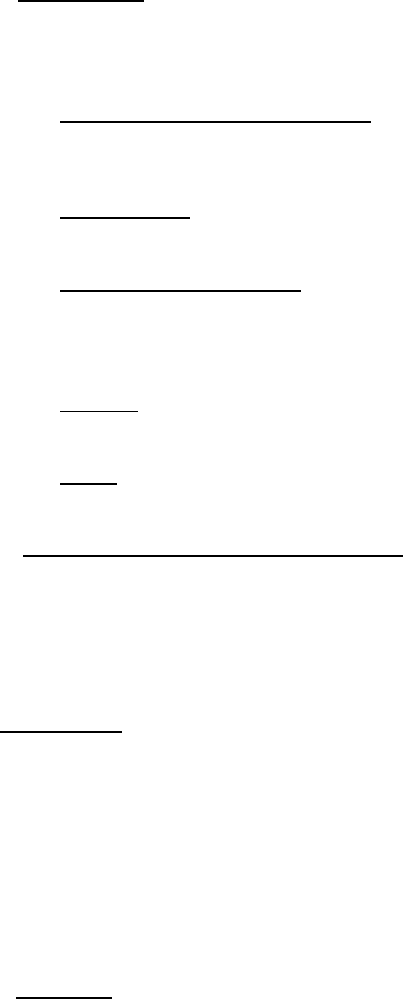

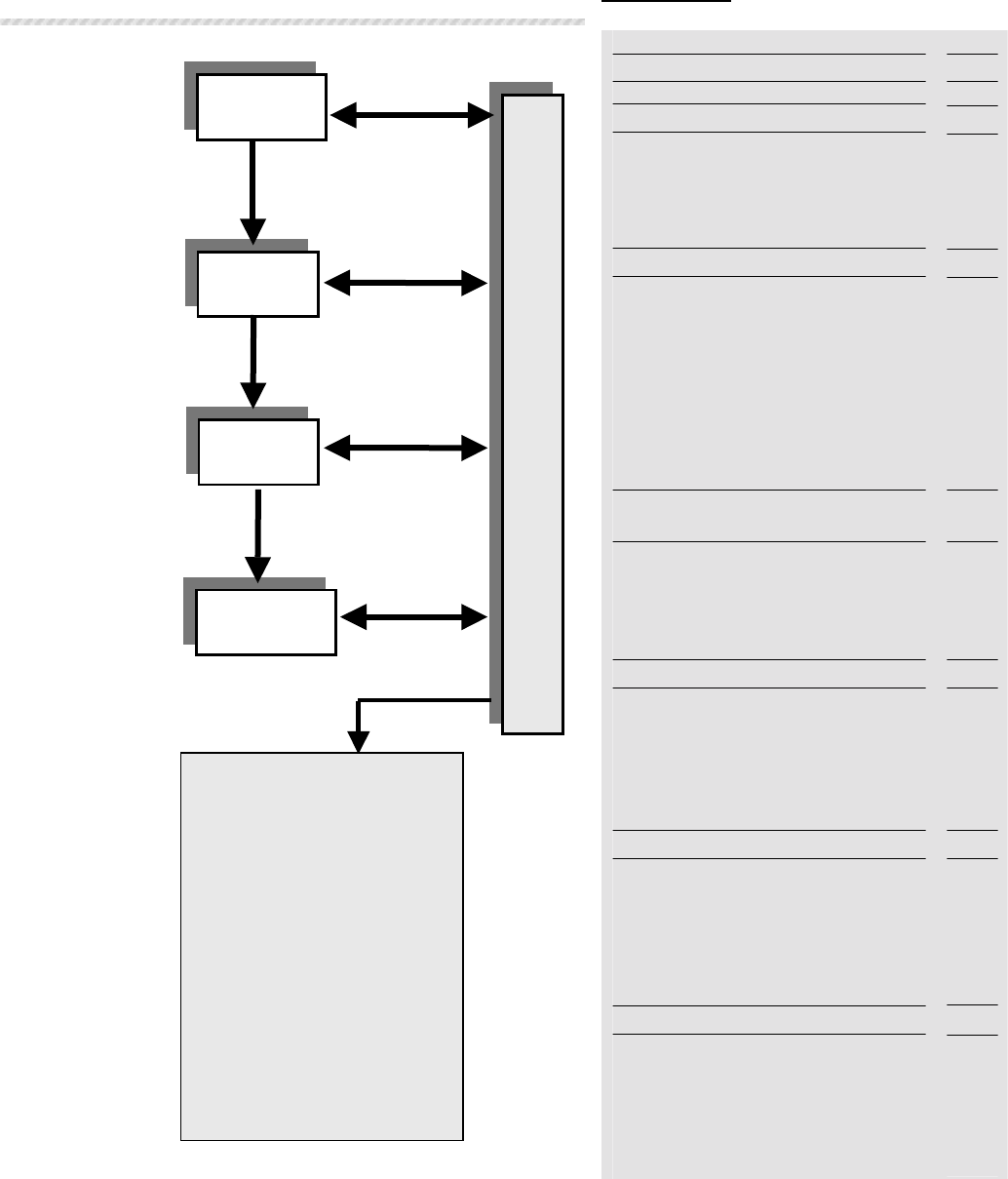

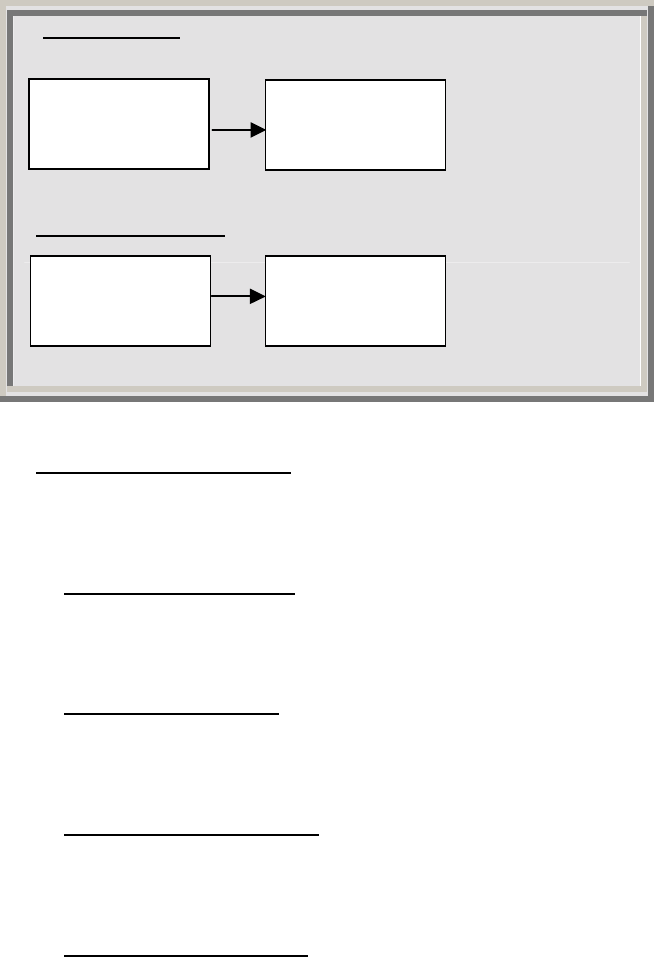

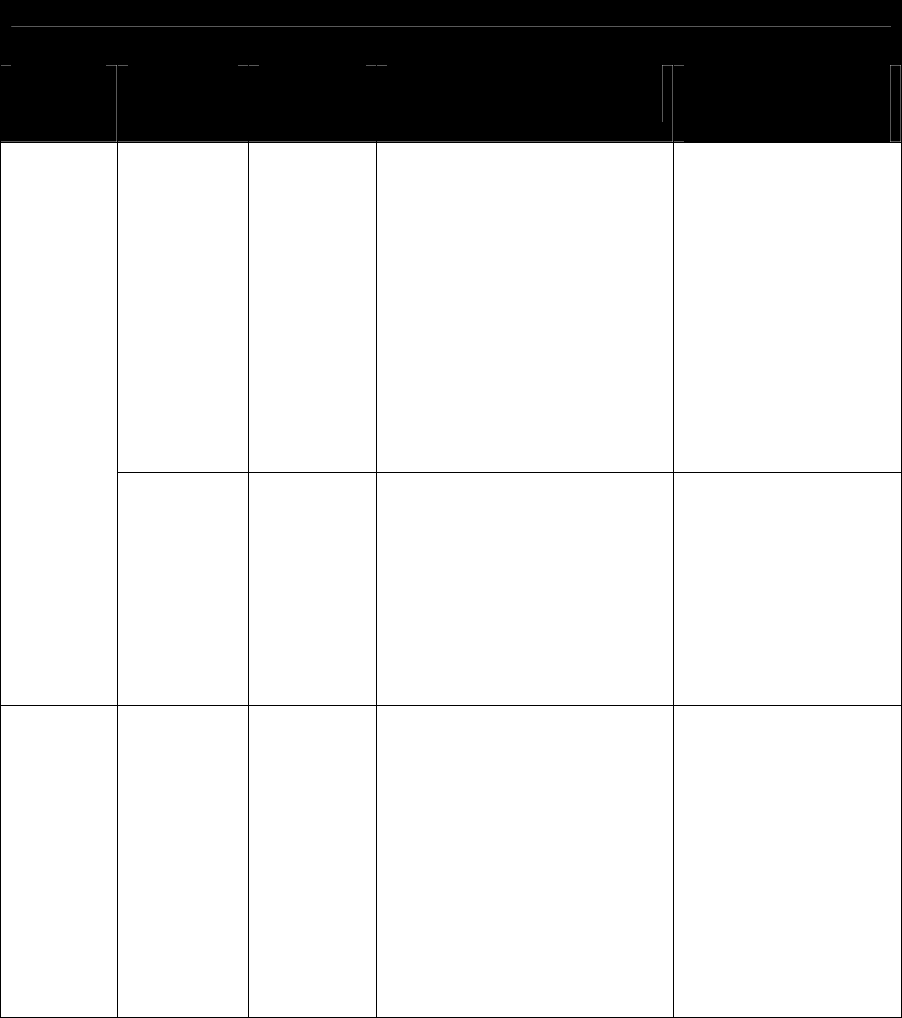

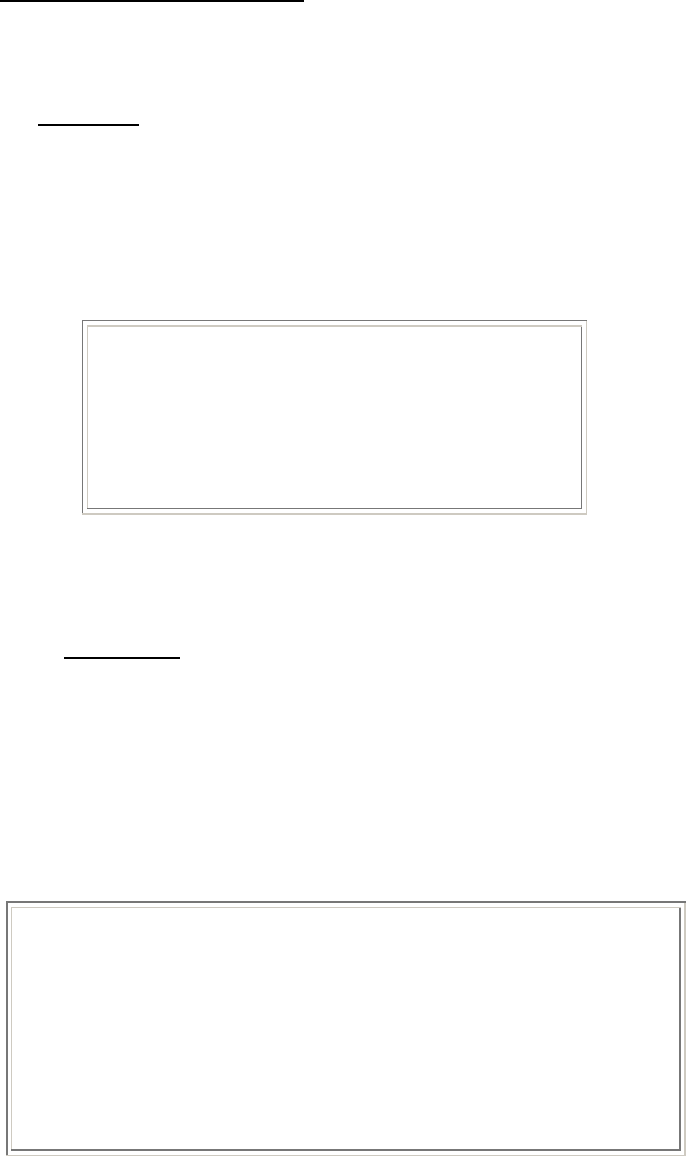

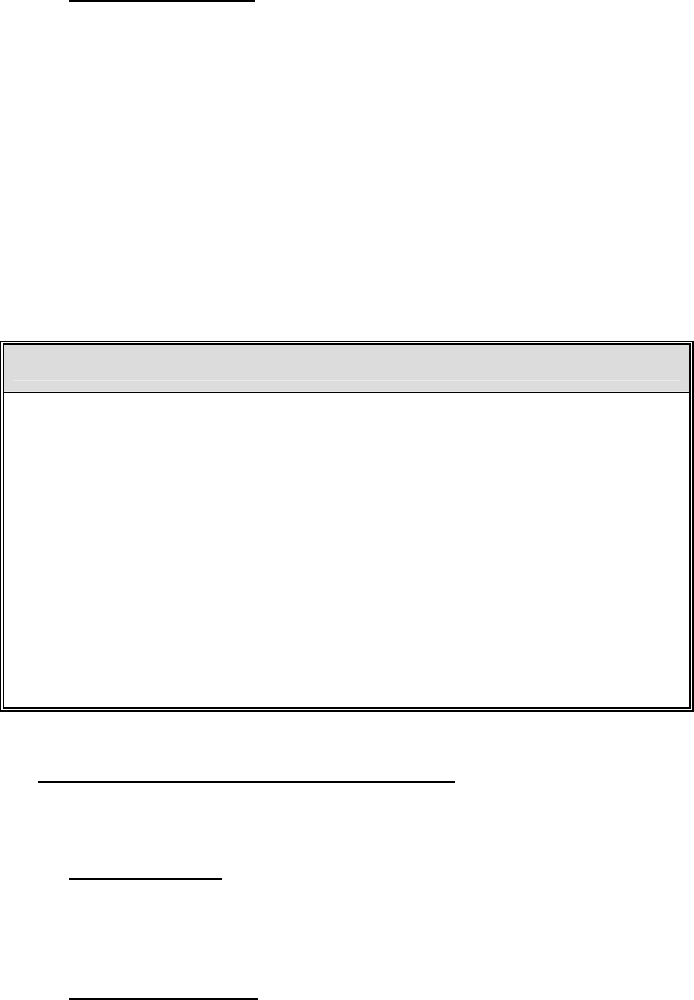

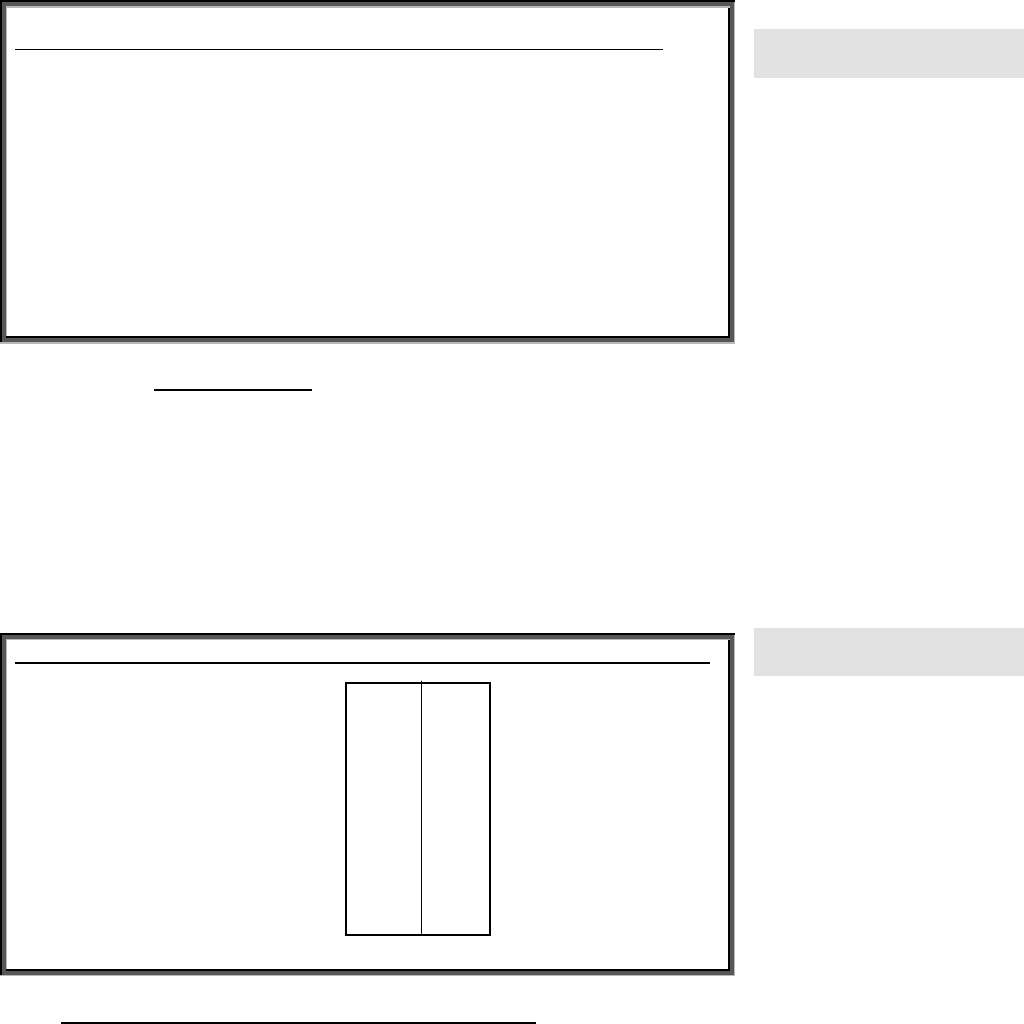

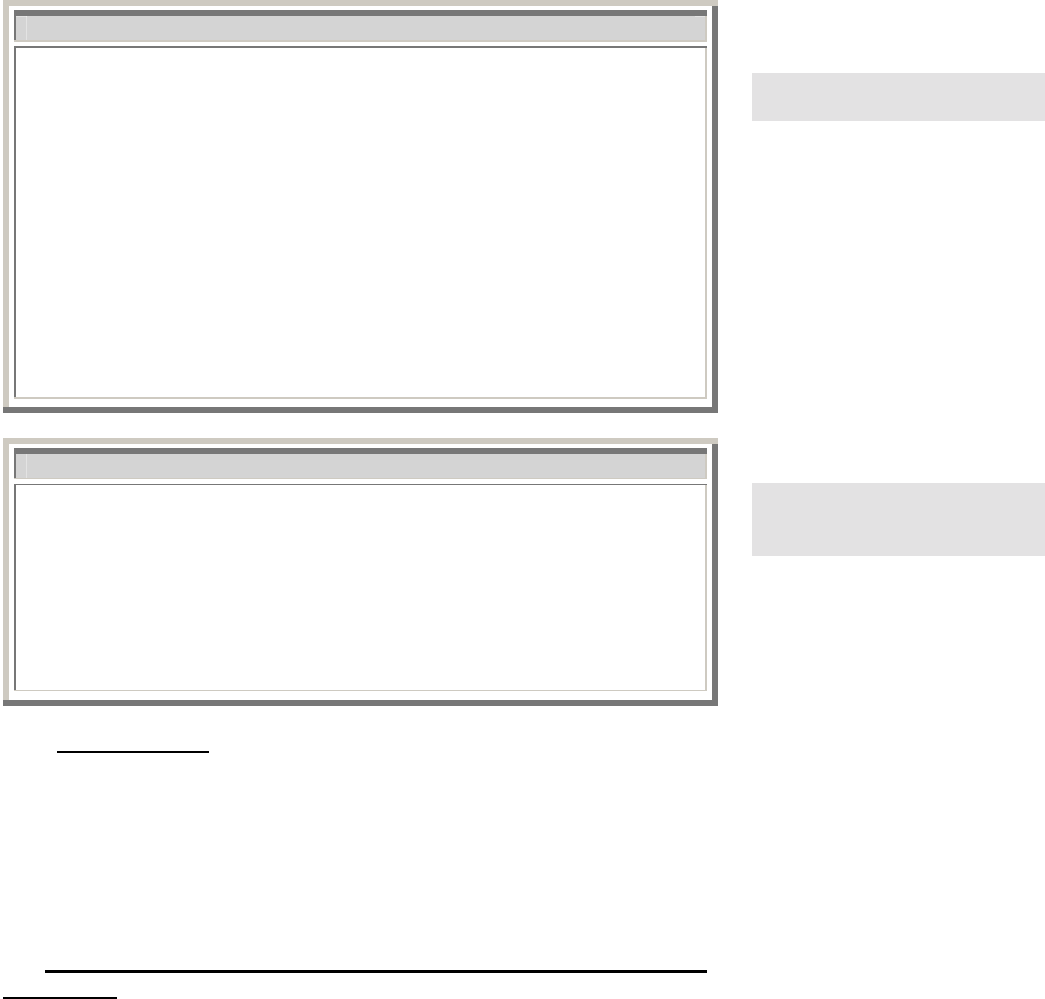

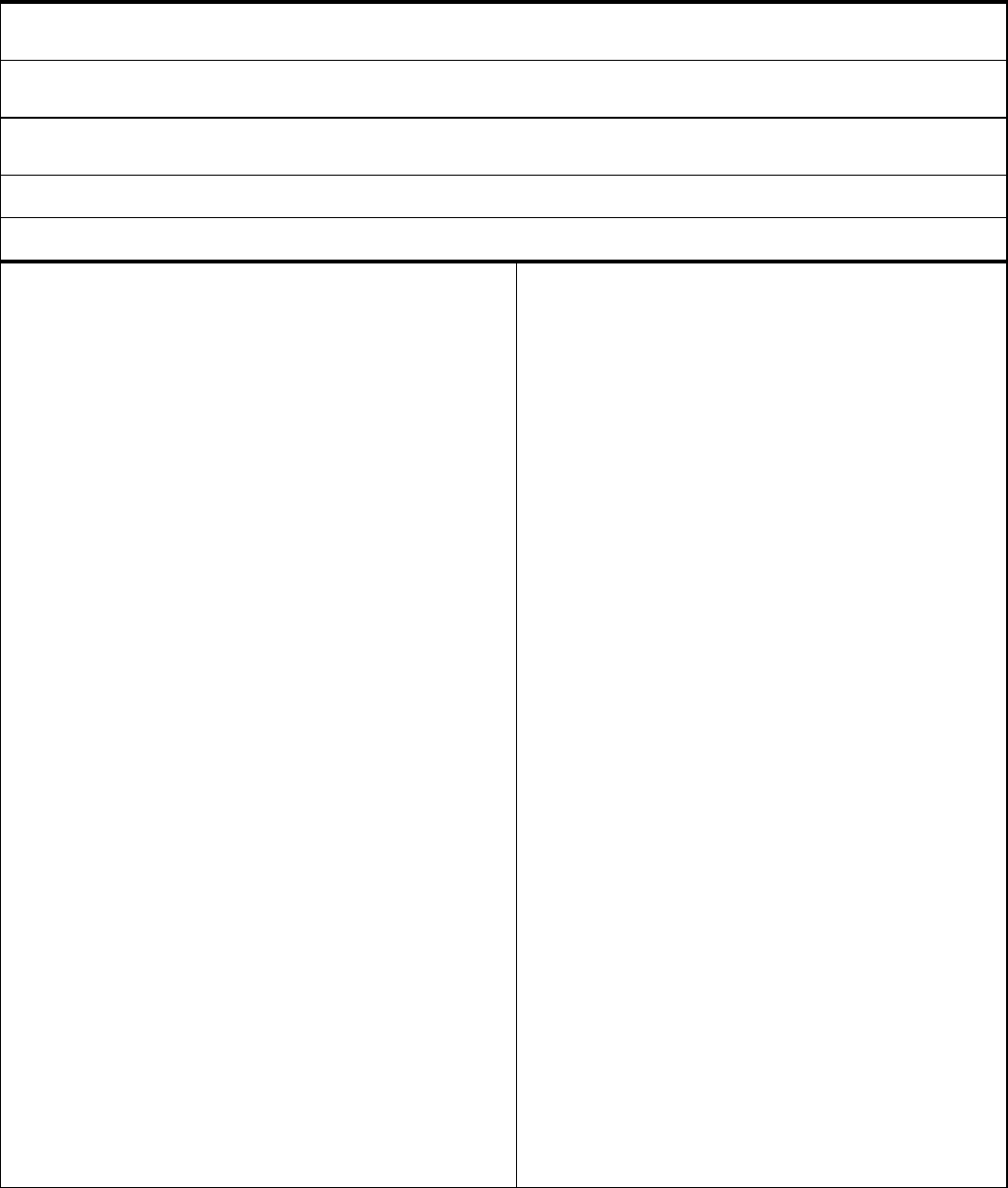

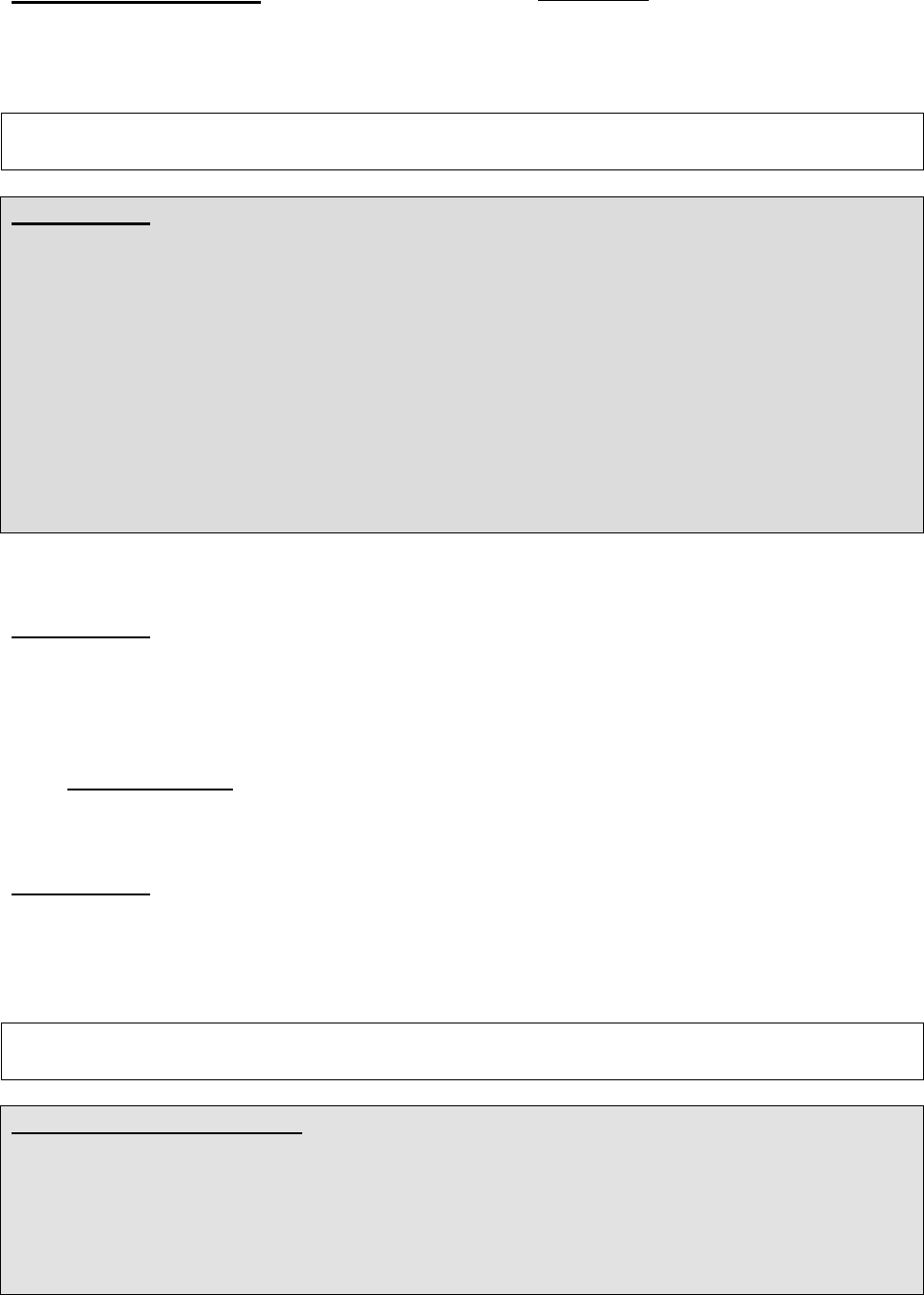

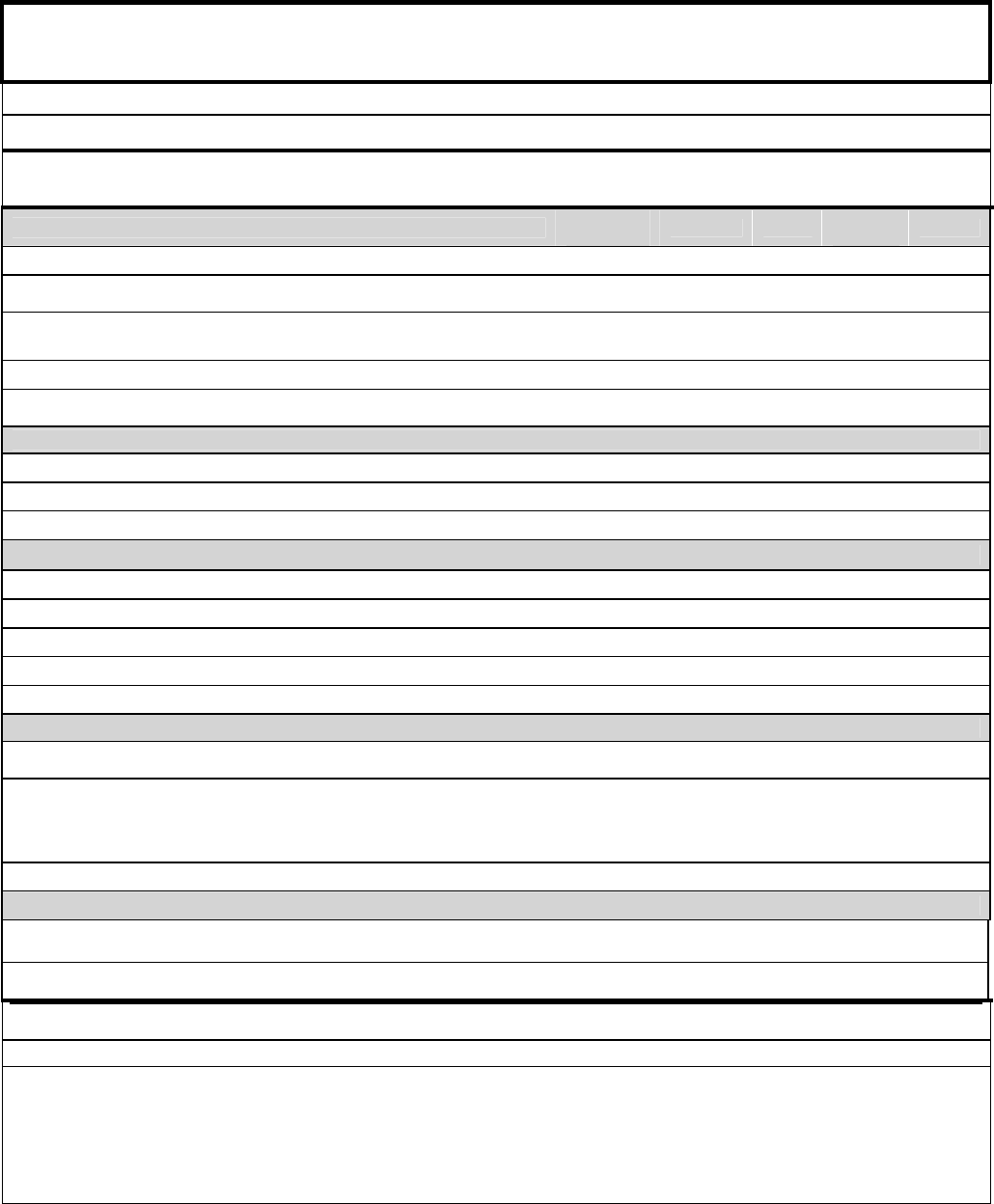

Figure 2-1

INPUT: ITS or T&R

PROCESS: Write TPD; OUTPUT: TPD

PROCESS: Conduct Learning Analysis; OUTPUT: Learning Objectives, Test Items, Methods/Media

PROCESS: Sequence TLOs; OUTPUT: Sequenced TLOs

2100. WRITE THE TARGET POPULATION

DESCRIPTION

INTRODUCTION The first process of the Design Phase is to write the Target

Population Description (TPD). A TPD is a description of the knowledge, skills,

and attitudes (KSAs) students are expected to bring to a course of instruction. It

provides a general description of an average student and establishes the minimum

administrative, physical, and academic prerequisites they must possess prior to

attending a course. During the Design Phase, the TPD will provide guidance for

developing objectives and selecting instructional strategies that will meet the

needs of the students.

2101. ROLE OF TPD IN INSTRUCTION

The TPD provides the focus for designing instruction. For instruction to be

effective and efficient, it must build upon what students already know.

Considering the TPD allows the curriculum developer to focus on those specific

knowledge and skills a student must develop. For example, if knowing the

nomenclature of the service rifle is required for the job, and the students entering

the course already possess this knowledge, then teaching this specific information

is not required. Conversely, if students entering a course do not know the service

rifle nomenclature, then they need instruction. The TPD also allows the curriculum

developer to select appropriate methods of instruction, media, and evaluation

methods. For example, experienced students can often learn with group projects

or case studies and self-evaluation. Entry-level students generally need instructor-

led training and formal evaluation. In summary, the TPD describes the average

student in general terms, establishes prerequisites, serves as the source document

for developing course description and content, and is used to design instruction.

2102. STEPS IN WRITING THE TPD

1. Obtain Sources of Data. To clearly define the target population, gather

data from the appropriate sources listed below. These references outline job

performance by detailing what tasks must be performed on the job and the

specific requirements of that particular job.

a. MCO P1200.7_, Military Occupational Specialty (MOS) Manual.

b. Marine Corps Order (MCO) P3500 Series, Training and Readiness (T&R).

c. Marine Corps Order (MCO) 1510 Series, Individual Training Standards

(ITS).

Additionally, information can be obtained from the OccFld Sponsor and Task

Analysts (GTB) by means of phone conversation and/or electronic message.

SECTION

1

KSA - Knowledge, skills, and

attitudes.

TPD - Target Population

Description

Gather Data

STEP 1

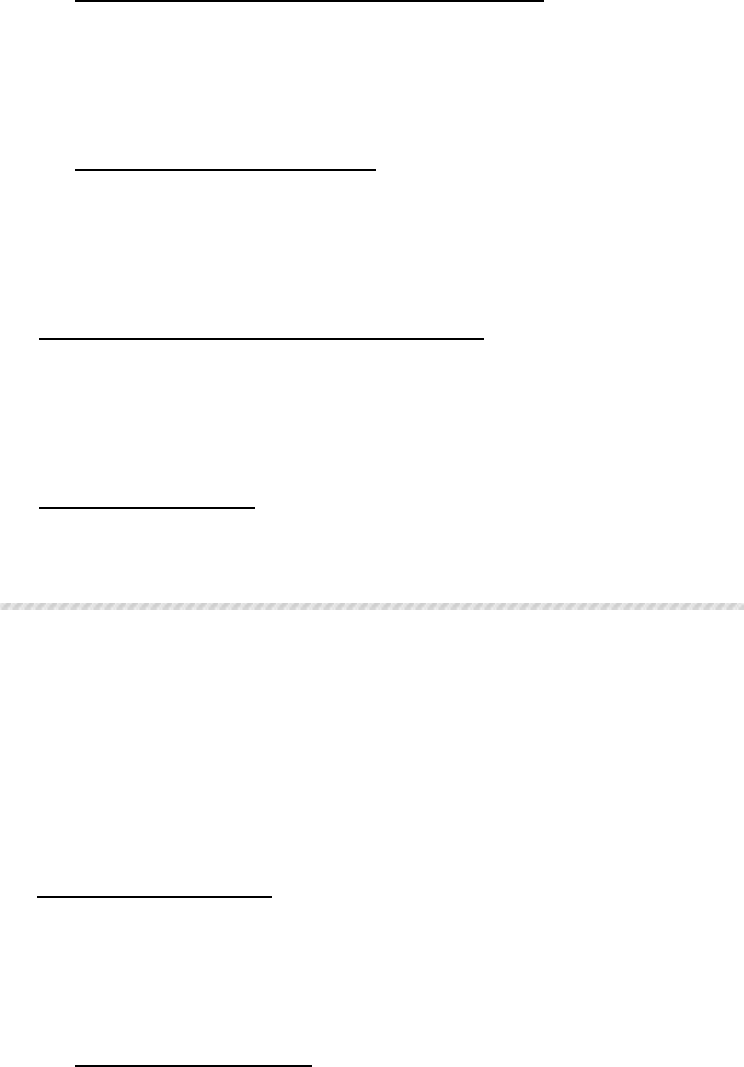

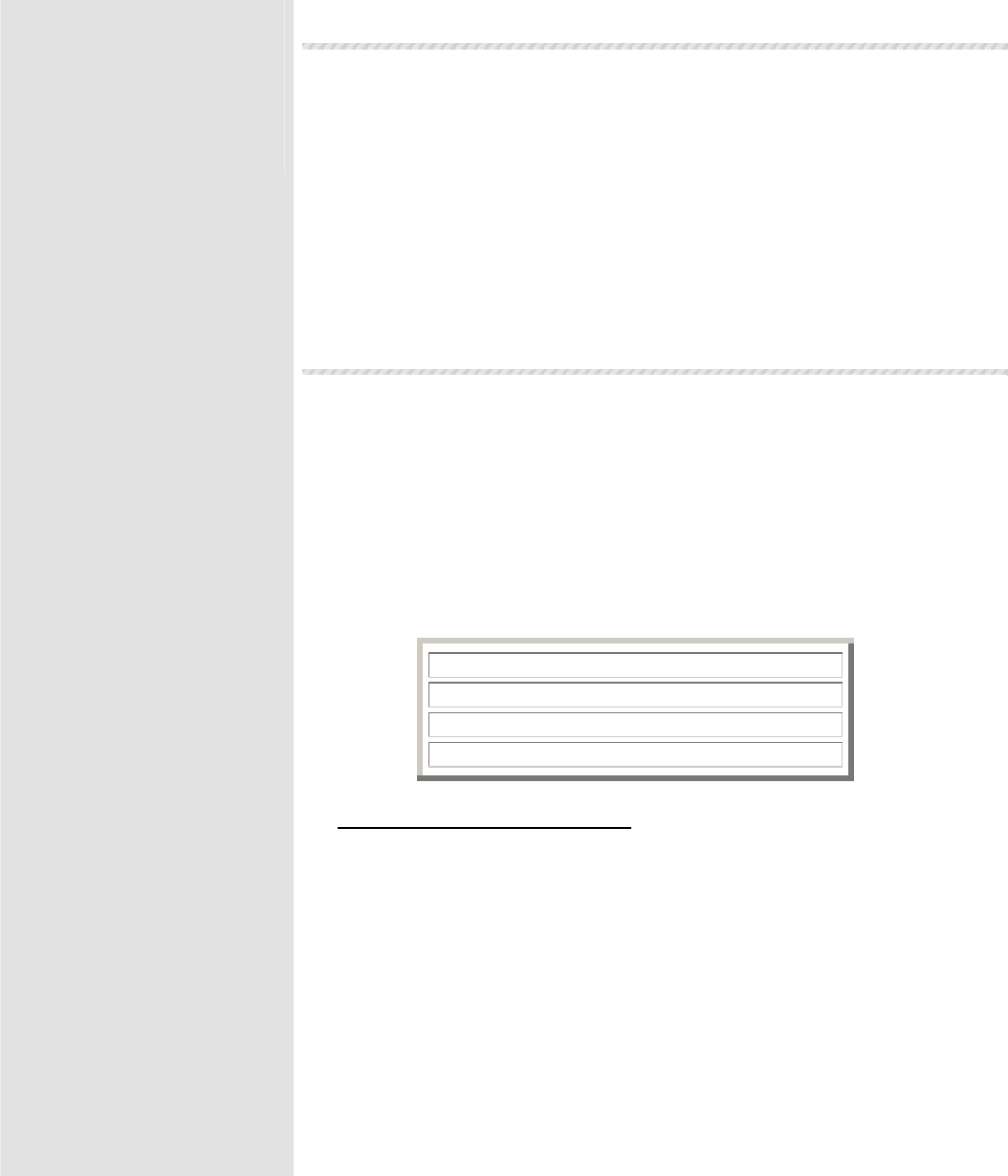

1. Administrative

2. Physical

3. Academic

Figure 2-2. Sample Target Population Description (TPD)

STEP 2

STEP 3

2. Gather and Review Student Background Information. While

considering the adult learning characteristics identified in Chapter 6 and the

resources identified above, review pertinent student background information. In

order to ensure the course prerequisites are correct and that the training program

is developed to meet the attributes of the TPD, organize this information into the

following categories:

a. Administrative. Certain prerequisites may be necessary due to

administrative requirements of the school or the course material. These

prerequisites include the student’s rank, MOS, security clearance, time

remaining in service, or police record (which may mean exclusion from

certain types of instruction).

b. Physical. Physical information includes specific skills and general fitness

which may include age, height, color perception, visual acuity, physical

limitations, etc.

c. Academic. Academic information represents an inventory of the

knowledge and skills the student must or will possess prior to the start of

instruction. These prerequisites may include specific basic courses already

completed, reading level, test scores, training experience and GCT/ASVAB

scores.

3. Write the TPD. Capture information that describes the general

characteristics of the average student attending the course. Summarize the data

into a concise paragraph describing the target population. Organize the general

information describing the average student so that it is grouped together and any

prerequisites are grouped together.

TPD FOR CURRICULUM DEVELOPER COURSE

This course is designed for Sergeant through Lieutenant Colonel and civilian

employees who perform curriculum development duties at a Marine Formal

School or Detachment. Prior to being enrolled in this course, students are

required to complete the Systems Approach to Training Interactive

Multimedia Instruction (IMI), and the Operational Risk Management IMI.

Most students attending the course have experience as an instructor at a

Formal School or Detachment, are able to use Microsoft Word and

PowerPoint, and possess effective written communication skills.

2200. CONDUCT A LEARNING ANALYSIS

The second process of the Design Phase is to conduct a Learning Analysis to define

what will be taught. The purpose of the Learning Analysis is to examine the real world

behavior that the Marine performs in the Operating Forces and transform it into the

instructional environment. A Learning Analysis produces three primary products

essential to any Program of Instruction (POI): learning objectives, test items, and

methods/media. This process allows adjustments to be made to accommodate

resource constraints at the formal school/detachment. A Learning Analysis must be

performed for every task covered in new courses. Additionally, each new task added

to either the Individual Training Standard (ITS) Order or Training and Readiness (T&R)

Manual, and taught at the formal school, requires a Learning Analysis.

2201. STEPS TO CONDUCT A LEARNING ANALYSIS

1. Gather Materials. The first step in conducting a Learning Analysis is to gather

materials. Once the scope of the course that the curriculum developer is designing is

determined (by reading guidance from TECOM or the school commander), obtain the:

a. ITS order or T&R manual – to determine what tasks the jobholder performs.

b. Publications – like orders, directives, manuals, job aids, etc. that will help

analyze the tasks to be taught.

c. Subject Matter Experts – to fill in details that the publications will not. SMEs

will conduct the brainstorming session along with the curriculum developer.

d. Learning Analysis Worksheet (LAW) – Use the LAW found in the SAT Manual,

enlarge it to turn-chart size, or create one on a dry erase board (take a digital photo to

record results). It does not matter which technique is chosen, as long as a record of

the analysis is created.

e. Previously developed LAWs and LOWs for established courses under review.

Figure 2-3 is an extract from an ITS task list. Figure 2-4 is an ITS and component

description. Figure 2-5 is an extract of a T&R event and component description.

POI- Program of

Instruction.

3 primary products of

a Learning Analysis:

1. Learning Objectives

2. Test items

3. Methods/Media

STEP 1

See Figures 2-3, 2-4, and 2-5 on the next several pages.

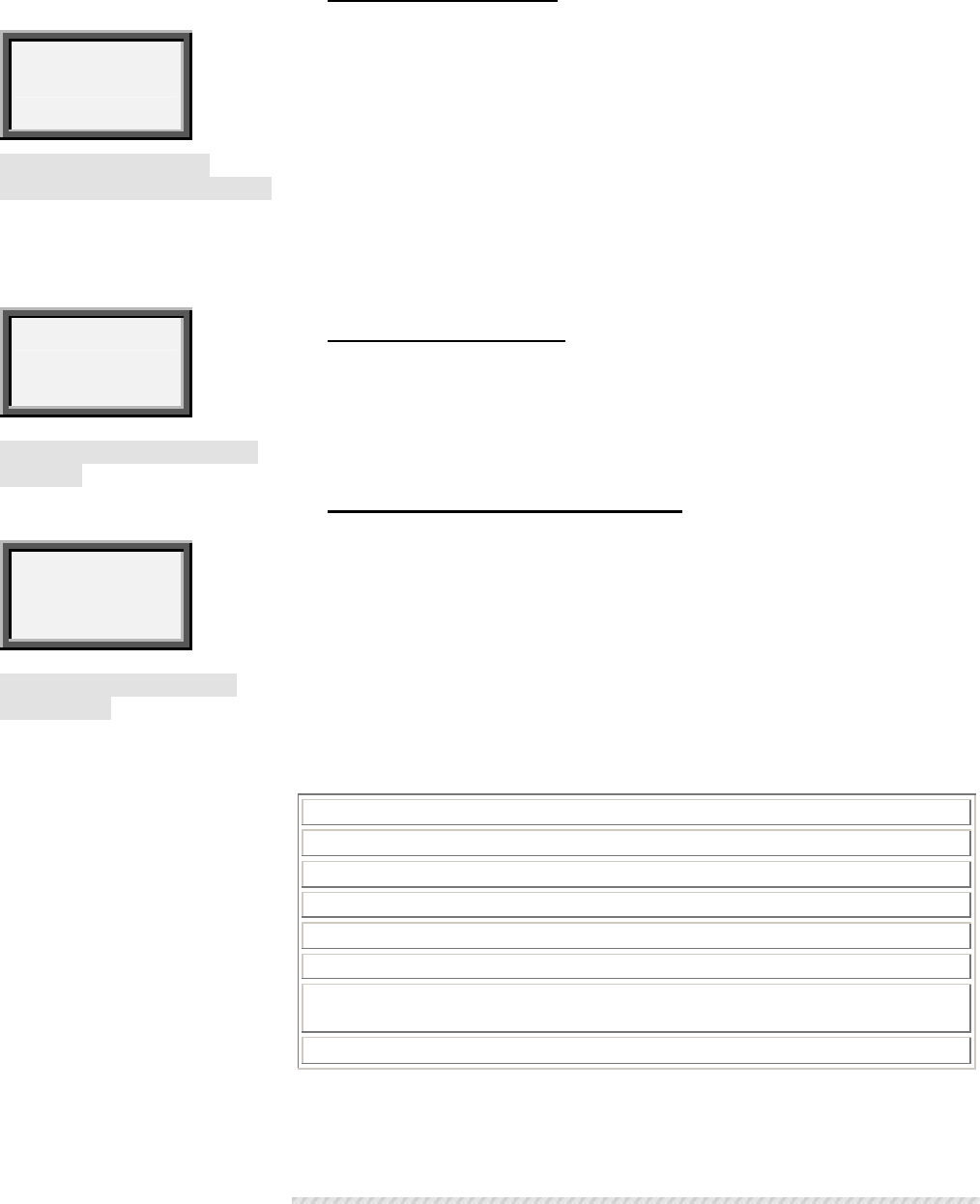

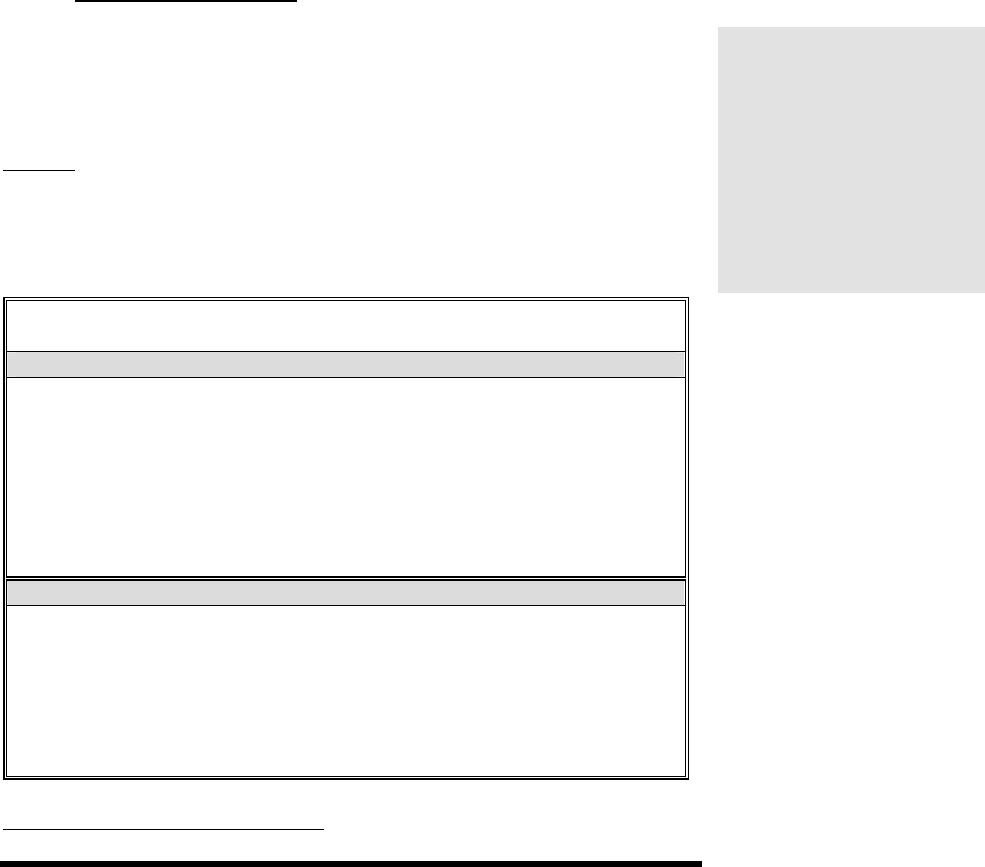

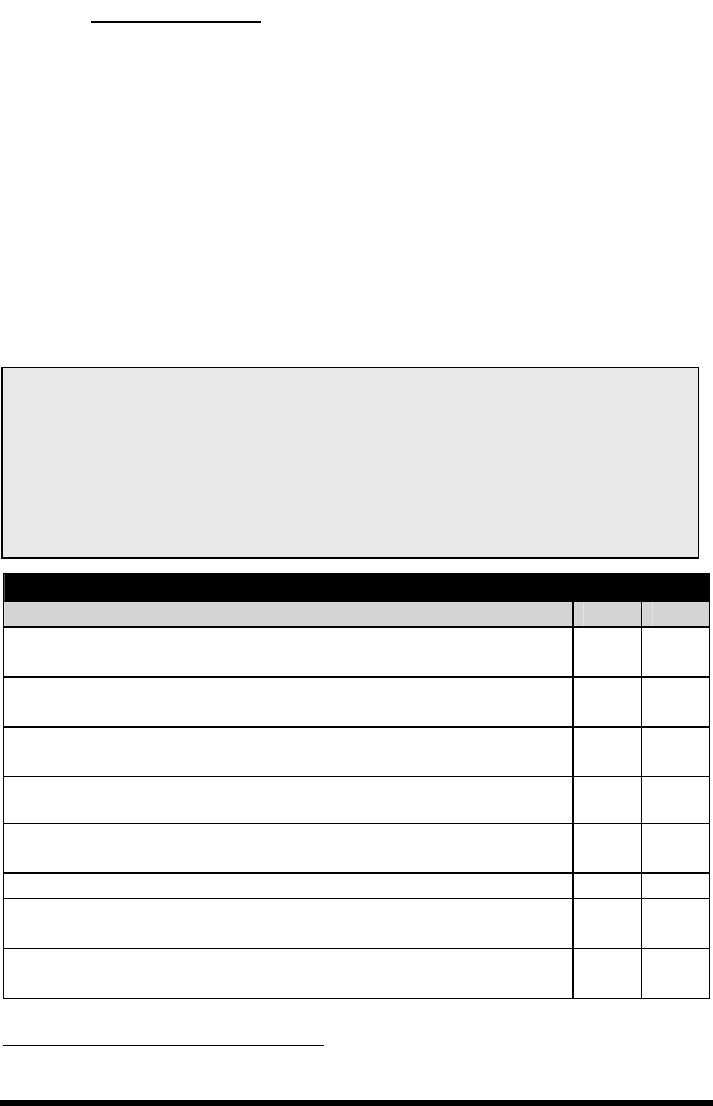

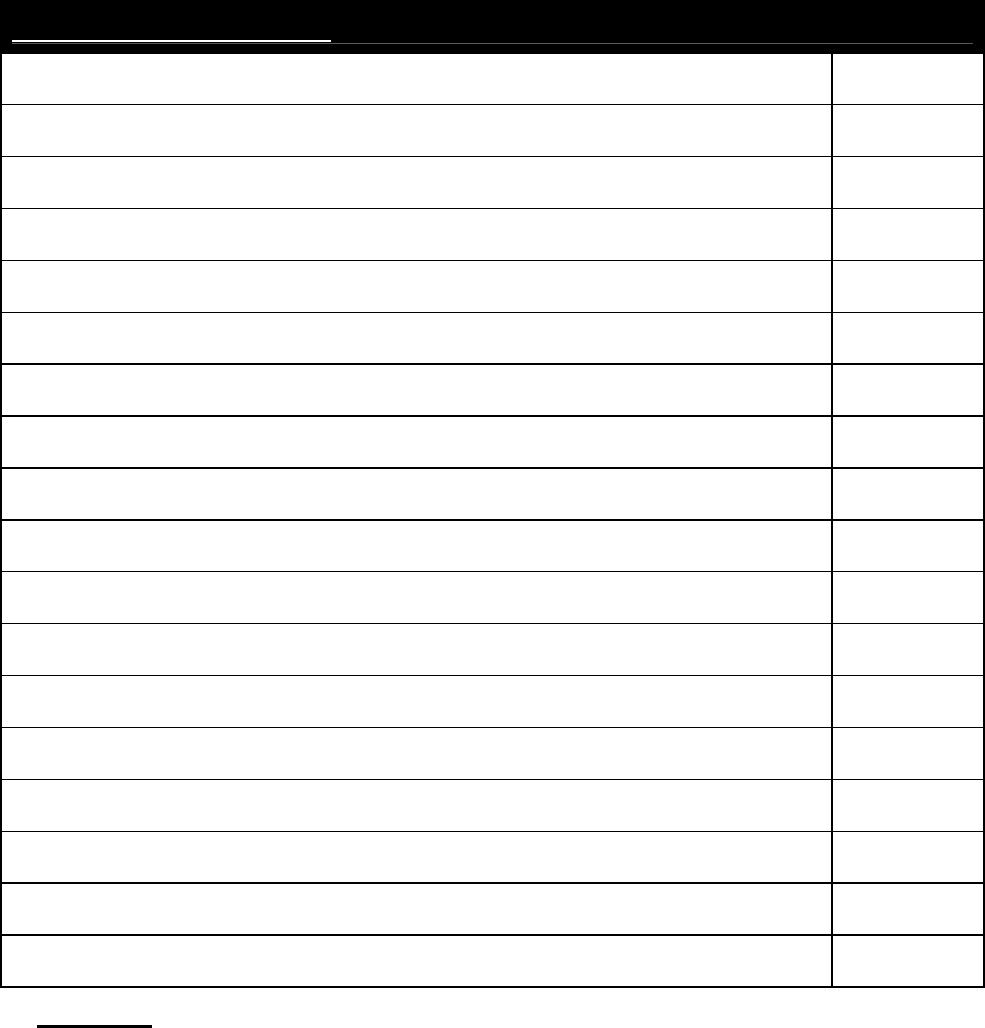

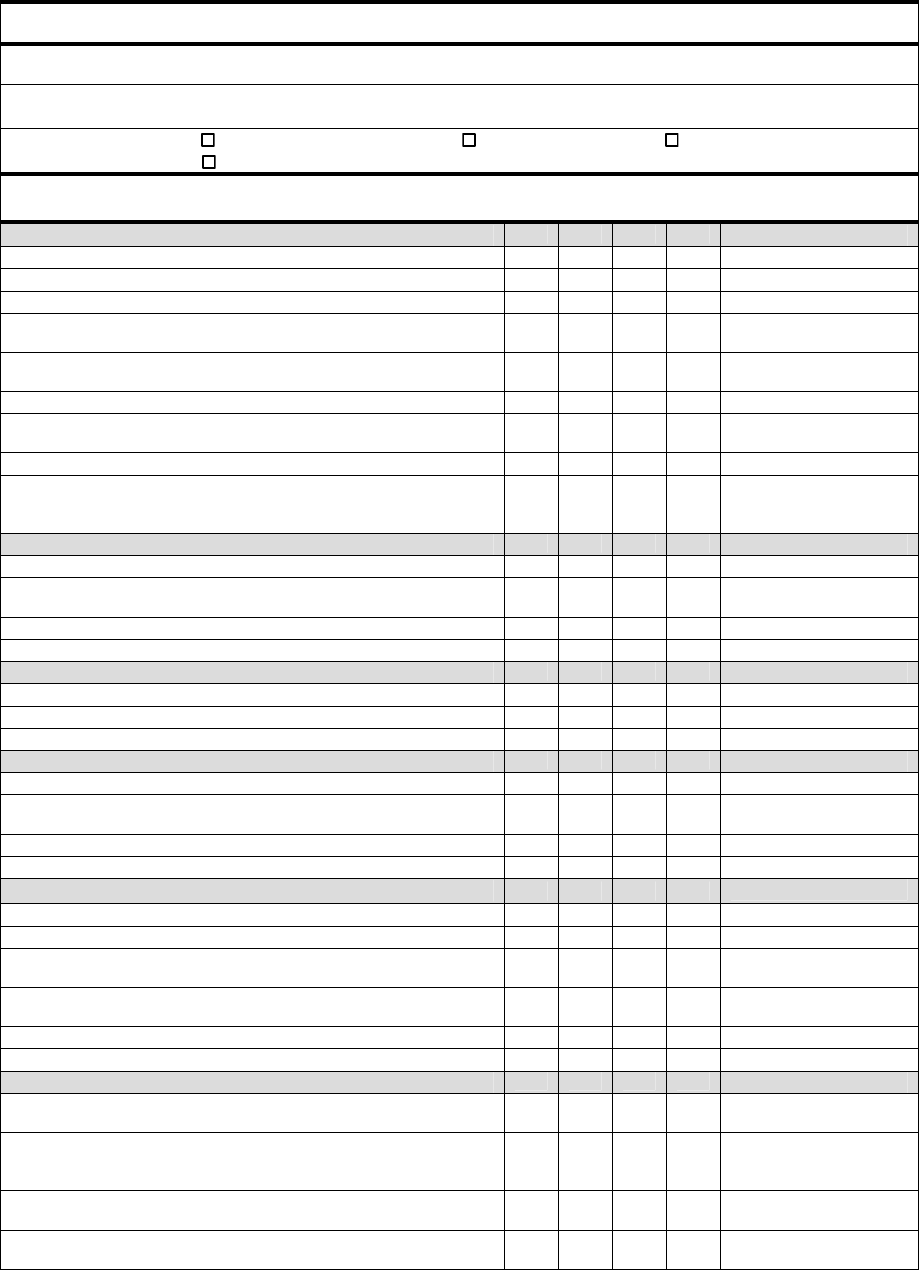

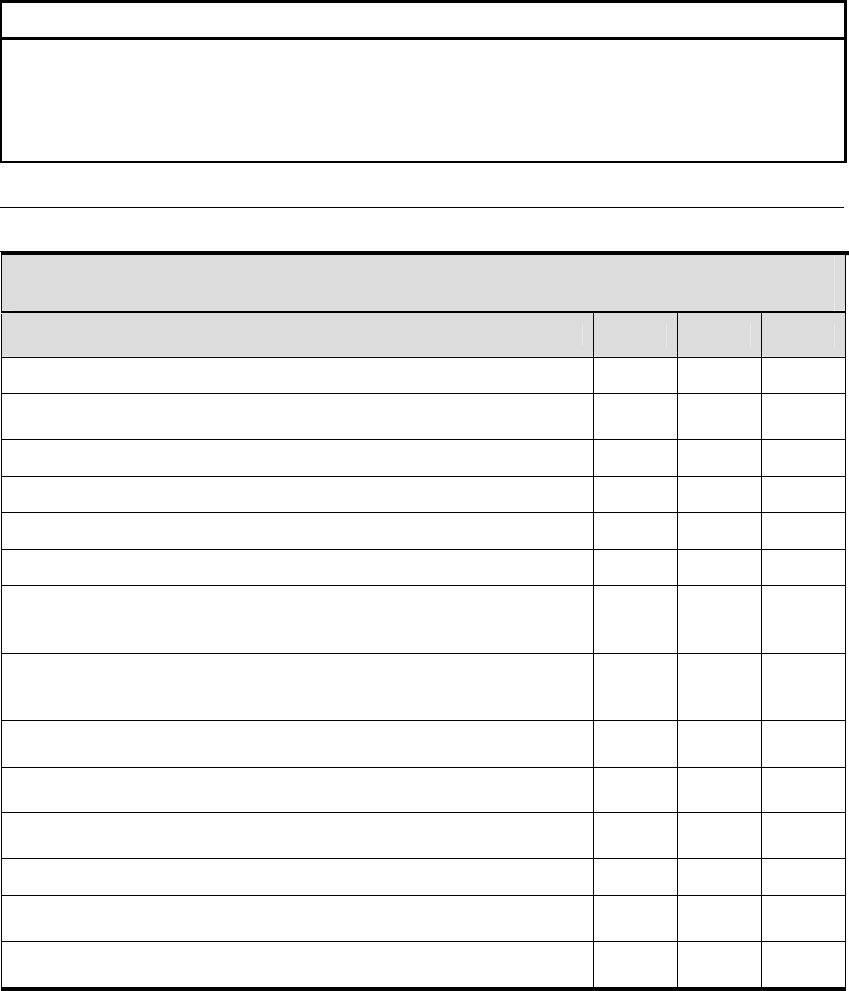

Figure 2-3 ITS Task List Extract

SUMMARY/INDEX OF INDIVIDUAL TRAINING STANDARDS

1. General

This enclosure contains a summary listing of all of the ITS tasks grouped by MOS and Duty Area.

2. Format

The columns are as follows:

a. SEQ

Sequence Number. This number dictates the order in which tasks for a given duty area are

displayed.

b. TASK

ITS Designator. This is the permanent designator assigned to the task when it is created.

c. TITLE

ITS Task Title.

d. CORE

An “X” appears in this column when the task is designated as a “core” task required to earn the

title United States Marine and Basic Rifleman.

e. FS/D

Formal School/Detachment. An “X” is in this column when the FS/D is designated as the initial

training setting.

f. PST

Performance Support Tool. An “X” in this column indicates that at least one PST is associated with

this task. Consult enclosure (6) for details.

g. DL

Distance Learning Product. An “X” in this column indicates that at least one DL product is associated

with this task. Consult enclosure (6) for details.

h. SUS

Sustainment Training Period. An entry in this column represents the number of months within which

the unit is expected to train or retrain this task to standard provided the task supports the unit’s METL.

i. REQ BY

Required By. An entry in this column depicts the lowest rank required to demonstrate

proficiency in this task.

j. PAGE

Page Number. This column lists the number of the page in enclosure (6) that contains detailed

information concerning this task.

SEQ TASK # TITLE CORE FS/D PST DL SUS REQ BY PAGE

MOS , MCCS, Marine Corps Common Skills

DUTY AREA 11 – INDIVIDUAL WEAPONS (IMCCS)

1) MCCS.11.01 PERFORM WEAPONS HANDLING WITH M16.....X X 12 Pvt 6-A-38

2) MCCS.11.02 MAINTAIN THE M16A2 SERVICE RIFLE X X 12 Pvt 6-A-38

3) MCCS.11.03 ENGAGE TARGETS WITH THE M16A2 SERVICE RIFLE AT THE SUSTAINED RATE X X 12 Pvt 6-A-39

Individual Training Standards Component Description

1. General ITS's contain six components: task, condition(s), standard(s),

performance steps, reference(s), and administrative instructions.

2. Alphanumeric System. Each ITS is identified by a designator consisting, in

order, of four alphanumeric characters, a period, two numbers, a period, and two

additional numbers.

a. The first four characters identify the job and should be the same as the

MOS if one exists. For the instructor, the job designator is 8806.

b. The two Arabic numerals following the first period represent a DUTY area

of the JOB. The designator for the first DUTY area under JOB 8806 is 8806.01.

c. The last two Arabic numerals within the designator represent a task within

the DUTY area. The first TASK under the first DUTY area of JOB 8806 is identified

as 8806.01.01. The second TASK under the third DUTY area of JOB 8806 is

designated as 8806.03.02, and so forth.
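
The JOB.DUTY.TASK layout described above can be checked mechanically. The following Python sketch is an illustration only, not an official tool. The names ItsDesignator and parse_its_designator are hypothetical, and the pattern assumes the job portion uses uppercase letters or digits, as in 8806 and MCCS.

```python
import re
from typing import NamedTuple


class ItsDesignator(NamedTuple):
    job: str   # four alphanumeric characters, e.g. "8806" or "MCCS"
    duty: str  # two digits identifying the duty area within the job
    task: str  # two digits identifying the task within the duty area

    @property
    def duty_designator(self) -> str:
        """Designator of the duty area alone, e.g. '8806.01'."""
        return f"{self.job}.{self.duty}"


# Four alphanumerics, a period, two digits, a period, two digits.
_ITS = re.compile(r"([A-Z0-9]{4})\.(\d{2})\.(\d{2})")


def parse_its_designator(text: str) -> ItsDesignator:
    """Split a designator such as '8806.03.02' into job, duty, and task."""
    match = _ITS.fullmatch(text.strip().upper())
    if match is None:
        raise ValueError(f"not a JOB.DUTY.TASK designator: {text!r}")
    return ItsDesignator(*match.groups())


second_task_third_duty = parse_its_designator("8806.03.02")
```

The duty and task fields are kept as strings so that leading zeros survive, which lets the original designator be rebuilt by joining the three fields with periods.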

3. ITS Components

a. Task The task describes what a Marine has to do. It is a clearly stated,

performance-oriented action requiring learned skills and knowledge. A rank

(grade) is noted for each task. This rank is the grade at which the Marine must

be able to perform that task to standard.

FIGURE 2-4 Individual Training Standard

FIGURE 2-4 (CONT) Components Description of an Individual Training Standard.

TASK: MCCS.11.02 (CORE). MAINTAIN THE M16A2 SERVICE RIFLE

CONDITION(S): Given an M16A2 Service Rifle, cleaning gear and lubricants.

STANDARD: To meet serviceability standards per the TM

PERFORMANCE STEPS:

1. Handle the weapon safely.

2. Place the rifle in Condition 4.

3. Disassemble the rifle.

4. Clean the rifle.

5. Lubricate the rifle.

6. Reassemble the rifle.

7. Perform function check.

INITIAL TRAINING SETTING: FS/D Sustainment (12) Req By (Pvt)

REFERENCE(S):

1. MCRP 3-01A, Rifle Marksmanship

2. TM 05538C-10/1A, Operator’s Manual for Rifle, 5.56mm M16A2 W/E.

ADMINISTRATIVE INSTRUCTIONS: NONE

Designator 8806.01.01: JOB (8806), DUTY (01), TASK (01)

b. Condition(s). The conditions set forth the real world or wartime

circumstances under which the tasks are to be performed. This element of an

ITS underscores "realism" in training. When resources or safety requirements

limit the conditions, this should be stated in Administrative Instructions. It is

important to understand that the conditions set forth in this Order are the

minimum and may be adjusted when applicable.

c. Standard(s). A standard states exactly the proficiency level to which

the task will be performed. It is not guidance, but a very carefully worded

statement, which sets the proficiency level required when the task is

performed. The standard is the established acceptable level of task

performance under the prescribed conditions.

d. Performance Steps. There must be at least two performance steps

for each task. Performance steps specify actions required to fulfill the

proficiency established by the standard. These performance steps indicate a

logical sequence of collective actions required to accomplish the standard.

e. Reference(s). Reference(s) are directives and doctrinal/technical

publications that specify, support, or clarify the performance steps. References

should be publications that are readily available.

f. Administrative Instructions. Administrative instructions provide the

trainer/instructor with special circumstances relating to the ITS such as safety

or real world limitations which may be a prerequisite to successful

accomplishment of the ITS.

g. Initial Training Setting. All ITS's are assigned an Initial Training

Setting that includes a specific location for initial instruction [Formal School

(FS) or Operating Forces], level of training required at that location

(Core/Core-Plus/MOJT), a sustainment factor (number of months between

evaluation or retraining to maintain the proficiency required by the standard),

and a “Required By” rank (the lowest rank at which task proficiency is

required).

h. Training Materiel (Optional). Training materiel includes all training

devices, simulators, aids, equipment, and materials [except ammunition and

Marine Corps Institute (MCI) publications] required or recommended to

properly train the task under the specified condition and to the specific

standard. Mandatory items are preceded with an asterisk (*).

i. Ammunition (Optional)

This table, if present, depicts ammunition,

explosives, and/or pyrotechnics required for proper training of the ITS.

j. Current MCI(s) (Optional)

This section includes a list of any

currently available MCI publications designed to provide training related to this

task.


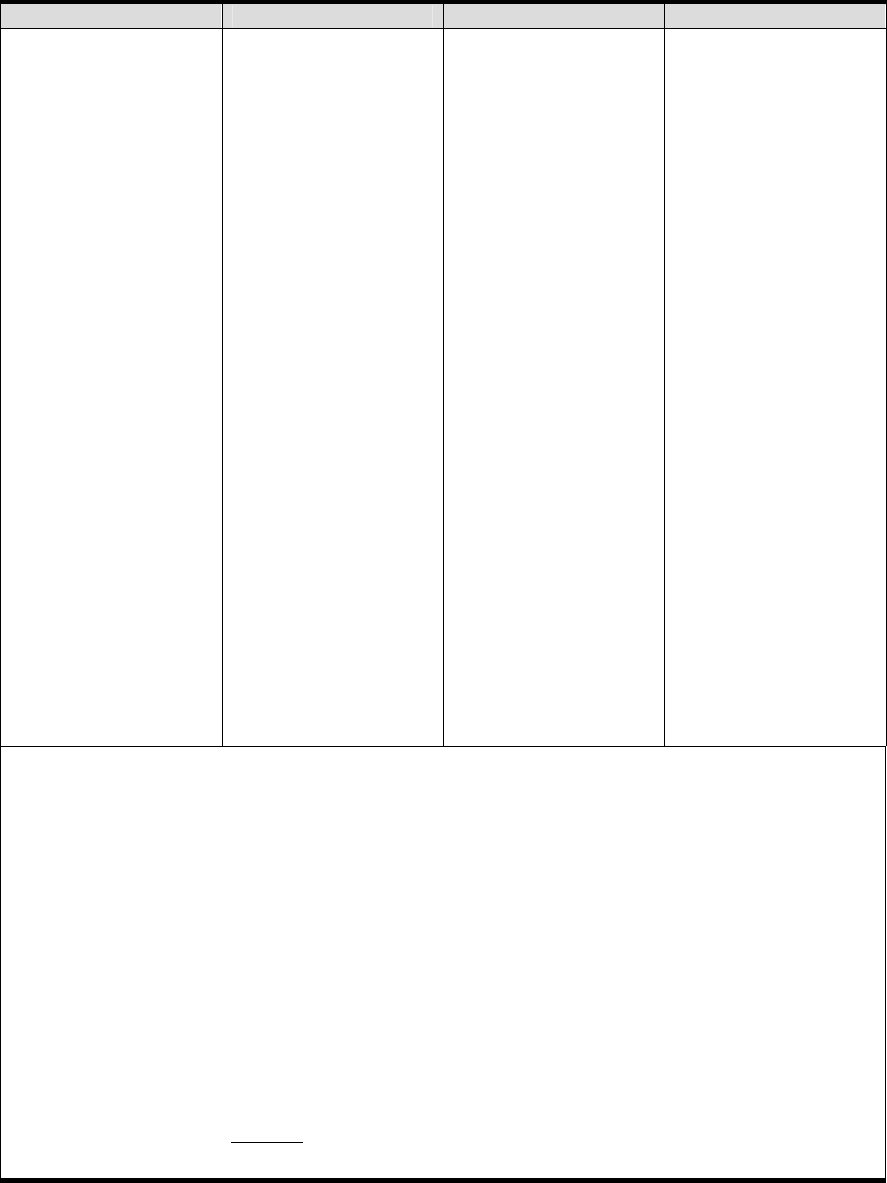

Figure 2-5 Components of a T&R Event

Training and Readiness Event Component Description

1. General

An event contained within a T&R Manual is an individual or collective

training standard and contains the following components.

a. Event Code The event code is a 4-character alphanumeric MOS

designator, followed by an alphanumeric functional area designator of up to 4

characters, followed by a 4-digit numeric sequence designator. The purpose of

coding events is to provide Marines with a simplified system for planning, tracking,

and recording individual and unit training accomplishments. Grouping and

sequencing individual skills and unit capabilities build a “picture” for the user

showing the progression of training.

Grouping: The code is used for grouping events according to their functional

area. Categorizing events with a recognizable code of up to 4 letters

makes the type of skill or capability being referenced fairly obvious. Examples

include DEF (defensive tactics), MAN (maneuver), NBC (nuclear, biological, and

chemical), RAD (Radar), etc.

Sequencing: The 4-digit code is used to arrange events in a progressive

sequence.
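The three-part structure of the event code can be illustrated with a short parsing sketch. The Python below is illustrative only: it assumes the three parts are separated by hyphens, as in the sample event 57XX-OPS-1010, and the names `EventCode`, `parse_event_code`, and `group_and_sequence` are hypothetical and not part of any T&R Manual or system.

```python
import re
from typing import NamedTuple


class EventCode(NamedTuple):
    """Hypothetical container for the three parts of a T&R event code."""
    mos: str              # 4-character alphanumeric MOS designator
    functional_area: str  # up to 4 alphanumeric characters, e.g. "OPS"
    sequence: str         # 4-digit sequence designator, kept as text


# Assumed layout: MOS-AREA-NNNN, with hyphens as separators.
_PATTERN = re.compile(r"([A-Z0-9]{4})-([A-Z0-9]{1,4})-([0-9]{4})")


def parse_event_code(code: str) -> EventCode:
    """Split an event code into its parts, or raise ValueError."""
    match = _PATTERN.fullmatch(code.strip().upper())
    if match is None:
        raise ValueError(f"not a recognizable event code: {code!r}")
    return EventCode(*match.groups())


def group_and_sequence(codes):
    """Group events by functional area, then order them by sequence number."""
    parsed = [parse_event_code(c) for c in codes]
    return sorted(parsed, key=lambda e: (e.functional_area, e.sequence))
```

Sorting on the functional area first and the sequence number second mirrors the grouping and sequencing purposes described above; any other ordering rule would be an equally valid choice for a local tracking tool.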

FIGURE 2-5 (CONT) Component Descriptions of a Training and Readiness Event.

Event: 57XX-OPS-1010

Description: Conduct operations in a chemically contaminated environment

Sust Int: 3 mths

Tasks:

1. Prepare for NBC operations.

2. Prepare for chemical attack.

3. React to chemical attack.

4. Prepare to cross a chemically contaminated area.

5. Cross a chemically contaminated area.

6. Decontaminate individual Marines.

7. Conduct hasty equipment decontamination.

8. Conduct MOPP gear exchange.

Condition: Under any terrain and weather condition, given normal individual and unit combat

equipment.

Standard: All personnel don MOPP gear and are ready to continue unit movement, combat

support or combat service support within 10 minutes of the alarm. Perform operation in MOPP

gear for a minimum of 30 minutes. Decontaminate all personnel and equipment within 2

hours.

Performance Steps: N/A

References: MCO 3400.3, FMFM 11-1, FMFM 11-10, FMFM 11-9

Ordnance: N/A

External Support: Movement range appropriate for unit size.


FIGURE 2-5 (CONT) Component Descriptions of a Training and Readiness Event.

b. Event Description Narrative description of the training event.

c. Tasks A listing of the component parts of the T&R Event. A task is

a unit of work, usually performed over a finite period of time, that has a

specific beginning and end, can be measured, and is a logical, necessary unit of

performance. In the T&R Program, a unit of work may actually be a T&R Event,

and may reappear in a higher-level event as a component part – a Task. There

is often more than one task for each training event. A 1000-level Event (to be

taught at the formal school) consists of a single task that is the Event Description

and will not normally have a listing of Tasks as shown here. A 1000-level event

will often have multiple performance steps, although they may not be listed in

the T&R Manual if an event’s reference contains the necessary performance

steps.

d. Condition Condition refers to the constraints that may affect task

performance in a real-world environment. It includes equipment, tools,

materials, and environmental and safety constraints pertaining to task

completion.

e. Standard The standard is the metric for evaluating the effectiveness of

the event performance. It identifies the proficiency level for the event

performance in terms of accuracy, speed, sequencing, and adherence to

procedural guidelines. It establishes the criteria of how well the event is to be

performed. It is not guidance; it states exactly the proficiency level to which the

task will be performed. Whenever possible, the standard should cite a reference

that defines the tasks in procedural or operational terms.

f. Performance Steps Performance steps specify the actions required to

accomplish a task. Performance steps follow a logical progression and should be

followed sequentially, unless otherwise stated. Normally, performance steps are

listed only for 1000-level individual events (those that are taught in the formal

MOS school). Listing performance steps is optional for 2000-level events and

above.

g. Prerequisite(s) Prerequisites are the listing of academic training

and/or T&R events that must be completed prior to attempting completion of the

event.

h. Reference(s) References are the listing of doctrinal or reference

publications that may assist the trainee in satisfying the performance standards

and the trainer in evaluating the performance of the event.

i. Ordnance Each event will contain a listing of ordnance types and

quantities required to complete the task.

j. External Support Requirements Each event will contain a listing of

the external support requirements needed for event completion (e.g., range,

support aircraft, targets, training devices, other personnel, and non-organic

equipment).

k. Combat Readiness Percentage (CRP) The CRP is a quantitative

numerical value used in calculating individual and collective training readiness.

The CRP of each event is determined by its relative importance to other events.

l. Sustainment Interval The number of months between evaluation or

retraining of the individual or collective event in order to maintain proficiency.
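As an illustration of how a sustainment interval expressed in months might be applied, the sketch below computes the date by which an event would next need to be evaluated or retrained. It is a minimal example under stated assumptions: the function names `add_months`, `sustainment_due`, and `is_current` are hypothetical, and the rule that proficiency lapses the day after the interval ends is an assumption made for the example, not a rule taken from the T&R Program.

```python
import calendar
from datetime import date


def add_months(start: date, months: int) -> date:
    """Return the date `months` calendar months after `start`.

    If the target month is shorter, the day is clamped to its last day.
    """
    total = start.year * 12 + (start.month - 1) + months
    year, month_index = divmod(total, 12)
    month = month_index + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def sustainment_due(last_evaluated: date, interval_months: int) -> date:
    """Date by which the event should be re-evaluated (assumed rule)."""
    return add_months(last_evaluated, interval_months)


def is_current(last_evaluated: date, interval_months: int, today: date) -> bool:
    """True while `today` is on or before the due date (assumed rule)."""
    return today <= sustainment_due(last_evaluated, interval_months)
```

For the sample event with a 3-month sustainment interval, an evaluation on 15 June 2004 would give a due date of 15 September 2004 under these assumptions.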


2. Determine Training Requirements Review the ITS order or T&R

manual to determine what tasks must be taught at the formal school/detachment.

a. For an ITS order, refer to enclosure three. Those tasks designated for

instruction at a formal school will have an alpha indicator in the column labeled “FS”.

This information is also spelled out for each task in Appendix A to Enclosure 6 of

the ITS order.

b. In a T&R manual, all tasks taught at the formal school for initial,

individual MOS training are listed at the 1000-level. For MOS progression training

conducted at the formal school, select events are identified in the manual.
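For a T&R manual, the 1000-level rule can be sketched as a simple filter over event codes. The example is illustrative only: it assumes hyphen-separated codes such as 57XX-OPS-1010 and assumes that "1000-level" means sequence designators 1000 through 1999. The function names are hypothetical. MOS progression events, which the manual identifies individually, are not captured by this filter.

```python
def is_1000_level(event_code: str) -> bool:
    """True if the 4-digit sequence designator falls in 1000-1999 (assumed)."""
    sequence = event_code.rsplit("-", 1)[-1]
    return len(sequence) == 4 and sequence.isdigit() and sequence[0] == "1"


def formal_school_events(event_codes):
    """Keep only the events assumed to be taught at the formal school."""
    return [code for code in event_codes if is_1000_level(code)]
```

Applied to a list such as `["57XX-OPS-1010", "57XX-OPS-2010"]`, the filter keeps only the first code; the second code is a made-up example of a higher-level event.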

In some cases, topics that need to be taught at a formal school/detachment will

not have corresponding tasks in the ITS order or T&R manual. To teach these

topics in a formal school/detachment, one of two courses of action must be

followed. The first course of action is to designate the lesson as "Lesson

Purpose"; it will not have learning objectives. Examples are a course overview or

an introduction to a series of tasks being instructed. Lesson purpose classes must

be kept to a minimum, because they use school resources (like time) without

directly supporting a given task. The other course of action is to contact the task

analyst at TECOM for further guidance. It is possible that the ITS order or T&R

manual needs a task added to it, and the analyst can provide authority to teach

until the revision is made.

3. Analyze the Target Population Description Before the knowledge,

skills, and attitudes (KSAs) are determined, the target population must be

analyzed. The Target Population Description (TPD) is analyzed so that the curriculum developer can make a

determination of the KSAs the students will bring into the instructional

environment. Instruction must capitalize on students’ strengths and focus on

those KSAs the students must develop or possess to perform satisfactorily on the

job. The goal is for the learning analysis to reveal the instructional needs of the

target population so that selected methods and media are appropriate for the

student audience.

4. Record Task Data Record the data found in the ITS order or T&R manual.

The LAW in Appendix A serves as a guide for what information to record. Record

the T&R Event or ITS Duty Description, T&R Event or ITS Duty Code, the task,

task code, and the conditions and standards associated with the task. Then

record each performance step. A good strategy to stay organized and focused is

to record only one performance step per page. It is also a good idea to fill out all

LAWs required for a learning analysis prior to beginning step 5.
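The recording strategy described in this step, one performance step per page with the shared task data repeated on each, can be sketched as a small data structure. This is an illustration only: the actual LAW layout is the one in Appendix A, and the class name `LawPage`, its field names, and the function `build_law_pages` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LawPage:
    """One hypothetical worksheet page: task data plus a single performance step."""
    description: str       # T&R Event or ITS Duty Description
    code: str              # T&R Event or ITS Duty Code
    task: str
    task_code: str
    condition: str
    standard: str
    performance_step: str
    ksas: List[str] = field(default_factory=list)  # left empty until step 5


def build_law_pages(description, code, task, task_code,
                    condition, standard, performance_steps):
    """Create one page per performance step, copying the shared task data."""
    return [
        LawPage(description, code, task, task_code,
                condition, standard, step)
        for step in performance_steps
    ]
```

Leaving the `ksas` list empty on every page reflects the suggestion to complete all LAWs before beginning step 5.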

5. Generate Knowledge, Skills, and Attitudes for each

Performance Step When generating knowledge, skills, and attitudes (KSA),

analyze each performance step and break it down into a list of KSAs required for

each student to perform that performance step. Consideration of the level of

detail needed, transfer of learning, target population, and school resources is

essential. The method used to identify KSAs is commonly called “brainstorming.”

Brainstorming is the process used for SMEs and curriculum developers to work

together to ensure that KSAs are generated for each performance step. In order

to do this, the differences between knowledge, skill, and attitude must be

identified:

KSA – Knowledge, Skill, Attitude.



KSAs are brainstormed and recorded with one object and one verb.

ELO - Enabling Learning Objective.