Privately Evaluating Decision Trees and Random Forests

(Extended Version)

David J. Wu

∗

Tony Feng

∗

Michael Naehrig

†

Kristin Lauter

†

Abstract

Decision trees and random forests are common classifiers with widespread use. In this paper,

we develop two protocols for privately evaluating decision trees and random forests. We operate

in the standard two-party setting where the server holds a model (either a tree or a forest),

and the client holds an input (a feature vector). At the conclusion of the protocol, the client

learns only the model’s output on its input and a few generic parameters concerning the model;

the server learns nothing. The first protocol we develop provides security against semi-honest

adversaries. We then give an extension of the semi-honest protocol that is robust against

malicious adversaries. We implement both protocols and show that both variants are able to

process trees with several hundred decision nodes in just a few seconds and a modest amount

of bandwidth. Compared to previous semi-honest protocols for private decision tree evaluation,

we demonstrate a tenfold improvement in computation and bandwidth.

1 Introduction

In recent years, machine learning has been successfully applied to many areas, such as spam clas-

sification, credit-risk assessment, cancer diagnosis, and more. With the transition towards cloud-

based computing, this has enabled many useful services for consumers. For example, there are

many companies that provide automatic medical assessments and risk profiles for various diseases

by evaluating a user’s responses to an online questionnaire, or by analyzing a user’s DNA profile.

In the personal finance area, there exist automatic tools and services that provide valuations for a

user’s car or property based on information the user provides. In most cases, these services require

access to the user’s information in the clear. Many of these situations involve potentially sensitive

information, such as a user’s medical or financial data. A natural question to ask is whether one

can take advantage of cloud-based machine learning, and still maintain the privacy of the user’s

data. On the flip side, in many situations, we also require privacy for the model. For example,

in scenarios where companies leverage learned models for providing product recommendations, the

details of the underlying model often constitute an integral part of the company’s “secret sauce,”

and thus, efforts are taken to guard the precise details. In other scenarios, the model might have

been trained on sensitive information such as the results from a medical study or patient records

from a hospital; here, revealing the model can compromise sensitive information as well as violate

certain laws and regulations.

∗

†

Microsoft Research - {mnaehrig, klauter}@microsoft.com.

1

In this work, we focus on one commonly used class of classifiers: decision trees and random

forests [43, 19]. Decision trees are simple classifiers that consist of a collection of decision nodes

arranged in a tree structure. As the name suggests, each decision node is associated with a pred-

icate or test on the query (for example, a possible predicate could be “age > 55”). Decision tree

evaluation simply corresponds to tree traversal. These models are often favored by users for their

ease of interpretability. In fact, there are numerous web APIs [2, 1] that enable users to both train

and query decision trees as part of a machine learning as a service platform. In spite of their simple

structure, decision trees are widely used in machine learning, and have been successfully applied to

many scenarios such as disease diagnosis [62, 5] and credit-risk assessment [49].

In this work, we develop practical protocols for private evaluation of decision trees and random

forests. In our setting, the server has a decision tree (or random forest) model and the client holds

an input to the model. Abstractly, our desired security property is that at the end of the protocol

execution, the server should not learn anything about the client’s input, and the client should not

learn anything about the server’s model other than what can be directly inferred from the output

of the model. This is a natural setting in cases where we are working with potentially sensitive

and private information on the client’s side and where we desire to protect the server’s model,

which might contain proprietary or confidential information. To motivate the need for privacy, we

highlight one such application of using decision trees for automatic medical diagnosis.

Application to medical diagnosis. Decision trees are used by physicians for both automatic

medical diagnosis and medical decision making [62, 5]. A possible deployment scenario is for a

hospital consortium or a government agency to provide automatic medical diagnosis services for

other physicians to use. To leverage such a service, a physician (or even a patient) would take a

set of measurements (as specified by the model) and submit those to the service for classification.

Of course, to avoid compromising the patient’s privacy, we require that at the end of the protocol,

the service does not learn anything about the client’s input. On the flip side, there is also a need

to protect the server’s model from the physician (or patient) that is querying the service. In recent

work, Fredrikson et al. [34] showed that white-box access to a decision tree model can be efficiently

exploited to compromise the privacy of the users whose data was used to train the decision tree.

In this case, this means that the medical details of the patients whose medical profiles were used

to develop the model are potentially compromised by revealing the model in the clear. Not only

is this a serious privacy concern, in the case of medical records, this can be a violation of HIPAA

regulations. Thus, in this scenario, it is critical to provide privacy for both the input to the

classifier, as well as the internal details of the classifier itself. We note that even though black-box

access to a model can still be problematic, combining our private model evaluation protocol with

“privacy-aware” decision tree training algorithms [34, §6] can significantly mitigate this risk.

1.1 Our Contributions

We begin by constructing a decision tree evaluation protocol with security against semi-honest

adversaries (i.e., adversaries that behave according to the protocol specification). We then show how

to extend the semi-honest protocol to provide robustness against malicious adversaries. Specifically,

we show that a malicious client cannot learn additional information about the server’s model, and

that a malicious server cannot learn anything about the client’s input. Note that it is possible

for a malicious server to cause the client to obtain a corrupted or wrong output; however, even

in this case, it does not learn anything about the client’s input. This model is well-suited for

2

cloud-based applications where we assume the server is trying to provide a useful service, and thus,

not incentivized to give corrupt or nonsensical output to the client. In fact, because the server has

absolute control over the model in the private decision tree evaluation setting, privacy of the client’s

input is the strongest property we can hope for in the presence of a malicious server. We describe our

threat model formally in Section 2.3. Our protocols leverage two standard cryptographic primitives:

additive homomorphic encryption and oblivious transfer.

As part of our construction for malicious security, we show how a standard comparison protocol

based on additively homomorphic encryption [28] can be used to obtain an efficient conditional

oblivious transfer protocol [11, 27] for the less-than predicate.

To assess the practicality of our protocols, we implement both the semi-honest protocol as well

as the extended protocol with protection against malicious adversaries using standard libraries. We

conduct experiments with decision trees with depth up to 20, as well as decision trees with over

10,000 decision nodes to assess the scalability of our protocols. We also compare the performance

of our semi-honest secure protocol against the protocols of [17, 7, 20], and demonstrate over 10x

reduction in client computation and bandwidth, or both, while operating at a higher security level

(128 bits of security as opposed to 80 bits of security in past works). We conclude our experimental

analysis by evaluating our protocols on decision trees trained on several real datasets from the

UCI repository [6]. In most cases, our semi-honest decision tree protocol completes on the order

of seconds and requires bandwidth ranging from under 100 KB to several MB. This represents

reasonable performance for a cloud-based service.

This work provides the first implementation of a private decision tree evaluation protocol with

security against malicious adversaries. In our benchmarks, we additionally show that even with

the extensions for malicious security, our protocol still outperforms existing protocols that achieve

only semi-honest security.

Related work. This problem of privately evaluating decision trees falls under the general um-

brella of multiparty computation. One approach is based on homomorphic encryption [35, 59],

where the client sends the server an encryption of its input, and the server evaluates the function

homomorphically and sends the encrypted response back to the client. The client decrypts to learn

the output. While these methods have been successfully applied to several problems in privacy-

preserving data mining [39, 16], the methods are limited to simple functionalities. Another general

approach is based on Yao’s garbled circuits [63, 53, 51, 10, 9], where one party prepares a garbled

circuit representing the joint function they want to compute and the other party evaluates the cir-

cuit. These methods typically have large communication costs; we provide some concrete estimates

based on state-of-the-art tools in Section 6. We survey additional related work in Section 7.

2 Preliminaries

We begin with some notation. Let [n] be the set of integers {1, . . . , n}, and Z

p

be the ring of

integers modulo p. For two k-bit strings x, y ∈ {0, 1}

k

, we write x ⊕ y for their bitwise xor. For a

distribution D, we write x ← D to denote a sample s from D. For a finite set S, we write x

r

←− S

to denote a uniform draw x from S. We say that two distributions D

1

and D

2

are computationally

indistinguishable (denoted D

1

c

≈ D

2

) if no efficient (that is, probabilistic polynomial time) algorithm

can distinguish them except with negligible probability. We write D

1

s

≈ D

2

to denote that the two

3

distributions D

1

and D

2

are statistically close. A function f(λ) is negligible in a parameter λ if for

all positive integers c, f = o(1/λ

c

). For a predicate P we write 1 {P(x)} to denote the indicator

function for the predicate P—that is, 1 {P(x)} = 1 if and only if P(x) holds, and 0 otherwise.

2.1 Cryptographic Primitives

In this section, we introduce the primitives we require.

Homomorphic encryption. A semantically secure public-key encryption system with message

space R (we model R as a ring) is specified by three algorithms KeyGen, Enc

pk

, Dec

sk

(for key

generation, encryption, decryption, respectively). The key-generation algorithm outputs a public-

private key pair (pk, sk). For a message m, we write Enc

pk

(m; r) to denote an encryption of m

with randomness r. The security requirement is the standard notion of semantic security [37].

In an additively homomorphic encryption [59, 28, 29] system, we require an additional public-key

operation that takes encryptions of two messages m

0

, m

1

and outputs an encryption of m

0

+ m

1

.

Additionally, we require that the scheme supports scalar multiplication: given an encryption of

m ∈ R, there is a public-key operation that produces an encryption of km for all k ∈ Z.

Oblivious Transfer. Oblivious transfer (OT) [60, 56, 57, 4] is a primitive commonly employed

in cryptographic protocols. In standard 1-out-of-n OT, there are two parties, denoted the sender

and the receiver. The sender holds a database x

1

, . . . , x

n

∈ {0, 1}

`

and the client holds a selection

bit i ∈ [n]. At the end of the protocol, the client learns x

i

and nothing else about the contents of

the database; the server learns nothing.

2.2 Decision Trees and Random Forests

Decision trees are frequently encountered in machine learning and can be used for classification

and regression. A decision tree T : Z

n

→ Z implements a function on an n-dimensional feature

space (the feature space is typically R

n

, so we use a fixed-point encoding of the values). We refer to

elements x ∈ Z

n

as feature vectors. Each internal node v

k

in the tree is associated with a Boolean

function f

k

(x) = 1 {x

i

k

< t

k

}, where i

k

∈ [n] is an index into a feature vector x ∈ Z

n

, and t

k

is

a threshold. Each leaf node ` is associated with an output value z

`

. To evaluate the decision tree

on an input x ∈ Z

n

, we start at the root node, and at each internal node v

k

, we evaluate f

k

(x).

Depending on whether f

k

(x) evaluates to 0 or 1, we take either the left or right branch of the tree.

We repeat this process until we reach a leaf node `. The output T (x) is the value z

`

of the leaf

node.

The depth of a decision tree is the length of the longest path from the root to a leaf. The i

th

layer of the tree is the set of nodes of distance exactly i from the root. A binary tree with depth d

is complete if for 0 ≤ i ≤ d, the i

th

layer contains exactly 2

i

nodes.

Complete binary trees. In general, decision trees need not be binary or complete. However,

all decision trees can be transformed into a complete binary decision tree by increasing the depth

of the tree and introducing “dummy” internal nodes. In particular, all leaves in the subtree of a

dummy internal node have the same value. We associate each dummy node with the trivial Boolean

function f(x) = 0. Without loss of generality, we only consider complete binary decision trees in

this work.

4

Node indices. We use the following indexing scheme to refer to nodes in a complete binary

tree. Let T be a decision tree with depth d. Set v

1

to be the root node. We label the remaining

nodes inductively: if v

i

is an internal node, let v

2i

be its left child and v

2i+1

be its right child. For

convenience, we also define a separate index from 0 to 2

d

− 1 for the leaf nodes. Specifically, if v

i

is

the parent of a leaf node, then we denote its left and right children by z

2i−m−1

and z

2i−m

, where

m is the number of internal nodes in T . With this indexing scheme, the leaves of the tree, when

read from left-to-right, correspond with the ordering z

0

, . . . , z

2

d

−1

.

Paths in a binary tree. We associate paths in a complete binary tree with bit strings. Specifi-

cally, let T be a complete binary tree with depth d. We specify a path by a bit string b = b

1

· · · b

d

∈

{0, 1}

d

, where b

i

denotes whether we visit the left child or the right child when we are at a node at

level i − 1. Starting at the root node (level 0), and traversing according to the bits b, this process

uniquely defines a path in T . We refer to this path as the path induced by b in T .

Similarly, we define the notion of a decision string for an input x on a tree T . Let m be the

number of internal nodes in a complete binary tree T . The decision string is the concatenation

f

1

(x) · · · f

m

(x) of the value of each predicate f

i

on the input x. Thus, the decision string encodes

information regarding which path the evaluation would have taken at every internal node, and

thus, uniquely identifies the evaluation path of x in T . Thus, we also refer to the path induced

by a decision string s in T . In specifying the path s, the decision string also specifies the index

of the leaf node at the end of the path. We let φ : {0, 1}

m

→ {0, . . . , m} be the function that

maps a decision string s for a complete binary tree with m decision nodes onto the index of the

corresponding leaf node in the path induced by s in T .

Random forests. One way to improve the performance of decision tree classifiers is to combine

responses from many decision trees. In a random forest [19], we train many decision trees, where

each tree is trained using a random subset of the features. This has the effect of decorrelating the

individual trees in the forest. More concretely, we can describe a random forest F by an ensemble of

decision trees F = {T

i

}

i∈[n]

. If the random forest operates by computing the mean of the individual

decision tree outputs, then F(x) =

1

n

P

i∈[n]

T

i

(x).

2.3 Security Model

Our security definitions follow the real-world/ideal-world paradigm of [36, 22, 23, 44]. Specifically,

we compare the protocol execution in the real world, where the parties interact according to the

protocol specification π, to an execution in an ideal world, where the parties have access to a trusted

party that evaluates the decision tree. Similar to [22], we view the protocol execution as occurring

in the presence of an adversary A and coordinated by an environment E = {E

λ

}

λ∈N

(modeled as

a family of polynomial-size circuits parameterized by a security parameter λ). The environment

chooses the inputs to the protocol execution and plays the role of distinguisher between the real

and ideal experiments.

Leakage and public parameters. Our protocols reveal several meta-parameters about the

decision tree: a bound d on the depth of the tree, the dimension n of a feature vector, and a

bound t on the number of bits needed to represent each component of the feature vector. For the

semi-honest protocol, there is a performance-privacy trade-off where the protocol also reveals the

5

number ` of non-dummy internal nodes in the tree. In our security analysis, we assume that these

parameters are public and known to the client and server.

Real model of execution. In the real-world, the protocol execution proceeds as follows:

1. Inputs: The environment E chooses a feature vector x ∈ Z

n

for the client and a decision

tree T for the server. Each component in x is represented by at most t bits, and the tree T

has depth at most d. In the semi-honest setting where the number ` of non-dummy internal

nodes in T is public, we impose the additional requirement that T has ` non-dummy internal

nodes. The environment gives the input of the corrupted party to the adversary.

2. Protocol Evaluation: The parties begin executing the protocol. All honest parties behave

according to the protocol specification π. The adversary A has full control over the behavior

of the corrupted party and sees all messages received by the corrupted party. If A is semi-

honest, then A directs the corrupted party to follow the protocol as specified.

3. Output: The honest party computes and gives its output to the environment E. The adver-

sary computes a function of its view and gives it to E.

At the end of the protocol execution, the environment E outputs a bit b ∈ {0, 1}. Let REAL

π,A,E

(λ)

be the random variable corresponding to the value of this bit.

Ideal model of execution. In the ideal-world execution, the parties have access to a trusted

third party (TTP) that evaluates the decision tree. We now describe the ideal-world execution:

1. Inputs: Same as in the real model of execution.

2. Submission to Trusted Party: If a party is honest, it gives its input to the trusted party.

If a party is corrupt, then it can send any input of its choosing to the trusted party, as directed

by A. If A is semi-honest, it submits the input it received from the environment to the TTP.

3. Response from Trusted Party: On input x and T from the client and server, respectively,

the TTP computes and gives T (x) to the client.

4. Output: An honest party gives the message (if any) it received from the TTP to E. The

adversary computes a function of its view of the protocol execution and gives it to E.

At the end of the protocol execution, the environment E outputs a bit b ∈ {0, 1}. Let IDEAL

A,E

(λ)

be the random variable corresponding to the value of this bit. Informally, we say that a two-

party protocol π is a secure decision tree evaluation protocol if for all efficient adversaries A in the

real world, there exists an adversary S in the ideal world (sometimes referred to as a simulator )

such that the outputs of the protocol executions in the real and ideal worlds are computationally

indistinguishable. More formally, we have the following:

Definition 2.1 (Security). Let π be a two-party protocol. Then, π securely evaluates the decision

tree functionality in the presence of malicious (resp., semi-honest) adversaries if for all efficient

adversaries (resp., semi-honest adversaries) A, there exists an efficient adversary (resp., semi-honest

adversary) S such that for every polynomial-size circuit family E = {E

λ

}

λ∈N

,

REAL

π,A,E

(λ)

c

≈ IDEAL

S,E

(λ).

6

In this work, we also consider the weaker notion of privacy, which captures the notion that an

adversary does not learn anything about the inputs of the other parties beyond what is explicitly

leaked by the computation and its inputs/outputs. We use the definitions from [46]. Specifically,

define the random variable REAL

0

π,A,E

(λ) exactly as REAL

π,A,E

(λ), except in the final step of the

protocol execution, the environment E only receives the output from the adversary (and not the

output from the honest party). Define IDEAL

0

A,E

(λ) similarly. Then, we can define the notion for

a two-party protocol to privately compute a functionality f:

Definition 2.2 (Privacy). Let π be a two-party protocol. Then, π privately computes the decision

tree functionality in the presence of malicious (resp., semi-honest) adversaries if for all efficient

adversaries (resp., semi-honest adversaries) A, there exists an efficient adversary (resp., semi-honest

adversary) S such that for every polynomial-size circuit family E = {E

λ

}

λ∈N

,

REAL

0

π,A,E

(λ)

c

≈ IDEAL

0

S,E

(λ).

3 Semi-honest Protocol

In this section, we describe our two-party protocol for privately evaluating decision trees in the

semi-honest model. We show how to generalize these protocols to random forests in Section 5. In

our scenarios, we assume the client holds a feature vector and the server holds a model (either

a decision tree or a random forest). The protocol we describe is secure assuming a semantically

secure additively homomorphic encryption scheme and a semi-honest secure OT protocol.

3.1 Setup

We make the following assumptions about our model:

• The client has a well-formed public-private key-pair for an additively homomorphic encryption

scheme.

• The client’s private data consists of a feature vector x = (x

1

, . . . , x

n

) ∈ Z

n

, where x

i

≥ 0 for

all i. Let t be the bit-length of each entry in the feature vector.

• The server holds a complete binary decision tree T with m (possibly dummy) internal nodes.

Leakage. As noted in Section 2.3, we assume that the dimension n, the precision t, the depth

d of the decision tree and the number ` of non-dummy internal nodes are public in the protocol

execution.

3.2 Building Blocks

In this section, we describe the construction of our decision tree evaluation protocol in the semi-

honest setting. Before presenting its full details (Figure 1), we provide a high level survey of our

methods. As stated in Section 3.1, the decision trees we consider have a very simple structure

known to the client: a complete binary tree T with depth d. Let z

0

, . . . , z

2

d

−1

be the leaf values of

T . Suppose also that we allow the client to learn the index i ∈

0, . . . , 2

d

− 1

of the leaf node in

the path induced in T by x. If the client knew the index i, it can then privately obtain the value

7

z

i

= T (x) by engaging in a 1-out-of-2

d

OT with the server. In this case, the server’s “database” is

the set

z

0

, . . . , z

2

d

−1

.

The problem with this scheme is that revealing the index of the leaf node to the client reveals

information about the structure of the tree. We address this by having the server first permute

the nodes of the tree. After this randomization process, we can show that the decision string

corresponding to the client’s query is uniform over all bit strings with length 2

d

− 1. Thus, it is

acceptable for the client to learn the decision string corresponding to its input on the permuted

tree. The basic idea for our semi-honest secure decision tree evaluation protocol is thus as follows:

1. The server randomly permutes the tree T to obtain an equivalent tree T

0

.

2. The client and server engage in a comparison protocol for each decision node in T

0

. At the

end of this phase, the client learns the result of each comparison in T

0

, and therefore, the

decision string corresponding to its input in T

0

.

3. Using the decision string, the client determines the index i that contains its value z

i

= T (x).

The client engages in an OT protocol with the server to obtain the value z

i

.

Comparison protocol. The primary building block we require for private decision tree evalua-

tion is a comparison protocol. We use a variant of the two-round comparison protocol from [28, 32,

17] based on additive homomorphic encryption. In the protocol, the client and server each have a

value x and y, respectively. At the end of the protocol, the client and server each possess a share

of the comparison bit 1 {x < y}. Neither party learns anything else about the other party’s input.

We give a high-level sketch of the protocol. Suppose the binary representations of x and y

are x

1

x

2

· · · x

t

and y

1

y

2

· · · y

t

, respectively. Then, x < y if and only if there exists some index

i ∈ [t] where x

i

< y

i

, and for all j < i, x

j

= y

j

. As observed in [28], this latter condition is

equivalent to there existing an index i such that z

i

= x

i

− y

i

+ 1 + 3

P

j<i

(x

j

⊕ y

j

) = 0. In the

basic comparison protocol, the client encrypts each bit of its input x

1

, . . . , x

t

using an additively

homomorphic encryption scheme (with plaintext space Z

p

). The server homomorphically computes

encryptions of r

1

z

1

, . . . , r

t

z

t

where r

1

, . . . , r

t

r

←− Z

p

, and sends the ciphertexts back to the client in

random order. To learn if x < y, the client checks whether any of the ciphertexts decrypt to 0.

Note that if z

i

6= 0, then the value r

i

z

i

is uniformly random in Z

p

. Thus, depending on the value

of the comparison bit, the client’s view either consists of t encryptions of random nonzero values,

or t − 1 encryptions of random nonzero values and one encryption of 0.

For our decision tree evaluation protocol, we do not reveal the actual comparison bit to the

client. Instead, we secret share the comparison bit across the client and server. This is achieved by

having the server “flip” the direction of each comparison with probability 1/2. At the beginning

of the protocol, the server chooses b

r

←− {0, 1}, sets γ = 1 − 2 · b, and computes encryptions of

z

i

= x

i

− y

i

+ γ + 3

P

j<i

(x

j

⊕ y

j

). Let b

0

= 1 if there is some i ∈ [t] where z

i

= 0. Then,

b ⊕ b

0

= 1 {x < y}.

Decision tree randomization. As mentioned at the beginning of Section 3.2, we apply a tree

randomization procedure to hide the structure of the tree. For each decision node v, we interchange

its left and right subtrees with equal probability. Moreover, to preserve correctness, if we inter-

changed the left and right subtrees of v, we replace the boolean function f

v

at v with its negation

8

˜

f

v

(x) := f

v

(x) ⊕ 1. More precisely, on input a decision tree T with m internal nodes v

1

, . . . , v

m

, we

construct a permuted tree T

0

as follows:

1. Initialize T

0

← T and choose s

r

←− {0, 1}

m

. Let v

0

1

, . . . , v

0

m

denote the internal nodes of T

0

and let f

0

1

, . . . , f

0

m

be the corresponding boolean functions.

2. For i ∈ [m], set f

0

i

(x) ← f

i

(x) ⊕ s

i

. If s

i

= 1, then swap the left and right subtrees of v

0

i

. Do

not reindex the nodes of T

0

during this step.

3. Reindex the nodes v

0

1

, . . . , v

0

m

in T

0

according to the standard indexing scheme described in

Section 2.2. Output the permuted tree T

0

.

In the above procedure, we obtain a new tree T

0

by permuting the nodes of T according to a

bit-string s ∈ {0, 1}

m

. We denote this process by T

0

← π

s

(T ). By construction, for all x ∈ Z

n

and all s ∈ {0, 1}

m

, we have that T (x) = π

s

(T )(x). Moreover, we define the permutation τ

s

that

corresponds to the permutation on the nodes of T effected by π

s

. In other words, the node indexed

i in T is indexed τ(i) in T

0

. Then, if σ ∈ {0, 1}

m

is the decision string of T on input x, τ(σ ⊕ s) is

the decision string of π

s

(T ) on x.

3.3 Semi-honest Decision Tree Evaluation

The protocol for evaluating a decision tree with security against semi-honest adversaries is given

in Figure 1. Just to reiterate, in the first part of the protocol (Steps 1-4), the client and server

participate in an interactive comparison protocol that ultimately reveals to the client a decision

string for a permuted tree. Given the decision string, the client obtains the response via an OT

protocol. We show that this protocol is correct and state the corresponding security theorem. We

give the formal security proof in Appendix A.

Theorem 3.1. If the client and server follow the protocol in Figure 1, then at the end of the

protocol, the client learns T (x).

Proof. Appealing to the analysis of the comparison protocol from [28, §4.1], we have that for all

k ∈ [`], b

k

⊕ b

0

k

= 1(x

i

k

≤ t

k

) = f

q

k

(x). Since f

v

(x) = 0 for the dummy nodes v, we conclude that σ

is the decision string of x on T . Then, as noted in Section 3.2, τ

s

(σ ⊕ s) = σ

0

is the corresponding

decision string of x on π

s

(T ) = T

0

. By correctness of the OT protocol, the client learns the value

of z

0

φ(σ

0

)

= T

0

(x) at the end of Step 5. Since the tree randomization process preserves the function,

it follows that z

0

φ(σ

0

)

= T

0

(x) = T (x).

Theorem 3.2. The protocol in Figure 1 is a decision tree evaluation protocol with security against

semi-honest adversaries (Definition 2.1).

Asymptotic analysis. We now briefly characterize the asymptotic performance of the protocol

in Figure 1. In our analysis, we only count the number of cryptographic operations the client and

server perform. Let d be the depth of the tree, n be the dimension of the feature space, ` be

the number of non-dummy internal nodes, and t be the precision (the number of bits needed to

represent each component of the feature vector).

For the client, encrypting the feature vector requires O(nt) public-key operations; processing the

comparisons require O(`t) operations; computing the leaf node and the OT require O(d) operations.

9

Let (pk, sk) be a public-private key-pair for an additively homomorphic encryption scheme over Z

p

. The client

holds the secret key sk. Fix a precision t ≤ blog

2

pc.

• Client input: A feature vector x ∈ Z

n

p

where each x

i

is at most t bits. Let x

i,j

denote the j

th

bit of x

i

.

• Server input: A complete, binary decision tree T with m internal nodes. Let q

1

, . . . , q

`

be the indices of the

non-dummy nodes, and let f

q

k

(x) = 1 {x

i

k

≤ t

k

}, where i

k

∈ [n] and t

k

∈ Z

p

. For the dummy nodes v, set

f

v

(x) = 0. Let z

0

, . . . , z

m

∈ {0, 1}

∗

be the values of the leaves of T . See Section 3.1 for more details.

1. Client: For each i ∈ [n] and j ∈ [t], compute and send Enc

pk

(x

i,j

) to the server.

2. Server: The server chooses b

r

←− {0, 1}

`

. Then, for each k ∈ [`], set γ

k

= 1 − 2 · b

k

. For each k ∈ [`] and

j ∈ [t], choose r

k,j

r

←− Z

∗

p

and homomorphically compute the ciphertext

ct

k,j

= Enc

pk

"

r

k,j

x

i

k

,w

− t

k,w

+ γ

k

+ 3 ·

X

w<j

(x

i

k

,w

⊕ t

k,w

)

!#

. (1)

For each k ∈ [`], the server sends the client the ciphertexts (ct

k,1

, . . . , ct

k,t

) in random order. Note that the

server is able to homomorphically compute ct

k,j

for all k ∈ [`] and j ∈ [t] because it has the encryptions of

x

i

k

and the plaintext values of r

k,j

, γ

k

, and t

k

. To evaluate the xor, the server uses the fact that for a bit

x ∈ {0, 1}, x ⊕ 0 = x and x ⊕ 1 = 1 − x. Since the server knows the value of t

k

in the clear, this computation

only requires additive homomorphism.

3. Client: The client obtains a set of ` tuples of the form (

e

ct

k,1

, . . . ,

e

ct

k,t

) from the server. For each k ∈ [`], it

sets b

0

k

= 1 if there exists j ∈ [t] such that

˜

ct

k,j

is an encryption of 0. Otherwise, it sets b

0

k

= 0. The client

replies with Enc

pk

(b

0

1

), . . . , Enc

pk

(b

0

`

).

4. Server: The server chooses s

r

←− {0, 1}

m

and constructs the permuted tree T

0

= π

s

(T ), where π

s

is the

permutation associated with the bit-string s (see Section 3.2). Initialize σ = 0

m

. For k ∈ [`], update

σ

i

k

= b

k

⊕b

0

k

. Let τ

s

be the permutation on the node indices of T effected by π

s

, and compute σ

0

← τ

s

(σ ⊕ s).

The server homomorphically computes Enc

pk

(σ

0

) (each bit is encrypted individually) and sends the result to

the client. This computation only requires additive homomorphism because the server knows the plaintext

values of b

k

and s

k

and has the encryptions of b

0

k

for all k ∈ [`].

5. Client and Server: The client decrypts the server’s message to obtain σ

0

and then computes the index i

of the leaf node containing the response (the client computes i ← φ(σ

0

), with φ(·) as defined in Section 2.2).

Next, it engages in a 1-out-of-(m + 1) OT with the server to learn a value ˜z. In the OT protocol, the client

supplies the index i and the server supplies the permuted leaf values z

0

0

, . . . , z

0

m

of T

0

. The client outputs ˜z

and the server outputs nothing.

Figure 1: Decision tree evaluation protocol with security against semi-honest adversaries.

The total number of cryptographic operations the client has to perform is thus O (t(n + `) + d).

For the server, evaluating the comparisons requires O(`t) public-key operations. After receiving

the comparison responses, the server constructs the decision string, which has length 2

d

− 1, so this

step requires O(2

d

) operations. The 1-out-of-2

d

OT at the end also requires O(2

d

) computation on

the server’s side, for a total complexity of O

`t + 2

d

.

4 Handling Malicious Adversaries

Next, we describe an extension of our proposed decision tree evaluation protocol that achieves

stronger security against malicious adversaries. Specifically, we describe a protocol that is fully

secure against a malicious client and private against a malicious server. This is the notion of one-

10

sided security (see [44, §2.6.2] for a more thorough discussion). To motivate the construction of the

extended protocol, we highlight two ways a malicious client might attack the protocol in Figure 1:

• In the first step of the protocol, a malicious client might send encryptions of plaintexts that

are not in {0, 1}. The server’s response could reveal information about the thresholds in the

decision tree.

• When the client engages in OT with the server, it can request an arbitrary index i

0

of its

choosing and learn the value z

i

0

of an arbitrary leaf node, independent of its query.

In the following sections, we develop tools that prevent these two particular attacks on the protocol.

In turn, the resulting protocol will provide security against malicious clients and privacy against

malicious servers.

4.1 Building Blocks

To protect against malicious adversaries, we leverage two additional cryptographic primitives:

proofs of knowledge and an adaptation of conditional OT. In this section, we give a brief sur-

vey of these methods.

Proofs of knowledge. At the beginning of Section 4, we noted that a malicious client can deviate

from the protocol and submit encryptions of non-binary values as its query. To protect against

this malicious behavior, we require that the client includes a zero-knowledge proof [38] to certify

that it is submitting encryptions of bits. In our experiments, we use the exponential variant of the

ElGamal encryption scheme [26, §2.5] for the additively homomorphic encryption scheme. In this

case, proving that a ciphertext encrypts a bit can be done using the Chaum-Pedersen protocol [24] in

conjunction with the OR proof transformation in [25, 45]. Moreover, we can apply the Fiat-Shamir

heuristic [33] to make these proofs non-interactive in the random oracle model.

To show security against a malicious client in our security model (Section 2.3), the ideal-world

simulator needs to be able to extract a malicious client’s input in order to submit it to the trusted

third party (ideal functionality). This is enabled using a zero-knowledge proof of knowledge. In our

exposition, we use the notation introduced in [21] to specify these proofs. We write statements of the

form PoK {(r) : c

1

= Enc

pk

(0; r) ∨ c

1

= Enc

pk

(1; r)} to denote a zero-knowledge proof-of-knowledge

of a value r where either c

1

= Enc

pk

(0; r) or c

2

= Enc

pk

(1; r). All values not enclosed in parenthesis

are assumed to be known to the verifier. We refer readers to [38, 8] for a more complete treatment

of these topics.

Conditional oblivious transfer. The second problem with the semi-honest protocol is that the

client can OT for the value of an arbitrary leaf independent of its query. To address this, we modify

the protocol so the client can only learn the value that corresponds to its query. We use a technique

similar to conditional oblivious transfer introduced in [27, 11]. Like OT, (strong) conditional OT is

a two-party protocol between a sender and a receiver. The receiver holds an input x and the sender

holds two secret keys κ

0

, κ

1

and an input y. At the conclusion of the protocol, the receiver learns

κ

1

if (x, y) satisfies a predicate Q, and κ

0

otherwise. For instance, a “less-than” predicate would

be Q(x, y) = 1 {x < y}. As in OT, the server learns nothing at the conclusion of the protocol.

Neither party learns Q(x, y). In Section 4.2, we describe how to modify the comparison protocol

from Figure 1 to obtain a conditional OT protocol for the less-than predicate.

11

4.2 Secure Decision Tree Evaluation

We now describe how we extend our decision tree evaluation protocol to protect against malicious

adversaries.

Modified comparison protocol. Recall from Section 3.2 that the basic comparison protocol

exploits the fact that x < y if and only if there exists some index i ∈ [t] where z

i

= x

i

− y

i

+

1 + 3

P

j<i

(x

j

⊕ y

j

) = 0. In the comparison protocol, the server homomorphically computes an

encryption of c

i

= r

i

z

i

for a random r

i

∈ Z

p

and the client decrypts each ciphertext to learn whether

z

i

= 0 for some i. Suppose the server wants to transmit a key κ

0

∈ Z

p

if and only if x < y. It can

do this by including an additional set of ciphertexts Enc

pk

(c

i

ρ

i

+ κ

0

) in addition to Enc

pk

(c

i

) for

each i ∈ [t], and where ρ

i

is a uniformly random blinding factor from Z

p

. Certainly, if all of the

c

i

6= 0, then ρ

i

c

i

is uniform and perfectly hides κ

0

. On the other hand, if for some i, c

i

= 0, then the

client is able to recover the secret key κ

0

. Thus, this gives a conditional key transfer protocol for

the less-than predicate: the client is able to learn the key κ

0

if its input x is less than the server’s

input y. Similarly, the server can construct another set of ciphertexts such that κ

1

is revealed if

x > y.

1

Finally, the same trick described in Section 3.2 can be used to ensure the client learns only

a share of the comparison bit (and correspondingly, the key associated with its share).

Decision tree evaluation. Our two-round decision tree evaluation protocol with security against

malicious adversaries is given in Figure 2. As in the semi-honest protocol, the client begins by

sending a bitwise encryption of its feature vector to the server. In addition to the ciphertexts, the

client also sends zero-knowledge proofs that each of its ciphertexts encrypts a single bit. Next,

the server randomizes the tree in the same manner as in the semi-honest protocol (Section 3.2).

Moreover, the server associates a randomly chosen key with each edge in the permuted tree. The

server blinds each leaf value using the keys along the path from the root to the leaf.

2

The server

sends the blinded response vector to the client. In addition, for each internal node in the decision

tree, the server prepares a response that allows the client to learn the key associated with the

comparison bit between the corresponding element in its feature vector and the threshold associated

with the node. Using these keys, the client unblinds the value (and only this value) at the index

corresponding to its query.

Remark 4.1. In the final step of the protocol in Figure 2, the client computes the index of the

leaf node based on the complete decision string b

0

it obtains by decrypting each ciphertext in the

server’s response. At the same time, we note that the path induced by b

0

in the decision tree can be

fully specified by just d = dlog

2

me bits in b

0

. This gives a way to reduce the client’s computation

in the decision tree evaluation protocol. Specifically, after the client receives the m messages from

the server in Step 3 of the protocol, it only computes the d bits in the decision string needed to

specify the path through the decision tree. To do so, the client first computes the decision value at

the root node to learn the first node in the path. It then iteratively computes the next node in the

1

We can assume without loss of generality that x 6= y in our protocol. Instead of comparing x with y, we compare

2x against 2y + 1. Observe that x ≤ y if and only if 2x < 2y + 1. Thus, it suffices to only consider when x > y and

x < y.

2

There is a small technicality here since the values are elements of {0, 1}

`

, while the keys are elements in G. However,

as long as |G| > 2

`

, we can obtain a (nearly) uniform key in {0, 1}

`

by hashing the group element using a pairwise

independent family of hash functions and invoking the leftover hash lemma [42].

12

Let (pk, sk) be a public-secret key-pair for an additively homomorphic encryption scheme over Z

p

. We assume

the client holds the secret key. Fix a precision t ≤ blog

2

pc.

• Client input: A feature vector x ∈ Z

n

p

where each x

i

is at most t bits. Let x

i,j

denote the j

th

bit of x

i

.

• Server input: A complete, binary decision tree T with decision nodes v

1

, . . . , v

m

. For all k ∈ [m], the

predicate f

k

associated with decision node v

k

is of the form f

k

(x) = 1 {x

i

k

≤ t

k

}, where i

k

∈ [n] is an index

and t

k

∈ Z

p

is a threshold. Let z

0

, . . . , z

m

∈ {0, 1}

`

be the values of the leaves of T .

1. Client: For each i ∈ [n] and j ∈ [t], the client chooses r

i,j

r

←− Z

p

, and constructs ciphertexts

ct

i,j

= Enc

pk

(x

i,j

; r

i,j

) and proofs π

i,j

= PoK {(r

i,j

) : ct

i,j

= Enc

pk

(0; r

i,j

) ∨ ct

i,j

= Enc

pk

(1; r

i,j

)}. It sends

{(ct

i,j

, π

i,j

)}

i∈[n],j∈[t]

to the server.

2. Server: Let

(

˜

ct

i,j

, ˜π

i,j

)

i∈[n],j∈[t]

be the ciphertexts and proofs the server receives from the client. For

i ∈ [n] and j ∈ [t], the server verifies the proof ˜π

i,j

. If ˜π

i,j

fails to verify, it aborts the protocol. Otherwise,

the server does the following:

(a) It chooses s

r

←− {0, 1}

m

and computes T

0

← π

s

(T ), where π

s

is the permutation associated with the

bit-string s (see Section 3.2). Let τ be the permutation effected by π

s

on the nodes of T . Let s

0

1

· · · s

0

m

=

τ (s

1

· · · s

m

). Similarly, define the permuted node indices i

0

1

, . . . , i

0

m

and thresholds t

0

1

, . . . , t

0

m

in T

0

.

(b) For each k ∈ [m], it chooses keys κ

k,0

, κ

k,1

r

←− Z

p

. Let γ

0

i

= 1 − 2 · s

0

i

. Then, for each k ∈ [m] and j ∈ [t],

it chooses blinding factors r

(0)

k,j

, r

(1)

k,j

, ρ

(0)

k,j

, ρ

(1)

k,j

r

←− Z

∗

p

, and defines

c

(0)

k,j

= r

(0)

k,j

"

˜x

i

0

k

,j

− t

0

k,j

− γ

0

k

+ 3

X

w<j

˜x

i

0

k

,w

⊕ t

0

k,w

#

c

(1)

k,j

= r

(1)

k,j

"

˜x

i

0

k

,j

− t

0

k,j

+ γ

0

k

+ 3

X

w<j

˜x

i

0

k

,w

⊕ t

0

k,w

#

.

Finally, it computes the following vectors of ciphertexts for each k ∈ [m]:

A

(0)

k

=

Enc

pk

(c

(0)

k,1

), . . . , Enc

pk

(c

(0)

k,t

)

B

(0)

k

=

Enc

pk

(c

(0)

k,1

ρ

(0)

k,1

+ κ

k,0

), . . . , Enc

pk

(c

(0)

k,t

ρ

(0)

k,t

+ κ

k,0

)

A

(1)

k

=

Enc

pk

(c

(1)

k,1

), . . . , Enc

pk

(c

(1)

k,t

)

B

(1)

k

=

Enc

pk

(c

(1)

k,1

ρ

(1)

k,1

+ κ

k,1

), . . . , Enc

pk

(c

(1)

k,t

ρ

(1)

k,t

+ κ

k,1

)

.

For each k ∈ [m], it randomly permutes the entries in A

(0)

k

and applies the same permutation to B

(0)

k

.

Similarly, it randomly permutes the entries in A

(1)

k

and applies the same permutation to B

(1)

k

. In the

above description, we write ˜x

i,j

to denote the value that ˜c

i,j

decrypts to under the client’s secret key sk.

While the server does not know ˜x

i,j

in the clear, it can still construct encryptions of each c

(0)

k,j

and c

(1)

k,j

by relying on additive homomorphism of the underlying encryption scheme (the xor can be evaluated

using the same procedure as in the semi-honest protocol of Figure 1).

(c) Let d = log

2

(m + 1) be the depth of T

0

. For each leaf node z

0

i

in T

0

, let b

1

· · · b

d

be the binary

representation of i, and let i

1

, . . . , i

d

be the indices of the nodes along the path from the root to the leaf

in T

0

. It computes ˆz

0

i

= z

0

i

⊕

L

j∈[d]

h(κ

i

j

,b

j

)

, where h : Z

p

→ {0, 1}

`

is a hash function (drawn from

a pairwise independent family).

(d) It sends the ciphertexts A

(0)

k

, A

(1)

k

, B

(0)

k

, B

(1)

k

for all k ∈ [m] and the blinded response vector [ˆz

0

0

, . . . , ˆz

0

m

]

to the client.

Figure 2: Decision tree evaluation protocol with security against malicious clients and privacy

against malicious servers. The protocol description continues on the next page.

13

3. Client: Let

˜

A

(0)

k

,

˜

A

(1)

k

,

˜

B

(0)

k

,

˜

B

(1)

k

(for k ∈ [m]) be the vectors of ciphertexts and [˜z

0

, . . . , ˜z

m

] be the blinded

response vector the client receives. For each k ∈ [m], the client decrypts each entry

˜

A

(0)

k,j

for j ∈ [t]. If for

some j ∈ [t],

˜

A

(0)

k,j

decrypts to 0 under sk, then it sets κ

k

= Dec

sk

(

˜

B

(0)

k,j

) and b

0

k

= 0. Otherwise, it decrypts

each entry

˜

A

(1)

k,j

for j ∈ [t]. If

˜

A

(1)

k,j

decrypts to 0 for some j ∈ [t], it sets κ

k

= Dec

sk

(

˜

B

(1)

k,j

) and b

0

k

= 1. If

neither condition holds, the client aborts the protocol. Finally, let i

1

, . . . , i

d

be the indices of the internal

nodes in the path induced by b

0

= b

0

1

· · · b

0

m

in a complete binary tree of depth d, and let i

∗

be the index of

the leaf node at the end of the path. The client computes and outputs ˜z = ˜z

i

∗

⊕

L

j∈[d]

h(κ

i

j

)

.

Figure 2 (Continued): Decision tree evaluation protocol with security against malicious clients and

privacy against malicious servers.

path by decrypting the set of ciphertexts associated with that node from the private comparison

protocol. The client’s computation is thus reduced to O(t · log

2

m).

Security. We now state the security theorem for the protocol in Figure 2. We give the formal

proof in Appendix B.

Theorem 4.1. The protocol in Figure 2 is a decision tree evaluation protocol with security against

malicious clients (Definition 2.1) and privacy against malicious servers (Definition 2.2).

Asymptotic analysis. We perform a similar analysis of the asymptotic performance for the

one-sided secure protocol as we did for the semi-honest secure protocol. If we apply the improve-

ment from Remark 4.1, the client’s computation requires O (t(n + d)) operations and the server’s

computation requires O

2

d

t

operations.

5 Extensions

In this section, we describe two extensions to our private decision tree evaluation protocol. First,

we describe a simple extension to support private evaluation of random forests (Section 2.2). Then,

we describe how to provide support for decision trees over feature spaces that contain categorical

variables.

Random forest evaluation. As mentioned in Section 2.2, a random forest classifier is an en-

semble classifier that aggregates the responses of multiple decision trees in order to obtain a more

robust response. Typically, response aggregation is done by taking a majority vote of the individual

decision tree outputs, or taking the average of the responses. A simple, but na¨ıve method for gener-

alizing our protocol to a random forest F = {T

i

}

i∈[n]

is to run the decision tree evaluation protocol

n times, once for each decision tree T

i

. At the end of the n protocol executions, the client learns

the values T

1

(x), . . . , T

n

(x), and can then compute the mean, majority, or some other function of

the individual decision tree outputs.

The problem is that this simple protocol reveals to the client the values T

i

(x) for all i ∈ [n].

In the case where the output of the random forest is the average (or any affine function) of the

individual classifications, we can do better by using additive secret sharing. Specifically, suppose

14

that the value of each leaf of T

i

(for all i) is an element of Z

p

. Then, at the beginning of the protocol,

the server chooses blinding values r

1

, . . . , r

n

r

←− Z

p

. For each tree T

i

, the server blinds each of its

leaf values v ∈ Z

p

by computing v ← v + r

i

. Since r

i

is uniform over Z

p

, v is now uniformly

random over Z

p

. The protocol execution proceeds as before, except that the server also sends

the client the value r ←

P

i∈[n]

r

i

. At the conclusion of the protocol, the client learns the values

{v

i

+ r

i

}

i∈[n]

where v

i

= T

i

(x). In order to compute the mean of v

1

, . . . , v

n

, the client computes

the sum

P

i∈[n]

(v

i

+ r

i

) − r =

P

i∈[n]

v

i

which is sufficient for computing the mean provided the

client knows the number of trees in the forest. We note that this protocol generalizes naturally

to evaluating any affine function of the individual responses with little additional overhead. The

leakage in the case of affine functions is the total number of comparisons, the depth of each decision

tree in the model (or a bound on the depth if all the trees are padded to the maximum depth), and

the total number of trees. No information about the response value of any single decision tree in

the forest is revealed.

Equality testing and categorical variables. In practice, feature vectors might contain cate-

gorical variables in addition to numeric variables. When branching on the value of a categorical

variable, the natural operation is testing for set membership. For instance, if x

i

is a categorical

variable that can take on values from a set S = {s

1

, . . . , s

n

}, then a branching criterion is more

naturally phrased in the form 1 {x

i

∈ S

0

} for some S

0

⊆ S. We leverage this observation to de-

velop a method for testing for set inclusion based on private equality testing when the number of

attributes is small. More precisely, to determine whether x

i

∈ S

0

, we test whether x = s for each

s ∈ S

0

.

We use the two-party equality testing protocol of [58]. Fix a group G with generator P and let

(pk, sk) be a key-pair for an additively homomorphic encryption scheme where the client holds the

secret key sk. Let Z

p

be the plaintext space for the encryption scheme. Let x, y ∈ Z

p

denote the

client and server’s input to the equality testing protocol, respectively. To test whether x = y, the

client sends Enc

pk

(x) to the server. The server then chooses a random r

r

←− Z

∗

p

and homomorphically

computes Enc

pk

(r(x − y)) and sends it to the client. The key observation is that r(x − y) is 0 if

x = y, and otherwise, is uniform in Z

p

.

We now extend the decision tree evaluation protocol to support categorical variables with up

to t classes, where t is the number of bits needed to encode a numeric component in the feature

vector. To evaluate a decision function of the form 1 {x

i

∈ S

0

}, where S

0

= {y

1

, . . . , y

m

}, the server

constructs the ciphertexts Enc

pk

(r

j

(x

i

− y

j

)) for each y

j

∈ S

0

as above and additional dummy

ciphertexts Enc

pk

(r

j+1

), . . . , Enc

pk

(r

t

), for r

j+1

, . . . , r

t

r

←− Z

∗

p

. The server sends these ciphertexts to

the client in random order. Clearly, x

i

∈ S

0

if and only if one of these ciphertexts is an encryption of

0. Moreover, this set of ciphertexts is computationally indistinguishable from the set of ciphertexts

the client would receive for a comparison node. Finally, in the decision tree evaluation protocol,

we require that the client learns only a share of the value of the decision variable. This is also

possible for set membership testing: depending on the value of the server’s share of the decision

variable, the server can test membership in S

0

or in its complement S

0

. Since

S

0

∪ S

0

≤ t, the

decision tree evaluation protocols can support these tests with almost no modification. The only

difference in the semi-honest setting is that the client encrypts categorical variables directly rather

than bitwise. In the one-sided secure setting, the client additionally needs to prove that it sent

an encryption of a valid categorical value. To facilitate this, we number the categories from 1 to

|S| ≤ n, where S is the set of all possible categories for a given variable. Then, in addition to

15

providing the encryption c ← Enc

pk

(x; r) of a value x ∈ {1, . . . , |S|}, the client also includes a proof

PoK {(x, r) : (c = Enc

pk

(x; r)) ∧ (1 ≤ x ≤ |S|)}. Since |S| is small, this can be done using the same

OR proof transformation of Chaum-Pedersen proofs.

6 Experimental Evaluation

We implemented the decision tree evaluation protocol secure against semi-honest adversaries (Fig-

ure 1) as well as the protocol with protection against malicious adversaries (Figure 2). In the latter

case, we also implement the optimization from Remark 4.1. Additionally, we implemented both the

extension for random forest evaluation and in the semi-honest setting, the extension for supporting

categorical variables from Section 5.

Our implementation is written in C++. For the additively homomorphic encryption scheme

in our protocols, we use the exponential variant of the ElGamal encryption scheme [26, §2.5]

(based on the DDH assumption [13]), and implement it using the MSR-ECC library [14, 15]. In

the semi-honest protocol, we instantiated the 1-out-of-n OT with the Naor-Pinkas OT [56], and

implemented it using the the OT library of Asharov et al. [4]. In the malicious setting, we use

Chaum-Pedersen [24] proofs to prove that the encryptions the client submits are encryptions of

bits. We apply the Fiat-Shamir heuristic [33] to make the proofs non-interactive in the random

oracle model. We instantiate the random oracle with SHA-256, and leverage the implementation

in OpenSSL. We use NTL [61] over GMP [40] for the finite field arithmetic needed for the Chaum-

Pedersen proofs. We compile our code using g++ 4.8.2 on a machine running Ubuntu 14.04.1. In

our experiments, we run the client-side code on a commodity laptop with a multicore 2.30 GHz

Intel Core i7-4712HQ CPU and 16 GB of RAM. We run the server on a compute-optimized Amazon

EC2 instance with a dual-core 2.60GHz Intel Xeon E5-2666 v3 processor and 3.75 GB of RAM. We

do not leverage parallelism in our benchmarks. The network speed in our experiments is around

40-50 Mbps.

We conduct all experiments at a 128-bit security level. For our implementation of exponential

ElGamal, we use the 256-bit elliptic curve numsp256d1. We instantiate the OT scheme at the

128-bit security level using the parameters in [4]. For our first set of benchmarks, we compare our

performance against the protocols in [17, 7] on the ECG classification tree from [7] and the Nursery

dataset from the UCI Machine Learning Repository [6]. Since the decision tree used in [17] for the

Nursery dataset is not precisely specified, we test our protocol against a tree with the same depth

and number of comparison nodes as in [17]. In our benchmarks we measure the computation and

total communication between the client and the server. We also perform some heuristic analysis

to estimate the communication needed if we were to use a generic two-party secure computation

protocol based on Yao’s garbled circuits [63, 53] for private decision tree evaluation. We describe

this analysis in greater detail at the end of this section.

Our results are summarized in Table 1. The numbers we report for the performance of [17, 7]

are taken from [17, Table 4]. While our test environment is not identical to that in [17], it is similar:

Bost et al. conduct their experiments on a machine with a 2.66 GHz Intel Core i7 processor with 8

GB of RAM. Our results show that despite running at a higher security level (128 bits vs. 80 bits),

our protocol is over 19x faster for the client and over 5x faster for the server compared to the

protocols in [17] based on somewhat homomorphic encryption (SWHE). Moreover, our protocol is

more than 25x more efficient in terms of communication. Compared to the protocol in [7] based

on homomorphic encryption and garbled circuits, of Barni et al. [7], our protocol is almost 20x

16

Dataset n d m Method

Security End-to-End Computation (s)

Bandwidth (KB)

Level Time (s) Client Server

ECG 6 4 6

Barni et al. [7] 80 - 2.609 6.260 112.2

Bost et al. [17] 80 - 2.297 1.723 3555.0

Generic 2PC

†

128 - - - ≥ 180.5

Our protocol 128 0.344 0.136 0.162 101.9

Nursery 8 4 4

Bost et al. 80 - 1.579 0.798 2639.0

Generic 2PC

†

128 - - - ≥ 240.5

Our protocol 128 0.269 0.113 0.126 101.7

†

Estimated bandwidth from directly applying Yao’s protocol [63, 53] for private decision

tree evaluation. Note that the estimated numbers are only a lower bound, since we only

estimate the size of the garbled circuit, and not the OTs. See the discussion at end of

Section 6 for more information on these estimates.

Table 1: Performance of protocols for semi-honest secure decision tree evaluation. The decision

trees have m decision nodes and depth d. The feature vectors are n-dimensional. The “End-to-End

Time” column gives the total time for the protocol execution, as measured by the client (including

network communication). Performance numbers for the Barni et al. and Bost et al. methods are

taken from [17], which use a similar evaluation environment.

faster for both the client and the server, and requires slightly less communication. For these small

trees, our protocols also require less communication compared to generic two-party computation

protocols.

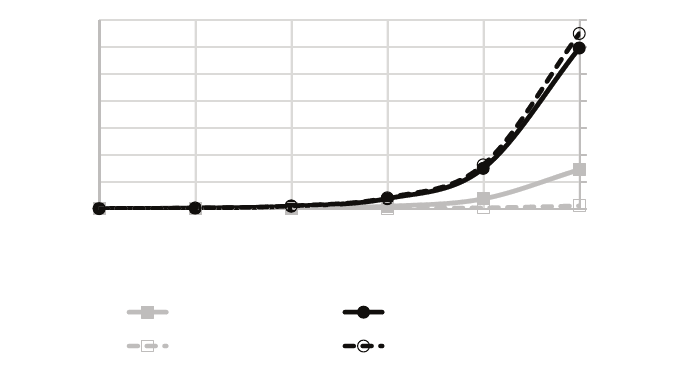

Scalability and sparsity. To understand the scalability of our protocols on large decision trees,

we perform a series of experiments on synthetic decision trees with different depths and densities.

We first consider complete decision trees, which serve as a “worst-case” bound on the protocol’s

performance for trees of a certain depth. In our experiments, we fix the dimension of the feature

space to n = 16 and the precision to t = 64. We measure both the computation time and the total

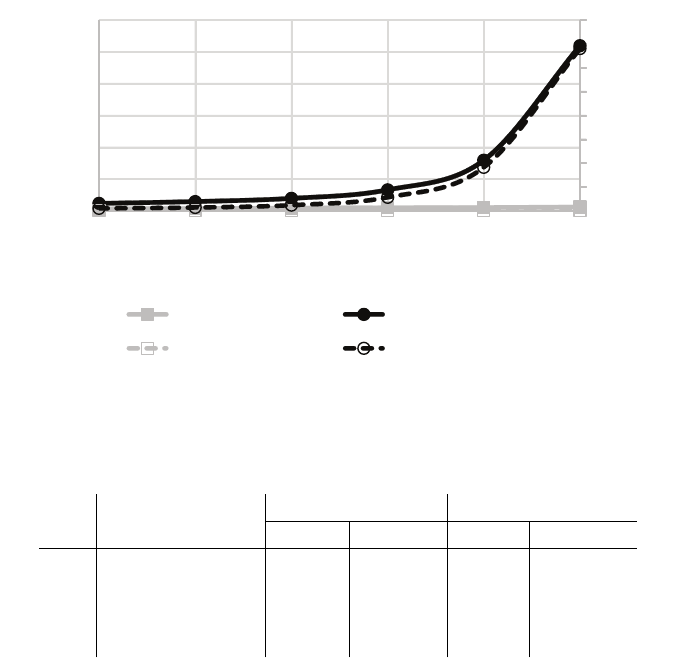

communication between the client and server. Our results are shown in Figure 3. We note that

even for large trees with over ten thousand decision nodes, our protocol still operates on the order

of minutes.

We also compare our protocol against the private decision tree evaluation protocol of Brickell et

al. [20]. Their protocol can evaluate a 1100 node tree in around 5 minutes and 25 MB of communi-

cation. On a similarly sized tree over an equally large feature space, our protocol completes in 30

seconds and requires about 10 MB of communication, representing a 10x and a 2.5x improvement

in computation and bandwidth, respectively.

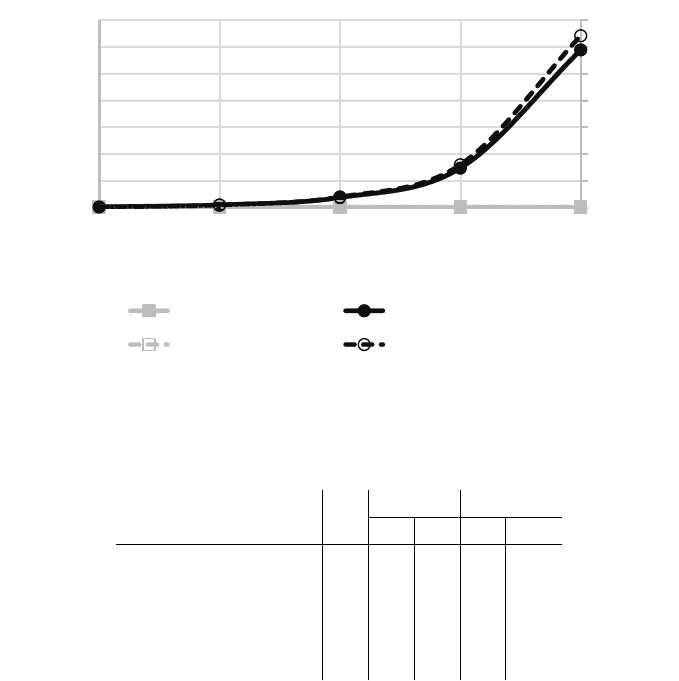

We also perform a set of experiments on “sparse” trees where the number m of decision nodes is

linear in the depth d of the tree. Here, we set m = 25d. We present the results of these experiments

in Figure 4. The important observation here is that the client’s computation now grows linearly,

rather than exponentially in the depth of the tree. Unfortunately, because the server computes a

decision string for the complete tree, the server’s computation increases exponentially in the depth.

However, since the cost of the homomorphic operations in the comparison protocol is greater than

the cost of computing the decision string, the protocol still scales to deeper trees and maintains

17

0

20

40

60

80

100

120

140

0

50

100

150

200

250

300

350

4 6 8 10 12 14

Bandwidth (MB)

Computaon Time (s)

Depth of Decision Tree

Client Computaon Server Computaon

Client Upload Server Upload

Figure 3: Client and server computation (excluding network communication) and total bandwidth

for semi-honest protocol on complete decision trees.

runtimes on the order of minutes. The limiting factor in this case is the exponential growth in

the size of the server’s response. Even though the tree is sparse, because the decision string

communicated by the server encodes information about every node in the padded tree, the amount

of communication from the server to the client is mostly unchanged. The communication upstream

from the client to the server, though, is significantly reduced (linear rather than exponential in the

depth).

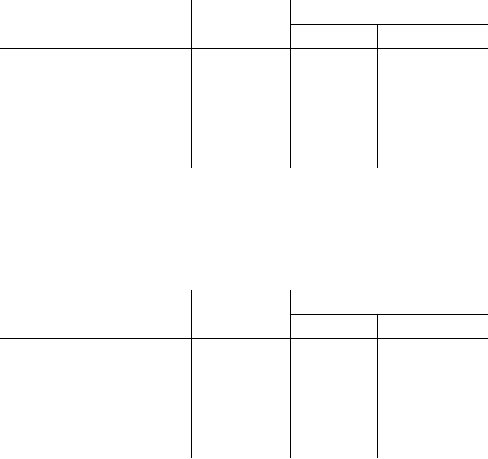

Handling malicious adversaries. Next, we consider the performance of the protocol from Fig-

ure 2 that provides protection against malicious adversaries. Since this protocol does not distinguish

between dummy and non-dummy nodes, the performance is independent of the number of actual

decision nodes in the tree. Thus, we only consider the benchmark for complete trees. Again, we

fix the dimension n = 16 and the precision t = 64. The results are shown in Figure 5. Here,

the client’s computation grows linearly in the depth of the tree, and thus, is virtually constant in

these experiments. In all experiments in Figure 5, the total client-side computation is under half

a second. Moreover, the amount of communication from the client to the server depends only on

n, t, and is independent of the size of the model. Thus, the client’s computation is very small. This

means that the protocol is suitable for scenarios where the client’s computational power is limited.

The trade-off is that the server now performs more work. Nonetheless, even for trees of depth 12

(with more than 4000 decision nodes), the protocol completes in a few minutes. It is also worth

noting that this protocol is almost non-interactive (i.e., the client does not have to remain online

during the server’s computation).

As a final note, we also benchmarked the protocol in Figure 2 on the ECG and Nursery datasets.

On the ECG dataset, the client’s and server’s computation took 0.191 s and 0.948 s, respectively,

with a total communication of 660 KB. On the Nursery dataset, the client’s and server’s compu-

tation took 0.216 s and 0.937 s, respectively, with a total communication of 720 KB. Even in spite

of the higher security level and the stronger security guarantees, our protocol remains 2x faster

18

0

20

40

60

80

100

120

140

160

0

20

40

60

80

100

120

10 12 14 16 18 20

Bandwidth (MB)

Computaon Time (s)

Depth of Decision Tree

Client Computaon Server Computaon

Client Upload

Server Upload

Figure 4: Client and server computation (excluding network communication) and total bandwidth

for semi-honest protocol on “sparse” trees.

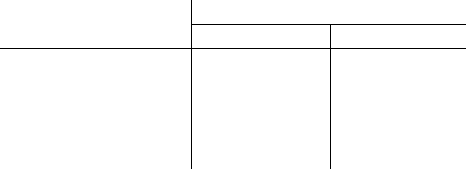

k End-to-End (s)

Computation (s) Bandwidth (MB)

Client Server Client Server

10 26.161 4.652 19.069 0.247 9.106

25 65.067 11.343 47.756 0.430 22.761

50 130.496 22.252 95.133 0.736 45.520

100 256.362 44.759 190.196 1.346 91.037

Table 2: Semi-honest random forest evaluation benchmarks. Each forest consists of k trees (each

tree has depth at most 10 and exactly 100 comparisons). The “End-to-End” measurements include

the time for network communication.

than that of [17] in total computation time and requires 3.5x less communication. Thus, even the

protocol secure against malicious adversaries is practical, and for the reasons mentioned above (low

client overhead and only two rounds of interaction), might, in some cases, be more suitable than

the semi-honest secure protocol.

Random forests. As described in Section 5, we generalize our decision tree evaluation protocol

to support random forests with an affine aggregation function with almost no additional overhead

than the cost of evaluating each decision tree privately. The computational and communication

complexity of the random forest evaluation protocol is just the complexity of the decision tree eval-

uation protocol scaled up by the number of trees in the forest. We give some example performance

numbers for evaluating a random forest with different number of trees. Each tree in the forest

has depth at most 10 and contains exactly 100 comparisons. As before, we take n = 16 for the

dimension and t = 64 for the precision. Our results are summarized in Table 2.

19

0

20

40

60

80

100

120

140

0

50

100

150

200

250

300

350

4 6 8 10 12

Bandwidth (MB)

Computaon Time (s)

Depth of Decision Tree

Client Computaon Server Computaon

Client Upload Server Upload

Figure 5: Client and server computation (excluding network communication) and total bandwidth

for one-sided secure protocol for decision tree evaluation.

Dataset n

Tree Forest

d m d m

breast-cancer 9 8 12 11 276

heart-disease 13 3 5 8 178

housing 13 13 92 12 384

credit-screening 15 4 5 9 349

spambase 57 17 58 16 603

Table 3: Parameters for decision trees and random forests trained on UCI datasets [6]: n is the

dimension of the data, m is the number of decision nodes in the model, d is the depth of the tree(s)

in the model.

Performance on real datasets. We conclude our analysis by describing experiments on decision

trees and random forests trained on real datasets. We benchmark our protocol on five datasets

from the UCI repository [6] spanning application domains such as breast cancer diagnosis and

credit rating classification. We train our trees using standard Matlab tools (classregtree and

TreeBagger). To obtain more robust models, we introduce a hyperparameter α ≥ 1 that specifies