MAP® Growth™ Technical Report

March 2019

© 2019 NWEA.

NWEA, MAP, and Measures of Academic Progress are registered trademarks, and MAP Skills,

MAP Growth, and MAP Reading Fluency are trademarks, of NWEA in the U.S. and in other

countries. All rights reserved. No part of this document may be modified or further distributed

without written permission from NWEA.

The names of other companies and their products mentioned are the trademarks of their

respective owners.

Suggested citation: NWEA. (2019). MAP® Growth™ technical report. Portland, OR: Author.

2019 MAP® Growth™ Technical Report Page i

Table of Contents

Executive Summary ....................................................................................................................1

Chapter 1: Introduction ................................................................................................................3

1.1. MAP Growth Overview ..................................................................................................3

1.2. Background ...................................................................................................................5

1.3. Rationale.......................................................................................................................6

1.3.1. Accurate Measurement .....................................................................................6

1.3.2. Content Standards Match ..................................................................................7

1.4. Intended Uses of Test Scores .......................................................................................7

Chapter 2: Test Design ...............................................................................................................8

2.1. Design Principles ..........................................................................................................8

2.1.1. Six Guiding Principles .......................................................................................8

2.1.2. Universal Design ...............................................................................................8

2.2. Types of MAP Growth Assessments .............................................................................9

2.2.1. MAP Growth K–2.............................................................................................10

2.2.2. MAP Growth 2–12 ...........................................................................................11

2.3. Content Design Rationale ...........................................................................................11

2.3.1. Reading and Language Usage ........................................................................11

2.3.2. Mathematics ....................................................................................................12

2.3.3. Science ...........................................................................................................12

2.4. MAP Growth Transition ...............................................................................................12

2.5. Instructional Areas and Sub-areas ..............................................................................13

2.6. Learning Statements ...................................................................................................18

2.7. Item Alignment to Standards .......................................................................................18

2.7.1. Alignment Studies ...........................................................................................18

2.7.2. Alignment Guidelines ......................................................................................18

2.8. Test Construction ........................................................................................................22

2.9. Test Content Validation ...............................................................................................22

Chapter 3: Item Development ...................................................................................................24

3.1. Item Types ..................................................................................................................24

3.2. Item Development Resources .....................................................................................30

3.2.1. Item Specifications ..........................................................................................30

3.2.2. Cognitive Complexity .......................................................................................30

3.3. Item Writing .................................................................................................................31

3.3.1. Freelance Recruitment and Selection ..............................................................31

3.3.2. Media ..............................................................................................................31

3.3.3. Metadata .........................................................................................................31

3.4. Item Review ................................................................................................................32

3.4.1. Copyright and Permissions Review .................................................................33

3.4.2. Content Validation ...........................................................................................34

3.4.3. Item Owner Review .........................................................................................34

3.4.4. Content Confirmation Review ..........................................................................36

3.4.5. Item Quality Review ........................................................................................36

3.4.6. Bias, Sensitivity, and Fairness .........................................................................36

3.5. Reading Passage Development ..................................................................................37


3.5.1. Passage Writer Recruitment and Selection .....................................................39

3.5.2. Passage Acquisition and Review Process .......................................................39

3.6. Text Readability ..........................................................................................................40

3.7. Field Testing ...............................................................................................................40

3.8. Statistical Summary of the Item Pools .........................................................................41

Chapter 4: Test Administration and Security .............................................................................45

4.1. Adaptive Testing .........................................................................................................45

4.2. Test Engagement Functionality ...................................................................................46

4.3. User Roles and Responsibilities ..................................................................................46

4.4. Administration Training ...............................................................................................47

4.5. Practice Tests .............................................................................................................47

4.6. Accessibility and Accommodations .............................................................................48

4.6.1. Universal Features ..........................................................................................48

4.6.2. Designated Features .......................................................................................49

4.6.3. Accommodations .............................................................................................49

4.6.4. Third-Party Assistive Software.........................................................................50

4.7. Test Security ...............................................................................................................51

4.7.1. Assessment Security .......................................................................................52

4.7.2. Role-Based Access .........................................................................................52

Chapter 5: Test Scoring and Item Calibration ............................................................................53

5.1. Rasch Unit (RIT) Scales ..............................................................................................53

5.2. Calculation of RIT Scores ...........................................................................................54

5.3. 2015 MAP Growth Norms ...........................................................................................54

5.3.1. Norm Reference Groups .................................................................................55

5.3.2. Variation in Testing Schedules and Instructional Time ....................................55

5.3.3. Estimating the 2015 MAP Growth Norms ........................................................55

5.3.4. Achievement Status and Growth Norms ..........................................................56

5.3.5. Measuring Growth ...........................................................................................56

5.3.6. Norms Example ...............................................................................................57

5.4. RIT Score Descriptive Statistics ..................................................................................58

5.4.1. Overall Descriptive Statistics ...........................................................................58

5.4.2. Descriptive Statistics by Instructional Area ......................................................60

5.5. Item Calibration ...........................................................................................................63

5.6. Field Test Item Evaluation ...........................................................................................64

5.6.1. Item Fit ............................................................................................................64

5.6.2. Model of Man (MoM) Procedure ......................................................................66

5.6.3. Human Review Process ..................................................................................67

5.7. Item Parameter Drift ....................................................................................................67

Chapter 6: Reporting .................................................................................................................68

6.1. MAP Growth Reports ..................................................................................................68

6.1.1. Student-Level Reports .....................................................................................70

6.1.2. Class-Level Reports ........................................................................................73

6.1.3. District-Level Reports ......................................................................................76

6.1.4. Learning Continuum ........................................................................................79

6.2. Quality Assurance .......................................................................................................80

Chapter 7: Reliability .................................................................................................................82


7.1. Test-Retest Reliability .................................................................................................82

7.2. Marginal Reliability (Internal Consistency) ...................................................................84

7.3. Score Precision ...........................................................................................................88

Chapter 8: Validity .....................................................................................................................93

8.1. Evidence Based on Test Content ................................................................................93

8.2. Evidence Based on Relations to Other Variables ........................................................93

8.2.1. Concurrent Validity ..........................................................................................94

8.2.2. Classification Accuracy of Predicting State Achievement Levels .....................94

8.3. Evidence Based on Internal Structure .........................................................................95

8.3.1. Test-taking Engagement .................................................................................95

8.3.2. Differential Item Functioning (DIF) ...................................................................96

References ............................................................................................................................. 100

Appendix A: Student Sample by State and Demographics ...................................................... 104

Appendix B: Average RIT Scores by State .............................................................................. 110

Appendix C: Test-Retest Reliability by State ........................................................................... 121

Appendix D: Marginal Reliability by State ................................................................................ 156

Appendix E: Concurrent Validity by State ................................................................................ 176

Appendix F: Classification Accuracy by State.......................................................................... 181

List of Tables

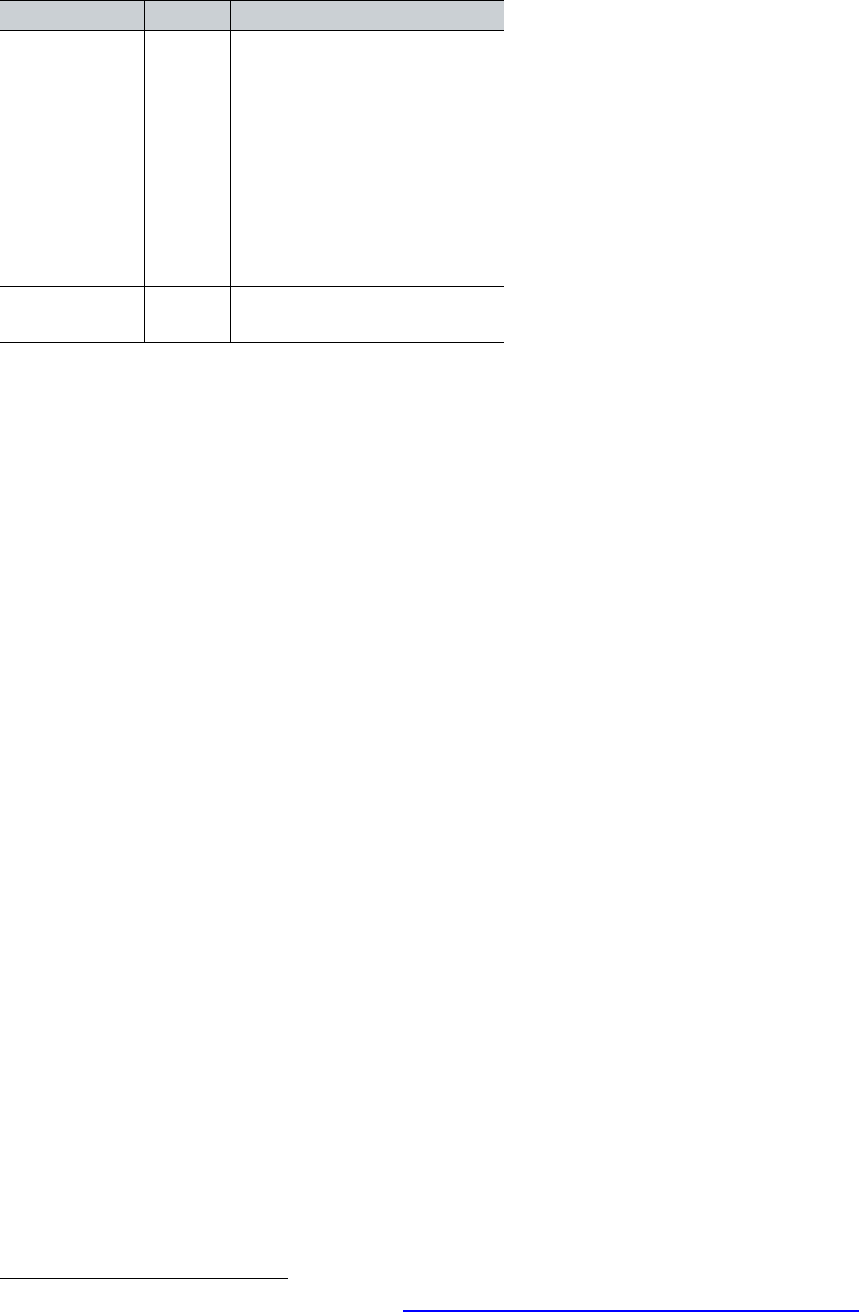

Table 1.1. MAP Growth Assessed Grades by Content Area ........................................................3

Table 2.1. Universal Design Principles ........................................................................................9

Table 2.2. MAP Growth Assessments .........................................................................................9

Table 2.3. Instructional Area Chart for use with CCSS—Reading K–2 ......................................13

Table 2.4. Instructional Area Chart for use with CCSS—Reading 2–5 and 6+ ...........................14

Table 2.5. Instructional Area Chart for use with CCSS—Language Usage 2–12 .......................14

Table 2.6. Instructional Area Chart for use with CCSS—Mathematics K–2 and 2–5..................15

Table 2.7. Instructional Area Chart for use with CCSS—Mathematics 6+ .................................15

Table 2.8. Instructional Area Chart for use with CCSS—High School Mathematics ..................15

Table 2.9. Instructional Area Chart for use with NGSS—Science 2–12 .....................................17

Table 2.10. Alignment Guidelines for MAP Growth ...................................................................19

Table 3.1. Item Types ...............................................................................................................25

Table 3.2. Item Review Checklist ..............................................................................................35

Table 3.3. Common Stimulus Passage Word Count Guidelines ................................................38

Table 3.4. Quantitative and Qualitative Analyses ......................................................................40

Table 3.5. MAP Growth Content Structure for use with CCSS and NGSS .................................41

Table 4.1. User Roles in the MAP Growth System ....................................................................46

Table 4.2. Available Universal Features ....................................................................................48

Table 4.3. Available Designated Features .................................................................................49

Table 4.4. Available Accommodations ......................................................................................50

Table 4.5. Third-Party Assistive Software ..................................................................................50

Table 4.6. Test Security Before and During Testing ..................................................................52

Table 5.1. Evaluation of Growth for a Sample of Grade 4 Students in MAP Growth Reading ....57


Table 5.2. Overall Descriptive Statistics of RIT Scores ..............................................................59

Table 5.3. RIT Score Descriptive Statistics by Instructional Area—Reading K–2 ......................61

Table 5.4. RIT Score Descriptive Statistics by Instructional Area—Reading 2–12 .....................61

Table 5.5. RIT Score Descriptive Statistics by Instructional Area—Language Usage 2–12 .......62

Table 5.6. RIT Score Descriptive Statistics by Instructional Area—Mathematics K–2 ................62

Table 5.7. RIT Score Descriptive Statistics by Instructional Area—Mathematics 2–12 ..............62

Table 5.8. RIT Score Descriptive Statistics by Instructional Area—Science 2–12 .....................63

Table 5.9. Fit Index Descriptions and Criteria ............................................................................65

Table 6.1. Required Roles for Report Access............................................................................68

Table 6.2. Report Summary ......................................................................................................68

Table 6.3. Ensuring Software Integrity ......................................................................................81

Table 7.1. Test-Retest with Alternate Forms Reliability by Grade ..............................................83

Table 7.2. Marginal Reliability by Grade ....................................................................................85

Table 7.3. Marginal Reliability by Instructional Area and Grade—Reading K–2.........................86

Table 7.4. Marginal Reliability by Instructional Area and Grade—Reading 2–12 .......................87

Table 7.5. Marginal Reliability by Instructional Area and Grade—Language Usage 2–12 .........87

Table 7.6. Marginal Reliability by Instructional Area and Grade—Mathematics K–2 ..................87

Table 7.7. Marginal Reliability by Instructional Area and Grade—Mathematics 2–12 ................88

Table 7.8. Marginal Reliability by Instructional Area and Grade—Science 3–12 ........................88

Table 8.1. Average Concurrent Validity (r) and Classification Accuracy (p) ...............................93

Table 8.2. Summary of Classification Accuracy Statistics..........................................................95

Table 8.3. DIF Categories .........................................................................................................97

Table 8.4. Number of Students and Items Included in the Fall 2016 to Fall 2017 DIF Analysis .98

Table 8.5. DIF Results for Gender and Ethnicity .......................................................................98

List of Figures

Figure 1.1. Tracking Growth ........................................................................................................4

Figure 3.1. Item Development Flowchart ...................................................................................24

Figure 3.2. Sample Item—Multiple-Choice (Mathematics) .........................................................25

Figure 3.3. Sample Item—Multiple Select/Multiselect (Reading) ...............................................26

Figure 3.4. Sample Item—Selectable Text (Language Usage) ..................................................26

Figure 3.5. Sample Item—Selectable Text (Mathematics) .........................................................26

Figure 3.6. Sample Item—Drag-and-Drop (Language Usage) ...................................................27

Figure 3.7. Sample Item—Click-and-Pop (Mathematics) ...........................................................27

Figure 3.8. Sample Item—Text Entry (Mathematics) .................................................................27

Figure 3.9. Sample Item—Item Set, Multiple-Choice (Reading).................................................28

Figure 3.10. Sample Item—Item Set, Multiple Select/Multiselect (Reading) ..............................28

Figure 3.11. Sample Item—Composite Item (Reading) .............................................................29

Figure 3.12. Sample Item—Composite Item (Science) ..............................................................29

Figure 5.1. Fall-to-Winter CGP for a Sample of Schools in MAP Growth Reading Grade 4 .......58

Figure 5.2. Mathematics Item with Poor Model Fit .....................................................................66

Figure 5.3. Reading Item with Good Model Fit ..........................................................................66

Figure 6.1. Student Profile Report .............................................................................................71

Figure 6.2. Student Progress Report .........................................................................................72


Figure 6.3. Student Goal Setting Worksheet .............................................................................73

Figure 6.4. Class Report ...........................................................................................................74

Figure 6.5. Achievement Status and Growth (ASG) Report .......................................................75

Figure 6.6. Class Breakdown by Projected Proficiency Report ..................................................76

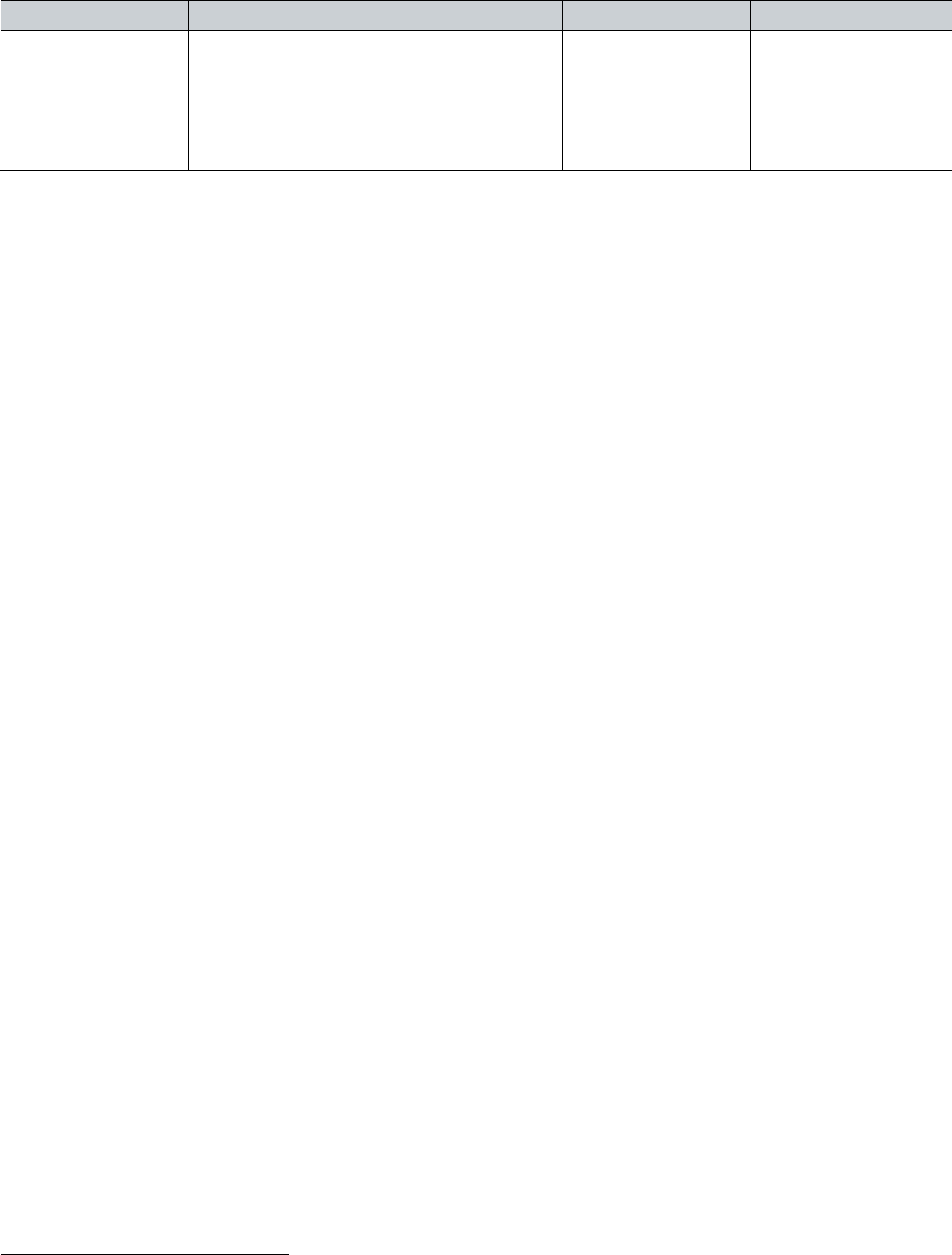

Figure 6.7. District Summary Report .........................................................................................77

Figure 6.8. Student Growth Summary Report............................................................................77

Figure 6.9. Projected Proficiency Summary Report ...................................................................78

Figure 6.10. Grade Report ........................................................................................................78

Figure 6.11. Grade Breakdown Report ......................................................................................79

Figure 6.12. Learning Continuum Class View............................................................................80

Figure 7.1. Mean SEM of RIT Scores, Fall 2016 – Fall 2017—Reading ....................................89

Figure 7.2. Mean SEM of RIT Scores, Fall 2016 – Fall 2017—Language Usage ......................90

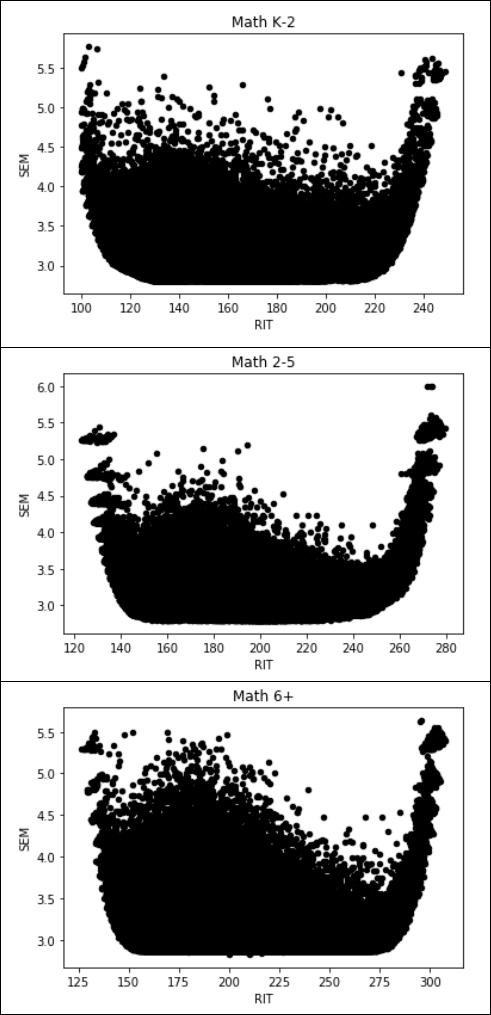

Figure 7.3. Mean SEM of RIT Scores, Fall 2016 – Fall 2017—Mathematics .............................91

Figure 7.4. Mean SEM of RIT Scores, Fall 2016 – Fall 2017—Science .....................................92


List of Abbreviations

Below is a list of abbreviations that appear in this technical report.

ALT .................. Achievement Level Test (paper-pencil precursor to MAP Growth)

AOR ................. Aspects of Rigor

ASG ................. Achievement Status and Growth

CCSS ............... Common Core State Standards

CCSSO ............ Council of Chief State School Officers

CGI ................... conditional growth index

CGP ................. conditional growth percentile

DIF ................... differential item functioning

DOK ................. Depth of Knowledge

ELA .................. English Language Arts

ELL ................... English language learner

ETS .................. Educational Testing Service

GRD ................. Growth Research Database

HLM ................. hierarchical linear model

IEP ................... Individualized Education Program

IRT ................... item response theory

MAP ................. Measures of Academic Progress® (now MAP Growth)

MH ................... Mantel-Haenszel

MLE .................. maximum likelihood estimation

MoM ................. Model of Man

MPG ................. MAP for Primary Grades (now MAP Growth K–2)

MSE ................. mean square error

NCRTI .............. National Center on Response to Intervention

NGSS ............... Next Generation Science Standards

PARCC ............. Partnership for Assessment of Readiness for College and Careers

RIT ................... Rasch Unit

RMSE ............... root mean square error

RTI ................... response to intervention

SBAC ............... Smarter Balanced Assessment Consortium

SCI ................... School Challenge Index

SD .................... standard deviation

SEM ................. standard error of measurement

TEI ................... technology-enhanced item

TTS .................. text-to-speech

UDL .................. Universal Design for Learning

Acknowledgements

It is with great appreciation that we recognize the many people at NWEA who contributed to this

technical report. It was a collaborative effort involving people from numerous departments in the

organization. We give special thanks to those who conducted the analyses and wrote and

edited the document, including Emily Bo, Jing Chen, Laurence Dupray, Garron Gianopulos,

Kelly Larson, Sylvia Li, Patrick Meyer, Mary Resanovich, Adam Withycombe, and countless

others whose expertise and knowledge about MAP Growth were crucial.


Executive Summary

This technical report is written for measurement professionals and administrators to help

evaluate the quality of the MAP® Growth™ assessments. Principal information presented in

each chapter is summarized below. This report is not intended to be an administration guide for

the tests or a technical description of the hardware and software needed for use of the system.

For additional information not covered in this technical report, please contact your local NWEA®

representative or consult the NWEA website at www.nwea.org.

Chapter 1: Introduction

This chapter summarizes MAP Growth and describes the background and rationale behind the

development of the assessments. MAP Growth assessments are interim adaptive tests that

measure a student’s academic achievement and growth. Scores are reported on the Rasch Unit

(RIT) scale and can be used to track growth and predict performance on state summative

assessments. The rationale behind the MAP Growth development has two primary aspects: the

need for accurate measurement for all students and the need to provide schools with tests that

align to their academic standards. As of February 2018, NWEA has partnered with more than

9,700 education organizations worldwide and has reached approximately 11 million students.

Chapter 2: Test Design

This chapter summarizes the different types of MAP Growth assessments and the rationale

behind their designs. The assessments are structured by content area, instructional area, and

sub-area. Items are carefully aligned to the standards and assigned learning statements. When

new tests are constructed or updated, they are first validated to ensure that each newly aligned

MAP Growth item pool performs as intended and that the assessments can withstand multiple

administrations per year. Tests are classified as pass, pass with qualifiers, or fail. Most tests

pass or receive a qualified pass.

Chapter 3: Item Development

This chapter describes the MAP Growth item types and the item development and review

processes, including the MAP Growth Reading passage development process. MAP Growth

assessments draw from an item bank containing more than 42,000 items that are carefully

aligned to standards and assigned learning statements. All newly developed items are field

tested, and items that meet psychometric quality criteria are added to the item bank. Item

development and field testing for MAP Growth assessments occur continually to enhance and

deepen the item pool.

Chapter 4: Test Administration and Security

This chapter describes the test administration and test security processes. MAP Growth

assessments are untimed and can be administered up to four times a year (fall, winter, and

spring, with a fourth optional administration in summer). Access to the MAP Growth system is

based on differentiated roles such as system administrator and proctor. Administration training

is provided as part of the NWEA professional learning services, and practice tests are available

that provide the same access and functionality as the real MAP Growth tests. MAP Growth

assessments have several features to improve test fairness and provide more precise and valid

measurement, including universal features such as a calculator and highlighter, designated

features such as text-to-speech (TTS), and accommodations such as assistive technology. Test

security is maintained in a variety of ways, including with large item pools, adaptive testing

advantages, a lockdown browser, data encryption, and role-based access.


Chapter 5: Test Scoring and Item Calibration

This chapter describes the development of the RIT scale, the calculation of RIT scores, item

calibration, evaluation of field test items, and item parameter drift. It also provides RIT score

descriptive statistics, including the mean, standard deviation, and the minimum and maximum

RIT scores. The RIT scale is a vertical scale based on the Rasch item response theory (IRT)

model. During testing, each item is selected to yield maximum information about the student’s

ability. Individual tests are constructed based on the student’s performance while responding to

items constrained in content to a set of standards. A student’s final ability estimate indicates the

student’s location on the RIT scale and is reported as a RIT score from 100 to 350. Each

content area has its own scale. Scores also include percentile ranks based on the 2015

MAP Growth norms (Thum & Hauser, 2015) to compare students’ achievement status and

growth to those of their peers. Field test items are administered in fixed positions during an operational

test. Responses are continuously collected on field test items until the items successfully pass

calibration and can be administered operationally. Good item parameter estimates are critical to

the validity of a test based on IRT, so field test items are checked for model fit via item fit

statistics, the Model of Man (MoM) procedure, and human reviews. Finally, periodic reviews of

item performance are conducted based on item parameter drift to ensure scale stability across

time and student subgroups. Thus far, results have shown that a large majority of MAP Growth

items are stable over time and have little to no drift.
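
The selection rule summarized above (each item is chosen to yield maximum information about the student's ability) can be illustrated with a minimal sketch. It assumes the standard dichotomous Rasch model on the logit scale and ignores the content constraints the report mentions; the function names, the item pool, and the provisional ability value are hypothetical and are not taken from the MAP Growth system.

```python
import math


def rasch_probability(theta, b):
    """Probability of a correct response under the Rasch model.

    theta: student ability in logits; b: item difficulty in logits.
    """
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def item_information(theta, b):
    """Fisher information of a Rasch item at ability theta: P * (1 - P)."""
    p = rasch_probability(theta, b)
    return p * (1.0 - p)


def select_next_item(theta, difficulties, administered):
    """Return the index of the unused item with maximum information at theta."""
    candidates = [i for i in range(len(difficulties)) if i not in administered]
    if not candidates:
        raise ValueError("no items left in the pool")
    return max(candidates, key=lambda i: item_information(theta, difficulties[i]))


# Hypothetical pool of item difficulties (logits) and a provisional ability of 0.0.
pool = [-1.5, -0.4, 0.1, 0.9, 2.0]
next_item = select_next_item(0.0, pool, administered={2})
print(next_item)  # 1: among unused items, difficulty -0.4 is closest to theta
```

Under the Rasch model, information peaks where item difficulty equals ability, so this rule amounts to picking the unused item whose difficulty is closest to the current ability estimate.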

Chapter 6: Reporting

This chapter summarizes the MAP Growth reports that are available at the student, class, and

district levels. Report types include the Student Profile, Student Progress, Achievement Status

and Growth (ASG), Class Breakdown by RIT, District Summary, and Skills Checklists and

Screening reports. The learning continuum shows the content a student can encounter

throughout the test by instructional area, standards, and RIT bands. This report can be used to

show what students performing at a given RIT level on MAP Growth assessments have

achieved and what they are typically ready to learn. It has two views: the class view and test

view. The reporting software undergoes routine quality assurance processes.

Chapter 7: Reliability

This chapter summarizes the reliability evidence provided for MAP Growth. Reliability refers to

the consistency of achievement estimates obtained from the assessment. The reliability of the

MAP Growth assessments was examined via test-retest reliability, marginal reliability (internal

consistency), and score precision based on the standard error of measurement (SEM). Test-retest

results indicate that MAP Growth scores are highly consistent for students at

different grade levels and from different states. The overall marginal reliabilities for all grades

and content areas are in the .90s, which suggests that MAP Growth tests have high internal

consistency. Regarding score precision, the MAP Growth adaptive test algorithm selects the

best items for each student, producing a significantly lower SEM than fixed-form tests.

Chapter 8: Validity

Validity is defined as “the degree to which evidence and theory support the interpretations of

test scores for proposed uses. Validity is, therefore, the most fundamental consideration in

developing tests and evaluating tests” (AERA, APA, & NCME, 2014, p. 11). This chapter

summarizes evidence based on test content, internal structure, and relations to other variables.

Chapter 1: Introduction

This technical report documents the processes and procedures employed by NWEA® to build

and support the MAP® Growth™ and MAP Growth K–2 assessments for use with the Common

Core State Standards (CCSS; National Governors Association Center for Best Practices &

Council of Chief State School Officers [CCSSO], 2010)¹ and Next Generation Science

Standards (NGSS; NGSS Lead States, 2013).²

1.1. MAP Growth Overview

MAP Growth assessments are interim adaptive tests that measure a student’s academic

achievement and growth in Reading, Language Usage, Mathematics, and Science, as shown in

Table 1.1. The assessments are untimed and can be administered up to four times a year: in the

fall, winter, and spring, with an optional fourth administration in summer. It generally takes

students about one hour to complete each MAP Growth test.

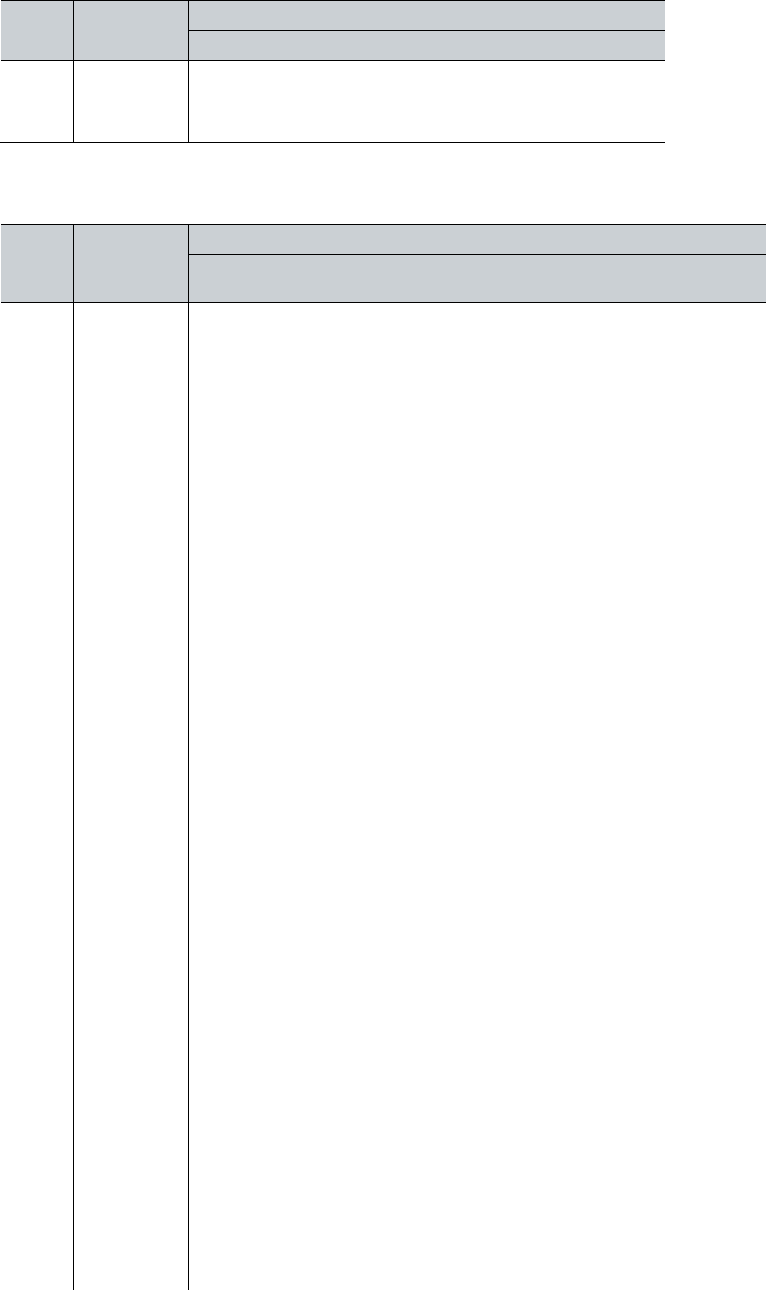

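Table 1.1. MAP Growth Assessed Grades by Content Area

| Content Area | K | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Reading | X | X | X | X | X | X | X | X | X | X | X | X | X |
| Mathematics | X | X | X | X | X | X | X | X | X | X | X | X | X |
| Language Usage |  |  | X | X | X | X | X | X | X | X | X | X | X |
| Science* |  |  | X | X | X | X | X | X | X | X | X | X | X |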

*MAP Growth Science assessments in Grades 9–12 were published for the first time in July 2018. MAP Growth

Science 3–5 can be administered to students in Grades 2–5. The MAP Growth Science 6+ assessments can be

administered to students in Grades 6–12.

MAP Growth assessments have many benefits, including the following:

• Dynamic adjustment to each student’s achievement level, providing an accurate

indication of their performance and instructional level

• Performance and growth summaries of an individual student and group of students at

the grade, classroom, school, and district levels relative to a reference group of

examinees

• Frequent administrations throughout the year, allowing teachers to make timely

instructional adjustments

• Grade-independent scaling that allows educators to monitor a student's academic

achievement and growth regardless of the student’s current grade level

• Score reports that include status and growth scores for describing a student's learning

from different perspectives

• Untimed test administrations to best measure what students know rather than what they

can read and complete in a fixed period of time

¹ © Copyright 2010 National Governors Association Center for Best Practices and Council of Chief State School Officers. All rights reserved.

² Next Generation Science Standards is a registered trademark of Achieve. Neither Achieve nor the lead states and partners that developed the Next Generation Science Standards were involved in the production of this product, and do not endorse it.

MAP Growth has an item bank containing more than 42,000 items aligned to various content

standards. Many states use the CCSS and NGSS, but NWEA also creates a unique set of item

pools and assessments for states that have their own state-specific content standards. For each

version of the MAP Growth assessment, NWEA content specialists review the standards, select

items from the MAP Growth item bank that directly align to the standard statements, and write

new items to ensure coverage of the standards. MAP Growth items are dichotomously scored

multiple-choice items or technology-enhanced items (TEIs). Each MAP Growth adaptive

assessment selects items balanced across the breadth of student learning expectations,

ensuring that students see a variety of content across the standards.

MAP Growth assessments are designed to provide accurate measurement of student

performance by featuring content across grades and adjusting the assessment outside of grade

level. For example, a Grade 3 student would see items aligned to the Grade 3 standards but

could also see items aligned to higher and lower grade levels depending on their test

performance. Because MAP Growth is administered adaptively, individual students’ learning

levels, not simply grade-specific achievement levels, are identified. This means that off-grade

alignment may be appropriate for an individual student.

Each MAP Growth assessment produces a score in the overall content area, as well as

instructional area subscores that can be used to tailor instructional practices and identify

specific content a student is most ready to learn. MAP Growth scores are reported on the

NWEA Rasch Unit (RIT) scale, an equal-interval vertical scale that is continuous across grades

and unique to each content area. Tests of the same content area share a common RIT scale.

Score reports also include achievement and growth norms used by teachers to set learning

goals for students and provide context for interpreting changes in RIT scores related to the age

and grade of students. NWEA conducts MAP Growth norming studies every three to five years.

The 2015 MAP Growth norms (Thum & Hauser, 2015) are the most recent.
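
The link between the Rasch logit metric and the RIT scale can be illustrated with a short Python sketch. It assumes the linear transformation commonly cited in NWEA documentation, RIT = 10 × θ + 200, where θ is an ability estimate in logits. This section does not state those constants, so they, along with the function names `logit_to_rit` and `rit_to_logit`, should be read as assumptions made for illustration.

```python
# Illustrative only: assumes the linear logit-to-RIT transformation
# RIT = 10 * theta + 200, which is not stated in this section.
RIT_SLOPE = 10.0       # assumed RIT points per logit
RIT_INTERCEPT = 200.0  # assumed RIT value at 0 logits


def logit_to_rit(theta: float) -> float:
    """Convert a Rasch ability (or item difficulty) in logits to a RIT value."""
    return RIT_SLOPE * theta + RIT_INTERCEPT


def rit_to_logit(rit: float) -> float:
    """Convert a RIT value back to the logit metric."""
    return (rit - RIT_INTERCEPT) / RIT_SLOPE


if __name__ == "__main__":
    for theta in (-2.0, 0.0, 1.5):
        print(f"theta = {theta:+.1f} logits -> RIT {logit_to_rit(theta):.0f}")
```

Because the assumed transformation is linear, equal differences in logits correspond to equal differences in RIT, which is consistent with the description of the RIT scale as an equal-interval scale.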

Changes in students’ test scores over time may be interpreted as growth in academic

achievement. MAP Growth reveals how much growth has occurred between testing events and,

when combined with the NWEA norms, shows how growth compares to a reference group of

students. Educators can track growth through the school year and over multiple years, as

shown in Figure 1.1.

Figure 1.1. Tracking Growth

1.2. Background

NWEA was founded in 1973 by a group of school districts looking for practical answers to the following

questions. To this day, these questions remain central to the mission of NWEA and, more

broadly, to educational assessment and research.

• How can student achievement be efficiently and accurately measured?

• How can assessment results be leveraged to inform instruction?

• How can the rate of learning be accelerated using assessment information?

In 1977, NWEA became an incorporated not-for-profit and began to work with individual school

districts in Oregon and Washington (with Portland providing the largest sample of students) to

write and field test items that covered the spectrum of student performance in Grades 3–8 in

Reading and Mathematics. This work allowed NWEA to create the Achievement Level Tests

(ALTs) to improve measurement for students who were progressing normally, falling behind

their peers, or excelling beyond their peers. These tests used a multi-stage test design and

were administered in paper-pencil form (Ingebo, 1997). The multiple levels made ALTs more

precise than a fixed-form test but also logistically complex to administer. These tests were

constructed from the NWEA item banks to fit the content standards of each school district.

In 1985, NWEA began to work with districts in Oregon and Washington to create adaptive tests

administered on personal computers to make the assessment even more efficient and precise.

By this time, NWEA had expanded its testing capabilities to include high school grades and had

added content in Language Usage and Science. These tests used the full range of adaptive

testing capabilities developed in universities to improve measurement (Weiss & Vale, 1987;

Kingsbury & Weiss, 1980). These adaptive tests provided excellent measurement accuracy for

a variety of students. However, due to the limitations on computers available in the schools,

limitations on networking, and limitations on the client-server software available at that time,

most districts continued to use the ALTs and used the NWEA adaptive tests only for special-

purpose testing.

In 2000, NWEA released Measures of Academic Progress® (MAP®) using improvements in

educational technology. These tests used expanded item pools and took advantage of

technological advancements to allow schools to replace their ALTs with adaptive tests for all but

a few students with special needs. Since almost every state had a set of content standards in

place at the time of the release of MAP, specific items were selected from the item banks to

match the content standards in each state.

In 2006, NWEA responded to the growing need for better assessment of younger students by

introducing MAP for Primary Grades (MPG). These assessments include audio support to

enable students who are beginning readers to access the content and demonstrate their

achievement. They include adaptive tests and a set of specific fixed-form pre-tests designed to

measure precursor skills that are common to kindergarten curriculum.

Since 2017, MAP and MPG have been known as MAP Growth and MAP Growth K–2,

respectively. The client-server version of MAP Growth was also retired in 2017 and replaced by

the web-based version. As of February 2018, NWEA has partnered with more than 9,700

education organizations worldwide and has reached approximately 11 million students.

1.3. Rationale

The rationale behind the development of MAP Growth has two primary aspects:

1. The quest for accurate measurement for all students

2. A need to provide schools with tests that match their academic content standards

1.3.1. Accurate Measurement

Fixed-form tests tend to lack information for certain segments of the student population. For

example, if a fixed-form test is designed to measure well for the middle of the distribution of

students, most of the items will be concentrated near the middle of the distribution. These items

will be too difficult for students who are struggling and too easy for students who are excelling.

This means that the result of the test will provide less information for students at the extreme

ends of the distribution than it provides for the students near the middle. Giving the teacher less

information about students at the low or high end of the distribution makes it more difficult to

target instruction for those students. This is an equity issue for these students, and it certainly

reduces the efficiency of teaching them.

The early NWEA researchers realized the equity problem and understood that the tests

available at the time failed to give equally precise information for all students. In searching for

answers to this problem, these researchers discovered two useful tools:

1. The Rasch item response theory (IRT) model (Rasch, 1960/1980) that allows the

development of item banks in which the items have known characteristics. This means

that the item characteristics, once estimated, can be applied to new groups of students

in the population of interest. This, in turn, makes it possible to create and administer

different tests to different students while placing all the test scores on a

common measurement scale.

2. Adaptive testing (Weiss, 1974) that draws items from an item pool according to the

performance of each student. As the student answers items correctly, the system

chooses more difficult items to administer. If the student answers items incorrectly, the

next item will be easier. This type of test allows the test developer to provide a test that

has scores with similar precision for every student tested, provided the item pool is large

enough and the adaptive testing design is adequate.
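
The first tool can be sketched in Python as follows. The sketch uses the standard Rasch response function and a textbook Newton-Raphson maximum-likelihood estimate of ability; the function names, the step damping, and the example difficulties are assumptions for illustration, not a description of the estimator NWEA uses operationally.

```python
import math
from typing import Sequence


def rasch_probability(theta: float, difficulty: float) -> float:
    """Probability of a correct response under the Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - difficulty)))


def estimate_ability(
    responses: Sequence[int],
    difficulties: Sequence[float],
    max_iterations: int = 100,
    tolerance: float = 1e-8,
) -> float:
    """Maximum-likelihood ability estimate via Newton-Raphson (a sketch)."""
    if len(responses) != len(difficulties) or not responses:
        raise ValueError("responses and difficulties must be non-empty and equal length")
    raw_score = sum(responses)
    if raw_score == 0 or raw_score == len(responses):
        # The likelihood has no finite maximum for all-wrong or all-right patterns.
        raise ValueError("ML estimate is undefined for zero or perfect scores")

    theta = 0.0
    for _ in range(max_iterations):
        probs = [rasch_probability(theta, b) for b in difficulties]
        gradient = raw_score - sum(probs)                # first derivative of the log-likelihood
        information = sum(p * (1.0 - p) for p in probs)  # negative second derivative
        step = gradient / information
        step = max(-1.0, min(1.0, step))                 # damp large steps for stability
        theta += step
        if abs(step) < tolerance:
            break
    return theta


if __name__ == "__main__":
    # Two students answer different items, yet both estimates are on the same scale.
    print(estimate_ability([1, 0], [-1.0, 1.0]))
    print(estimate_ability([1, 0], [1.0, 3.0]))
```

In the usage example, the two students see entirely different items, but because the item difficulties are expressed on one calibrated scale, the resulting ability estimates are directly comparable.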

The NWEA researchers employed both these tools to create large item banks calibrated to

known measurement scales. They then used these item banks to create adaptive tests that

measure the students in their schools well by presenting items that, given the purpose of the

test, are well matched to a student’s experience, characteristics, or behavior. This is known as

item targeting, which is a critical influence on test quality.

A fixed-form test might be carefully aligned to a set of specific content standards. If all students

in a class were taught according to those content standards, it might be concluded that the

items were targeted indirectly to the students through the content. This would be considered a

low level of item targeting because it is directed exclusively at the student’s experience and

ignores other student characteristics and behaviors. A test administered adaptively, on the other

hand, presents a higher level of targeting. Items presented may be selected from a core grade-

level content pool and from pools that extend both above and below the core pool. Items are

selected using a specified content structure. An algorithm estimates the student’s

achievement level after the student’s response to each item and randomly selects the next item

from all available items having difficulty values that match the estimate of the student’s

achievement. Such a test engages the student by presenting items that are neither too easy

(leading to boredom) nor too hard (leading to frustration).
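
The selection step described above can be sketched in Python. The sketch treats an item as matching the current estimate when its difficulty lies within a fixed window of that estimate; the window width, the fallback to the closest remaining item, and the item identifiers are all hypothetical, and content-structure constraints are omitted for brevity.

```python
import random
from typing import Dict, Optional, Set


def select_next_item(
    pool: Dict[str, float],
    theta: float,
    administered: Set[str],
    window: float = 0.5,
    rng: Optional[random.Random] = None,
) -> str:
    """Randomly pick an unused item whose difficulty is close to theta."""
    rng = rng or random.Random()
    available = {i: b for i, b in pool.items() if i not in administered}
    if not available:
        raise ValueError("no items left in the pool")

    # Assumed matching rule: difficulty within +/- window logits of the estimate.
    candidates = sorted(i for i, b in available.items() if abs(b - theta) <= window)
    if not candidates:
        # Assumed fallback: take the closest remaining item(s) if none fall in the window.
        best_gap = min(abs(b - theta) for b in available.values())
        candidates = sorted(i for i, b in available.items() if abs(b - theta) == best_gap)
    return rng.choice(candidates)


if __name__ == "__main__":
    # Hypothetical pool: item id -> difficulty in logits.
    pool = {"a": -2.0, "b": -0.3, "c": 0.2, "d": 0.4, "e": 2.5}
    print(select_next_item(pool, theta=0.0, administered=set(), rng=random.Random(0)))
```

Choosing at random among the matching items, rather than always taking the single closest one, means that students with similar estimates do not all see the same item.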

When a student remains sufficiently engaged in such a test, the measurement error associated

with the test score will be much smaller than that of a fixed-form test of the same length or even

somewhat longer. Therefore, an adaptive test makes efficient use of the time that the student

spends in the testing environment by maximizing the level of information that each item

contributes to the total test score. The result is total test scores with higher information values,

for virtually all students, than would be expected from a fixed-form test of the same length

administered to the same group of students.
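
The efficiency argument can be made concrete with a small Python sketch based on standard Rasch results: an item contributes information p(1 − p) at a given ability, and the standard error of a maximum-likelihood estimate is approximately one over the square root of the total information. The test lengths and difficulty values below are invented for illustration and do not describe any actual MAP Growth test.

```python
import math
from typing import Sequence


def item_information(theta: float, difficulty: float) -> float:
    """Rasch item information p * (1 - p); it peaks at 0.25 when difficulty equals theta."""
    p = 1.0 / (1.0 + math.exp(-(theta - difficulty)))
    return p * (1.0 - p)


def standard_error(theta: float, difficulties: Sequence[float]) -> float:
    """Approximate SEM in logits: 1 / sqrt(total test information)."""
    total_information = sum(item_information(theta, b) for b in difficulties)
    return 1.0 / math.sqrt(total_information)


if __name__ == "__main__":
    theta = 2.0  # a high-achieving student, in logits (hypothetical)
    fixed_form = [-1.0 + 0.1 * i for i in range(21)]  # 21 items centered on the middle
    targeted = [theta] * 21                           # idealized: every item matched to the student
    print(f"fixed-form SEM: {standard_error(theta, fixed_form):.3f}")
    print(f"targeted SEM:   {standard_error(theta, targeted):.3f}")
```

For a student far from the center of the distribution, the well-targeted items yield a smaller standard error than a fixed form of the same length, which is the pattern the paragraph above describes.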

1.3.2. Content Standards Match

Creation of the adaptive tests depends on the match of the item pools to the content standards

of the state. Another difficulty that struck NWEA researchers early on was that assessments

taken off the shelf rarely matched the content being taught in the schools. Further, since content

standards differed from state to state (and from district to district at that time), no one test could

capture the nuances associated with the way a content area was taught in schools from one

district or state to the next. It was clear that to establish consistent measurement across

locations, the assessment content had to be matched to the content standards of each agency

(i.e., a district or state).

The NWEA item banks are large and include content that goes beyond the bounds of any one

curriculum structure. Therefore, when developing MAP Growth assessments for an agency, only

a portion of the items in the item banks are included in the item pools for the assessments.

Content specialists isolate the items in the banks that match the respective content standards,

and only those items are included in the assessments. This allows the assessments to be

appropriate for the content standards of the agency. When this feature is combined with the

capabilities of adaptive testing using IRT, it provides an assessment that uses appropriate

content to measure all students in a school with a consistent level of accuracy.
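
The pool-building step can be pictured as a filter over the item bank. The following Python sketch is a simplified illustration: the `Item` fields, the standard codes, and the rule of keeping any item aligned to at least one agency standard are assumptions, and in practice the alignment decisions are made by content specialists rather than by a lookup.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Set


@dataclass(frozen=True)
class Item:
    item_id: str
    content_area: str
    standards: FrozenSet[str]  # hypothetical codes for the standards the item aligns to


def build_item_pool(
    bank: Iterable[Item], content_area: str, agency_standards: Set[str]
) -> List[Item]:
    """Keep items in the content area that align to at least one agency standard."""
    return [
        item
        for item in bank
        if item.content_area == content_area and item.standards & agency_standards
    ]


if __name__ == "__main__":
    bank = [
        Item("m1", "Mathematics", frozenset({"STD.3.A"})),
        Item("m2", "Mathematics", frozenset({"STD.9.Z"})),
        Item("r1", "Reading", frozenset({"STD.3.A"})),
    ]
    pool = build_item_pool(bank, "Mathematics", {"STD.3.A", "STD.4.B"})
    print([item.item_id for item in pool])
```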

1.4. Intended Uses of Test Scores

MAP Growth assessment data can be used in numerous ways to support student growth and

achievement. NWEA supports the use of MAP Growth scores to:

• Monitor student achievement and growth over time, from kindergarten to high school

• Plan instruction for individual students and groups of students at the classroom, grade,

school, and district levels

• Compare student performances within normed groups

• Make universal screening and placement decisions within a response to intervention

(RTI) framework or for talented and gifted programs

• Predict student performance on external measures of academic achievement, such as

the ACT®, SAT®, and statewide summative achievement tests

• Evaluate programs and conduct school improvement planning

• Summarize scores for district- or school-level resource allocation

• Combine RIT scores with other information (e.g., homework, classroom tests, state

assessments) to make educational decisions

Chapter 2: Test Design

The design of each MAP Growth test starts with an analysis of the content standards to be

assessed. Items that align to standards are included in a pool and grouped into instructional

areas and sub-areas. Although each item pool is tailored to specific standards, all MAP Growth

assessments follow the same design principles and content rationale. These principles and

rationales are described in this chapter, along with procedures for aligning items to the

standards and constructing and validating the assessments.

2.1. Design Principles

This section describes the design principles that provide the foundation for the MAP Growth

assessments, including six guiding principles and universal design.

2.1.1. Six Guiding Principles

The MAP Growth system was designed according to guiding principles that reflect educators’

needs and help NWEA design assessments for a specific educational purpose. Given its

intended purpose, the test should:

1. Be challenging for a student across all items. It should not be frustrating or boring. The

goal is to minimize disengagement that can affect a student’s results. The adaptivity of

MAP Growth ensures that students are presented with content that is neither too far

above nor too far below their achievement level.

2. Be economical in its use of student time. It should provide as much information as

possible for the time it takes to administer. The adaptivity of MAP Growth helps

decrease the amount of testing time required for accurate results.

3. Provide a reflection of a student’s achievement that is as accurate and reliable as

needed for the decisions to be made based on its results. This is demonstrated by score

precision as measured by the standard error of measurement (SEM). The adaptivity of

MAP Growth helps lower the SEM, which indicates greater precision in the scores.

4. Consist of content the student should have had an opportunity to learn. The alignment

of test items to partner standards ensures that students encounter expected content.

5. Provide information about a student’s change in achievement level from one test

occasion to another, as well as the student’s current achievement level. A single test

result is only a snapshot of student achievement. Multiple snapshots are needed to

gauge a student’s growth over time.

6. Provide results to educators and other stakeholders as quickly as possible while

maintaining a high level of integrity in the reported results.

2.1.2. Universal Design

Test development incorporates Universal Design for Learning (UDL) principles to address the

needs of diverse populations of students taking the MAP Growth assessments. The NWEA

content team applies the UDL principles summarized in Table 2.1 (Thompson, Johnstone, &

Thurlow, 2002) and the UDL guidelines (Center for Applied Special Technology [CAST], 2018)

when creating test items. These principles improve tests and test fairness by removing

characteristics of tests that are unrelated to the measured construct but may inadvertently affect

test scores. The result is a more accurate score for the student and a clearer picture of what the

student knows and can do. UDL also provides a framework for incorporating flexibility in the ways

the content is presented and how students respond or show their knowledge, and it allows

multiple ways for students to be engaged.

Table 2.1. Universal Design Principles

| UDL Principle | Description |
|---|---|
| Inclusive assessment population | Field tests should include students with a wide range of abilities, students with limited English proficiency, and students across racial, ethnic, and socioeconomic lines. |
| Precisely defined constructs | The test design is clear on the construct(s) to be measured, the purpose for which scores will be used, and the inferences that will be made from the scores. Universally designed assessments do this by removing barriers, which are referred to as construct-irrelevant variance. |
| Accessible, non-biased items | To ensure the quality of items, a differential item functioning (DIF) analysis can investigate whether certain items perform differently for various subpopulations. Additionally, using a bias, sensitivity, and fairness panel can help eliminate bias before the item is seen by students. |
| Amenable to accommodations | Accommodations are used to increase access to assessments and to the items within the assessments. Accommodations change the environment or the way the test is presented or responded to and are typically used by students with disabilities and by English language learners (ELLs). |
| Simple, clear, and intuitive instructions and procedures | Assessments should be easy to understand regardless of a student’s knowledge and experience. The instructions and procedures of the test and the items should not create barriers for students. The student must be able to access the test as intended. |
| Maximum readability and comprehensibility | Ensuring readability and comprehensibility is important for clarity and access purposes. It is vital that the construct to be measured is presented clearly with plain language and at the appropriate reading level. |
| Maximum legibility | This refers to the capability of being deciphered with ease. |

2.2. Types of MAP Growth Assessments

There are several types of MAP Growth assessments, as shown in Table 2.2. MAP Growth

assessments are offered for different grade bands (K–2, 2–5, and 6+) and account for the

developmental needs of students at different age levels.

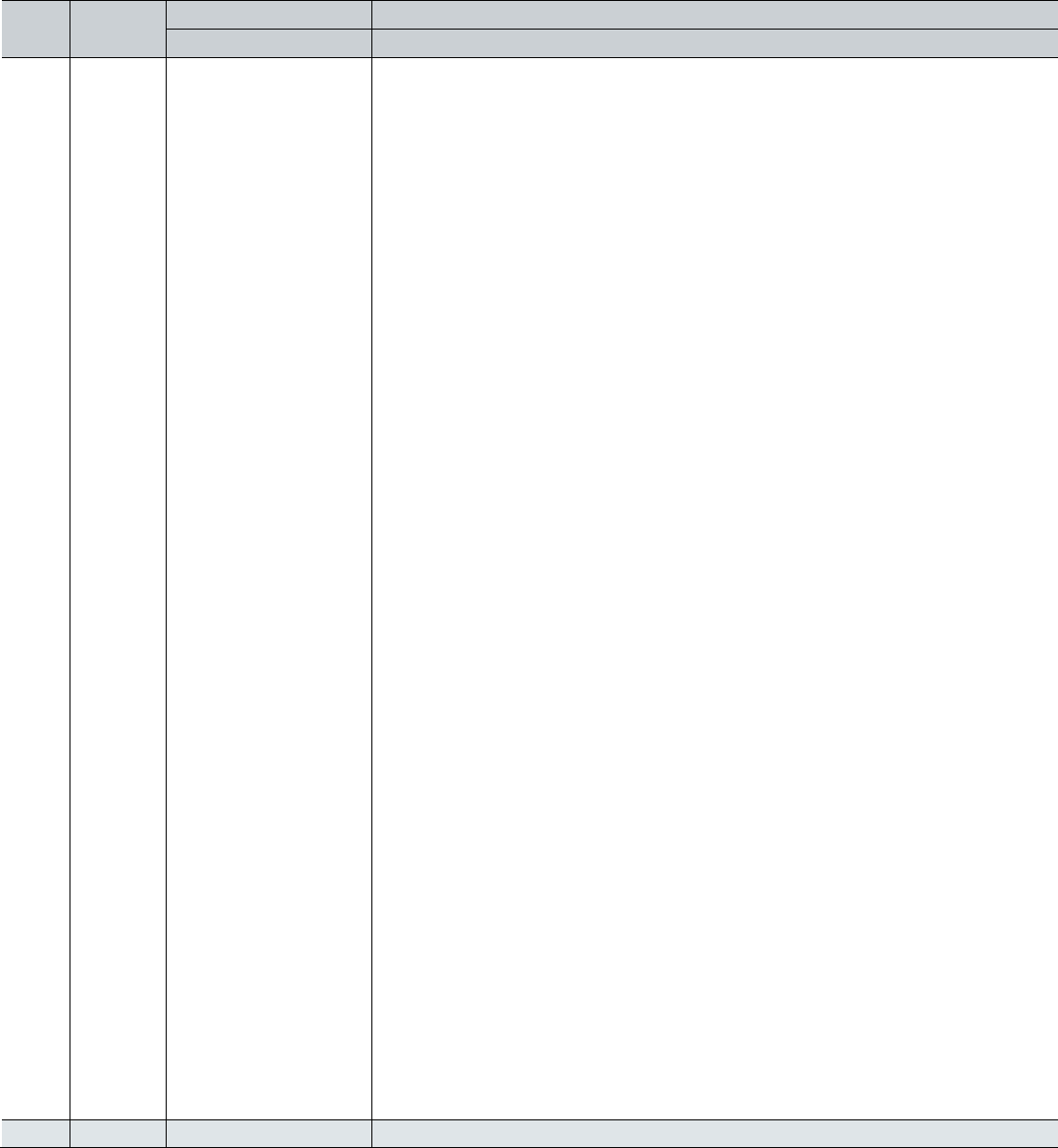

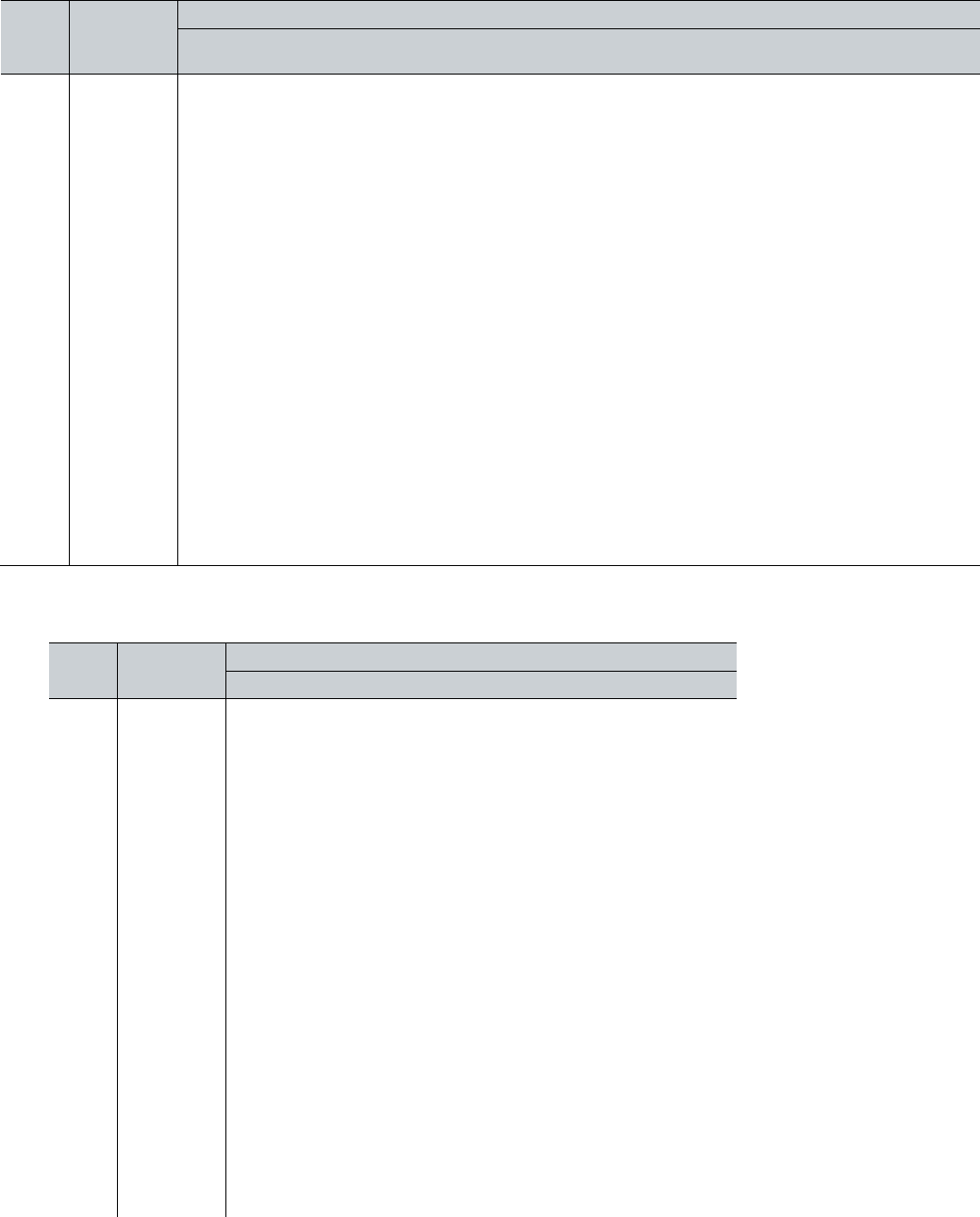

Table 2.2. MAP Growth Assessments

| Test Type | Description | Testing Frequency | Content Areas |
|---|---|---|---|
| MAP Growth K–2 | Adaptive test with a cross-grade vertical scale that assesses achievement according to standards-aligned content. Scores from repeated administrations are used to measure growth over time. | Four times per year (three times per school year, plus an optional summer administration) | Reading; Mathematics |
| MAP Growth 2–12 | Adaptive test with a cross-grade vertical scale that assesses achievement according to standards-aligned content. Scores from repeated administrations are used to measure growth over time. | Four times per year (three times per school year, plus an optional summer administration) | Reading; Language Usage; Mathematics; Science |
| Course-Specific High School Mathematics | Adaptive test designed to measure specific content a student may understand in one specialty of Mathematics. It can be used to measure growth over one academic year, fall to spring. Resulting scores provide one indicator of whether a student is ready to move to the next Mathematics course. | Two to three times per year | Algebra I, II; Geometry; Integrated Mathematics I, II, III |
| High School Discipline-Specific MAP Growth Science | Adaptive test designed to measure specific content a student may understand in Life Science. It can be used to measure growth over one academic year, fall to spring. Resulting scores provide one indicator of growth for high school Life Science. | Two to three times per year | 9–12 Life Science |

2.2.1. MAP Growth K–2

MAP Growth K–2 assessments in Reading and Mathematics are designed for students in the

primary grades of kindergarten through Grade 2. MAP Growth K–2 includes an adaptive Growth

test (formerly known as Survey with Goals), Screening tests, and Skills Checklist tests.³

• Screening tests are designed to get baseline information for a new student who is in the

earliest stages of learning. They are administered once at the end of pre-K or when a

student enters kindergarten. These tests are designed to assess the most foundational

skills of literacy and numeracy and are helpful in gathering information about students for

whom a teacher may have no previous data.

• Skills Checklists are diagnostic tests that assess knowledge of a specific skill before or

after teaching it, or after seeing screening or growth results. Skills Checklists cover a

subset of the early reading and early numeracy skills taught in Grades K–2. Each skill

area has its own individual assessment. These tests are not adaptive and give students

the same items every time they take the same Skills Checklist test. These items are not

part of the MAP Growth vertical RIT scale. Skills Checklist tests can be administered as

many times as necessary during the school year between Growth assessments to

assess skills identified as needing work or currently being instructed in the classroom.

Early identification of each student’s achievement level provides a strong foundation for

educators to use in establishing an environment for academic success. The MAP Growth K–2

assessments are designed to:

• Provide student achievement and growth information to aid instructional decisions during

the early stages of a student's academic career

• Identify the needs of a variety of primary grade students, from struggling to advanced

learners

• Use engaging items, interactive elements, and audio to encourage student participation

for more accurate results and to help beginning readers understand the items

All MAP Growth K–2 items include some audio. The amount of audio in each item depends on

the skill being assessed, but the stem (i.e., the question in the item) is always read aloud. In

other words, every K–2 item has audio, but some items only have audio on the stem while other

items are completely presented in audio. For example, number answers in Mathematics items

are not typically read, and some standards ask students to identify the number words, so no

audio is provided. When the item loads, at least some audio is played automatically. The

student can replay any part that has audio. Some graphics also have audio that identifies the

graphic (e.g., a graphic of a peach pit may have the audio “pit” associated with it).

³ Screening tests and Skills Checklist tests are not included in the psychometric analyses described in this technical report.

Most of the content in the MAP Growth Mathematics K–2 assessments has audio. For MAP

Growth Reading K–2, audio is provided on items where decoding is not the skill being

assessed. For example, items use audio in Reading Foundational Skills to allow students to

hear words and associated sounds. Audio support for K–2 students in Reading is essential for

assessing foundational content such as phonological awareness and phonics. Since students in

Grades K–2 are learning to read rather than reading to learn, providing audio ensures that they

will be measured based on what they know and can do, rather than solely on their current

reading ability. For assessing comprehension, the assessment includes items that:

• Assess listening comprehension

• Provide audio support with text

• Have audio to be used at the discretion of the student

• Include no audio at all, other than the directions and stem

Professional voiceover artists are used so that items sound as natural and fluent as possible.

These professionals are chosen for their voice timbre and crispness of enunciation. The

voiceover artists are directed to read the content the way they would read to a child, with natural

pacing and appropriate enunciation.

2.2.2. MAP Growth 2–12

MAP Growth 2–12 assessments measure what students know and inform what they are ready

to learn in Reading, Language Usage, Mathematics, and Science. They include an adaptive

Growth test and Screening tests. The Screening tests for Grades 2–12 are 20-item adaptive

tests that yield an overall score and are administered only once to a student for intake or

placement purposes. MAP Growth Mathematics tests are also available for high school students

in Algebra 1, Algebra 2, Geometry, and Integrated Mathematics 1, 2, and 3. MAP Growth

Science tests are also available for high school students in Life Science (Biology). MAP Growth

2–12 tests are content area specific and built to adhere to the content of agency-specific

standards. Test content is organized into large categories called instructional areas and sub-

areas. The number of instructional areas ranges from three to seven per test depending on the

content area. MAP Growth assessments provide instructional area scores in each content area

that supplement an overall score.

2.3. Content Design Rationale

2.3.1. Reading and Language Usage

MAP Growth assesses English Language Arts (ELA) on two scales: Reading and Language

Usage. For MAP Growth assessments from Grades 2–12, tests on the Reading scale address

reading comprehension, understanding of genres and text, and vocabulary. Assessments on the

Language Usage scale cover grammar, mechanics, and the elements of writing. MAP Growth

Reading K–2 tests are also on the Reading scale but cover some elements of Language Usage

as well as Reading. The MAP Growth Reading K–2 and MAP Growth Reading and Language

Usage 2–12 literature reviews (Jiban, 2017) establish a rationale for why Reading and

Language Usage are combined on the Reading K–2 test but have separate scales for 2+.

MAP Growth Reading is broken into K–2, 2–5, and 6+ tests. The K–2 test provides targeted

audio support and addresses skills appropriate for students who are learning to read, including

Reading Foundational Skills and Language and Writing standards. In contrast, students who

take the 2–5 and 6+ tests tend to have better reading skills than primary students. The split

between the 2–5 and 6+ tests helps ensure that students see content appropriate to their age

and achievement level. For example, when taking the 6+ test, middle school students reading

below grade level will see texts that allow them to demonstrate their reading skills without

including overly juvenile references that may be perceived as demeaning. Similarly, advanced

elementary readers will be challenged with increasingly complex texts without encountering

excerpts from Shakespeare or college course catalogs for which they have no frame of

reference.

MAP Growth Language Usage is designed for Grades 2–12 and provides an in-depth, focused

exploration of grammar, mechanics, and the elements of writing. Students see increasingly

challenging items as their writing abilities grow and flourish, building on the early foundations to

add nuance and complexity.

2.3.2. Mathematics

MAP Growth Mathematics is broken into K–2, 2–5, 6+, and high school tests. The decision to

have separate K–2 tests was influenced by the unique learning needs of young students and

the types of skills assessed at this level, such as counting and cardinality. Audio is provided for

K–2 students who are still learning to read and thus require audio support for a fair assessment of their

Mathematics skills. MAP Growth Mathematics tests are built for grade bands 2–5 and 6+

because new content is often introduced at the Grade 6 level as students move into middle

school mathematics courses. There is overlap of content across the 2–5 and 6+ tests to support

students performing both above and below grade expectations. High school Mathematics tests

were created to meet the specific structure of course-based mathematics at the high school

level.

2.3.3. Science

MAP Growth Science is broken into grade band tests according to the structure of the standards

and breadth of the MAP Growth item bank. Some Science tests are offered with grade bands 3–5,

6–8, and 9–12, while some are offered as 3–5 and 6+. The decision to separate the tests into

grade bands was influenced by content appropriateness and standard coverage. This ensures

that only well-aligned, appropriate content is part of each test.

2.4. MAP Growth Transition

MAP Growth assessments in each content area and grade band have some overlap in grades

and content covered, which is essential given the adaptive nature of the assessments.

Determining which assessment is most appropriate for each student depends on the purposes

of the assessments, the intentions and uses of the results, and each assessment’s

measurement characteristics. There may be times when comparisons across students, classes,

schools, or even districts are desirable or are required by state policy. In those cases, it is

important to have data from the same MAP Growth assessment for a given grade (e.g., all

Grade 2 students taking MAP Growth 2–5).

Grade 2 content is represented in the MAP Growth K–2 tests and the Reading 2–5, Language

Usage 2–12, and Mathematics 2–5 tests. MAP Growth K–2 and 2–5 transition decisions should

consider students’ reading readiness and exposure to content. NWEA recommends that students

take the same test throughout a school year. Students should not switch tests mid-year,

because strong growth comparisons from fall to spring depend on using the same test.

2.5. Instructional Areas and Sub-areas

Each MAP Growth test is defined by a content area such as Mathematics and a grade band

such as 2–5. Within each test, the content is further defined by instructional areas such as

Geometry, Number Sense, and Measurement that are derived from the structure of the content

standards and provide information about how the content area is represented in the test. The

instructional areas act as reporting categories. As another layer of defining the test content,

each instructional area is further divided into sub-areas. The instructional areas and sub-areas

from each MAP Growth test are posted online for partner viewing and use at

https://cdn.nwea.org/state-information/index.html. As examples, Table 2.3 – Table 2.9 present

the instructional area charts for MAP Growth tests for use with the CCSS and NGSS.

Once NWEA content specialists have created instructional areas and sub-areas for a test, they

align standard statements to these areas to establish the test structure and content. This

combination of instructional areas, sub-areas, and standard statements is called a test blueprint.

Once the blueprints are created, the MAP Growth item bank is reviewed, and appropriate items

are aligned to the standards. During test administration, the blueprint helps drive item selection

to ensure that items presented to a student cover all instructional areas at a difficulty level

appropriate to that student's performance, both overall and within each instructional area. Item

selection is not restricted to items within a student's grade, allowing MAP Growth to better target

students who are performing above or below the grade level mean for an instructional area.
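
The role of the blueprint in item selection can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the MAP Growth selection algorithm: the `Item` class, the `next_item` function, the "least-covered area first, then closest difficulty" rule, and all item values are hypothetical. The instructional area names are the examples given above.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass(frozen=True)
class Item:
    item_id: str
    area: str          # instructional area the item is aligned to
    difficulty: float  # on the same scale as the performance estimate
    grade: int         # recorded, but deliberately not used as a filter


def next_item(pool: List[Item], areas: List[str], counts: Dict[str, int],
              target: float, used: Set[str]) -> Optional[Item]:
    """Pick an item from the least-covered area, closest in difficulty to target."""
    # Content balancing: the area seen least so far goes next (ties: list order).
    area = min(areas, key=lambda a: counts.get(a, 0))
    candidates = [i for i in pool if i.area == area and i.item_id not in used]
    if not candidates:
        return None
    # Difficulty targeting: no grade filter, so off-grade items remain eligible.
    return min(candidates, key=lambda i: abs(i.difficulty - target))


# Made-up items for illustration only.
AREAS = ["Geometry", "Number Sense", "Measurement"]
POOL = [
    Item("g1", "Geometry", 190.0, 2),
    Item("g2", "Geometry", 215.0, 4),
    Item("n1", "Number Sense", 205.0, 3),
    Item("m1", "Measurement", 230.0, 5),
]

# No items seen yet, estimate of 210: the Grade 4 Geometry item is the closest match.
print(next_item(POOL, AREAS, {}, 210.0, set()))
```

Because the grade field never enters the selection rule, a student performing above or below grade level can still receive items near the current estimate, which is the behavior the paragraph above describes.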

Table 2.3. Instructional Area Chart for use with CCSS—Reading K–2

| CCSS Reading Strands | Instructional Areas & Sub-Areas: MAP Growth Reading K–2 |
| --- | --- |
| Reading: Foundational Skills • Print Concepts • Phonological Awareness • Phonics and Word Recognition | Foundational Skills • Phonics and Word Recognition • Phonological Awareness • Print Concepts |
| Writing • Text Types and Purposes • Production and Distribution of Writing • Research to Build and Present Knowledge; Language • Conventions of Standard English • Knowledge of Language | Language and Writing • Capitalize, Spell, Punctuate • Language: Grammar, Usage • Writing: Purposes: Plan, Develop, Edit |
| Reading: Literature • Key Ideas and Details • Craft and Structure • Integration of Knowledge and Ideas; Reading: Informational Text • Key Ideas and Details • Craft and Structure • Integration of Knowledge and Ideas; Speaking and Listening • Comprehension and Collaboration (SL.2) | Literature and Informational Text • Literature: Key Ideas, Craft, Structure • Informational Text: Key Ideas, Details, Craft, Structure |
| Language • Vocabulary Acquisition and Use; Speaking and Listening • Presentation of Knowledge and Ideas (SL.4) | Vocabulary Use and Functions • Language: Context Clues and References • Vocabulary Acquisition and Use |

Table 2.4. Instructional Area Chart for use with CCSS—Reading 2–5 and 6+

| CCSS Reading Strands* | Instructional Areas & Sub-Areas: MAP Growth Reading 2–5 and 6+ |
| --- | --- |
| Reading: Literature • Key Ideas and Details • Integration of Knowledge and Ideas (RL.9) | Literary Text: Key Ideas and Details • Draw Conclusions, Infer, Predict • Summarize; Analyze Themes, Characters, and Events |
| Reading: Literature • Craft and Structure • Integration of Knowledge and Ideas (RL.7); Language • Vocabulary Acquisition and Use (L.5) | Literary Text: Language, Craft and Structure • Figurative, Connotative Meanings; Tone • Point of View, Purpose, Perspective • Text Structures, Text Features |
| Reading: Informational Text • Key Ideas and Details • Integration of Knowledge and Ideas (RI.9) | Informational Text: Key Ideas and Details • Draw Conclusions, Infer, Predict • Summarize; Analyze Central Ideas, Concepts and Events |
| Reading: Informational Text • Craft and Structure • Integration of Knowledge and Ideas (RI.7, RI.8); Language • Vocabulary Acquisition and Use (L.5) | Informational Text: Language, Craft and Structure • Point of View, Purpose, Perspective, Figurative and Rhetorical Language • Text Structures, Text Features |
| Reading: Informational Text • Craft and Structure (RI.4); Language • Vocabulary Acquisition and Use (L.4, L.5, L.6) | Vocabulary: Acquisition and Use • Context Clues and Multiple-Meaning words • Word Relationships and Nuance • Word Parts, Reference, and Academic Vocabulary |

*Where strands are mapped among multiple goals, specific standards are indicated for each goal.

Table 2.5. Instructional Area Chart for use with CCSS—Language Usage 2–12

| CCSS Reading Strands* | Instructional Areas & Sub-Areas: MAP Growth Language Usage 2–12 |
| --- | --- |
| Writing • Text Types and Purposes • Production and Distribution of Writing • Research to Build and Present Knowledge; Language • Knowledge of Language | Writing: Write, Revise Texts for Purpose and Audience • Plan and Organize; Create Cohesion, Use Transitions • Provide Support; Develop Topics; Conduct Research • Establish and Maintain Style; Use Precise Language |
| Language • Conventions of Standard English (L.1) | Language: Understand, Edit for Grammar, Usage • Parts of Speech • Phrases, Clauses, Agreement, Sentences |
| Language • Conventions of Standard English (L.2) | Language: Understand, Edit for Mechanics • Capitalization • Punctuation • Spelling |

Table 2.6. Instructional Area Chart for use with CCSS—Mathematics K–2 and 2–5

CCSS Mathematics Domains

Instructional Areas & Sub-Areas

• Counting & Cardinality

• Operations & Algebraic Thinking

• Number & Operations in Base Ten

• Number & Operations – Fractions

• Measurement & Data

• Geometry

MAP Growth Mathematics K–2

Operations and Algebraic Thinking

• Represent and Solve Problems

• Properties of Operations

Number and Operations

• Understand Place Value, Counting, and Cardinality

• Number and Operations: Base Ten and Fractions

Measurement and Data

• Solve Problems Involving Measurement

• Represent and Interpret Data

Geometry

• Reason with Shapes and Their Attributes

MAP Growth Mathematics 2–5

Operations and Algebraic Thinking

• Represent and Solve Problems

• Analyze Patterns and Relationships

Number and Operations

• Understand Place Value, Counting, and Cardinality

• Number and Operations in Base Ten

• Number and Operations – Fractions

Measurement and Data

• Geometric Measurement and Problem Solving

• Represent and Interpret Data

Geometry

• Reason with Shapes, Attributes, & Coordinate Plane

Table 2.7. Instructional Area Chart for use with CCSS—Mathematics 6+

CCSS Mathematics Domains

Instructional Areas & Sub-Areas

MAP Growth Mathematics 6+

• Ratios & Proportional Relationships

• The Number System

• Expressions & Equations

• Functions

• Geometry

• Statistics & Probability

Operations and Algebraic Thinking

• Expressions and Equations

• Use Functions to Model Relationships

The Real and Complex Number Systems

• Ratios and Proportional Relationships

• Perform Operations

• Extend and Use Properties

Geometry

• Geometric Measurement and Relationships

• Congruence, Similarity, Right Triangles, & Trigonometry

Statistics and Probability

• Interpreting Categorical and Quantitative Data

• Using Sampling and Probability to Make Decisions

Table 2.8. Instructional Area Chart for use with CCSS—High School Mathematics

CCSS Mathematics Courses/ Domains

Instructional Areas & Sub-Areas

High School: Number and Quantity

• The Real Number System

• Quantities

• The Complex Number System

• Vector & Matrix Quantities

MAP Growth Mathematics Algebra 1

Equations and Inequalities

• Reason Quantitatively and Use Units

• Creating Equations and Inequalities

• Reasoning with Equations and Inequalities

High School: Algebra

• Seeing Structure in Expressions

• Arithmetic with Polynomials & Rational Expressions

• Creating Equations

• Reasoning with Equations & Inequalities

High School: Functions

• Interpreting Functions

• Building Functions