15

Scalable Atomic Visibility with RAMP Transactions

PETER BAILIS, Stanford University

ALAN FEKETE, University of Sydney

ALI GHODSI, JOSEPH M. HELLERSTEIN, and ION STOICA, UC Berkeley

Databases can provide scalability by partitioning data across several servers. However, multipartition, multioperation transactional access is often expensive, employing coordination-intensive locking, validation, or scheduling mechanisms. Accordingly, many real-world systems avoid mechanisms that provide useful semantics for multipartition operations. This leads to incorrect behavior for a large class of applications including secondary indexing, foreign key enforcement, and materialized view maintenance. In this work, we identify a new isolation model, Read Atomic (RA) isolation, that matches the requirements of these use cases by ensuring atomic visibility: either all or none of each transaction’s updates are observed by other transactions. We present algorithms for Read Atomic Multipartition (RAMP) transactions that enforce atomic visibility while offering excellent scalability, guaranteed commit despite partial failures (via coordination-free execution), and minimized communication between servers (via partition independence). These RAMP transactions correctly mediate atomic visibility of updates and provide readers with snapshot access to database state by using limited multiversioning and by allowing clients to independently resolve nonatomic reads. We demonstrate that, in contrast with existing algorithms, RAMP transactions incur limited overhead, even under high contention, and scale linearly to 100 servers.

Categories and Subject Descriptors: H.2.4 [Systems]: Distributed Databases

General Terms: Design, Algorithms, Performance

Additional Key Words and Phrases: Atomic visibility, transaction processing, NoSQL, secondary indexing,

materialized views

ACM Reference Format:

Peter Bailis, Alan Fekete, Ali Ghodsi, Joseph M. Hellerstein, and Ion Stoica. 2016. Scalable atomic visibility

with RAMP transactions. ACM Trans. Database Syst. 41, 3, Article 15 (July 2016), 45 pages.

DOI: http://dx.doi.org/10.1145/2909870

1. INTRODUCTION

Faced with growing amounts of data and unprecedented query volume, distributed

databases increasingly split their data across multiple servers, or partitions, such that

no one partition contains an entire copy of the database [Baker et al. 2011; Chang et al.

2006; Curino et al. 2010; Das et al. 2010; DeCandia et al. 2007; Kallman et al. 2008;

This research was supported by NSF CISE Expeditions award CCF-1139158 and DARPA XData Award

FA8750-12-2-0331, the National Science Foundation Graduate Research Fellowship (grant DGE-1106400),

and gifts from Amazon Web Services, Google, SAP, Apple, Inc., Cisco, Clearstory Data, Cloudera, EMC,

Ericsson, Facebook, GameOnTalis, General Electric, Hortonworks, Huawei, Intel, Microsoft, NetApp, NTT

Multimedia Communications Laboratories, Oracle, Samsung, Splunk, VMware, WANdisco and Yahoo!.

Authors’ addresses: P. Bailis, 353 Serra Mall, Stanford University, Stanford, CA 94305; email:

ney, NSW 2006, Australia; email: alan.fekete@sydney.edu.au; A. Ghodsi, J. M. Hellerstein, and I. Stoica, 387

Soda Hall, Berkeley, CA 94720; emails: {alig, hellerstein, istoica}@cs.berkeley.edu.

Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted

without fee provided that copies are not made or distributed for profit or commercial advantage and that

copies show this notice on the first page or initial screen of a display along with the full citation. Copyrights for

components of this work owned by others than ACM must be honored. Abstracting with credit is permitted.

To copy otherwise, to republish, to post on servers, to redistribute to lists, or to use any component of this

work in other works requires prior specific permission and/or a fee. Permissions may be requested from

Publications Dept., ACM, Inc., 2 Penn Plaza, Suite 701, New York, NY 10121-0701 USA, fax +1 (212)

869-0481, or [email protected].

© 2016 ACM 0362-5915/2016/07-ART15 $15.00


ACM Transactions on Database Systems, Vol. 41, No. 3, Article 15, Publication date: July 2016.


Thomson et al. 2012]. This strategy succeeds in allowing near-unlimited scalability

for operations that access single partitions. However, operations that access multiple

partitions must communicate across servers—often synchronously—in order to provide

correct behavior. Designing systems and algorithms that tolerate these communication

delays is a difficult task but is key to maintaining scalability [Corbett et al. 2012;

Kallman et al. 2008; Jones et al. 2010; Pavlo et al. 2012].

In this work, we address a largely underserved class of applications requiring multipartition, atomically visible¹ transactional access: cases where all or none of each

transaction’s effects should be visible. The status quo for these multipartition atomic

transactions provides an uncomfortable choice between algorithms that are fast but

deliver inconsistent results and algorithms that deliver consistent results but are often

slow and unavailable under failure. Many of the largest modern, real-world systems

opt for protocols that guarantee fast and scalable operation but provide few—if any—

transactional semantics for operations on arbitrary sets of data items [Bronson et al.

2013; Chang et al. 2006; Cooper et al. 2008; DeCandia et al. 2007; Hull 2013; Qiao et al.

2013; Weil 2011]. This may lead to anomalous behavior for several use cases requiring

atomic visibility, including secondary indexing, foreign key constraint enforcement, and

materialized view maintenance (Section 2). In contrast, many traditional transactional

mechanisms correctly ensure atomicity of updates [Bernstein et al. 1987; Corbett et al.

2012; Thomson et al. 2012]. However, these algorithms (such as two-phase locking and variants of optimistic concurrency control) are often coordination intensive, slow, and, under failure, unavailable in a distributed environment [Bailis et al. 2014a; Curino

et al. 2010; Jones et al. 2010; Pavlo et al. 2012]. This dichotomy between scalability

and atomic visibility has been described as “a fact of life in the big cruel world of huge

systems” [Helland 2007]. The proliferation of nontransactional multi-item operations

is symptomatic of a widespread “fear of synchronization” at scale [Birman et al. 2009].

Our contribution in this article is to demonstrate that atomically visible transactions

on partitioned databases are not at odds with scalability. Specifically, we provide high-

performance implementations of a new, nonserializable isolation model called Read

Atomic (RA) isolation. RA ensures that all or none of each transaction’s updates are

visible to others and that each transaction reads from an atomic snapshot of database

state (Section 3), which is useful in the applications we target. We subsequently develop three new, scalable algorithms for achieving RA isolation that we collectively

title Read Atomic Multipartition (RAMP) transactions (Section 4). RAMP transactions

guarantee scalability and outperform existing atomic algorithms because they satisfy

two key scalability constraints. First, RAMP transactions guarantee coordination-free

execution: one client’s transactions cannot cause another client’s transactions to stall or

fail. Second, RAMP transactions guarantee partition independence: clients only contact

partitions that their transactions directly reference (i.e., there is no central master, coordinator, or scheduler). Together, these properties ensure limited coordination across

partitions and horizontal scalability for multipartition access.

RAMP transactions are scalable because they appropriately control the visibility

of updates without inhibiting concurrency. Rather than force concurrent reads and

writes to stall, RAMP transactions allow reads to “race” writes: RAMP transactions can

autonomously detect the presence of nonatomic (partial) reads and, if necessary, repair

them via a second round of communication with servers. To accomplish this, RAMP

writers attach metadata to each write and use limited multiversioning to prevent

readers from stalling. The three algorithms we present offer a trade-off between the

size of this metadata and performance. RAMP-Small transactions require constant space

¹ Our use of “atomic” (specifically, RA isolation) concerns all-or-nothing visibility of updates (i.e., the ACID isolation effects of ACID atomicity; Section 3). This differs from uses of “atomicity” to denote serializability [Bernstein et al. 1987] or linearizability [Attiya and Welch 2004].


(a timestamp per write) and two round-trip time delays (RTTs) for reads and writes.

RAMP-Fast transactions require metadata size that is linear in the number of writes

in the transaction but only require one RTT for reads in the common case and two in

the worst case. RAMP-Hybrid transactions employ Bloom filters [Bloom 1970] to provide

an intermediate solution. Traditional techniques like locking couple atomic visibility

and mutual exclusion; RAMP transactions provide the benefits of the former without

incurring the scalability, availability, or latency penalties of the latter.

In addition to providing a theoretical analysis and proofs of correctness, we demonstrate that RAMP transactions deliver in practice. Our RAMP implementation achieves linear scalability to over 7 million operations per second on a 100-server cluster (at overhead below 5% for a workload of 95% reads). Moreover, across a range of workload configurations, RAMP transactions incur limited overhead compared to other techniques and achieve higher performance than existing approaches to atomic visibility (Section 5).

While the literature contains an abundance of isolation models [Bailis et al. 2014a;

Adya 1999], we believe that the large number of modern applications requiring RA

isolation and the excellent scalability of RAMP transactions justify the addition of yet

another model. RA isolation is too weak for some applications, but, for the many that

it can serve, RAMP transactions offer substantial benefits.

The remainder of this article proceeds as follows: Section 2 presents an overview of RAMP transactions and describes key use cases based on industry reports. Section 3 defines RA isolation, presents both a detailed comparison with existing isolation guarantees and a syntactic condition, the Read-Subset-Writes property, that guarantees equivalence to serializable isolation, and defines two key scalability criteria for RAMP algorithms to provide. Section 4 presents and analyzes three RAMP algorithms, which we experimentally evaluate in Section 5. Section 6 presents modifications of the RAMP protocols to better support multidatacenter deployments and to enforce transitive dependencies. Section 7 compares with related work, and Section 8 concludes with a discussion of promising future extensions to the protocols presented here.

2. OVERVIEW AND MOTIVATION

In this article, we consider the problem of making transactional updates atomically

visible to readers—a requirement that, as we outline in this section, is found in several

prominent use cases today. The basic property we provide is fairly simple: either all or

none of each transaction’s updates should be visible to other transactions. For example,

if $x$ and $y$ are initially null and a transaction $T_1$ writes $x = 1$ and $y = 1$, then another transaction $T_2$ should not read $x = 1$ and $y = \text{null}$. Instead, $T_2$ should either read $x = 1$ and $y = 1$ or, possibly, $x = \text{null}$ and $y = \text{null}$. Informally, each transaction reads from an

unchanging snapshot of database state that is aligned along transactional boundaries.

We call this property atomic visibility and formalize it via the RA isolation guarantee

in Section 3.

The classic strategy for providing atomic visibility is to ensure mutual exclusion

between readers and writers. For example, if a transaction like $T_1$ above wants to

update data items x and y, it can acquire exclusive locks for each of x and y, update

both items, then release the locks. No other transactions will observe partial updates

to x and y, ensuring atomic visibility. However, this solution has a drawback: while one

transaction holds exclusive locks on x and y, no other transactions can access x and y

for either reads or writes. By using mutual exclusion to enforce the atomic visibility of

updates, we have also limited concurrency. In our example, if x and y are located on

different servers, concurrent readers and writers will be unable to perform useful work

during communication delays. These communication delays form an upper bound on

throughput: effectively, $\frac{1}{\text{message delay}}$ operations per second.
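To make this bound concrete, the following minimal sketch evaluates 1/(message delay) for two delays. The delay values (0.5 ms and 100 ms) are assumptions chosen purely for illustration, not measurements from this article, and the function name is hypothetical.

```python
def max_ops_per_second(message_delay_s: float) -> float:
    """Upper bound on throughput for items locked across one message delay.

    If exclusive locks on a set of items are held for the duration of one
    message delay, at most 1 / delay transactions per second can access them.
    """
    if message_delay_s <= 0:
        raise ValueError("message delay must be positive")
    return 1.0 / message_delay_s


# Illustrative delays only (assumed values, not taken from the article).
for label, delay_s in [("0.5 ms delay", 0.0005), ("100 ms delay", 0.1)]:
    print(f"{label}: at most {max_ops_per_second(delay_s):.0f} ops/s")
```

Under these assumed delays, the bound is roughly 2,000 and 10 operations per second on the contended items, regardless of how many servers are added.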


To avoid this upper bound, we separate the problem of providing atomic visibility

from the problem of maintaining mutual exclusion. By achieving the former but avoid-

ing the latter, the algorithms we develop in this article are not subject to the scalability

penalties of many prior approaches. To ensure that all servers successfully execute a

transaction (or that none do), our algorithms employ an Atomic Commitment Proto-

col (ACP). When coupled with a blocking concurrency control mechanism like locking,

ACPs are harmful to scalability and availability: arbitrary failures can (provably) cause

any ACP implementation to stall [Bernstein et al. 1987]. (Optimistic concurrency con-

trol mechanisms can similarly block during validation.) We instead use ACPs with

nonblocking concurrency control mechanisms; this means that individual transactions

can stall due to failures or communication delays without forcing other transactions

to stall. In a departure from traditional concurrency control, we allow multiple ACP

rounds to proceed in parallel over the same data.

The resulting RAMP transactions provide excellent scalability and performance under contention (e.g., in the event of write hotspots) and are robust to partial failure. RAMP transactions’ nonblocking behavior means that they cannot provide certain guarantees like preventing concurrent updates. However, applications that can use

RA isolation will benefit from our algorithms. The remainder of this section identifies

several relevant use cases from industry that require atomic visibility for correctness.

2.1. RA Isolation in the Wild

As a simple example, consider a social networking application: if two users, Sam and

Mary, become “friends” (a bidirectional relationship), other users should never see

that Sam is a friend of Mary but Mary is not a friend of Sam: either both relationships

should be visible, or neither should be. A transaction under RA isolation would correctly

enforce this behavior. We can further classify three general use cases for RA isolation:

(1) Foreign key constraints. Many database schemas contain information about relationships between records in the form of foreign key constraints. For example, Facebook’s TAO [Bronson et al. 2013], LinkedIn’s Espresso [Qiao et al. 2013], and Yahoo! PNUTS [Cooper et al. 2008] store information about business entities such as users, photos, and status updates as well as relationships between them (e.g., the previous friend relationships). Their data models often represent bidirectional edges as two distinct unidirectional relationships. For example, in TAO, a user performing a “like” action on a Facebook page produces updates to both the LIKES and LIKED_BY associations [Bronson et al. 2013]. PNUTS’s authors describe an identical scenario [Cooper et al. 2008]. These applications require foreign key maintenance and often, due to their unidirectional relationships, multientity update and access. Violations of atomic visibility surface as broken bidirectional relationships (as with Sam and Mary previously) and dangling or incorrect references. For example, clients should never observe that Frank is an employee of department.id=5 when no such department exists in the department table.

With RAMP transactions, when inserting new entities, applications can bundle

relevant entities from each side of a foreign key constraint into a transaction. When

deleting associations, users can avoid dangling pointers by creating a “tombstone”

at the opposite end of the association (i.e., delete any entries with associations via

a special record that signifies deletion) [Zdonik 1987].

(2) Secondary indexing. Data is typically partitioned across servers according to a primary key (e.g., user ID). This allows fast location and retrieval of data via primary key lookups but makes access by secondary attributes challenging (e.g., indexing by birth date). There are two dominant strategies for distributed secondary indexing. First, the local secondary index approach colocates secondary indexes and


primary data, so each server contains a secondary index that only references and

indexes data stored on its server [Baker et al. 2011; Qiao et al. 2013]. This allows

easy, single-server updates but requires contacting every partition for secondary

attribute lookups (write-one, read-all), compromising scalability for read-heavy

workloads [Bronson et al. 2013; Corbett et al. 2012; Qiao et al. 2013]. Alternatively,

the global secondary index approach locates secondary indexes (which may be partitioned, but by a secondary attribute) separately from primary data [Cooper et al.

2008; Baker et al. 2011]. This alternative allows fast secondary lookups (read-one)

but requires multipartition update (at least write-two).

Real-world services employ either local secondary indexing (e.g., Espresso [Qiao

et al. 2013], Cassandra, and Google Megastore’s local indexes [Baker et al. 2011])

or nonatomic (incorrect) global secondary indexing (e.g., Espresso and Megastore’s

global indexes, Yahoo! PNUTS’s proposed secondary indexes [Cooper et al. 2008]).

The former is nonscalable but correct, while the latter is scalable but incorrect.

For example, in a database partitioned by id with an incorrectly maintained global secondary index on salary, the query “SELECT id, salary WHERE salary > 60,000” might return records with salary less than $60,000 and omit some records with salary greater than $60,000.

With RAMP transactions, the secondary index entry for a given attribute can

be updated atomically with base data. For example, suppose a secondary index

is stored as a mapping from secondary attribute values to sets of item versions

matching the secondary attribute (e.g., the secondary index entry for users with

blue hair would contain a list of user IDs and last-modified timestamps corresponding to all of the users with attribute hair-color=blue). Insertions of new primary

data require additions to the corresponding index entry, deletions require removals,

and updates require a “tombstone” deletion from one entry and an insertion into

another.

(3) Materialized view maintenance. Many applications precompute (i.e., materialize) queries over data, as in Twitter’s Rainbird service [Weil 2011], Google’s Percolator [Peng and Dabek 2010], and LinkedIn’s Espresso systems [Qiao et al. 2013].

As a simple example, Espresso stores a mailbox of messages for each user along

with statistics about the mailbox messages: for Espresso’s read-mostly workload,

it is more efficient to maintain (i.e., prematerialize) a count of unread messages

rather than scan all messages every time a user accesses her mailbox [Qiao et al.

2013]. In this case, any unread message indicators should remain in sync with

the messages in the mailbox. However, atomicity violations will allow materialized

views to diverge from the base data (e.g., Susan’s mailbox displays a notification

that she has unread messages but all 60 messages in her inbox are marked as

read).

With RAMP transactions, base data and views can be updated atomically. The

physical maintenance of a view depends on its specification [Chirkova and Yang

2012; Huyn 1998; Blakeley et al. 1986], but RAMP transactions provide appropriate concurrency control primitives for ensuring that changes are delivered to the

materialized view partition. For select-project views, a simple solution is to treat

the view as a separate table and perform maintenance as needed: new rows can

be inserted/deleted according to the specification, and if necessary, the view can

be (re-)computed on demand (i.e., lazy view maintenance [Zhou et al. 2007]). For

more complex views, such as counters, users can execute RAMP transactions over

specialized data structures such as the CRDT G-Counter [Shapiro et al. 2011].
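The secondary indexing case in item (2) can be sketched as the set of writes an application might bundle into a single atomically visible transaction: the base record, a tombstone in the old index entry, and an insertion into the new index entry. This is only an illustration. The key naming scheme (`user:…`, `index:…`), the record layout, and the function name are hypothetical, and the sketch does not show how the writes are committed (the RAMP algorithms appear in Section 4).

```python
from typing import List, NamedTuple, Optional


class Write(NamedTuple):
    key: str      # hypothetical key naming scheme, for illustration only
    value: dict


def index_update_writes(user_id: str, attr: str, old: Optional[str],
                        new: Optional[str], ts: int) -> List[Write]:
    """Collect the writes that should become visible together.

    old=None models an insertion of new primary data; new=None models a deletion.
    """
    writes: List[Write] = []
    if new is not None:
        writes.append(Write(f"user:{user_id}", {attr: new, "ts": ts}))
    else:
        writes.append(Write(f"user:{user_id}", {"deleted": True, "ts": ts}))
    if old is not None and old != new:
        # "Tombstone" deletion from the index entry for the old attribute value.
        writes.append(Write(f"index:{attr}={old}",
                            {"user_id": user_id, "ts": ts, "tombstone": True}))
    if new is not None and old != new:
        # Insertion into the index entry for the new attribute value.
        writes.append(Write(f"index:{attr}={new}",
                            {"user_id": user_id, "ts": ts, "tombstone": False}))
    return writes


for write in index_update_writes("42", "hair-color", "brown", "blue", ts=7):
    print(write.key, write.value)
```

An update therefore touches three keys that may live on three different partitions, which is why atomic visibility across partitions matters for this use case.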

In brief: Status Quo. Despite application requirements for RA isolation, few large-scale production systems provide it. For example, the authors of TAO, Espresso, and


PNUTS describe several classes of atomicity anomalies exposed by their systems, ranging from dangling pointers to the exposure of intermediate states and incorrect secondary index lookups, often highlighting these cases as areas for future research and

design [Bronson et al. 2013; Qiao et al. 2013; Cooper et al. 2008]. These systems are

not exceptions: data stores like Bigtable [Chang et al. 2006], Dynamo [DeCandia et al.

2007], and many popular “NoSQL” [Mohan 2013] and even some “NewSQL” [Bailis et al.

2014a] stores do not provide transactional guarantees for multi-item operations. Unless

users are willing to sacrifice scalability by opting for serializable semantics [Corbett

et al. 2012], they are often left without transactional semantics.

The designers of these Internet-scale, real-world systems have made a conscious

decision to provide scalability at the expense of multipartition transactional semantics.

Our goal with RAMP transactions is to preserve this scalability but deliver correct,

atomically visible behavior for the use cases we have described.

3. SEMANTICS AND SYSTEM MODEL

In this section, we formalize RA isolation and, to capture scalability, formulate a pair

of strict scalability criteria: coordination-free execution and partition independence.

Readers more interested in RAMP algorithms may wish to proceed to Section 4.

3.1. RA Isolation: Formal Specification

To formalize RA isolation, as is standard [Adya 1999; Bernstein et al. 1987], we consider

ordered sequences of reads and writes to arbitrary sets of items, or transactions. We

call the set of items a transaction reads from and writes to its item read set and item

write set. Each write creates a version of an item and we identify versions of items by

a timestamp taken from a totally ordered set (e.g., natural numbers) that is unique

across all versions of each item. Timestamps therefore induce a total order on versions

of each item, and we denote version $i$ of item $x$ as $x_i$. All items have an initial version $\bot$ that is located at the start of each order of versions for each item and is produced by an initial transaction $T_\bot$. Each transaction ends in a commit or an abort operation;

we call a transaction that commits a committed transaction and a transaction that

aborts an aborted transaction. In our model, we consider histories comprised of a set of

transactions along with their read and write operations, versions read and written, and

commit or abort operations. In our example histories, all transactions commit unless

otherwise noted.

Definition 3.1 (Fractured Reads). A transaction $T_j$ exhibits the fractured reads phenomenon if transaction $T_i$ writes versions $x_a$ and $y_b$ (in any order, where $x$ and $y$ may or may not be distinct items), $T_j$ reads version $x_a$ and version $y_c$, and $c < b$.

We also define RA isolation to prevent transactions from reading uncommitted or

aborted writes. This is needed to capture the notion that, under RA isolation, readers only observe the final output of a given transaction that has been accepted by

the database. To do so, we draw on existing definitions from the literature on weak

isolation. Though these guarantees predate Adya’s dissertation [Adya 1999], we use

his formalization of them, which, for completeness, we reproduce in the following in

the context of our system model. We provide some context for the interested reader; however, Adya [1999] provides the most comprehensive treatment.

Read dependencies capture behavior where one transaction observes another transaction’s writes.

Definition 3.2 (Read-Depends). Transaction $T_j$ directly read-depends on $T_i$ if transaction $T_i$ writes some version $x_i$ and $T_j$ reads $x_i$.


Antidependencies capture behavior where one transaction overwrites the versions that another transaction reads. In a multiversioned model like Adya’s, we define overwrites according to the version order defined for that item.

Definition 3.3 (Antidepends). Transaction $T_j$ directly antidepends on $T_i$ if transaction $T_i$ reads some version $x_k$ and $T_j$ writes $x$’s next version (after $x_k$) in the version order.

Note that the transaction that wrote the later version directly antidepends on the

transaction that read the earlier version.

Write dependencies capture behavior where one transaction overwrites another

transaction’s writes.

Definition 3.4 (Write-Depends). Transaction $T_j$ directly write-depends on $T_i$ if $T_i$ writes a version $x_i$ and $T_j$ writes $x$’s next version (after $x_i$) in the version order.

We can combine these three kinds of labeled edges into a data structure called the

Direct Serialization Graph.

Definition 3.5 (Direct Serialization Graph). We define the Direct Serialization Graph (DSG) arising from a history $H$, denoted by $DSG(H)$, as follows. Each node in the graph corresponds to a committed transaction and directed edges correspond to different types of direct conflicts. There is a read dependency edge, write dependency edge, or antidependency edge from transaction $T_i$ to transaction $T_j$ if $T_j$ directly read-depends, write-depends, or antidepends on $T_i$, respectively.
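Definitions 3.2 through 3.5 can be read as a procedure for deriving edges from a history. The following is a minimal sketch of that procedure under simplifying assumptions that are ours rather than the article’s: every transaction in the input has committed, each transaction reads and writes each item at most once, versions are integers, the initial version ⊥ is encoded as 0, and edges from a transaction to itself are omitted. The function and type names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Set, Tuple

BOTTOM = 0  # assumed encoding of the initial version; sorts before all writes

# Each committed transaction: {"reads": {item: version}, "writes": {item: version}}
History = Dict[str, Dict[str, Dict[str, int]]]
Edge = Tuple[str, str, str, str]  # (from T_i, to T_j, kind, item)


def build_dsg(history: History) -> Set[Edge]:
    """Return DSG edges; an edge T_i -> T_j means T_j depends on T_i."""
    writer: Dict[Tuple[str, int], str] = {}
    order: Dict[str, List[int]] = defaultdict(list)
    for name, txn in history.items():
        for item, version in txn["writes"].items():
            writer[(item, version)] = name
            order[item].append(version)
    for versions in order.values():
        versions.sort()  # timestamps induce the version order per item

    def next_writer(item: str, version: int) -> Optional[str]:
        """Transaction that wrote the item's next version after `version`."""
        for later in order.get(item, []):
            if later > version:
                return writer[(item, later)]
        return None

    edges: Set[Edge] = set()
    for name, txn in history.items():
        for item, version in txn["reads"].items():
            source = writer.get((item, version))
            if source is not None and source != name:
                edges.add((source, name, "read-dependency", item))
            overwriter = next_writer(item, version)
            if overwriter is not None and overwriter != name:
                # The overwriter antidepends on the reader (Definition 3.3).
                edges.add((name, overwriter, "antidependency", item))
        for item, version in txn["writes"].items():
            overwriter = next_writer(item, version)
            if overwriter is not None and overwriter != name:
                edges.add((name, overwriter, "write-dependency", item))
    return edges


example = {
    "T1": {"reads": {}, "writes": {"x": 1}},
    "T2": {"reads": {"x": 1}, "writes": {"x": 2}},
    "T3": {"reads": {"x": BOTTOM}, "writes": {}},
}
for edge in sorted(build_dsg(example)):
    print(edge)
```

In this small example, T2 both read-depends and write-depends on T1, and T1 antidepends on T3 because T1 wrote the next version after the initial version that T3 read.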

We can subsequently define several kinds of undesirable behaviors by stating properties about the DSG. The first captures the intent of the Read Uncommitted isolation

level [Berenson et al. 1995].

Definition 3.6 (G0: Write Cycles). A history H exhibits phenomenon G0 if DSG(H)

contains a directed cycle consisting entirely of write-dependency edges.

The next three undesirable behaviors comprise the intent of the Read Committed

isolation level [Berenson et al. 1995].

Definition 3.7 (G1a: Aborted Reads). A history $H$ exhibits phenomenon G1a if $H$ contains an aborted transaction $T_a$ and a committed transaction $T_c$ such that $T_c$ reads a version written by $T_a$.

Definition 3.8 (G1b: Intermediate Reads). A history $H$ exhibits phenomenon G1b if $H$ contains a committed transaction $T_i$ that reads a version of an object $x_j$ written by transaction $T_f$, and $T_f$ also wrote a version $x_k$ such that $j < k$.

The definition of the Fractured Reads phenomenon subsumes the definition of G1b. For completeness, and to prevent confusion, we include G1b here and in the discussion that follows.

Definition 3.9 (G1c: Circular Information Flow). A history H exhibits phenomenon

G1c if DSG(H) contains a directed cycle that consists entirely of read-dependency and

write-dependency edges.

Our RAMP protocols prevent G1c by assigning the final write to each item in each

transaction the same timestamp. However, to avoid further confusion between the

standard practice of assigning each final write in a serializable multiversion history

the same timestamp [Bernstein et al. 1987] and the flexibility of timestamp assignment admitted in Adya’s formulation of weak isolation, we continue with the previous

definitions.


As Adya describes, the previous criteria prevent readers from observing uncommitted versions (i.e., those produced by a transaction that has not committed or aborted), aborted versions (i.e., those produced by a transaction that has aborted), or intermediate versions (i.e., those produced by a transaction that were later overwritten by writes to the same items by the same transaction).

We can finally define RA isolation:

Definition 3.10 (RA). A system provides RA isolation if it prevents fractured reads

phenomena and also proscribes phenomena G0, G1a, G1b, and G1c (i.e., prevents transactions from reading uncommitted, aborted, or intermediate versions).

Thus, RA informally provides transactions with a “snapshot” view of the database

that respects transaction boundaries (see Sections 3.3 and 3.4 for more details, including a discussion of transitivity). RA is simply a restriction on write visibility: RA requires that all or none of a transaction’s updates are made visible to other transactions.

3.2. RA Implications and Limitations

As outlined in Section 2.1, RA isolation matches many of our use cases. However, RA

is not sufficient for all applications. RA does not prevent concurrent updates or provide

serial access to data items; that is, under RA, two transactions are never prevented

from both producing different versions of the same data items. For example, RA is

an incorrect choice for an application that wishes to maintain positive bank account

balances in the event of withdrawals. RA is a better fit for our “friend” operation

because the operation is write-only and correct execution (i.e., inserting both records)

is not conditional on preventing concurrent updates.

From a programmer’s perspective, we have found RA isolation to be most easily

understandable (at least initially) with read-only and write-only transactions; after

all, because RA allows concurrent writes, any values that are read might be changed

at any time. However, read-write transactions are indeed well defined under RA.

In Section 3.3, we describe RA’s relation to other formally defined isolation levels,

and in Section 3.4, we discuss when RA provides serializable outcomes.

3.3. RA Compared to Other Isolation Models

In this section, we illustrate RA’s relationship to alternative weak isolation models by

both example and reference to particular isolation phenomena drawn from Adya [1999]

and Bailis et al. [2014a]. We have included it in the extended version of this work in

response to valuable reader and industrial commentary requesting clarification on

exactly which phenomena RA isolation does and does not prevent.

For completeness, we first reproduce definitions of existing isolation models (again,

using Adya’s models) and then give example histories to compare and contrast with

RA isolation.

Read Committed. We begin with the common [Bailis et al. 2014a] Read Committed isolation. Note that the phenomena mentioned in the following were defined in Section 3.1.

Definition 3.11 (Read Committed (PL-2 or RC)). Read Committed isolation proscribes phenomena G1a, G1b, G1c, and G0.

RA is stronger than Read Committed as Read Committed does not prevent fractured

reads. History (1) does not respect RA isolation. After $T_1$ commits, $T_2$ and $T_3$ could both commit but, to prevent fractured reads, $T_4$ and $T_5$ must abort. History (1) respects


RC isolation and all transactions can safely commit.

$$
\begin{aligned}
T_1 &: w(x_1);\ w(y_1) \\
T_2 &: r(x_\perp);\ r(y_\perp) \\
T_3 &: r(x_1);\ r(y_1) \\
T_4 &: r(x_\perp);\ r(y_1) \\
T_5 &: r(x_1);\ r(y_\perp)
\end{aligned}
\tag{1}
$$
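As a hedged illustration, the following Python sketch checks each reader in History (1) against our reading of the fractured reads definition from Section 3.1: a reader that observes one of a writer's versions must not observe an older version of any other item that writer wrote. The integer encoding of versions (0 standing in for ⊥), the assumption that each transaction reads at most one version per item, and the name `has_fractured_read` are choices made for this example only.

```python
BOTTOM = 0  # stands in for the initial version ⊥ (assumption of this sketch)

def has_fractured_read(reads, write_sets):
    """reads: {item: version} observed by one transaction.
    write_sets: {version: set of items} for each writing transaction, where
    every item a transaction writes carries that transaction's version number.
    """
    for item, version in reads.items():
        if version == BOTTOM:
            continue
        # The reader saw `item` as written by transaction `version`; every
        # sibling item it also read must be at least that new.
        for sibling in write_sets[version]:
            if sibling in reads and reads[sibling] < version:
                return True
    return False

write_sets = {1: {"x", "y"}}  # T1: w(x1); w(y1)
history_1_readers = {
    "T2": {"x": BOTTOM, "y": BOTTOM},
    "T3": {"x": 1, "y": 1},
    "T4": {"x": BOTTOM, "y": 1},
    "T5": {"x": 1, "y": BOTTOM},
}
fractured = {t: has_fractured_read(r, write_sets)
             for t, r in history_1_readers.items()}
```

Under these assumptions only the readers that mix a version from $T_1$ with ⊥ are flagged, matching the discussion of which transactions must abort under RA.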

Lost Updates. Lost Updates phenomena informally occur when two transactions si-

multaneously attempt to make conditional modifications to the same data item(s).

Definition 3.12 (Lost Update). A history H exhibits the phenomenon Lost Updates

if DSG(H) contains a directed cycle having one or more antidependency edges and all

edges are by the same data item x.

That is, Lost Updates occur when the version that a transaction reads is overwritten

by a second transaction that the first transaction depends on. History (2) exhibits the

Lost Updates phenomenon: $T_1$ antidepends on $T_2$ because $T_2$ overwrites $x_\perp$, which $T_1$ read, and $T_2$ antidepends on $T_1$ because $T_1$ also overwrites $x_\perp$, which $T_2$ read. However, RA does not prevent Lost Updates phenomena, and History (2) is valid under RA. That is, $T_1$ and $T_2$ can both commit under RA isolation.

$$
\begin{aligned}
T_1 &: r(x_\perp);\ w(x_1) \\
T_2 &: r(x_\perp);\ w(x_2)
\end{aligned}
\tag{2}
$$

History (2) is invalid under a stronger isolation model that prevents Lost Updates

phenomena. For completeness, we provide definitions of two such models, Snapshot Isolation and Cursor Stability, in the following.

Informally, a history is snapshot isolated if each transaction reads a transaction-

consistent snapshot of the database and each of its writes to a given data item x is the

first write in the history after the snapshot that updates x. To more formally describe

Snapshot Isolation, we formalize the notion of a predicate-based read, as in Adya. Per

Adya, queries and updates may be performed on a set of items if a certain condition

called the predicate applies. We call the set of items that a predicate $P$ refers to $P$’s logical range and denote the set of versions returned by a predicate-based read $r_j(P_j)$ as $\mathit{Vset}(P_j)$. We say a transaction $T_i$ changes the matches of a predicate-based read $r_j(P_j)$ if $T_i$ overwrites a version in $\mathit{Vset}(P_j)$.

Definition 3.13 (Version Set of a Predicate-Based Operation). When a transaction

executes a read or write based on a predicate P, the system selects a version for each

item to which P applies. The set of selected versions is called the Version set of this

predicate-based operation and is denoted by Vset(P).

Definition 3.14 (Predicate-Many-Preceders (PMP)). A history H exhibits phenomenon PMP if, for all predicate-based reads $r_i(P_i : \mathit{Vset}(P_i))$ and $r_j(P_j : \mathit{Vset}(P_j))$ in $T_k$ such that the logical ranges of $P_i$ and $P_j$ overlap (call it $P_o$), the set of transactions that change the matches of $r_i(P_i)$ and $r_j(P_j)$ for items in $P_o$ differ.

To complete our definition of Snapshot Isolation, we must also consider a variation

of the DSG. Adya describes the Unfolded Serialization Graph (USG) in Adya [1999,

Section 4.2.1]. For completeness, we reproduce it here. The USG is specified for a

transaction of interest, $T_i$, and a history, H, and is denoted by $\mathit{USG}(H, T_i)$. For the USG, we retain all nodes and edges of the DSG except for $T_i$ and the edges incident on it. Instead, we split the node for $T_i$ into multiple nodes: one node for every read/write event in $T_i$. The edges are now incident on the relevant event of $T_i$.


$\mathit{USG}(H, T_i)$ is obtained by transforming $\mathit{DSG}(H)$ as follows: For each node $p$ ($p \neq T_i$) in $\mathit{DSG}(H)$, we add a node to $\mathit{USG}(H, T_i)$. For each edge from node $p$ to node $q$ in $\mathit{DSG}(H)$, where $p$ and $q$ are different from $T_i$, we draw a corresponding edge in $\mathit{USG}(H, T_i)$. Now we add a node corresponding to every read and write performed by $T_i$. Any edge that was incident on $T_i$ in the DSG is now incident on the relevant event of $T_i$ in the USG. Finally, consecutive events in $T_i$ are connected by order edges, e.g., if an action (e.g., SQL statement) reads object $y_j$ and immediately follows a write on object $x$ in transaction $T_i$, we add an order edge from $w_i(x_i)$ to $r_i(y_j)$.
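The transformation just described can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the edge encoding (each DSG edge annotated with the operation at either end that gave rise to it), the edge labels, and the function name `unfold` are hypothetical, and the sample graph is the three-transaction DSG induced by History (4) later in this section.

```python
def unfold(dsg_nodes, dsg_edges, ti, ti_events):
    """Build USG(H, ti) from DSG(H).

    dsg_edges: iterable of (src, dst, src_event, dst_event), where the event
    fields name the operation in src/dst that gave rise to the edge.
    ti_events: the reads and writes of ti, in program order.
    """
    # Keep every node except ti, then add one node per event of ti.
    nodes = [n for n in dsg_nodes if n != ti]
    nodes += [(ti, event) for event in ti_events]
    edges = []
    for src, dst, src_event, dst_event in dsg_edges:
        # Edges that touched ti are re-attached to the relevant event of ti.
        u = (ti, src_event) if src == ti else src
        v = (ti, dst_event) if dst == ti else dst
        edges.append((u, v, "dependency"))
    # Consecutive events of ti are connected by order edges.
    for earlier, later in zip(ti_events, ti_events[1:]):
        edges.append(((ti, earlier), (ti, later), "order"))
    return nodes, edges

nodes, edges = unfold(
    ["T1", "T2", "T3"],
    [("T1", "T2", "w(y1)", "r(y1)"),    # T2 reads y1 written by T1
     ("T2", "T3", "w(z2)", "r(z2)"),    # T3 reads z2 written by T2
     ("T3", "T1", "r(x⊥)", "w(x1)")],   # T1 overwrites x⊥, which T3 read
    "T3",
    ["r(x⊥)", "r(z2)"],
)
```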

Definition 3.15 (Observed Transaction Vanishes (OTV)). A history H exhibits phenomenon OTV if USG(H) contains a directed cycle consisting of exactly one read-dependency edge by x from $T_j$ to $T_i$ and a set of edges by y containing at least one antidependency edge from $T_i$ to $T_j$ and $T_j$’s read from y precedes its read from x.

Informally, OTV occurs when a transaction observes part of another transaction’s

updates but not all of them (e.g., $T_1$ writes $x_1$ and $y_1$ and $T_2$ reads $x_1$ and $y_\perp$). OTV is weaker than fractured reads because it allows transactions to read multiple versions of the same item: in our previous example, if another transaction $T_3$ also wrote $x_3$, $T_1$ could read $x_1$ and subsequently $x_3$ without exhibiting OTV (but this would exhibit fractured reads and therefore violate RA).

With these definitions in hand, we can finally define Snapshot Isolation.

Definition 3.16 (Snapshot Isolation). A system provides Snapshot Isolation if it

prevents phenomena G0, G1a, G1b, G1c, PMP, OTV, and Lost Updates.

Informally, cursor stability ensures that, while a transaction is updating a particular

item x in a history H, no other transactions will update x. We can also formally define

Cursor Stability per Adya.

Definition 3.17 (G-cursor(x)). A history H exhibits phenomenon G-cursor(x) if

DSG(H) contains a cycle with an antidependency and one or more write-dependency

edges such that all edges correspond to operations on item x.

Definition 3.18 (Cursor Stability). A system provides Cursor Stability if it prevents

phenomena G0, G1a, G1b, G1c, and G-cursor(x) for all x.

Under either Snapshot Isolation or Cursor Stability, in History (2), either $T_1$ or $T_2$, or both, would abort. However, Cursor Stability does not prevent fractured reads

phenomena, so RA and Cursor Stability are incomparable.

Write Skew. Write Skew phenomena informally occur when two transactions simultaneously attempt to make disjoint conditional modifications to the same data items.

Definition 3.19 (Write Skew (Adya G2-item)). A history H exhibits phenomenon

Write Skew if DSG(H) contains a directed cycle having one or more antidependency

edges.

RA does not prevent Write Skew phenomena. History (3) exhibits the Write Skew phenomenon (Adya’s G2): $T_1$ antidepends on $T_2$ and $T_2$ antidepends on $T_1$. However, History (3) is valid under RA. That is, $T_1$ and $T_2$ can both commit under RA isolation.

$$
\begin{aligned}
T_1 &: r(y_\perp);\ w(x_1) \\
T_2 &: r(x_\perp);\ w(y_2)
\end{aligned}
\tag{3}
$$
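To make the cycle-based definitions of Lost Update and Write Skew concrete, the sketch below builds dependency edges for Histories (2) and (3) and searches for a cycle containing an antidependency edge. It is a simplified illustration, not Adya's full formalism: versions are integers (0 for ⊥), the version order of each item is assumed to follow version numbers, an antidependency edge is drawn from the reader to the transaction that installs the next version of the item it read, and all function names are hypothetical.

```python
from collections import defaultdict

BOTTOM = 0  # version number standing in for ⊥ (assumption of this sketch)

def dsg_edges(history):
    """history: {txn: [(op, item, version), ...]} with op in {"r", "w"}.
    Returns a set of (src, dst, kind, item), kind in {"ww", "wr", "rw"}."""
    writer = {(item, ver): t
              for t, ops in history.items()
              for op, item, ver in ops if op == "w"}
    versions = defaultdict(list)
    for item, ver in writer:
        versions[item].append(ver)
    edges = set()
    for item, vers in versions.items():
        vers.sort()  # assumed version order: increasing version number
        for older, newer in zip(vers, vers[1:]):
            edges.add((writer[item, older], writer[item, newer], "ww", item))
    for t, ops in history.items():
        for op, item, ver in ops:
            if op != "r":
                continue
            if ver != BOTTOM and writer[item, ver] != t:
                edges.add((writer[item, ver], t, "wr", item))
            newer = [v for v in versions[item] if v > ver]
            if newer and writer[item, min(newer)] != t:
                edges.add((t, writer[item, min(newer)], "rw", item))
    return edges

def reachable(edges, start, goal):
    graph = defaultdict(set)
    for src, dst, _, _ in edges:
        graph[src].add(dst)
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        if node == goal:
            return True
        if node not in seen:
            seen.add(node)
            stack.extend(graph[node])
    return False

def has_anti_cycle(edges, single_item=False):
    """True if some directed cycle contains an antidependency ("rw") edge.
    With single_item=True, every edge of the cycle must concern one item."""
    for src, dst, kind, item in edges:
        if kind != "rw":
            continue
        pool = {e for e in edges if e[3] == item} if single_item else edges
        if reachable(pool, dst, src):
            return True
    return False

history_2 = {"T1": [("r", "x", BOTTOM), ("w", "x", 1)],
             "T2": [("r", "x", BOTTOM), ("w", "x", 2)]}
history_3 = {"T1": [("r", "y", BOTTOM), ("w", "x", 1)],
             "T2": [("r", "x", BOTTOM), ("w", "y", 2)]}
```

With the version order assumed here, History (2) yields a cycle on the single item x that includes an antidependency edge (a Lost Update), while History (3) yields a cycle of antidependency edges spanning two items (Write Skew but not Lost Update).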

History (3) is invalid under a stronger isolation model that prevents Write Skew phe-

nomena. One stronger model is Repeatable Read, defined next.


Definition 3.20 (Repeatable Read). A system provides Repeatable Read isolation if

it prevents phenomena G0, G1a, G1b, G1c, and Write Skew for nonpredicate reads and

writes.

Under Repeatable Read isolation, the system would abort either $T_1$, $T_2$, or both.

Adya’s formulation of Repeatable Read is considerably stronger than the ANSI SQL

standard specification [Bailis et al. 2014a].

Missing Dependencies. Notably, RA does not—on its own—prevent missing depen-

dencies or missing transitive updates. We reproduce Adya’s definitions as follows:

Definition 3.21 (Missing Transaction Updates). A transaction $T_j$ misses an effect of a transaction $T_i$ if $T_i$ writes $x_i$ and commits and $T_j$ reads another version $x_k$ such that $k < i$; that is, $T_j$ reads a version of x that is older than the version that was committed by $T_i$.

Adya subsequently defines a criterion that prohibits missing transaction updates

across all types of dependency edges:

Definition 3.22 (No-Depend-Misses). If transaction $T_j$ depends on transaction $T_i$, $T_j$ does not miss the effects of $T_i$.

History (4) fails to satisfy No-Depend-Misses but is still valid under RA. That is,

$T_1$, $T_2$, and $T_3$ can all commit under RA isolation. Thus, fractured reads prevention is

similar to No-Depend-Misses but only applies to immediate read dependencies (rather

than all transitive dependencies).

$$
\begin{aligned}
T_1 &: w(x_1);\ w(y_1) \\
T_2 &: r(y_1);\ w(z_2) \\
T_3 &: r(x_\perp);\ r(z_2)
\end{aligned}
\tag{4}
$$

History (4) is invalid under a stronger isolation model that prevents missing depen-

dencies phenomena, such as standard semantics for Snapshot Isolation (notably, not

Parallel Snapshot Isolation [Sovran et al. 2011]) and Repeatable Read isolation. Under

these models, the system would abort either $T_3$ or all of $T_1$, $T_2$, and $T_3$.

This behavior is particularly important and underlies the promoted use cases that we

discuss in Sections 3.2 and 3.4: writes that should be read together should be written

together.

We further discuss the benefits and enforcements of transitivity in Section 6.3.

Predicates. Thus far, we have not extensively discussed the use of predicate-based

reads. As Adya notes [Adya 1999] and we described earlier, predicate-based isolation

guarantees can be cast as an extension of item-based isolation guarantees (see also

Adya’s PL-2L, which closely resembles RA). RA isolation is no exception to this rule.

Relating to Additional Guarantees. RA isolation subsumes several other useful

guarantees. RA prohibits Item-Many-Preceders and Observed Transaction Vanishes

phenomena; RA also guarantees Item Cut Isolation, and with predicate support, RA

subsumes Predicate Cut Isolation [Bailis et al. 2014b]. Thus, it is a combination of

Monotonic Atomic View and Item Cut Isolation. For completeness, we reproduce these

definitions next.

Definition 3.23 (Item-Many-Preceders (IMP)). A history H exhibits phenomenon

IMP if DSG(H) contains a transaction $T_i$ such that $T_i$ directly item-read-depends by x

on more than one other transaction.


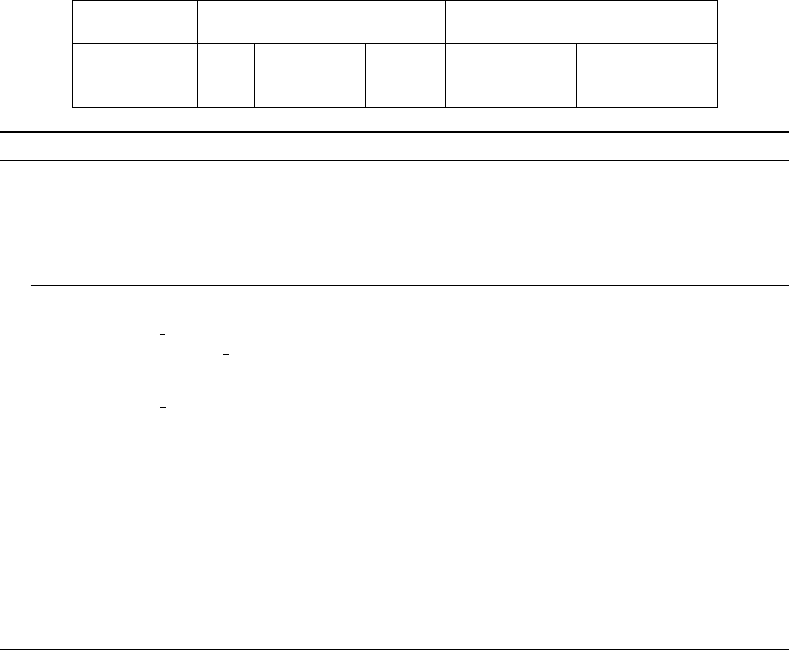

Fig. 1. Comparison of RA with isolation levels from Adya [1999] and Bailis et al. [2014a]. RU: Read Uncom-

mitted, RC: Read Committed, CS: Cursor Stability, MAV: Monotonic Atomic View, ICI: Item Cut Isolation,

PCI: Predicate Cut Isolation, RA: Read Atomic, SI: Snapshot Isolation, RR: Repeatable Read (Adya PL-2.99),

S: Serializable.

Informally, IMP occurs if a transaction observes multiple versions of the same item

(e.g., transaction $T_i$ reads $x_1$ and $x_2$).

Definition 3.24 (Item Cut Isolation (I-CI)). A system that provides Item Cut Isolation

prohibits the phenomenon IMP.

Definition 3.25 (Predicate-Many-Preceders (PMP)). A history H exhibits the phenomenon PMP if, for all predicate-based reads $r_i(P_i : \mathit{Vset}(P_i))$ and $r_j(P_j : \mathit{Vset}(P_j))$ in $T_k$ such that the logical ranges of $P_i$ and $P_j$ overlap (call it $P_o$), the set of transactions that change the matches of $P_o$ for $r_i$ and $r_j$ differ.

Informally, PMP occurs if a transaction observes different versions resulting from

the same predicate read (e.g., transaction $T_i$ reads $\mathit{Vset}(P_i) = \emptyset$ and $\mathit{Vset}(P_i) = \{x_1\}$).

Definition 3.26 (Monotonic Atomic View (MAV)). A system that provides Monotonic

Atomic View isolation prohibits the phenomenon OTV in addition to providing Read

Committed isolation.

Summary. Figure 1 relates RA isolation to several existing models. RA is stronger than

Read Committed, Monotonic Atomic View, and Cut Isolation; weaker than Snapshot

Isolation, Repeatable Read, and Serializability; and incomparable to Cursor Stability.

3.4. RA and Serializability

When we began this work, we started by examining the use cases outlined in Section 2

and derived a weak isolation guarantee that would be sufficient to ensure their correct

execution. For general-purpose read-write transactions, RA isolation may indeed lead

to nonserializable (and possibly incorrect) database states and transaction outcomes.

Yet, as Section 3.2 hints, there appears to be a broader “natural” pattern for which

RA isolation appears to provide an intuitive (even “correct”) semantics. In this section,

we show that for transactions with a particular property of their item read and item

write sets, RA is, in fact, serializable. We define this property, called the Read-Subset-

Items-Written (RSIW) property, prove that transactions obeying the RSIW property

lead to serializable outcomes, and discuss the implications of the RSIW property for

the applications outlined in Section 2.

Because our system model operates on multiple versions, we must make a small

refinement to our use of the term “serializability”—namely, we draw a distinction

between serial and one-copy serializable schedules [Bernstein et al. 1987]. First, we

say that two histories $H_1$ and $H_2$ are view equivalent if they contain the same set of


committed transactions and have the same operations and $\mathit{DSG}(H_1)$ and $\mathit{DSG}(H_2)$ have the same direct read dependencies. For brevity, and for consistency with prior work, we say that $T_i$ reads from $T_j$ if $T_i$ directly read-depends on $T_j$. We say that a

transaction is read-only if it does not contain write operations and that a transaction is

write-only if it does not contain read operations. In this section, we concern ourselves

with one-copy serializability [Bernstein et al. 1987], which we define using the previous

definition of view equivalence.

Definition 3.27 (One-Copy Serializability). A history is one-copy serializable if it is

view equivalent to a serial execution of the transactions over a single logical copy of

the database.

The basic intuition behind the RSIW property is straightforward: under RA isolation,

if application developers use a transaction to bundle a set of writes that should be

observed together, any readers of the items that were written will, in fact, behave

“properly”—or one-copy serializably. That is, for read-only and write-only transactions,

if each reading transaction only reads a subset of the items that another write-only

transaction wrote, then RA isolation is equivalent to one-copy serializable isolation.

Before proving that this behavior is one-copy serializable, we characterize this condition

more precisely:

Definition 3.28 (RSIW). A read-only transaction $T_r$ exhibits the RSIW property if, whenever $T_r$ reads a version produced by a write-only transaction $T_w$, $T_r$ only reads items written by $T_w$.

For example, consider the following History (5):

$$
\begin{aligned}
T_1 &: w(x_1);\ w(y_1) \\
T_2 &: r(x_1);\ r(y_1) \\
T_3 &: r(x_1);\ r(z_\perp)
\end{aligned}
\tag{5}
$$

Under History (5), $T_2$ exhibits the RSIW property because it reads a version produced by transaction $T_1$ and its item read set ($\{x, y\}$) is a subset of $T_1$’s item write set ($\{x, y\}$). However, $T_3$ does not exhibit the RSIW property because (i) $T_3$ reads from $T_1$ but $T_3$’s read set ($\{x, z\}$) is not a subset of $T_1$’s write set ($\{x, y\}$) and (ii) perhaps more subtly, $T_3$ reads from both $T_1$ and $T_\perp$.

We say that a history H containing read-only and write-only transactions exhibits

the RSIW property (or has RSIW) if every read-only transaction in H exhibits the

RSIW property.
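Because RSIW is a syntactic condition on read and write sets, it can be checked mechanically. The sketch below is one possible encoding, applied to History (5). Versions are integers with 0 standing in for ⊥, reads of ⊥ are treated as reads from $T_\perp$ (which is assumed to have written every item), and the names `has_rsiw` and `history_has_rsiw` are hypothetical.

```python
BOTTOM = 0  # stands in for ⊥; T⊥ is assumed to have written every item

def has_rsiw(reads, write_sets):
    """reads: {item: version} for one read-only transaction.
    write_sets: {version: set of items} for each write-only transaction."""
    read_set = set(reads)
    for version in reads.values():
        if version == BOTTOM:
            continue
        # Having read from this writer, the reader may only read its items.
        if not read_set <= write_sets[version]:
            return False
    return True

def history_has_rsiw(readers, write_sets):
    """A history has RSIW if every read-only transaction in it does."""
    return all(has_rsiw(reads, write_sets) for reads in readers.values())

write_sets = {1: {"x", "y"}}           # T1: w(x1); w(y1)
t2 = {"x": 1, "y": 1}                  # T2: r(x1); r(y1)
t3 = {"x": 1, "z": BOTTOM}             # T3: r(x1); r(z⊥)
```

Here `has_rsiw(t2, write_sets)` holds while `has_rsiw(t3, write_sets)` does not, so a history containing both readers does not have RSIW.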

This brings us to our main result in this section:

THEOREM 3.29. If a history H containing read-only and write-only transactions has

RSIW and is valid under RA isolation, then H is one-copy serializable.

The proof of Theorem 3.29 is by construction: given a history H that has RSIW and is valid

under RA isolation, we describe how to derive an equivalent one-copy serial execution

of the transactions in H. We begin with the construction procedure, provide examples

of how to apply the procedure, then prove that the procedure converts RSIW histories

to their one-copy serial equivalents. We include the actual proof in Appendix A.

Utility. Theorem 3.29 is helpful because it provides a simple syntactic condition for

understanding when RA will provide one-copy serializable access. For example, we can

apply this theorem to our use cases from Section 2. In the case of multientity update

and read, if clients issue read-only and write-only transactions that obey the RSIW

property, their result sets will be one-copy serializable. The RSIW property holds for


equality-based lookup of single records from an index (e.g., fetch from the index and

subsequently fetch the corresponding base tuple, each of which was written in the same

transaction or was autogenerated upon insertion of the tuple into the base relation).

However, the RSIW property does not hold in the event of multituple reads, leading to

less intuitive behavior. Specifically, if two different clients trigger two separate updates

to an index entry, some clients may observe one update but not the other, and other

clients may observe the opposite behavior. In this case, the RAMP protocols still provide

a snapshot view of the database according to the index(es)—that is, clients will never

observe base data that is inconsistent with the index entries—but nevertheless surface

nonserializable database states. For example, if two transactions each update items x

and y to value 2, then readers of the index entry for 2 may observe x = 2 and y = 2

but not both at the same time; this is because two transactions updating two separate

items do not have RSIW. Finally, for more general materialized view accesses, point

queries and bulk insertions may also have RSIW.

As discussed in Section 2, in the case of indexes and views, it is helpful to view

each physical data structure (e.g., a CRDT [Shapiro et al. 2011] used to represent

an index entry) as a collection of versions. The RSIW property applies only if clients

make modifications to the entire collection at once (e.g., as in a DELETE CASCADE opera-

tion); otherwise, a client may read from multiple transaction item write sets, violating

RSIW.

Coupled with an appropriate algorithm ensuring RA isolation, we can ensure one-

copy serializable isolation. This addresses a long-standing concern with our work: why

is RA somehow “natural” for these use cases (but not necessarily all use cases)? We

have encountered applications that do not require one-copy serializable access—such

as the mailbox unread message maintenance from Section 2 and, in some cases, index

maintenance for non-read-modify-write workloads—and therefore may safely violate

RSIW but believe it is a handy principle (or, at the least, rule of thumb) for reasoning

about applications of RA isolation and the RAMP protocols.

Finally, the RSIW property is only a sufficient condition for one-copy serializable

behavior under RA isolation. There are several alternative sufficient conditions to

consider. As a natural extension, while RSIW only pertains to pairs of read-only and

write-only transactions, one might consider allowing readers to observe multiple write

transactions. For example, consider the following history:

$$
\begin{aligned}
T_1 &: w(x_1);\ w(y_1) \\
T_2 &: w(u_2);\ w(z_2) \\
T_3 &: r(x_1);\ r(z_2)
\end{aligned}
\tag{6}
$$

History (6) is valid under RA and is also one-copy serializable but does not satisfy RSIW:

$T_3$ reads from two transactions’ write sets. However, consider the following history:

$$
\begin{aligned}
T_1 &: w(x_1);\ w(y_1) \\
T_2 &: w(u_2);\ w(z_2) \\
T_3 &: r(x_1);\ r(z_\perp) \\
T_4 &: r(x_\perp);\ r(z_2)
\end{aligned}
\tag{7}
$$

History (7) is valid under RA, consists only of read-only and write-only transactions,

yet is no longer one-copy serializable. $T_3$ observes a prefix beginning with $T_\perp; T_1$ while $T_4$ observes a prefix beginning with $T_\perp; T_2$.
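For histories this small, the contrast between Histories (6) and (7) can be confirmed by brute force: try every serial order and check that, on a single logical copy, each read returns the version it returned in the history. The following sketch does so under the simplifying assumptions that versions are integers (0 for ⊥) and that a history is a dictionary of per-transaction operation lists; `serial_order` is a hypothetical helper and is only practical for a handful of transactions.

```python
from itertools import permutations

BOTTOM = 0  # version number standing in for ⊥ (assumption of this sketch)

def serial_order(history):
    """history: {txn: [(op, item, version), ...]} with op in {"r", "w"}.
    Returns a serial order in which every read observes the most recent
    preceding write to its item (or ⊥), or None if no such order exists."""
    for order in permutations(history):
        latest = {}        # single logical copy: item -> current version
        consistent = True
        for txn in order:
            for op, item, version in history[txn]:
                if op == "w":
                    latest[item] = version
                elif latest.get(item, BOTTOM) != version:
                    consistent = False
                    break
            if not consistent:
                break
        if consistent:
            return order
    return None

history_6 = {
    "T1": [("w", "x", 1), ("w", "y", 1)],
    "T2": [("w", "u", 2), ("w", "z", 2)],
    "T3": [("r", "x", 1), ("r", "z", 2)],
}
history_7 = {
    "T1": [("w", "x", 1), ("w", "y", 1)],
    "T2": [("w", "u", 2), ("w", "z", 2)],
    "T3": [("r", "x", 1), ("r", "z", BOTTOM)],
    "T4": [("r", "x", BOTTOM), ("r", "z", 2)],
}
```

History (6) admits a serial order, whereas History (7) does not: $T_3$ forces $T_1$ before $T_2$ and $T_4$ forces $T_2$ before $T_1$.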

Thus, while there may indeed be useful criteria beyond the RSIW property that

we might consider as a basis for one-copy serializable execution under RA, we have

observed RSIW to be the most intuitive and useful thus far. One clear criterion is to


search for schedules or restrictions under RA with an acyclic DSG (from Appendix A).

The reason why RSIW is so simple for read-only and write-only transactions is that

each read-only transaction only reads from one other transaction and does not induce

any additional antidependencies. Combining reads and writes complicates reasoning

about the acyclicity of the graph.

This exercise touches upon an important lesson in the design and use of weakly

isolated systems: by restricting the set of operations accessible to a user (e.g., RSIW

read-only and write-only transactions), one can often achieve more scalable implemen-

tations (e.g., using weaker semantics) without necessarily violating existing abstrac-

tions (e.g., one-copy serializable isolation). While prior work often focuses on restricting

only operations (e.g., to read-only or write-only transactions [Lloyd et al. 2013; Bailis

et al. 2014c; Agrawal and Krishnaswamy 1991], or stored procedures [Thomson et al.

2012; Kallman et al. 2008; Jones et al. 2010], or single-site transactions [Baker et al.

2011]) or only semantics (e.g., weak isolation guarantees [Bailis et al. 2014a; Lloyd

et al. 2013; Bailis et al. 2012]), we see considerable promise in better understanding

the intersection between and combinations of the two. This is often subtle and almost

always challenging, but the results—as we found here—may be surprising.

3.5. System Model and Scalability

We consider databases that are partitioned, with the set of items in the database spread

over multiple servers. Each item has a single logical copy, stored on a server—called

the item’s partition—whose identity can be calculated using the item. Clients forward

operations on each item to the item’s partition, where they are executed. Transaction

execution terminates in commit, signaling success, or abort, signaling failure. In our

examples, all data items have the null value (⊥) at database initialization. We do not

model replication of data items within a partition; this can happen at a lower level of

the system than our discussion (see Section 4.6) as long as operations on each item are

linearizable [Attiya and Welch 2004].
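The system model only requires that an item's partition be computable from the item itself. As one hedged possibility (hash partitioning is our assumption here, not something this section prescribes), a client could map items to partitions as follows; `partition_for` is a hypothetical helper.

```python
import hashlib

def partition_for(item, num_partitions):
    """Map an item key to a partition index in [0, num_partitions).

    A cryptographic digest is used instead of Python's built-in hash()
    because hash() of a str is randomized per process, and every client
    must compute the same partition for the same item.
    """
    digest = hashlib.sha256(item.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions

# Every client that runs this computation routes operations on "x" to the
# same partition, without consulting any other server.
owner_of_x = partition_for("x", 4)
```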

Scalability Criteria. As we hinted in Section 1, large-scale deployments often eschew

transactional functionality on the premise that it would be too expensive or unstable

in the presence of failure and degraded operating modes [Birman et al. 2009; Bronson

et al. 2013; Chang et al. 2006; Cooper et al. 2008; DeCandia et al. 2007; Helland 2007;

Hull 2013; Qiao et al. 2013; Weil 2011]. Our goal in this article is to provide robust and

scalable transactional functionality, and so we first define criteria for “scalability”:

Coordination-free execution ensures that one client’s transactions cannot cause another

client’s to block and that, if a client can contact the partition responsible for each item

in its transaction, the transaction will eventually commit (or abort of its own volition).

This prevents one transaction from causing another to abort—which is particularly

important in the presence of partial failures—and guarantees that each client is able

to make useful progress. In the absence of failures, this maximizes useful concurrency.

In the literature, coordination-free execution for replicated transactions is also called

transactional availability [Bailis et al. 2014a]. Note that “strong” isolation models like

serializability and Snapshot Isolation require coordination and thus limit scalability.

Locking is an example of a non-coordination-free implementation mechanism.

Many applications can limit their data accesses to a single partition via explicit

data modeling [Das et al. 2010; Qiao et al. 2013; Baker et al. 2011; Helland 2007] or

planning [Curino et al. 2010; Pavlo et al. 2012]. However, this is not always possible. In

the case of secondary indexing, there is a cost associated with requiring single-partition

updates (scatter-gather reads), while in social networks like Facebook and large-scale

hierarchical access patterns as in Rainbird [Weil 2011], perfect partitioning of data

accesses is near impossible. Accordingly:


Partition independence ensures that, in order to execute a transaction, a client only con-

tacts partitions for data items that its transaction directly accesses. Thus, a partition

failure only affects transactions that access items contained on the partition. This also

reduces load on servers not directly involved in a transaction’s execution. In the litera-

ture, partition independence for replicated data is also called replica availability [Bailis

et al. 2014a] or genuine partial replication [Schiper et al. 2010]. Using a centralized

validator or scheduler for transactions is an example of a non-partition-independent

implementation mechanism.

In addition to the previous requirements, we limit the metadata overhead of algo-

rithms. There are many potential solutions for providing atomic visibility that rely on

storing prohibitive amounts of state. We will attempt to minimize the metadata—that

is, data that the transaction did not itself write but which is required for correct execu-

tion. Our algorithms will provide constant-factor metadata overhead (RAMP-S, RAMP-H)

or else overhead linear in transaction size (but independent of data size; RAMP-F). As an

example of a solution using prohibitive amounts of metadata, each transaction could

send copies of all of its writes to every partition it accesses so that readers observe all of

its writes by reading a single item. This provides RA isolation but requires considerable

storage. Other solutions may require extra data storage proportional to the number of

servers in the cluster or, worse, the database size (Section 7).

4. RAMP TRANSACTION ALGORITHMS

Given specifications for RA isolation and scalability, we present algorithms for achiev-

ing both. For ease of understanding, we first focus on providing read-only and write-only

transactions with a “last writer wins” overwrite policy, then subsequently discuss how

to perform read/write transactions. Our focus in this section is on intuition and un-

derstanding; we defer all correctness and scalability proofs to Appendix B, providing

salient details inline.

At a high level, RAMP transactions allow reads and writes to proceed concurrently.

This provides excellent performance but, in turn, introduces a race condition: one

transaction might only read a subset of another transaction’s writes, violating RA (i.e.,

fractured reads might occur). Instead of preventing this race (hampering scalability),

RAMP readers autonomously detect the race (using metadata attached to each data

item) and fetch any missing, in-flight writes from their respective partitions. To make

sure that readers never have to block waiting for writes to arrive at a partition, writers

use a two-phase (atomic commitment) protocol that ensures that once a write is visible

to readers on one partition, any other writes in the transaction are present on and, if

appropriately identified by version, readable from their respective partitions.

In this section, we present three algorithms that provide a trade-off between the

amount of metadata required and the expected number of extra reads to fetch missing

writes. As discussed in Section 2, whereas techniques like distributed locking couple mutual

exclusion with atomic visibility of writes, RAMP transactions correctly control visibility

but allow concurrent and scalable execution.

4.1. RAMP-Fast

To begin, we present a RAMP algorithm that, in the race-free case, requires one RTT

for reads and two RTTs for writes, called RAMP-Fast (abbreviated RAMP-F; Algorithm 1).

RAMP-F stores metadata in the form of write sets (overhead linear in transaction size).

Overview. Each write in RAMP-F (lines 14–21) contains a timestamp (line 15) that

uniquely identifies the writing transaction as well as a set of items written in the

transaction (line 16). For now, combining a unique client ID and client-local sequence

number is sufficient for timestamp generation (see also Section 4.5).
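A minimal sketch of such a timestamp generator follows. Representing a timestamp as a `(sequence, client_id)` tuple, and therefore ordering timestamps by sequence number first and client ID second, is an assumption of this example; the text only requires that timestamps be unique. The class name is hypothetical.

```python
import itertools

class TimestampGenerator:
    """Produces timestamps that are unique across clients without any
    coordination, assuming client IDs are themselves unique."""

    def __init__(self, client_id):
        self.client_id = client_id
        self._sequence = itertools.count(1)  # client-local sequence number

    def next_timestamp(self):
        # Tuples compare lexicographically, which gives a total order.
        return (next(self._sequence), self.client_id)

client_a = TimestampGenerator(client_id=1)
client_b = TimestampGenerator(client_id=2)
stamps = [client_a.next_timestamp(),
          client_b.next_timestamp(),
          client_a.next_timestamp()]
```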


RAMP-F write transactions proceed in two phases: a first round of communication

places each timestamped write on its respective partition. In this PREPARE phase, each partition adds the write to its local database (versions, lines 1, 17–19). A second round of communication (lines 20–21) marks versions as committed. In this COMMIT phase, each partition updates an index containing the highest-timestamped committed version of

each item (lastCommit, lines 2, 6–8).

RAMP-F read transactions begin by first fetching the last (highest-timestamped) com-

mitted version for each item from its respective partition (lines 23–30). Using the

results from this first round of reads, each reader can calculate whether it is “missing”

any versions (that is, versions that were prepared but not yet committed on their par-

titions). The reader calculates a mapping from each item i to the highest-timestamped

version of i that appears in the metadata of any version (of i or of any other item) in

the first-round read set (lines 26–29). If the reader has read a version of an item that

has a lower timestamp than indicated in the mapping for that item, the reader issues a

second read to fetch the missing version (by timestamp) from its partition (lines 30–32).

Once all missing versions are fetched (which can be done in parallel), the client can

return the resulting set of versions—the first-round reads, with any missing versions

replaced by the optional, second round of reads.

By Example. Consider the RAMP-F execution depicted in Figure 2. $T_1$ writes to both x and y, performing the two-round write protocol on two partitions, $P_x$ and $P_y$. However, $T_2$ reads from x and y while $T_1$ is concurrently writing. Specifically, $T_2$ reads from $P_x$ after $P_x$ has committed $T_1$’s write to x, but $T_2$ reads from $P_y$ before $P_y$ has committed $T_1$’s write to y. Therefore, $T_2$’s first-round reads return $x = x_1$ and $y = \emptyset$, and returning this set of reads would violate RA. Using the metadata attached to its first-round reads, $T_2$ determines that it is missing $y_1$ (since $v_{latest}[y] = 1$ and $1 > \emptyset$) and so $T_2$ subsequently issues a second read from $P_y$ to fetch $y_1$ by version. After completing its second-round read, $T_2$ can safely return its result set. $T_1$’s progress is unaffected by $T_2$, and $T_1$ subsequently completes by committing $y_1$ on $P_y$.
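The write and read protocols described above, together with the race in this example, can be sketched as a single-process Python simulation. This is an illustration written from the prose description, not a transcription of Algorithm 1: method calls stand in for RPCs, timestamps are plain integers with 0 for the initial ⊥ version, one item lives on each partition, and all class and function names are assumptions.

```python
BOTTOM_TS = 0  # timestamp of the initial ⊥ version (assumption of this sketch)

class Partition:
    def __init__(self):
        self.versions = {}     # (item, ts) -> (value, metadata)
        self.last_commit = {}  # item -> highest committed timestamp

    def prepare(self, item, ts, value, metadata):
        self.versions[item, ts] = (value, metadata)

    def commit(self, ts):
        for item, version_ts in list(self.versions):
            if version_ts == ts:
                current = self.last_commit.get(item, BOTTOM_TS)
                self.last_commit[item] = max(current, ts)

    def get(self, item, ts=None):
        if ts is None:  # first round: highest-timestamped committed version
            ts = self.last_commit.get(item, BOTTOM_TS)
        value, metadata = self.versions.get((item, ts), (None, frozenset()))
        return ts, value, metadata

def put_all(partitions, writes, ts):
    """partitions: {item: Partition}; writes: {item: value}."""
    items = frozenset(writes)
    for item, value in writes.items():  # PREPARE round
        partitions[item].prepare(item, ts, value, items - {item})
    for item in writes:                 # COMMIT round
        partitions[item].commit(ts)

def get_all(partitions, items):
    first = {item: partitions[item].get(item) for item in items}
    latest = {}  # item -> highest timestamp named in any returned metadata
    for ts, _, metadata in first.values():
        for sibling in metadata:
            latest[sibling] = max(latest.get(sibling, BOTTOM_TS), ts)
    result = {}
    for item, (ts, value, _) in first.items():
        if latest.get(item, BOTTOM_TS) > ts:  # a sibling write was missed
            ts, value, _ = partitions[item].get(item, latest[item])
        result[item] = (ts, value)
    return result

# The race from Figure 2: T1 (timestamp 1) has prepared on both partitions
# but has committed only on P_x when T2 reads.
p_x, p_y = Partition(), Partition()
partitions = {"x": p_x, "y": p_y}
p_x.prepare("x", 1, "x1", frozenset({"y"}))
p_y.prepare("y", 1, "y1", frozenset({"x"}))
p_x.commit(1)
first_round_y = p_y.get("y")                # still the ⊥ version
result = get_all(partitions, ["x", "y"])    # second round repairs y
p_y.commit(1)                               # T1 finishes independently
```

In this simulation the first-round read of y alone returns the ⊥ version, while `get_all` returns both of $T_1$'s writes because the metadata on $x_1$ names y as a sibling.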

Why it Works. RAMP-F writers use metadata as a record of intent: a reader can detect

if it has raced with an in-progress commit round and use the metadata stored by

the writer to fetch the missing data. Accordingly, RAMP-F readers only issue a second

round of reads in the event that they read from a partially committed write transaction

(where some but not all partitions have committed a write). In this event, readers will

fetch the appropriate writes from the not-yet-committed partitions. Most importantly,

RAMP-F readers never have to stall waiting for a write that has not yet arrived at a

partition: the two-round RAMP-F write protocol guarantees that, if a partition commits a

write, all of the corresponding writes in the transaction are present on their respective

partitions (though possibly not committed locally). As long as a reader can identify

the corresponding version by timestamp, the reader can fetch the version from the

respective partition’s set of pending writes without waiting. To enable this, RAMP-F

writes contain metadata linear in the size of the writing transaction’s write set (plus a

timestamp per write).
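The guarantee that readers rely on (once any partition commits a write, every sibling write is already present on its partition) follows from finishing all PREPARE calls before issuing any COMMIT. A minimal sketch, assuming a hypothetical in-memory `Partition` class, a sequential loop in place of the parallel rounds, and an illustrative `siblings_present` checker that is not part of the protocol:

```python
class Partition:
    def __init__(self):
        self.versions = {}     # (item, ts) -> (value, metadata)
        self.last_commit = {}  # item -> highest committed timestamp

    def prepare(self, item, value, ts, md):
        self.versions[(item, ts)] = (value, md)

    def commit(self, ts):
        for item, v_ts in self.versions:
            if v_ts == ts:
                self.last_commit[item] = max(self.last_commit.get(item, -1), ts)


def put_all(partitions, writes, ts, after_each_commit=lambda: None):
    items = frozenset(writes)
    # Round 1: place every write on its partition before committing anything.
    for item, value in writes.items():
        partitions[item].prepare(item, value, ts, items - {item})
    # Round 2: commit on each distinct partition that holds one of the writes.
    servers = []
    for item in writes:
        if partitions[item] not in servers:
            servers.append(partitions[item])
    for server in servers:
        server.commit(ts)
        after_each_commit()


def siblings_present(partitions, ts):
    """True if every committed write at ts has all sibling writes prepared."""
    for p in partitions.values():
        for (item, v_ts), (_, md) in p.versions.items():
            committed = v_ts == ts and p.last_commit.get(item, -1) >= ts
            if committed and any((s, ts) not in partitions[s].versions for s in md):
                return False
    return True


parts = {"x": Partition(), "y": Partition()}
checks = []
put_all(parts, {"x": "x1", "y": "y1"}, ts=1,
        after_each_commit=lambda: checks.append(siblings_present(parts, 1)))
print(checks)  # [True, True]
```

The check holds after each individual commit, including the intermediate state in which only one of the two partitions has committed.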

RAMP-F requires two RTTs for writes: one for PREPARE and one for COMMIT. For reads,

RAMP-F requires one RTT in the absence of concurrent writes and two RTTs otherwise.

RAMP timestamps are only used to identify specific versions and in ordering concurrent

writes to the same item; RAMP-F transactions do not require a “global” timestamp

authority. For example, if lastCommit[i] = 2, there is no requirement that a transaction

with timestamp 1 has committed or even that such a transaction exists.
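One way to obtain unique, ordered timestamps without a global authority is for each client to combine a local sequence number with its own identifier. The sketch below assumes that scheme; the `TimestampGenerator` class, the 16-bit client field, and the bit layout are hypothetical choices made for illustration.

```python
import itertools


class TimestampGenerator:
    """Unique, totally ordered timestamps with no coordination between clients."""

    def __init__(self, client_id: int, client_bits: int = 16) -> None:
        if not 0 <= client_id < (1 << client_bits):
            raise ValueError("client_id does not fit in client_bits")
        self._client_id = client_id
        self._client_bits = client_bits
        self._seq = itertools.count(1)  # client-local sequence number

    def next(self) -> int:
        # High bits: local sequence number; low bits: unique client ID.
        return (next(self._seq) << self._client_bits) | self._client_id


a, b = TimestampGenerator(client_id=1), TimestampGenerator(client_id=2)
stamps = [a.next(), b.next(), a.next()]
print(stamps)  # [65537, 65538, 131073]
```

Timestamps produced this way are unique but not dense, which is compatible with the observation above that a committed timestamp of 2 implies nothing about a transaction with timestamp 1.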

4.2. RAMP-Small: Trading Metadata for RTTs

While RAMP-F requires metadata size linear in write set size but provides best-case one

RTT for reads, RAMP-Small (RAMP-S) uses constant metadata but always requires two


Fig. 2. Space-time diagram for RAMP-F execution for two transactions T_1 and T_2 performed by clients C_1 and C_2 on partitions P_x and P_y. Lightly shaded boxes represent current partition state (lastCommit and versions), while the single darker box encapsulates all messages exchanged during C_2’s execution of transaction T_2. Because T_1 overlaps with T_2, T_2 must perform a second round of reads to repair the fractured read between x and y. T_1’s writes are assigned timestamp 1. We depict a compressed version of metadata, where each item does not appear in its list of writes (e.g., P_x sees {y} only and not {x, y}).

RTT for reads (Algorithm 2). RAMP-S and RAMP-F writes are identical, but instead of

attaching the entire write set to each write, RAMP-S writers only store the transaction

timestamp (line 7). Unlike RAMP-F, RAMP-S readers issue a first round of reads to fetch

the highest committed timestamp for each item from its respective partition (lines 3,

9–11). Then the readers send the entire set of timestamps they received to the partitions

in a second round of communication (lines 13–14). For each item in the read request,

RAMP-S servers return the highest-timestamped version of the item that also appears

in the supplied set of timestamps (lines 5–6). Readers subsequently return the results

from the mandatory second round of requests.

By Example. In Figure 3, under RAMP-S, P_x and P_y, respectively, return the sets {1} and {∅} in response to T_2’s first round of reads. T_2 would subsequently send the set {1, ∅} to both P_x and P_y, which would return x_1 and y_1.
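The two mandatory read rounds of RAMP-S can be sketched as below, replaying the scenario from this example. This is a simplified illustration under stated assumptions: the `Partition` class and the method names `get_timestamp` and `get_by_set` are hypothetical, calls are sequential rather than parallel, and -1 stands in for the empty timestamp.

```python
NO_TS = -1  # assumed stand-in for the empty timestamp


class Partition:
    def __init__(self):
        self.versions = {}     # item -> {timestamp: value}, prepared or committed
        self.last_commit = {}  # item -> highest committed timestamp

    def prepare(self, item, value, ts):
        self.versions.setdefault(item, {})[ts] = value

    def commit(self, ts):
        for item, by_ts in self.versions.items():
            if ts in by_ts:
                self.last_commit[item] = max(self.last_commit.get(item, NO_TS), ts)

    def get_timestamp(self, item):
        # First round: only the highest committed timestamp is returned.
        return self.last_commit.get(item, NO_TS)

    def get_by_set(self, item, ts_set):
        # Second round: highest-timestamped version whose timestamp is in ts_set.
        candidates = [ts for ts in self.versions.get(item, {}) if ts in ts_set]
        if not candidates:
            return None
        ts = max(candidates)
        return ts, self.versions[item][ts]


def get_all(partitions, items):
    ts_set = {partitions[i].get_timestamp(i) for i in items}      # round 1
    return {i: partitions[i].get_by_set(i, ts_set) for i in items}  # round 2


px, py = Partition(), Partition()
parts = {"x": px, "y": py}
px.prepare("x", "x1", 1)
py.prepare("y", "y1", 1)
px.commit(1)  # T_1 has committed on P_x but not yet on P_y
print(get_all(parts, ["x", "y"]))  # {'x': (1, 'x1'), 'y': (1, 'y1')}
```

Here the partition, not the reader, picks the version: P_y finds timestamp 1 in the supplied set and serves its prepared write y_1.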

Why it Works. In RAMP-S, if a transaction has committed on some but not all partitions,

the transaction timestamp will be returned in the first round of any concurrent read


ALGORITHM 1: RAMP-Fast

Server-side Data Structures
 1: versions: set of versions ⟨item, value, timestamp ts_v, metadata md⟩
 2: lastCommit[i]: last committed timestamp for item i

Server-side Methods
 3: procedure PREPARE(v : version)
 4:     versions.add(v)
 5:     return
 6: procedure COMMIT(ts_c : timestamp)
 7:     I_ts ← {w.item | w ∈ versions ∧ w.ts_v = ts_c}
 8:     ∀i ∈ I_ts, lastCommit[i] ← max(lastCommit[i], ts_c)
 9: procedure GET(i : item, ts_req : timestamp)
10:     if ts_req = ∅ then
11:         return v ∈ versions : v.item = i ∧ v.ts_v = lastCommit[item]
12:     else
13:         return v ∈ versions : v.item = i ∧ v.ts_v = ts_req

Client-side Methods
14: procedure PUT_ALL(W : set of ⟨item, value⟩)
15:     ts_tx ← generate new timestamp
16:     I_tx ← set of items in W
17:     parallel-for ⟨i, v⟩ ∈ W
18:         w ← ⟨item = i, value = v, ts_v = ts_tx, md = (I_tx − {i})⟩
19:         invoke PREPARE(w) on respective server (i.e., partition)
20:     parallel-for server s : s contains an item in W
21:         invoke COMMIT(ts_tx) on s
22: procedure GET_ALL(I : set of items)
23:     ret ← {}
24:     parallel-for i ∈ I
25:         ret[i] ← GET(i, ∅)
26:     v_latest ← {} (default value: −1)
27:     for response r ∈ ret do
28:         for i_tx ∈ r.md do
29:             v_latest[i_tx] ← max(v_latest[i_tx], r.ts_v)
30:     parallel-for item i ∈ I
31:         if v_latest[i] > ret[i].ts_v then
32:             ret[i] ← GET(i, v_latest[i])
33:     return ret

transaction accessing the committed partitions’ items. In the (required) second round

of read requests, any prepared-but-not-committed partitions will find the committed

timestamp in the reader-provided set and return the appropriate version. In contrast

with RAMP-F, where readers explicitly provide partitions with a specific version to return

in the (optional) second round, RAMP-S readers defer the decision of which version

to return to the partition, which uses the reader-provided set to decide. This saves

metadata but increases RTTs, and the size of the parameters of each second-round GET

request is (worst-case) linear in the read set size. Unlike RAMP-F, there is no requirement

to return the value of the last committed version in the first round (returning the

version, lastCommit[i], suffices in line 3).


Fig. 3. Space-time diagram for RAMP-S execution for two transactions T_1 and T_2 performed by clients C_1 and C_2 on partitions P_x and P_y. Lightly shaded boxes represent current partition state (lastCommit and versions), while the single darker box encapsulates all messages exchanged during C_2’s execution of transaction T_2. T_2 first fetches the highest committed timestamp from each partition, then fetches the corresponding version. In this depiction, partitions only return timestamps instead of actual versions in response to first-round reads.

4.3. RAMP-Hybrid: An Intermediate Solution

RAMP-Hybrid (RAMP-H; Algorithm 3) strikes a compromise between RAMP-F and RAMP-S.

RAMP-H and RAMP-S write protocols are identical, but instead of storing the entire write

set (as in RAMP-F), RAMP-H writers store a Bloom filter [Bloom 1970] representing the

transaction write set (line 1). RAMP-H readers proceed as in RAMP-F, with a first round

of communication to fetch the last-committed version of each item from its partition