All in One: Design, Verification, and Implementation of SNOW-Optimal Read Atomic Transactions

SI LIU, ETH Zürich, Switzerland

Distributed read atomic transactions are important building blocks of modern cloud databases that magnificently bridge the gap between data availability and strong data consistency. The performance of their transactional reads is particularly critical to the overall system performance as many real-world database workloads are dominated by reads. Following the SNOW design principle for optimal reads, we develop LORA, a novel SNOW-optimal algorithm for distributed read atomic transactions. LORA completes its reads in exactly one round trip, even in the presence of conflicting writes, without imposing additional communication overhead, and outperforms the state-of-the-art read atomic algorithms.

To guide LORA’s development, we present a rewriting-logic-based framework and toolkit for design, verification, implementation, and evaluation of distributed databases. Within the framework, we formalize LORA and mathematically prove its data consistency guarantees. We also apply automatic model checking and statistical verification to validate our proofs and to estimate LORA’s performance. We additionally generate from the formal model a correct-by-construction distributed implementation for testing and performance evaluation under realistic deployments. Our design-level and implementation-based experimental results are consistent, and together they demonstrate LORA’s promising data consistency and performance achievement.

CCS Concepts: • Software and its engineering → Formal methods; Development frameworks and environments; • Information systems → Distributed database transactions; Database performance evaluation.

Additional Key Words and Phrases: model checking, statistical verification, data consistency, the SNOW theorem

1 INTRODUCTION

Modern web applications are typically layered on top of a high-performance cloud database running in a partitioned, geo-replicated environment for system scalability, data availability, and fault tolerance. As network partitions/failures (P) are unavoidable in general, a cloud database design must sacrifice either strong data consistency (C) or high data availability (A) according to the CAP theorem [17]. To balance the trade-off between C and A, there is therefore a plethora of data consistency models for distributed database systems, from weak models such as read committed through various forms of snapshot isolation to the strongest guarantee, strict serializability.

Read atomicity (RA) [10], under which either all or none of a transaction’s updates are visible to another transaction’s reads, magnificently bridges the gap between C and A in a distributed setting by providing the strongest data consistency that is achievable with high availability (the HAT semantics [9]). Many industrial and academic distributed databases have therefore integrated read atomic transactions as important building blocks [4, 10, 21, 48, 53, 75]. To cite a few examples, RAMP-TAO [21] has recently layered RA on Facebook’s TAO data store [18] to provide atomically visible and highly available transactions. The ROLA transaction system [48] implements a new, stronger data consistency model, update atomicity, by extending RA with mechanisms for preventing the lost update phenomenon.

Many real-world database workloads are dominated by reads, e.g., in Facebook’s TAO [18], LinkedIn’s Espresso [67], and Google’s Spanner [24], and thus the performance of transactional reads is critical to the overall system performance. Cloud database designers have dedicated tremendous efforts to specific algorithms for transactional reads [10, 24, 25, 52, 53, 59], such as the efficient RAMP (Read Atomic Multi-Partition) algorithms [10].

Author’s address: Si Liu, [email protected], ETH Zürich, Switzerland.

Manuscript submitted to ACM 1


The SNOW theorem [54] is an impossibility result for transactional reads: it proves that no read can provide strict serializability (S) with non-blocking client-server communication (N) that completes in exactly one round trip and with only one returned version of the data (O) in a database system with concurrent transactional writes (W). The SNOW theorem is a powerful lens for determining whether transactional reads are optimal: a distributed transaction algorithm designed for data consistency weaker than strict serializability, e.g., read atomicity, must achieve the N+O+W properties to be SNOW-optimal. Nonetheless, the state-of-the-art read atomic algorithms are all SNOW-suboptimal (a more detailed overview of the suboptimality is given in Section 3.1): even the “fastest” algorithm RAMP-Fast [10] requires 1.5 round trips on average to complete its reads, thus violating the O property; one conjectured optimization for RAMP-Fast attempts to improve the overall database performance by sacrificing the read your writes (RYW) session guarantee [77] (a prevalent building block of many databases such as Facebook’s TAO), yet it still incurs two round-trip times for reads in the presence of racing writes.

Our LORA Design.

Challenges for deriving SNOW-optimal read atomic transactions are: (i) improving the already well-designed, efficient read atomic algorithms such as RAMP-Fast; (ii) incurring no extra cost on transactional writes; and (iii) sacrificing no established consistency guarantee such as RYW. In particular, challenge (ii) may be more difficult: previous work [54] on deriving optimal transactional reads from the causally consistent data store COPS has been shown to provide suboptimal system performance [29]. The fundamental issue is that, to optimize the reads, the new design imposes additional processing costs on writes, which in turn reduce the overall throughput.

We present LORA, the first SNOW-optimal read atomic transaction algorithm. LORA’s key idea is to compute, for each transactional read, an RA-consistent snapshot of the entire database, represented by the timestamps of the requested data items. The read can then retrieve the corresponding RA-consistent version, indicated by the computed timestamp, in exactly one round trip. To do so, LORA uses a novel client-side data structure that maintains the view of the last seen timestamp for each data item, together with the associated sibling items updated by the same transaction. An RA-consistent snapshot can therefore be computed by comparing a data item’s last seen timestamp and those timestamps for which it is a sibling item: returning the highest timestamp always guarantees RA.

LORA also employs the two-phase commit protocol as in existing read atomic algorithms to guarantee the atomicity of a transaction’s updates. Unlike those algorithms, LORA decouples the two phases by allowing a transaction to commit after the first phase to improve the performance of transactional writes. To avoid losing RYW as in the above conjectured optimization, LORA incorporates the committed writes into the view of the last seen timestamps in between the two phases for the subsequent RA-consistent snapshot computations. For the client-server communications in both phases, LORA imposes no more overhead than RAMP-Fast in terms of message payload for writes since the same metadata are piggybacked via each message.

In addition to optimizing read-only and write-only transactions (where transactional operations are only reads or

writes), LORA also extends existing read atomic algorithms with the support for fully functional read-write transactions

with mixed SNOW-optimal reads and RYW-assured one-phase writes.

From Design to Implementation.

Developing correct and high-performance distributed database systems is hard given the complex transactional read/write dynamics, especially in partitioned, geo-replicated environments. In particular, improving existing systems by touching a large code base often requires intensive manual effort, has a high risk of introducing new bugs, and is not repeatable. In practice, very few optimizations can be explored in this way: in deriving SNOW-optimal algorithms, only one out of ten design candidates has been implemented and evaluated [54].


[Figure 1: flow diagram. Components shown: LORA Maude specification; workload generator (workload parameters, e.g., %reads; initial states); extended CAT model checker (consistency property; MC result); probabilistic model transformation (probabilistic LORA specification, with monitor); SMChecker PVeStA (SMC parameters and quantitative property; SMC result); distributed implementation generation (LORA implementation, with monitor); deploying (deployment parameters); evaluation and testing (result); monitoring; mathematical proof (theorems).]

Fig. 1. The overview of our methodology and toolkit: The green, red, blue, and yellow parts refer to mathematical proofs of LORA’s correctness, model checking of its consistency properties, SMC of its consistency/performance properties, and its implementation-based testing and evaluation under realistic deployment, respectively. The purple part is the parametric workload generator producing initial state(s) for the last three analyses. The gray part refers to the monitoring mechanism used to extract transaction measures. The solid boxes are mechanisms or tools. The inputs are sketched. The intermediate artifacts are dashed. The outputs are gradient.

Formal methods have been advocated and applied by both academia [6] and industry (e.g., Amazon Web Services [62] and Microsoft Azure [58]) to develop and verify cloud databases, particularly at an early design stage to establish both qualitative correctness (such as data consistency) and quantitative guarantees about the system performance (such as latency). To cite a few examples, Chapar [42] has been used to extract implementations of distributed key-value stores from their Coq-verified formal specifications; TLA+ and its model checker have been employed by Amazon for design-level verification; Microsoft Research has adopted the IronFleet framework [35] for verifying both system designs and implementations.

Our Methodology and Main Contributions.

We use a combination of rewriting-logic-based techniques to guide the development of LORA (summarized in Fig. 1). Our methodology tackles all of the following challenges that existing formal development methods fail to address (see Section 9 for the detailed related work): (i) the complexity and heterogeneity of cloud database systems require a flexible and expressive formal framework [62]; (ii) complex data consistency properties necessitate rigorous and automatic verification methods [62]; (iii) design-level quantitative assessments are equally important for cloud computing systems [6]; (iv) the passage from a verified design to a correct implementation is a necessity towards a production-ready system [76, 86]; and (v) the semantic gaps between different models (e.g., for correctness checking and for statistical verification) and between models and implementations must be bridged to obtain faithful analysis results [48, 51].


Regarding (i), we rely on rewriting logic’s capability of modeling object-based distributed systems [57] and formalize our LORA design in the Maude language [22]. Such systems exhibit intrinsic features such as unbounded data structures and dynamic message creation that are quite hard or impossible to represent in other formalisms (e.g., finite automata). To address (ii) we prove by induction that the formal model of LORA satisfies its claimed consistency properties RA and RYW. We also subject the model to the (extended) CAT tool [49], the state-of-the-art model checker for distributed transaction systems, for automatic model checking analysis of data consistency.

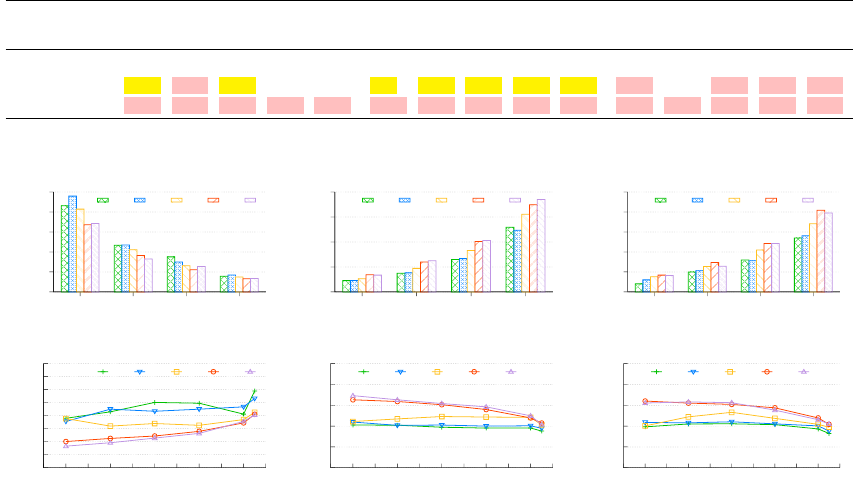

For challenge (iii), we quantitatively analyze LORA’s transaction latency and throughput, as well as various consistency properties, via statistical model checking (SMC) [69, 88], which has demonstrated its predictive power in terms of system performance in an early design phase [44, 48]. Concretely, we first transform the nondeterministic, untimed Maude model of LORA (for the above qualitative analyses) into a purely probabilistic, timed model. We then add a monitoring mechanism to the transformed model that automatically extracts transaction measures during system executions. Finally, we formalize a number of data consistency and performance properties over such measures and apply Maude-based SMC with PVeStA [5] to statistically verify/measure LORA against the state-of-the-art read atomic algorithms. Our SMC results demonstrate LORA’s promising performance and consistency achievement.

In addition to the above design-level formal analyses, we also perform implementation-level testing and evaluation under realistic deployments, both to validate our claims from the qualitative analyses and to confirm our model-based SMC predictions. In particular, we tackle challenge (iv) by using the D transformation [51] to generate a correct-by-construction distributed Maude implementation of LORA from its formal model. Such an implementation is further enriched by the monitoring mechanism for collecting runtime information before the automated deployment.

We bridge the semantic gaps by carrying out all the above formal efforts within the same framework and with a single artifact. To the best of our knowledge, this is the first demonstration that, within the same semantic framework, a new distributed transaction algorithm can be formally designed, rigorously verified, automatically analyzed for both qualitative and quantitative properties, correctly implemented, and evaluated under realistic deployments.

For fully automated analysis of extensive consistency properties and of system performance with both SMC and

implementation-based evaluation, we provide additional contributions (also shown in Fig. 1) to the Maude ecosystem in

the course of developing LORA: a workload generator for producing realistic database workloads; the extended CAT

model checker for analyzing the RYW session guarantee; a monitoring mechanism for extracting transaction measures

from both simulations and actual system runs; a library of performance and consistency metrics for quantitative

analysis; and an automated tool for deploying distributed implementations in a cluster.

The remainder of this paper proceeds as follows: Section 2 gives preliminaries on distributed transactions, data consistency guarantees, and the SNOW theorem. Section 3 examines the suboptimality of existing read atomic algorithms and presents our LORA design. Section 4 gives an overview of the Maude ecosystem. Section 5 presents our formal specification of LORA in Maude, followed by the mathematical proofs in Section 6 and the automatic analysis in Section 7. Section 8 discusses the limitations and improvements to our methodology and toolkit. Finally, Section 9 discusses related work and Section 10 ends the paper with some concluding remarks and future work.

2 DISTRIBUTED TRANSACTIONS AND THE SNOW THEOREM

2.1 Distributed Transactions and Data Consistency

Today’s web applications are layered atop a partitioned, geo-replicated distributed database. With data partitioning, very large amounts of data are divided into multiple smaller parts stored across multiple servers (or partitions) for scalability. Replicating data in multiple distant physical sites improves data availability and system fault tolerance. A user application request is submitted to the database as a transaction that consists of a sequence of read and/or write operations¹ on data items (or keys) stored across multiple database partitions. Each transactional write creates a version of a data item, typically identified by a unique timestamp. A distributed transaction that enforces the ACID properties (Atomicity, Consistency, Isolation, and Durability) [13] terminates as either a committed transaction, signaling success, or an aborted one, signaling failure.

[Figure 2: hierarchy diagram over the consistency properties Read Uncommitted, Read Committed, Read Atomicity, Update Atomicity, Transactional Causality, Snapshot Isolation, Serializability, Strict Serializability, and Read Your Writes.]

Fig. 2. Hierarchy of data consistency properties: A → B indicates that consistency property A is strictly weaker than consistency property B. Transactional and non-transactional consistency properties are colored in green and yellow, respectively.

Since network failures are unavoidable, a distributed database design must sacrifice either strong consistency or availability (the CAP theorem [17]). Providing strong data consistency guarantees for distributed transactions typically incurs high latency, e.g., a database system for read atomicity is expected to outperform an update atomic transaction system. There is therefore a spectrum of consistency models over partitioned replicated data for various web applications. Fig. 2 shows eight prevalent transactional consistency properties (in green) ranging from weak consistency such as read uncommitted to strong consistency like strict serializability:

• Read uncommitted (RU) [12] provides the weakest data consistency guarantee, where one transaction may see not-yet-committed changes made by other transactions.

• Read committed (RC) [12], used as the default data consistency model by almost all SQL databases, disallows a transaction from seeing any uncommitted or aborted data.

• Read atomicity (RA) [10] guarantees that either all or none of a distributed transaction’s updates are visible to another transaction. Take a social networking application as an example: if Thor and Hulk become “friends” in one transaction, then other transactions should not see that Thor is a friend of Hulk but that Hulk is not a friend of Thor; either both relationships are visible or neither is.

• Update atomicity (UA) [20, 48] strengthens read atomicity (see above) by preventing lost updates (a transaction’s update to a data item is lost as it is overwritten by another transaction’s update).

• Transactional causality (TC) [4, 53] ensures that a transaction reads from a snapshot of the data store that includes the effects of all transactions that causally precede it (causal consistency) and that either all of a transaction’s updates are made visible simultaneously or none are (read atomicity).

• Snapshot isolation (SI) [12] requires a multi-partition transaction to read from a snapshot of a distributed data store that reflects a single commit order of transactions across sites, even if they are independent of each other.

• Serializability (SER) [66] ensures that the execution of concurrent transactions is equivalent to one where the transactions are run one at a time.

¹ A transaction that consists of only read, resp. write, operations is called a read-only, resp. write-only, transaction; otherwise, it is a read-write transaction with mixed read and write operations. Transactional reads refer to the read operations in both read-only and read-write transactions.


• Strict serializability (SSER) is the strongest consistency property that strengthens SER by enforcing the serial order to follow real time, thus providing the most aggressive data freshness (reads returning the latest writes).

In particular, we recall here RA’s definition in [10]:

“A system provides read atomic isolation if it prevents fractured reads anomalies and also prevents

transactions from reading uncommitted, aborted, or intermediate data.”

where (version i of data item x is denoted as x_i)

“A transaction T_j exhibits fractured reads if transaction T_i writes versions x_m and y_n (in any order, with x possibly but not necessarily equal to y), T_j reads version x_m and version y_k, and k < n.”

Note that timestamps are typically taken from a totally ordered set, thus inducing a total order on versions of each data item (e.g., k < n) and a partial order across versions of different data items.
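The quoted definition of fractured reads can be turned into a small executable check. The following is a minimal sketch, not part of the paper's toolkit: the names `WriteTxn` and `has_fractured_reads` are hypothetical, versions are identified by integer timestamps, and a read set is assumed to be a map from key to the timestamp that was read.

```python
from typing import Dict, List, NamedTuple


class WriteTxn(NamedTuple):
    """Hypothetical record of a writer T_i: the version it wrote for each key."""
    writes: Dict[str, int]  # key -> timestamp of the version written


def has_fractured_reads(reads: Dict[str, int], writers: List[WriteTxn]) -> bool:
    """Return True if the read set (key -> timestamp read) is fractured.

    Mirrors the quoted definition: the reader T_j read x_m written by T_i,
    T_i also wrote y_n, and T_j read y_k with k < n.
    """
    for txn in writers:
        for x, m in txn.writes.items():
            if reads.get(x) != m:
                continue  # T_j did not read this transaction's version of x
            for y, n in txn.writes.items():
                if y in reads and reads[y] < n:
                    return True  # T_j saw x_m but an older version of y
    return False


# T_1 writes x_1 and y_1; a reader that sees x_1 together with y_0 is fractured.
t1 = WriteTxn({"x": 1, "y": 1})
fractured = has_fractured_reads({"x": 1, "y": 0}, [t1])
consistent = has_fractured_reads({"x": 1, "y": 1}, [t1])
```

Reading both old versions (x_0 and y_0) is not fractured under this definition, which is why a snapshot that lags behind the latest writes can still be RA-consistent.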

Another useful class of data consistency guarantees refers to the client-centric ordering within a session that depicts a context persisting between transactions. For example, on a social networking website, all of a user’s requests, submitted as transactions between login and logout, typically form a session. Terry et al. [77] proposed four session guarantees: read your writes (RYW), monotonic reads (MR), writes follow reads (WFR), and monotonic writes (MW). In this paper we consider RYW (colored in yellow in Fig. 2), the most prevalent session guarantee advocated by many industrial and academic database applications such as Facebook’s TAO [18] and the RAMP transactions [10]. RYW guarantees that the effects of all writes performed in a (client) session are visible to its subsequent reads.
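As an illustration of RYW, the sketch below scans one session's operations in issue order and flags a read that returns a version older than the session's own earlier write to the same key. This is only one possible reading of "visible", assumed here for illustration (a read sees a write if it returns a version with an equal or higher timestamp); the operation encoding and the name `violates_ryw` are hypothetical.

```python
from typing import Dict, List, Tuple

# One session operation: ("w" or "r", key, timestamp written or read).
Op = Tuple[str, str, int]


def violates_ryw(session: List[Op]) -> bool:
    """Return True if some read misses an earlier write of the same session."""
    last_written: Dict[str, int] = {}
    for kind, key, ts in session:
        if kind == "w":
            # Remember the newest version this session has written for the key.
            last_written[key] = max(ts, last_written.get(key, ts))
        elif key in last_written and ts < last_written[key]:
            return True  # the read returned a version older than our own write
    return False


stale = violates_ryw([("w", "x", 1), ("r", "x", 0)])
fresh = violates_ryw([("w", "x", 1), ("r", "x", 1)])
```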

2.2 SNOW-Optimality and Latency-Optimality

Many web applications today exhibit read-dominated database workloads [18, 24, 67]. Improving the performance of transactional reads has therefore become a key requirement for modern distributed databases. Lu et al. [54] propose the SNOW theorem as a design principle for optimal transactional reads. The theorem states that it is impossible for a distributed transaction algorithm to achieve all four desirable properties (see Section 2.1 for strict serializability (S)):

• Non-blocking reads (N) require that each server should process reads without blocking for any external event. Such operations are desirable as they can save the time that would be spent on blocking.

• One response per read (O) consists of two sub-properties: one version per read and one round-trip to each server. The one version sub-property requires that servers send only one value for each read as multiple versions would consume more time in data serialization/deserialization and transmission. The one round-trip sub-property requires the client to send at most one request to each server and the server to send at most one response back.

• Write transactions that conflict (W) requires that transactional reads that view data can coexist with conflicting transactions that are concurrently updating that data.

Definition 2.1 (SNOW-Optimality [54]). A distributed transaction algorithm is SNOW-optimal if its properties sit on the boundary of the SNOW theorem, i.e., achieving three out of the four SNOW properties.

Among the four properties, N+O particularly favor the performance of reads as non-blocking and one response lead to

low read latency.

Definition 2.2 (Latency-Optimality [54]). A distributed transaction algorithm is latency-optimal if its reads are non-blocking (N) and it provides one response per read (O).


For a distributed transaction algorithm that is designed for data consistency weaker than strict serializability (e.g., read atomicity that we target in this paper), the most it can achieve is the N+O+W properties, i.e., SNOW-optimality. Such an algorithm is also latency-optimal (the N+O properties).²
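Definitions 2.1 and 2.2 amount to simple set checks over the four SNOW properties. The following sketch encodes them; the function names and the `ALGORITHMS` table are illustrative, and the property sets are taken from how the paper itself classifies these algorithms (LORA: N+O+W; RAMP-Fast: N and W but not O; RIFL: S+N+W; COPS-SNOW: latency-optimal but incompatible with write transactions).

```python
from typing import Dict, FrozenSet

SNOW: FrozenSet[str] = frozenset("SNOW")


def is_snow_optimal(props: FrozenSet[str]) -> bool:
    """Definition 2.1: three out of the four SNOW properties (all four is impossible)."""
    return len(props & SNOW) == 3


def is_latency_optimal(props: FrozenSet[str]) -> bool:
    """Definition 2.2: non-blocking reads (N) and one response per read (O)."""
    return {"N", "O"} <= props


# Property sets as described in the text; the table itself is illustrative.
ALGORITHMS: Dict[str, FrozenSet[str]] = {
    "LORA": frozenset("NOW"),
    "RAMP-Fast": frozenset("NW"),
    "RIFL": frozenset("SNW"),
    "COPS-SNOW": frozenset("NO"),
}

summary = {
    name: (is_snow_optimal(p), is_latency_optimal(p))
    for name, p in ALGORITHMS.items()
}
```

The table makes the independence of the two notions visible: RIFL is SNOW-optimal without being latency-optimal, while COPS-SNOW is the reverse.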

3 THE LORA TRANSACTION ALGORITHM

In this section we first examine the suboptimality of existing read atomic designs, in particular the “fastest” algorithm RAMP-Fast (Section 3.1), and then present our SNOW-optimal read atomic transaction algorithm LORA (Section 3.2).

Assumptions.

We make the same assumptions for LORA as in existing read atomic algorithms [10]: (i) a database is partitioned and each partition stores part of the entire database items (or keys); (ii) each key has a single logical copy, i.e., no data replication; (iii) we consider multiversion concurrency control [13] that allows reads to access not-yet-committed data; (iv) a timestamp uniquely identifies a version of a key; and (v) a transaction execution, initiated by a client (or coordinator), terminates in either commit or abort.

3.1 Suboptimality of Existing Read Atomic Algorithms

Three read atomic algorithms, RAMP-Fast (or RF), RAMP-Small (or RS), and RAMP-Hybrid (or RH), are presented in [10], offering different trade-offs between the size of message payload and the system performance in terms of the number of round trips required by a read to fetch the RA-consistent data. Two RAMP optimizations, Faster Commit (FC) and One-Phase Writes (1PW), are also conjectured by the original developers to reduce the overhead of processing reads and writes, respectively. Recently, the RAMP-TAO [21] protocol layers RAMP transactions on top of Facebook’s TAO data store, providing replicated read atomic transactions.

None of these algorithms and conjectures are SNOW-optimal or latency-optimal. More specifically, RF needs 1.5 round trips on average to complete its reads (two round trips with conflicting writes and one round trip otherwise) and thus violates the O property; RS always requires two round trips for reads; RH may require two round trips even with no race between reads and writes; the FC design conjecture can only reduce the possibility of second-round reads in RF, thus not guaranteeing one round-trip reads in the presence of racing writes; the 1PW conjecture only optimizes transactional writes by sacrificing the read your writes session guarantee (see Appendix B for an example scenario and Section 7.3.2 for its formal analysis). RAMP-TAO inherits the suboptimality from the RAMP transaction algorithms.

In the following we recall the “fastest” algorithm RF (on which our LORA algorithm is built) and illustrate its

suboptimality using a scenario of two concurrently executing transactions.

RAMP-Fast.

To guarantee that all database partitions perform a transaction successfully or that none do, RF performs two-phase writes by using the two-phase commit protocol. The first phase initiates a prepare operation of a version ⟨key, value, ts, md⟩ on the partition storing key, where ts is the timestamp uniquely identifying this version and md is the metadata containing all other (sibling) keys updated in the same transaction. The second phase commits the transaction (via the commit operations) if all involved partitions agree to (indicated by all prepared responses), where each partition updates an index containing the latest committed version of each key.

Regarding transactional reads, for each key RF first requests the highest-timestamped committed version stored on the partition (via a get operation). By examining the returned timestamps and metadata, if RF finds a key in the metadata that has a higher timestamp ts than the returned timestamp, a second-round read (via get) is issued to request the ts-stamped version. Once all such “missing” versions have been fetched, RF commits the transaction.

² In general, a SNOW-optimal algorithm is not necessarily latency-optimal, e.g., RIFL [40] satisfies the S+N+W properties; a latency-optimal algorithm may be SNOW-suboptimal, e.g., COPS-SNOW [54] that provides causal consistency is incompatible with write transactions.

The pseudo-code of RF is shown in Appendix A where only read-only and write-only transactions (operations in a

transaction are only reads, resp. writes) are considered.

Example 3.1 (Fig. 3-(a)). Consider a scenario of two conflicting transactions that read and update the same data items initialized as x_0 and y_0. Transaction T_1 performs two-phase writes on two partitions P_x and P_y that respectively store keys x and y. The concurrently executing transaction T_2 reads from P_x after P_x has committed T_1’s write to x, but from P_y before P_y has committed T_1’s write to y. Hence, T_2’s first-round reads fetch the versions x_1 and y_0 (version i of key k denoted as k_i), the fractured reads that violate read atomicity. Using the metadata attached to its first-round reads, T_2 finds that y_1 is missing (last[y] = 1 and 1 > 0), thus issuing a second-round read to fetch y_1 (indicated by the requested timestamp ts_req). The resulting versions x_1 and y_1 are therefore RA-consistent. T_2 is compatible with T_1, which can subsequently commit (y_1 on P_y).

In this example RF satisfies the N property (P_x and P_y return the requested versions without blocking), the W property (T_2’s compatibility with T_1), and read atomicity, but not the O property (due to the second-round read to P_y). Hence, RF is neither SNOW-optimal nor latency-optimal.
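The scenario of Example 3.1 can be replayed with a small in-memory simulation. This is a simplified sketch of RF's two-round read as described above, not the pseudo-code of Appendix A: the `Partition` class, the function `ramp_fast_get_all`, and the value naming (`"x1"` for x_1) are assumptions made for illustration, and each partition is assumed to store a single key.

```python
from typing import Dict, Iterable, List, Optional, Set, Tuple

Version = Tuple[str, int, Set[str]]  # (value, timestamp ts, sibling keys md)


class Partition:
    """Hypothetical single-key partition holding prepared and committed versions."""

    def __init__(self, key: str, siblings: Iterable[str] = ()) -> None:
        self.key = key
        self.versions: Dict[int, Version] = {0: (f"{key}0", 0, set(siblings))}
        self.latest = 0  # timestamp of the latest committed version

    def prepare(self, ts: int, md: Iterable[str]) -> None:
        self.versions[ts] = (f"{self.key}{ts}", ts, set(md))

    def commit(self, ts: int) -> None:
        self.latest = max(self.latest, ts)

    def get(self, ts_req: Optional[int] = None) -> Version:
        # Without a requested timestamp, return the latest committed version.
        return self.versions[self.latest if ts_req is None else ts_req]


def ramp_fast_get_all(parts: Dict[str, Partition],
                      keys: List[str]) -> Tuple[Dict[str, str], int]:
    """Return the values read and the number of round trips used."""
    first = {k: parts[k].get() for k in keys}  # round 1
    last = {k: first[k][1] for k in keys}
    for _value, ts, md in first.values():
        for sibling in md & set(keys):
            last[sibling] = max(last[sibling], ts)
    result = dict(first)
    missing = [k for k in keys if last[k] > first[k][1]]
    for k in missing:  # round 2, issued only for "missing" versions
        result[k] = parts[k].get(ts_req=last[k])
    return {k: v[0] for k, v in result.items()}, (2 if missing else 1)


def example_3_1() -> Tuple[Dict[str, str], int]:
    parts = {"x": Partition("x", {"y"}), "y": Partition("y", {"x"})}
    parts["x"].prepare(1, {"y"})
    parts["y"].prepare(1, {"x"})
    parts["x"].commit(1)  # P_x has committed T_1's write; P_y has not yet
    return ramp_fast_get_all(parts, ["x", "y"])
```

In this sketch `example_3_1()` needs two round trips to return x_1 and y_1, whereas the same read issued after both partitions have committed completes in one, matching the two cases behind RF's average of 1.5 round trips.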

3.2 The LORA Design

We present LORA, a SNOW-optimal read atomic transaction algorithm,³ that optimizes and extends RF in three aspects: (i) providing one round-trip reads with read atomicity, (ii) committing writes in one phase with read your writes, and (iii) supporting read-write transactions. Algorithm 1 shows the pseudo-code of LORA with these major differences colored.

Regarding (i), LORA computes an RA-consistent snapshot of the database for each transactional read before it is issued. Such a snapshot is indicated by the timestamps whose associated versions are subsequently returned by the database partitions. Hence, with a single round trip, transactional reads can fetch RA-consistent versions.

More specifically, the coordinator (or client) maintains a data structure last[k] that maps each key k to its last seen timestamp ts and the associated metadata md, called sibling keys⁴ (line 1, Algorithm 1). LORA invokes the get_all method to request timestamped RA-consistent versions in parallel (lines 2-8): requesting k’s version with the maximum of ts and all those timestamps for which k is a sibling key always prevents fractured reads (see Section 2.1), thus guaranteeing RA. Each partition invokes the get method to return the requested timestamped value, together with the last committed timestamp for the requested key and the associated metadata used to update the coordinator’s local view of last[k] (lines 26-29).
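The snapshot computation described above can be sketched as follows. The names `LastSeen`, `ra_timestamp`, and `update_view` are hypothetical, and the update rule (overwrite the entry only when the reported last committed timestamp is higher) is an assumption made for illustration rather than a transcription of Algorithm 1.

```python
from typing import Dict, FrozenSet, Iterable, NamedTuple


class LastSeen(NamedTuple):
    ts: int              # last seen timestamp for the key
    md: FrozenSet[str]   # sibling keys written together with that version


def ra_timestamp(last: Dict[str, LastSeen], k: str) -> int:
    """Timestamp to request for k: the maximum of last[k].ts and the
    timestamps of all entries whose metadata lists k as a sibling key."""
    return max([last[k].ts] + [e.ts for e in last.values() if k in e.md])


def update_view(last: Dict[str, LastSeen], k: str,
                ts_ls: int, md: Iterable[str]) -> None:
    """Fold a partition's response into the coordinator's view.
    Assumption: only a higher last committed timestamp replaces the entry."""
    if ts_ls > last[k].ts:
        last[k] = LastSeen(ts_ls, frozenset(md))


# Coordinator view before T_2's reads: both keys last seen at timestamp 0.
view = {"x": LastSeen(0, frozenset({"y"})), "y": LastSeen(0, frozenset({"x"}))}
first_request = {k: ra_timestamp(view, k) for k in ("x", "y")}

# P_x reports last committed timestamp 1 for x; P_y still reports 0 for y.
update_view(view, "x", 1, {"y"})
update_view(view, "y", 0, {"x"})
next_request = {k: ra_timestamp(view, k) for k in ("x", "y")}
```

With the initial view both keys are requested at timestamp 0, so the read returns x_0 and y_0 in one round trip. After the responses are folded in, a later read requests timestamp 1 for both keys, because y is a sibling key in the entry last seen for x.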

Regarding (ii), LORA also employs the two-phase commit protocol as in RAMP-Fast to guarantee the atomicity of a transaction’s updates. The put_all method uses a first round of communication to place each timestamped write on its respective partition (lines 31-33) and a second round of communication to mark versions as committed (lines 34-36). Unlike RAMP-Fast, LORA decouples these two phases by allowing the coordinator to return after issuing the prepare round (lines 12-15) and to subsequently execute the commit phase asynchronously (lines 17-19). The coordinator updates the local view of last[k] with its own writes in between the two phases (line 16) to ensure the read your writes

³ Following the SNOW design principle, we do not strengthen the data consistency to strict serializability to achieve SNOW-optimality (S+N+W in this case) since doing so would change the base system into something new.

⁴ Key x is called a sibling key of key y if both keys are written in the same transaction. For example, given transaction T_i : [w(x_i), w(y_i), w(z_i)], the metadata associated to key x is the set of sibling keys {y, z}.


[Figure 3: two message sequence diagrams between clients C_1 and C_2 and partitions P_x and P_y, with T_1 : [w(x_1), w(y_1)] issued by C_1 and T_2 : [r(x), r(y)] issued by C_2. (a) RAMP-Fast Scenario: T_2's first-round GETs (ts_req = null) return x_1 with md = {y} and y_0 with md = {x}; a second-round GET for y with ts_req = 1 returns y_1, and T_2 commits with rs = {x_1, y_1}. (b) LORA scenario: T_2's GETs with ts_ra = 0 return x_0 (ts_ls = 1, md = {y}) and y_0 (ts_ls = 0, md = {x}); T_2 commits with rs = {x_0, y_0} and C_2's view becomes last[x] = ⟨1, {y}⟩, last[y] = ⟨0, {x}⟩.]

Fig. 3. Scenarios illustrating how LORA works differently from RAMP-Fast with conflicting reads and writes. The major differences in handling reads, resp. writes, are indicated by the shaded boxes.


Algorithm 1 LORA

Coordinator-side Data Structures
 1: last[k]: last seen ⟨timestamp ts, metadata md⟩ for key k    ▷ only last seen timestamp for each key in RF

Coordinator-side Methods
 2: procedure get_all(K : set of keys)    ▷ one round-trip read-only transactions
 3:     read set rs ← ∅
 4:     parallel-for key k ∈ K do
 5:         for key k′ : k ∈ last[k′].md do ts_ra ← max(last[k].ts, last[k′].ts)    ▷ RA-consistent snapshot
 6:         rs[k], last[k] ← get(k, ts_ra)    ▷ update local view
 7:     end parallel-for
 8:     return rs
 9: procedure put_all(W : set of ⟨key, value⟩)    ▷ one-phase write-only transactions
10:     ts_x ← generate new timestamp
11:     K_x ← {w.key | w ∈ W}
12:     parallel-for ⟨k, val⟩ ∈ W do
13:         v ← ⟨k, val, ts_x, K_x − {k}⟩
14:         send prepare(v) to partition storing k
15:     end parallel-for
16:     for key k ∈ K_x do last[k] ← ⟨ts_x, K_x − {k}⟩    ▷ incorporate own writes
17:     parallel-for key k ∈ K_x do
18:         send commit(ts_x) to partition storing k
19:     end parallel-for
20: procedure update(K : set of keys, U : set of updates)    ▷ read-write transactions
21:     rs ← get_all(K)
22:     write set ws ← ∅
23:     for key k ∈ K do ws[k] ← u_k(rs[k]) : u_k ∈ U
24:     invoke put_all(ws)

Partition-side Data Structures
25: versions: set of versions ⟨key, value, timestamp ts, metadata md⟩
26: latest[k]: last committed timestamp for key k

Partition-side Methods
27: procedure get(k : key, ts_ra : timestamp)
28:     val ← v.value : v ∈ versions ∧ v.key = k ∧ v.ts = ts_ra
29:     met ← w.md : w ∈ versions ∧ w.key = k ∧ w.ts = latest[k]
30:     return ⟨k, val, latest[k], met⟩    ▷ return latest committed timestamp rather than val’s timestamp as in RF
31: procedure prepare(v : version)
32:     versions.add(v)
33:     return
34: procedure commit(ts_c : timestamp)
35:     K_ts ← {v.key | v ∈ versions ∧ v.ts_v = ts_c}
36:     for k ∈ K_ts do latest[k] ← max(latest[k], ts_c)


session guarantee: when the coordinator computes RA-consistent snapshots for transactional reads, previous writes

have already been incorporated in the snapshots.
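The decoupling of the two write phases can be illustrated with a minimal Python sketch of put_all (lines 9-19) and the partition-side prepare and commit methods (lines 31-36). It is not the paper's Maude model: integer timestamps, the `placement` routing table, the `clock` counter, and returning the commit messages to the caller instead of sending them are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Partition:
    """Partition-side state from Algorithm 1 (lines 25-26)."""
    versions: dict = field(default_factory=dict)  # (key, ts) -> (value, sibling keys)
    latest: dict = field(default_factory=dict)    # key -> last committed timestamp

    def prepare(self, key, value, ts, md):
        # Lines 31-33: store the version; it is not yet marked as committed.
        self.versions[(key, ts)] = (value, frozenset(md))

    def commit(self, ts):
        # Lines 34-36: advance latest[k] for every key with a version at ts.
        for key, version_ts in self.versions:
            if version_ts == ts:
                self.latest[key] = max(self.latest.get(key, 0), ts)


class Coordinator:
    def __init__(self, placement):
        self.placement = placement  # key -> Partition (hypothetical routing table)
        self.last = {}              # key -> (timestamp, sibling keys), line 1
        self.clock = 0              # stand-in for "generate new timestamp"

    def put_all(self, writes):
        """Lines 9-19; returns the commit messages instead of sending them."""
        self.clock += 1
        ts, keys = self.clock, set(writes)
        for key, value in writes.items():          # prepare round, lines 12-15
            self.placement[key].prepare(key, value, ts, keys - {key})
        for key in keys:                           # line 16: incorporate own writes
            self.last[key] = (ts, frozenset(keys - {key}))
        # Lines 17-19 are deferred: the caller decides when each commit arrives.
        return [(self.placement[key], ts) for key in sorted(keys)]


px, py = Partition(), Partition()
c1 = Coordinator({"x": px, "y": py})
pending = c1.put_all({"x": "x1", "y": "y1"})
seen_before_commit = (c1.last["x"], px.latest.get("x", 0))
for partition, ts in pending:
    partition.commit(ts)
```

In this sketch the coordinator's view already holds timestamp 1 for `x` while the partition still reports 0 as the last committed timestamp, which is the window in which line 16 matters for read your writes.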

Unlike RAMP-Fast, which considers only read-only and write-only transactions, LORA supports read-write transactions with mixed reads and writes. LORA starts a read-write transaction with the update method (lines 20-24). It first invokes the get_all method for transactional reads to retrieve the values of the keys the coordinator wants to update (stored then in the read set), as well as the corresponding last seen timestamps and metadata. LORA then updates the write set accordingly and proceeds with the put_all method for transactional writes.

It is worth mentioning that LORA imposes no more overhead than RAMP-Fast in terms of message payload for both reads (i.e., one version returned per read) and writes (i.e., metadata containing sibling keys). Naively returning very stale versions, e.g., the initial versions, may satisfy RA, but users prefer recent data [33, 79]. LORA improves data freshness by constantly updating the coordinator’s view of latest committed versions during the processing of both reads (line 30) and writes (line 16); any subsequent RA-consistent snapshot is therefore able to incorporate such versions. See Section 7.4.5 for our statistical analysis of data freshness in LORA.

By Example. The following example illustrates how LORA works, focusing on its one round-trip, read atomic reads. We use the same scenario as in Example 3.1 to emphasize the difference from RAMP-Fast.

Example 3.2 (Fig. 3-(b)). Assume that both coordinators initially have the initial views of the data items $x$ and $y$, i.e., last[x] = ⟨0, {y}⟩ and last[y] = ⟨0, {x}⟩. A write-only transaction $T_1 : [w(x_1), w(y_1)]$, issued by coordinator $C_1$, is attempting writes to both keys, while a read-only transaction $T_2 : [r(x), r(y)]$, issued by coordinator $C_2$, proceeds while $T_1$ is writing. Analogously, $T_2$ reads from $P_x$ after $P_x$ has committed $T_1$’s write to $x$ (indicated by latest[x] = 1), while it reads from $P_y$ before $P_y$ has committed $T_1$’s write to $y$ (latest[y] = 0). With LORA, $T_2$ now requires only one round trip to return the RA-consistent versions $x_0$ and $y_0$, which have been specified by timestamp $ts_{ra} = 0$ in both reads to $P_x$ and $P_y$, respectively. Timestamp $ts_{ra} = 0$ is computed based on $C_2$’s local view of last seen timestamps of $x$ and $y$ (e.g., for $x$, max(last[x].ts, last[y].ts) = max(0, 0) = 0). $T_2$ is compatible with $T_1$ that now commits after the prepare phase.
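The scenario of Example 3.2 can be replayed with a small in-memory Python sketch of get_all (lines 2-8) and get (lines 27-30). This is an illustration under stated assumptions, not the paper's implementation: timestamps are plain integers, values are the strings "x0", "x1", and so on, the `placement` table is hypothetical, and the parallel-for is imitated by computing every request timestamp from the view held before any reply is processed.

```python
class Partition:
    """Partition-side state and methods (Algorithm 1, lines 25-36)."""

    def __init__(self, key, siblings):
        # Initial version at timestamp 0, e.g. x_0 with metadata {y} as in Fig. 3-(b).
        self.versions = {(key, 0): (key + "0", frozenset(siblings))}
        self.latest = {key: 0}

    def prepare(self, key, value, ts, md):
        self.versions[(key, ts)] = (value, frozenset(md))

    def commit(self, ts):
        for key, version_ts in list(self.versions):
            if version_ts == ts:
                self.latest[key] = max(self.latest[key], ts)

    def get(self, key, ts_ra):
        value, _ = self.versions[(key, ts_ra)]           # line 28
        _, md = self.versions[(key, self.latest[key])]   # line 29
        return value, self.latest[key], md               # line 30


class Coordinator:
    def __init__(self, placement, last):
        self.placement = placement  # key -> Partition (hypothetical routing table)
        self.last = dict(last)      # key -> (timestamp, sibling keys)

    def ra_timestamp(self, key):
        # Line 5: maximum of last[key].ts and every last[k'].ts with key in last[k'].md.
        ts = self.last[key][0]
        for other_ts, md in self.last.values():
            if key in md:
                ts = max(ts, other_ts)
        return ts

    def get_all(self, keys):
        # Every request uses the view held before any reply arrives.
        requests = {k: self.ra_timestamp(k) for k in keys}
        rs = {}
        for k, ts_ra in requests.items():
            value, latest_ts, md = self.placement[k].get(k, ts_ra)
            rs[k] = value
            self.last[k] = (latest_ts, md)               # line 6
        return rs


px, py = Partition("x", {"y"}), Partition("y", {"x"})
# T1 has prepared both writes but has committed only at P_x.
px.prepare("x", "x1", 1, {"y"})
py.prepare("y", "y1", 1, {"x"})
px.commit(1)

initial_view = {"x": (0, frozenset({"y"})), "y": (0, frozenset({"x"}))}
c2 = Coordinator({"x": px, "y": py}, initial_view)
first = c2.get_all(["x", "y"])
view_after_first = dict(c2.last)
second = c2.get_all(["x", "y"])
```

Under these assumptions, the first read returns `x0` and `y0` in one round and leaves the coordinator with last[x] = ⟨1, {y}⟩; a second read issued by the same coordinator then requests timestamp 1 for both keys and obtains `x1` and `y1`, since the prepared version of `y` is already stored at its partition.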

In the above example, upon committing $T_1$, coordinator $C_1$ incorporates the transactional writes into its view of last seen timestamps (last[x] = ⟨1, {y}⟩ and last[y] = ⟨1, {x}⟩). A subsequent read-only transaction accessing $x$ and $y$ can return both RA-consistent and RYW-consistent versions $x_1$ and $y_1$ even though it is racing with $T_1$’s commit phase. See Appendix B for the visualized scenario, as well as the comparison with the conjectured design 1PW that also commits writes in one phase but violates RYW.

4 THE MAUDE ECOSYSTEM

In this section we give preliminaries on rewriting logic, Maude, and statistical model checking of probabilistic rewrite theories that are used in our formalization and verification of LORA (Sections 5–7).

4.1 Rewriting Logic and Maude

Maude [22] is a rewriting-logic-based executable formal specification language and high-performance analysis tool for object-based distributed systems. The tool provides a wide range of automatic formal analysis methods, including simulation, reachability analysis, and linear temporal logic (LTL) model checking. Maude has been very successful in analyzing designs of a wide range of distributed and networked systems [16, 46, 48, 82, 83].

A Maude module specifies a rewrite theory [56] (Σ, E ∪ A, L, R), where


• Σ is an algebraic signature; i.e., a set of sorts, subsorts, and function symbols;

• (Σ, E ∪ A) is a membership equational logic theory [22], with E a set of possibly conditional equations and membership axioms, and A a set of equational axioms such as associativity, commutativity, and identity, so that equational deduction is performed modulo the axioms A. The theory (Σ, E ∪ A) specifies the system’s states;

• L is a set of rule labels;

• R is a collection of labeled conditional rewrite rules of the form $[l] : t \longrightarrow t'$ if $cond$, with $l \in L$, that specify the system’s transitions.

We briefly summarize Maude’s syntax and refer to [22] for more details. Sorts and subsort relations are declared by the keywords sort and subsort, and operators (or functions) are introduced with the op keyword: op f : $s_1 \ldots s_n$ -> $s$, where $s_1 \ldots s_n$ are the sorts of its arguments, and $s$ is its (value) sort. Operators can have user-definable syntax, with underbars ‘_’ marking each of the argument positions, and are declared with the sorts of their arguments and the sort of their result. Some operators (e.g., the union operator ‘,’ for maps and sets) can have equational attributes, such as assoc, comm, and id, stating that the operator is associative and commutative and has a certain identity element (e.g., empty for maps and sets). Such attributes are then used by the Maude engine to match terms modulo the declared axioms. An operator can also be declared to be a constructor (ctor) that defines the data elements of its sort. Declaring an operator f with the frozen attribute forbids rewriting with rules in all proper subterms of a term having f as its top operator.

There are three kinds of logical statements in the Maude language, equations, memberships (declaring that a term has

a certain sort), and rewrite rules, introduced with the following syntax:

• equations: eq u = v or ceq u = v if condition;

• memberships: mb u : s or cmb u : s if condition;

• rewrite rules: rl [l]: u => v or crl [l]: u => v if condition.

An equation $f(t_1, \ldots, t_n) = t$ with the owise (for “otherwise”) attribute can be applied to a term $f(\ldots)$ only if no other equation with left-hand side $f(u_1, \ldots, u_n)$ can be applied. The mathematical variables in such statements are either explicitly declared with the keywords var and vars, or can be introduced on the fly in a statement without being declared previously, in which case they have the form var:sort. Maude also provides standard parameterized data types (sets, lists, maps, etc.) that can be instantiated (and renamed). For example, Map{Nat,String} defines a mapping m from natural numbers to strings, having each entry of the form n |-> s; the lookup operator m[n] returns the n-indexed string s. Finally, a comment is preceded by ‘***’ and lasts till the end of the line.

In object-oriented Maude specifications, a class declaration class C | $a_1$ : $s_1$, ..., $a_n$ : $s_n$ declares a class C of objects with attributes $a_1$ to $a_n$ of sorts $s_1$ to $s_n$. An object instance of class C is represented as a term < O : C | $a_1$ : $val_1$, ..., $a_n$ : $val_n$ >, where O, of sort Oid, is the object’s identifier, and where $val_1$ to $val_n$ are the current values of the attributes $a_1$ to $a_n$. A message is a term of sort Msg. A system state is modeled as a term of the sort Configuration, and has the structure of a multiset made up of objects and messages built up with an empty syntax (juxtaposition) multiset union operator __.

The dynamic behavior of a system is axiomatized by specifying each of its transition patterns by a rewrite rule. For

example, the rule [l]

rl [l] : (to O from O' : w) < O : C | a1 : x, a2 : y, a3 : z >

=>

< O : C | a1 : x + w, a2 : y, a3 : z > (to O' from O : z - w) .


defines a family of transitions in which a message (to O from O' : w) sent by the object O' is read and consumed by the receiving object O of class C, whose attribute a1 is updated to x + w with the message payload w, and a new message (to O' from O : z - w) is generated. Attributes whose values do not affect the next state, such as a2, need not be mentioned in a rule. Attributes whose values do not change, such as a3, can be omitted in the right-hand side of a rule. Hence, the above rewrite rule can also be written as:

rl [l] : (to O from O' : w) < O : C | a1 : x, a3 : z >

=>

< O : C | a1 : x + w > (to O' from O : z - w) .

Example 4.1. The following Maude module QUERY specifies a very simple distributed database system where each client performs a sequence of queries on the keys stored across different database partitions. Rule [read] (lines 14–17) describes how a client (object of class Client; line 5) that wants to read the value of key K issues a query read(K) (defined in line 8) from its buffered queries to the corresponding database partition (object of class DB; line 6), computed by looking up the local key-database mapping KD[K]. This database partition replies with the stored key-value pair (defined in line 8) upon receiving the query (rule [reply]; lines 18–21). When the client reads the response, it appends the associated key-value pair < K,V > to its log (rule [log]; lines 22–25). Note that, despite the message payload sort of Payload (line 9), both queries and key-value pairs can be piggybacked via messages (e.g., in rule [reply]) due to the subsort relations (line 3).

 1 mod QUERY is
 2   *** user-defined sorts and subsorts
 3   sorts Query Key Value KeyValuePair Payload . subsorts Query KeyValuePair < Payload .
 4   *** class declarations for client and database
 5   class Client | queries : List{Query}, log : List{KeyValuePair}, mapping : Map{Key,Oid} .
 6   class DB | database : Map{Key,Value} .
 7   *** user-defined constructors
 8   op read : Key -> Query [ctor] . op <_,_> : Key Value -> KeyValuePair [ctor] .
 9   op to_from_:_ : Oid Oid Payload -> Msg [ctor] .
10   *** variable declarations
11   vars O O' : Oid . var K : Key . var QS : List{Query} . var KD : Map{Key,Oid} .
12   var V : Value . var B : Map{Key,Value} . var LOG : List{KeyValuePair} .
13   *** rewrite rules for system dynamics
14   rl [read] :
15     < O : Client | queries : read(K) QS, mapping : KD >
16   =>
17     < O : Client | queries : QS > (to KD[K] from O : read(K)) .
18   rl [reply] :
19     (to O from O' : read(K)) < O : DB | database : B >
20   =>
21     < O : DB | > (to O' from O : < K,B[K] >) .
22   rl [log] :
23     (to O from O' : < K,V >) < O : Client | log : LOG >
24   =>
25     < O : Client | log : LOG < K,V > > .
26 endm

The following shows an example system state (of sort Configuration) with two clients (c1 and c2), each having two queries, and two database partitions (db1 and db2), each storing two data items:

1 *** constant object identifiers: c1, c2, db1, and db2; constant keys: k1, k2, k3, and k4

2 (to c1 from db1 : < k2,8 >) (to db2 from c2 : read(k4))

3 < c1 : Client | queries : nil, log : < k3,9 >,

4 mapping : k1 |-> db1, k2 |-> db1, k3 |-> db2, k4 |-> db2 >

5 < c2 : Client | queries : read(k3), log : nil,

6 mapping : k1 |-> db1, k2 |-> db1, k3 |-> db2, k4 |-> db2 >

7 < db1 : DB | database : k1 |-> 54, k2 |-> 8 > < db2 : DB | database : k3 |-> 9, k4 |-> 7 >

where c1 has finished its first query, indicated by the key-value pair < k3,9 > in the log (line 3), and is ready to read the returned data from db1 for the second query (the first message in line 2); c2 has just issued its first query read(k4) to db2 (the second message in line 2) with the next query read(k3) buffered (line 5).
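To make the rewriting semantics concrete, the three rules of QUERY can be imitated in Python on the example state above. This is only a sketch: Maude applies enabled rules nondeterministically, whereas the hypothetical `run` function below fixes one schedule (pending messages first, then the first client with a buffered query), and the dictionaries and tuples used to encode objects and messages are assumptions of this illustration.

```python
from collections import deque


def run(clients, dbs, messages):
    """Apply rules [read], [reply], and [log] until none is enabled."""
    messages = deque(messages)
    while True:
        if messages:
            to, frm, payload = messages.popleft()
            if payload[0] == "read":      # rule [reply]: answer with the stored pair
                key = payload[1]
                messages.append((frm, to, ("kv", key, dbs[to][key])))
            else:                         # rule [log]: append the pair to the log
                _, key, value = payload
                clients[to]["log"].append((key, value))
            continue
        ready = [c for c in clients if clients[c]["queries"]]
        if not ready:
            return clients                # no rule is enabled any more
        client = ready[0]                 # rule [read]: issue the next buffered query
        key = clients[client]["queries"].pop(0)
        messages.append((clients[client]["mapping"][key], client, ("read", key)))


mapping = {"k1": "db1", "k2": "db1", "k3": "db2", "k4": "db2"}
clients = {
    "c1": {"queries": [], "log": [("k3", 9)], "mapping": mapping},
    "c2": {"queries": ["k3"], "log": [], "mapping": mapping},
}
dbs = {"db1": {"k1": 54, "k2": 8}, "db2": {"k3": 9, "k4": 7}}
# Messages are (receiver, sender, payload), matching "to _ from _ : _".
in_flight = [("c1", "db1", ("kv", "k2", 8)), ("db2", "c2", ("read", "k4"))]
final = run(clients, dbs, in_flight)
```

With this schedule, c1 ends with both of its key-value pairs logged, and c2 logs the reply for k4 before issuing and completing its buffered query on k3.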

4.2 Probabilistic Rewrite Theories and Statistical Model Checking

Probabilistic distributed systems can be modeled as probabilistic rewrite theories [1] in Maude with rules of the form

$$[l] : t(\vec{x}) \longrightarrow t'(\vec{x}, \vec{y}) \ \ \text{if} \ \ cond(\vec{x}) \ \ \text{with probability} \ \ \vec{y} := \pi(\vec{x})$$

where the term $t'$ has additional new variables $\vec{y}$ disjoint from the variables $\vec{x}$ in the term $t$. For a given matching instance of the variables $\vec{x}$ there can be many ways to instantiate the extra variables $\vec{y}$. Due to such potential nondeterminism, probabilistic rewrite rules are not directly executable. By sampling the values of $\vec{y}$ according to the probability distribution $\pi(\vec{x})$ (that depends on the matching instance of $\vec{x}$), they can be simulated in Maude (see Section 7.4.1 for a concrete example).

Statistical model checking (SMC) [69, 88] is a formal approach to analyzing probabilistic systems against temporal logic properties. Compared to conventional simulations or emulations, SMC can verify a property specified, e.g., in a stochastic temporal logic (QuaTEx [1] used in our analysis), up to a user-specified statistical confidence level by running Monte-Carlo simulations of the system model. The expected value $\bar{v}$ of a property query belongs to the interval $[\bar{v} - \frac{\beta}{2}, \bar{v} + \frac{\beta}{2}]$ with $(1 - \alpha)$ statistical confidence, where the parameters $\alpha$ and $\beta$ (indicating the margin of error) determine when an SMC analysis stops performing simulations [69]. An SMC result can be a probability or percentage with respect to some qualitative property such as “99.1% of LORA reads return the latest values written to the database with 95% confidence”, or a quantitative estimation of some performance property such as “With 99% statistical confidence, the average latency of LORA transactions is 1.5 time units”.
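The role of $\alpha$ and $\beta$ as a stopping criterion can be sketched in Python. The code below is a generic Monte-Carlo estimator, not PVeStA's algorithm: it assumes a normal approximation for the confidence interval, draws samples in batches, and stops once the interval's half-width is at most $\beta/2$. The `fresh_read` function is a hypothetical stand-in for one simulation run of the model, with an arbitrary success probability chosen for illustration.

```python
import math
import random
from statistics import NormalDist, fmean, stdev


def estimate(sample, alpha, beta, batch=100, max_runs=100_000):
    """Sample until the (1 - alpha) confidence interval has half-width <= beta / 2."""
    z = NormalDist().inv_cdf(1 - alpha / 2)   # two-sided normal quantile
    values = []
    half_width = math.inf
    while len(values) < max_runs:
        values.extend(sample() for _ in range(batch))
        half_width = z * stdev(values) / math.sqrt(len(values))
        if half_width <= beta / 2:
            break
    return fmean(values), half_width, len(values)


rng = random.Random(7)


def fresh_read():
    # Hypothetical simulation outcome: 1.0 if a read returned the latest value.
    return 1.0 if rng.random() < 0.9 else 0.0


mean, half_width, runs = estimate(fresh_read, alpha=0.05, beta=0.02)
```

Tightening either parameter increases the number of simulation runs: a smaller $\beta$ demands a narrower interval, and a smaller $\alpha$ widens the quantile `z` that the interval must absorb.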

Maude-based SMC with the PVeStA tool [5] has been successfully used in statistically analyzing high-level designs of distributed database systems. In particular, with significantly less effort than the actual system implementations (e.g., 20x less in terms of lines of code [51]), SMC-based performance estimations and predictions in an early design phase have shown good correspondence with implementation-based evaluations under realistic deployment [16, 48, 51].

5 FORMALIZING LORA

This section presents a formal model of LORA in Maude. The entire executable specification, consisting of 870 lines of code, is available at [43].

5.1 Data Types, Objects, and Messages

We model LORA in the CAT framework [49] for formally specifying distributed transaction systems. This enables us to leverage the CAT model checker to verify LORA’s data consistency properties (see Section 7.3).

More specifically, LORA is formalized in an object-oriented style, where the state is a multiset consisting of a number of partition objects, each modeling a partition of the entire database, a number of coordinator objects, each modeling the proxy of a client that executes transactions formalized also as objects residing inside the coordinator, and a number of messages traveling between the objects.

Data Types. A version is modeled as a 4-tuple < key, value, timestamp, metadata > (of sort Version) consisting of the key, its value, and the version’s timestamp and metadata. A timestamp is modeled as a pair < id, sqn > consisting of an object identifier and a local sequence number that together uniquely identify a version. Metadata are modeled as a set of keys, indicating, for each key, the sibling keys written in the same transaction. The partition-side data structure versions (line 25, Algorithm 1) is modeled as a set of versions, denoted by Set{Version}, that instantiates Maude’s parameterized container sets on sort Version. We also use another container, maps, to define latest (line 26) as Map{Key,Timestamp}, mapping keys to timestamps. The coordinator-side data structure last (line 1) can be similarly defined as a mapping from each key to a pair < timestamp, metadata > (of sort tmPair).

Objects. A partition stores parts of the entire database. We formalize it as an object instance of the following class Partition:

class Partition | database : Set{Version}, latest : Map{Key,Timestamp} .

where the partitioned database consists of a set of versions for each key stored locally. The partition also retains the timestamp of the latest committed version for each key.

A transaction is modeled as an object instance of the class Txn:

class Txn | operations : List{Operation}, waitinglist : Set{Oid},

readset : Set{kvPair}, writeset : Set{kvPair} .

where operations indicates the transaction’s reads/writes, with each of the form read(key) or write(key,value). The attribute readset, resp. writeset, denotes the < key, value > pairs fetched, resp. written, by the reads, resp. writes. The database partitions from which the coordinator awaits responses with respect to the transaction are stored in waitinglist.

A coordinator, modeled as an object instance of the class Coord, is delegated to process transactions:


class Coord | sqn : Nat, last : Map{Key,tmPair}, queue : List{Object},

mapping : Map{Key,Oid}, executing : Object, committed : Set{Object} .

The attributes queue, executing, and committed store the transaction object(s) which are waiting to be executed, currently executing, and committed, respectively. Concurrently executing transactions can be modeled by multiple coordinators with each holding a currently executing transaction. The attribute last maps each key to the timestamp-metadata pair of its latest version the coordinator has seen. To model the keyspace partitioning we use a mapping to pair keys and database partition identifiers.

Initial State. The following shows an example initial state (of sort Configuration) of LORA (with some parts replaced by ‘...’) with two coordinators, c1 and c2, buffering, respectively, two and three transactions. Read-only transaction t1 has two read operations on, respectively, two keys k1 and k2. Write-only transaction t2 consists of two write operations, with each writing a value (e.g., "apple") to a key (e.g., k2). Transaction t3 is a read-write transaction. The key space is split by three partitions p1, p2, and p3, e.g., keys k1 and k3 are stored at p1. Each partition is initialized accordingly; in particular, for each key, the value is the empty string, the timestamp is null, and the metadata is an empty set:

op initialState : -> Configuration .

eq initialState =

< c1 : Coord |

queue : (< t1 : Txn | operations : (read(k1) read(k2)), waitinglist : empty,

readset : empty, writeset : empty >

< t2 : Txn | operations : (write(k2,"apple") write(k3,"pear")), ... >),

sqn : 0, last : empty, executing : null, committed : empty,

mapping : (k1 |-> p1, k2 |-> p2, k3 |-> p1, k4 |-> p3) >

< c2 : Coord |

queue : (< t3 : Txn | operations : (read(k3) write(k4,"orange")), ... >

< t4 : Txn | ... > < t5 : Txn | ... >), ... >

< p1 : Partition |

database : (< k1, "", null, empty >, < k3, "", null, empty >), latest : empty >

< p2 : Partition | ... > < p3 : Partition | ... > .

Messages. A message has the form to receiver from sender : mp. The terms sender and receiver are object identifiers. The term mp is the message payload, having the form:

• get(tid,key,ts), for a get message from transaction tid requesting the version ts of key;

• reply(tid,key,val,ts,md), for the returned version from the partition;

• prepare(tid,key,val,ts,md), for preparing the version written by transaction tid at the partition;

• prepared(tid), for confirming a prepared version;

• commit(tid,ts), for marking the versions with timestamp ts as committed;

• committed(tid), for acknowledging a successful commit operation of transaction tid.


5.2 Formalizing LORA’s Dynamics

This section formalizes LORA’s dynamic behaviors with respect to its SNOW-optimal read-only transactions using rewrite rules.⁵ We show the corresponding 4 (out of 12) rules and refer to Appendix C for the remaining rules regarding LORA’s write-only and read-write transactions. For each rule, we also refer to the corresponding line(s) of code in Algorithm 1. The complete definitions of the data types and the functions are given in [43].

Issuing get Messages (Lines 2–6). A coordinator executes a read-only transaction (ensured by the predicate readOnly that returns true if a transaction’s operations are all reads) if the transaction object appears in executing in the left-hand side of a rule:

crl [get-all] :

< C : Coord | executing : < T : Txn | operations : OPS, waitinglist : empty, readset : empty >,

last : LAS, mapping : KP >

=>

< C : Coord | executing : < T : Txn | waitinglist : addR(OPS,KP) > >

getAll(C,T,OPS,LAS,KP) if readOnly(OPS) .

where the attributes waitinglist and readset must be explicitly present with empty. The coordinator adds to the waiting list the partitions from which it expects to receive the returned versions (by function addR). The getAll function (line 6) iterates over the operations of the transaction and generates a set of get messages (of sort Configuration) directed at the corresponding partitions (the first equation):

op getAll : Oid Oid Operations Map{Key,tmPair} Map{Key,Oid} -> Configuration .

eq getAll(C,T,(read(K) OPS),LAS,(K |-> P,KP))

= (to P from C : get(T,K,max(K,LAS))) getAll(C,T,OPS,LAS,(K |-> P,KP)) .

eq getAll(C,T,(write(K,VAL) OPS),LAS,KP) = getAll(C,T,OPS,LAS,KP) .

eq getAll(C,T,nil,LAS,KP) = null .

A write operation contributes no message (the second equation). This function returns if every operation of the transaction has been processed (indicated by nil in the third equation). An RA-consistent snapshot is computed by the (overloaded) operator max (line 5):

op max : Key Map{Key,tmPair} -> Timestamp .

op max : Timestamp Timestamp -> Timestamp .

For each key K, the maximum of its last seen timestamp TS and (the maximum of) the associated last seen timestamps for which K is a sibling (indicated by the matching K in the metadata for K' in the second equation) is returned (line 5):

eq max(K,(K |-> < TS,MD >,LAS)) = max(TS,max(K,LAS)) .

eq max(TS,max(K,(K' |-> < TS',(K,KS) >,LAS))) = max(max(TS,TS'),max(K,LAS)) .

eq max(TS,max(K,LAS)) = TS [owise] .

Note that the third equation with the owise attribute covers all remaining cases after all such K’ are processed.

⁵ We do not include variable declarations, but follow the Maude convention that variables are written in (all) capital letters.

Receiving a get Message (Lines 27–30). Upon receiving a read, the partition replies with a version whose value is indicated by the requested timestamp TS and whose timestamp and metadata are, respectively, the latest committed timestamp for the requested key and the associated metadata (see [43] for the definitions of the two “match” functions vMatch and mdMatch):

rl [rcv-get] :
   (to P from C : get(T,K,TS))
   < P : Partition | database : VS, latest : LAT >
 =>
   < P : Partition | >
   (to C from P : reply(T,K,vMatch(TS,VS),LAT[K],mdMatch(K,LAT,VS))) .

Receiving a Response (Lines 6–7). When a coordinator receives a reply message of the returned version, it adds the pair < K,VAL > to the transaction’s read set, updates last with the latest committed timestamp TS for key K and the associated metadata MD, and removes partition P from the waiting list:

rl [rcv-reply] :

(to C from P : reply(T,K, VAL, TS, MD))

< C : Coord | executing : < T : Txn | waitinglist : P ; OS, readset : RS >,

last : LAS >

=>

< C : Coord | executing : < T : Txn | waitinglist : OS, readset : (RS, < K, VAL >) >,

last : update(K, TS, MD, LAS) > .

Committing a Read-Only Transaction (Line 8). A read-only transaction commits at the coordinator by changing its status from executing to committed, when the transaction has collected all replies from the partitions (indicated by the empty waiting list) and its read set is no longer empty (which was initialized as empty; see rule [get-all]):

crl [committed-ro] :

< C : Coord | executing : < T : Txn | operations : OPS, waitinglist : empty, readset : (R, RS) >,

committed : TXNS >

=>

< C : Coord | executing : null,

committed : TXNS < T : Txn | > > if readOnly(OPS) .
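The interplay of rules [rcv-reply] and [committed-ro] can be mirrored in a short Python sketch. Several details are assumptions of this illustration rather than facts about the Maude model: the waiting list is encoded as a list with one entry per outstanding reply, the `update` function from [43] is replaced by a plain overwrite of last[K], and objects are encoded as dictionaries.

```python
def rcv_reply(coord, partition, key, value, ts, md):
    """Mirror of rule [rcv-reply]: consume one reply for the executing transaction."""
    txn = coord["executing"]
    txn["waitinglist"].remove(partition)       # one outstanding reply fewer
    txn["readset"][key] = value                # add < K, VAL > to the read set
    coord["last"][key] = (ts, frozenset(md))   # assumed behavior of update(K,TS,MD,LAS)


def committed_ro(coord):
    """Mirror of rule [committed-ro]: fire only if the rule's left-hand side matches."""
    txn = coord["executing"]
    if txn is None:
        return False
    read_only = all(op[0] == "read" for op in txn["operations"])
    if read_only and not txn["waitinglist"] and txn["readset"]:
        coord["committed"].append(txn)
        coord["executing"] = None
        return True
    return False


coord = {
    "executing": {
        "operations": [("read", "k1"), ("read", "k2")],
        "waitinglist": ["p1", "p2"],
        "readset": {},
    },
    "last": {},
    "committed": [],
}
fired = [committed_ro(coord)]                  # replies are still outstanding
rcv_reply(coord, "p1", "k1", "a", 1, {"k2"})
fired.append(committed_ro(coord))              # p2 has not answered yet
rcv_reply(coord, "p2", "k2", "b", 1, {"k1"})
fired.append(committed_ro(coord))              # waiting list empty, read set non-empty
```

The non-empty read set in the guard distinguishes a transaction that has collected all replies from one for which rule [get-all] has not yet fired, since both have an empty waiting list.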

6 CORRECTNESS PROOFS FOR LORA

In this section we formally prove that LORA provides SNOW-optimal (Section 6.1) and read atomic (Section 6.2)

transactions based on the Maude model in Section 5. We also refer to Appendix D for the proof of LORA’s read your

writes session guarantee.

Assumptions. We make the following assumptions about the system model as in the formal reasoning about RAMP, SNOW, and transactional data consistency [10, 15, 37]: (1) a timestamp uniquely identifies a version; (2) without loss of generality, versions of the keys written by a transaction are the same; (3) a version is persistent on the partition once it is prepared or committed; (4) all transactions commit unless otherwise noted; (5) each transaction contains at most one write to each key; (6) reads precede writes in read-write transactions; and (7) the network is asynchronous and reliable, i.e., any message will eventually be delivered to its receiver.


Notations. We use $P_k$ to denote the partition storing key $k$. The partial version order is indicated by $<$. Given two versions $v_k$ and $v'_k$ for key $k$ and the respective timestamps $ts_k$ and $ts'_k$ with $v_k.ts = ts_k$ and $v'_k.ts = ts'_k$, we write $v_k < v'_k$ or $ts_k < ts'_k$ if $v'_k$ appears later than $v_k$ in the version order. Each key $k$ has an initial version $\bot_k$ with $\bot_k < v_k$ for any version $v$ of $k$. A snapshot taken by a transaction $T$ is a set of timestamps $\{ts_{k_1}, \ldots, ts_{k_n}\}$, where $k_1, \ldots, k_n$ written by $T$ are sibling keys to each other and timestamp $ts_{k_i}$ corresponds to version $v_{k_i}$ with $v_{k_i}.ts = ts_{k_i}$. We denote a system execution $e$, starting from an initial state, by the labels of rules that apply in order: $l_1, \ldots, l_n$. We use $prefix(e, l)$ to indicate the finite prefix of an execution $e$ ending with the firing of rule $l$.

6.1 Providing SNOW-Optimal Reads

We first adapt the formal definitions of individual SNOW properties in [37] into our rewriting logic setting. Since LORA is designed for read atomic transactions, thus not satisfying strict serializability (S), we only need to consider the N+O+W properties for its SNOW-optimality (as well as its latency-optimality; Definition 2.1 and Definition 2.2): Non-blocking reads (N), One-response per read (O), and Write transactions that conflict (W).

The N property indicates that if a coordinator coord sends a read request to a partition part during the transaction execution, then part must respond to coord for this request without waiting for any external event such as the arrival of a message. We formally define this property as follows.

Definition 6.1 (N). Suppose in an execution $e$, following rule [start-txn]⁶ on the initial state and rule [get-all] for read-only transactions (resp. rule [update] for read-write transactions), rules [rcv-get] and [rcv-reply], corresponding to the get message to part from coord : get(key, ts), are fired in order with respect to the partition part storing key. Then there exists an execution $e'$ such that

(i) the execution fragments $prefix(e, \text{[rcv-get]})$ and $prefix(e', \text{[rcv-get]})$ are identical;

(ii) in $e'$ rule [rcv-reply] is fired at part after rule [rcv-get] applies without any firing of rules in between for handling get(key, ts).

Note that in (ii) other rule(s) irrelevant to this get message (or this particular read request) may apply between the firings of rules [rcv-get] and [rcv-reply].

The O property states that each read request during the transaction execution must complete successfully in one round of coordinator-to-partition communication and exactly one version of the requested key is sent back by the partition. This property is formally defined as:

Definition 6.2 (O). Suppose that, in a system execution e, rule [get-all] for read-only transactions (or rule [update] for read-write transactions) applies at some coordinator. Then, after that rule application, there exists exactly one pair of firings of rules [rcv-get] and [rcv-reply] in e for each associated partition $P_k$, with the returned version of the value $v_k$.
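The O condition can be checked on a single recorded execution. The following sketch is only an illustration under stated assumptions: the encoding of an execution as (rule label, partition) pairs, the function name satisfies_o, and the partition identifiers are hypothetical and do not come from the formal model. An execution in which the issuing rule never fires is treated as vacuously satisfying the condition.

```python
from typing import Iterable, List, Tuple

# Assumed encoding: (rule label, partition id); "" when no partition is involved.
Event = Tuple[str, str]


def satisfies_o(execution: List[Event], partitions: Iterable[str],
                issue_rule: str = "get-all") -> bool:
    """Check that, after `issue_rule` fires, every listed partition sees
    exactly one [rcv-get] followed by exactly one [rcv-reply]."""
    labels = [label for label, _ in execution]
    if issue_rule not in labels:
        return True  # premise of the definition does not hold
    tail = execution[labels.index(issue_rule) + 1:]
    for part in partitions:
        fired = [label for label, p in tail
                 if p == part and label in ("rcv-get", "rcv-reply")]
        if fired != ["rcv-get", "rcv-reply"]:
            return False
    return True


one_round = [("start-txn", ""), ("get-all", ""),
             ("rcv-get", "P1"), ("rcv-get", "P2"),
             ("rcv-reply", "P1"), ("rcv-reply", "P2"),
             ("committed-ro", "")]
# A second round of communication with P1 violates the condition.
two_rounds = one_round[:-1] + [("rcv-get", "P1"), ("rcv-reply", "P1"),
                               ("committed-ro", "")]
print(satisfies_o(one_round, ["P1", "P2"]), satisfies_o(two_rounds, ["P1", "P2"]))
```

The check only counts firings per partition; it does not inspect the payload of the reply, so the "exactly one version" part of the property would need a richer event encoding.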

The W property means that transactional reads can be issued at any point, even in the presence of concurrently

executing writes that update the same key(s) being read.

Definition 6.3 (W). Suppose in an execution e, rule [put-all] for write-only transactions (resp. rule [finished-rs] for read-write transactions) is fired. Then the transaction can be successfully committed in e, i.e., it appears in the coordinator’s attribute committed.

Lemma 6.4. LORA’s reads are non-blocking (N).

⁶ We refer to Section 5.2 for the rules of read-only transactions and Appendix C for the remaining rules.


Proof. By inspection of the formal model of LORA for the partition’s response to an incoming get message (rule [rcv-get]): the only rule that can be fired with respect to this read request is [rcv-reply]. □

Lemma 6.5. LORA provides one response per read (O).

Proof. By inspection of the rules for handling reads: (i) the coordinator issues read requests in parallel to the corresponding partitions (rule [get-all]) and collects all returned versions (rule [rcv-reply]) sent by the partitions (rule [rcv-get]) before committing the read-only transaction (rule [committed-ro]); and (ii) for each get message on key K, the partition replies with a single version (computed by function vMatch) indicated by timestamp TS, where the rest of the returned data contains no further versions (i.e., only the latest committed timestamp LAT[K] and the sibling keys computed by function mdMatch). The same reasoning applies to the case of read-write transactions. □

Lemma 6.6. LORA’s reads are compatible with conflicting writes (W).

Proof. By inspection of the rules for handling writes: write-only (resp. read-write) transactions always complete by applying (the if-then branch of) rule [rcv-prepared] after they are issued (rule [put-all], resp. rule [update]) and appear in committed in the right-hand side of rule [rcv-prepared]. □

According to Lemmas 6.4–6.6, LORA satisfies all three properties N+O+W, and thus is SNOW-optimal.

Theorem 6.7. LORA provides SNOW-optimal reads.

6.2 Providing Read Atomic Transactions

We first adapt some definitions in [10] with respect to read atomicity into our setting.

Definition 6.8 (Read Atomicity). A distributed transaction algorithm satisfies read atomicity if it prevents fractured reads anomalies and also prevents transactions from reading uncommitted, aborted, or intermediate data.

Definition 6.9 (Fractured Reads). A transaction $T_j$ exhibits fractured reads if transaction $T_i$ writes versions $v_x$ and $v'_y$ (in any order, with x possibly but not necessarily equal to y), $T_j$ reads version $v_x$ and version $v''_y$, and $v'' < v'$.
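The condition in this definition can be tested mechanically on a recorded read set. The sketch below is a simplified illustration, not LORA's algorithm: versions are assumed to be integer timestamps, a reader is assumed to read at most one version per key, and the name has_fractured_reads and the data layout are hypothetical.

```python
from typing import Dict, Iterable, List, Tuple

# Assumed encoding: a writer transaction is a list of (key, version) pairs.
Writes = List[Tuple[str, int]]


def has_fractured_reads(reads: Dict[str, int], writers: Iterable[Writes]) -> bool:
    """Return True if `reads` (key -> version read by T_j) is fractured
    with respect to some writer transaction T_i in `writers`."""
    for writes in writers:
        for x, vx in writes:
            if reads.get(x) != vx:
                continue  # T_j did not read this version written by T_i
            for y, vy in writes:
                # T_j read an older version of y than the one T_i wrote.
                if y in reads and reads[y] < vy:
                    return True
    return False


t_i = [("x", 5), ("y", 5)]  # T_i writes both keys at timestamp 5
print(has_fractured_reads({"x": 5, "y": 3}, [t_i]))  # fractured: stale y
print(has_fractured_reads({"x": 5, "y": 5}, [t_i]))  # consistent snapshot
```

Because the writer is a list of pairs rather than a map, the case x = y is covered as well: if a transaction writes key x at versions 1 and 2 and the reader observes version 1, the check reports a fractured (intermediate) read.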

Definition 6.10 (Aborted Reads). A transaction $T_j$ exhibits aborted reads if there exists an aborted transaction $T_i$ such that $T_j$ reads a version written by $T_i$.

Note that Definition 6.9 subsumes the definition of intermediate reads [10].

The key to LORA’s read atomic transactions lies in how fractured-reads-free snapshots are computed.

Lemma 6.11. Any snapshot returned by the max function contains no fractured reads.

Proof. Suppose there exists a snapshot returned by the max function that exhibits fractured reads, and, without loss of generality, it is $\{ts_x, ts'_y\}$. Then the corresponding RA-consistent versions are written by transaction $T = [w(ts_x), w(ts_y), \ldots]$, with “...” the remaining operations on keys other than x and y