Electronic Journal of Statistics

Vol. 17 (2023) 1492–1546

ISSN: 1935-7524

https://doi.org/10.1214/23-EJS2137

Pretest estimation in combining probability and non-probability samples∗

Chenyin Gao and Shu Yang

Department of Statistics, North Carolina State University, Raleigh, NC 27695, USA

Abstract: Multiple heterogeneous data sources are becoming increasingly

available for statistical analyses in the era of big data. As an important

example in finite-population inference, we develop a unified framework of

the test-and-pool approach to general parameter estimation by combining

gold-standard probability and non-probability samples. We focus on the

case when the study variable is observed in both datasets for estimating

the target parameters, and each contains other auxiliary variables. Utilizing

the probability design, we conduct a pretest procedure to determine the

comparability of the non-probability data with the probability data and

decide whether or not to leverage the non-probability data in a pooled

analysis. When the probability and non-probability data are comparable,

our approach combines both data for efficient estimation. Otherwise, we

retain only the probability data for estimation. We also characterize the

asymptotic distribution of the proposed test-and-pool estimator under a

local alternative and provide a data-adaptive procedure to select the critical

tuning parameters that target the smallest mean square error of the test-

and-pool estimator. Lastly, to deal with the non-regularity of the test-and-

pool estimator, we construct a robust confidence interval that has a good

finite-sample coverage property.

MSC2020 subject classifications: Primary 62D05; secondary 62E20,

62F03, 62F35.

Keywords and phrases: Data integration, dynamic borrowing, non-regularity, pretest estimator.

Received October 2022.

Contents

1 Introduction ........ 1493
2 Basic setup ........ 1495
2.1 Notation: two data sources ........ 1495
2.2 Assumptions and separate estimators ........ 1496
2.3 Efficient estimator ........ 1498
3 Test-and-pool estimator ........ 1499
3.1 Hypothesis and test ........ 1499
3.2 Data-driven pooling ........ 1500
4 Asymptotic properties of the test-and-pool estimator ........ 1501
4.1 Asymptotic distribution ........ 1501

∗ Yang's research is partially supported by the NIH 1R01AG066883 and 1R01ES031651.


Test-and-pool estimator 1493

4.2 Asymptotic bias and mean squared error ........ 1502
4.3 Adaptive inference ........ 1503
5 Simulation study ........ 1505
6 A real-data illustration ........ 1508
7 Concluding remarks ........ 1510
A Proofs ........ 1510
A.1 Regularity conditions ........ 1510
A.2 Proof of Lemmas 2.1 and 3.1 ........ 1512
A.3 Proof of Lemma 3.2 ........ 1516
A.4 Proof of Theorem 4.1 ........ 1517
A.5 Proof of the bias and mean squared error of $n^{1/2}(\hat\mu_{\rm tap} - \mu_g)$ ........ 1520
A.6 Proof of the asymptotic distribution for $U(a)$ ........ 1522
A.7 Proof of Theorem 4.2 ........ 1524
A.8 Proof of Remark 4.1 ........ 1525
A.9 Proof of Lemma A.1 ........ 1526
A.10 Proof of Lemma A.2 ........ 1527
A.11 Proof of Lemma A.3 ........ 1528
B Simulation ........ 1530
B.1 A detailed illustration of simulation ........ 1530
B.2 A detailed illustration of bias and mean squared error ........ 1536
B.3 Additional simulation results ........ 1539
B.4 Double-bootstrap procedure for $v_n$ selection ........ 1540
B.5 Details of the Bayesian method ........ 1541
References ........ 1542

1. Introduction

It has been widely accepted that probability sampling, where each selected sample is treated as representative of the target population, is the best vehicle for finite-population inference. Since the sampling mechanism is known from the survey design, each weight-calibrated sample can be used to obtain consistent estimators for the target population; see [53], [15] and [24] for textbook discussions. However, complex and ambitious surveys have recently faced growing hurdles and concerns, such as costly intervention strategies and lower participation rates. [2] address some of the current challenges in using probability samples for finite-population inference. On the other hand, the growing demand for small area estimation, among other factors, has led researchers to seek alternative data collection methods with smaller budgets [69, 28]. In particular, much attention has been drawn to non-probability samples.

Non-probability samples are sets of selected units whose sampling mechanism is unknown. First, non-probability samples are readily available from many data sources, such as satellite information [36], mobile sensor data [40], and web survey panels [62]. In addition, these non-representative samples are far more cost-effective than probability samples and have the potential

1494 C. Gao and S. Yang

of providing estimates in near real time, unlike the traditional inferences derived from probability samples [42]. Given such big and easily accessible data, a large body of literature has highlighted the promise of properly utilizing them (e.g., [18, 14, 61, 41]).

However, the naive use of such data cannot ensure the statistical validity of the resulting estimators, because non-probability samples are often selected without careful supervision. Therefore, the acquisition of large but highly unrepresentative data is likely to produce erroneous conclusions. [17] and [23] present recent examples where non-probability samples lead to estimates with significant selection biases. To overcome these challenges, it is essential to establish appropriate statistical tools to draw valid inferences when integrating data from probability and non-probability samples. Various data integration methods have been proposed to leverage the unique strengths of the probability and non-probability samples; see [73] for a review. The existing methods for data integration can be categorized into three types: inverse propensity score adjustment [50, 22], calibration weighting [19, 31], and mass imputation [46, 30, 74, 11].

However, most existing works assume that the non-probability sample is comparable to the probability sample for estimating the finite-population parameters, which may not hold in many applications due to the unknown sampling mechanism of the non-probability samples. Thus, non-probability samples with unknown sampling mechanisms may bias the estimators of the target parameters. To resolve this issue, [47] propose a pretest to gauge the statistical adequacy of integrating the probability and non-probability samples in an application. Pretesting has been widely practiced in econometrics and medicine, and its implications are of considerable interest (e.g., [68, 63, 3, 72]). Essentially, the final value of the estimator depends on the outcome of a random testing event and is therefore a stochastic mixture of two different estimators. Despite the long history of pretesting in applications, few studies investigate the theoretical properties of the underlying non-smooth distribution of pretest estimators.

In this paper, we establish a general statistical framework for the test-and-pool analysis of probability and non-probability samples by constructing a test to gauge the comparability of the non-probability data and deciding whether or not to use the non-probability data in a pooled analysis. In addition, we consider the null, fixed, and local alternative hypotheses for the pretest, representing different levels of comparability of the non-probability data with the probability data. In particular, the non-probability sample is perfectly comparable under the null hypothesis, whereas it is starkly incomparable under the fixed alternative. Under the fixed alternative, the test statistic diverges to infinity as the sample size increases, so this alternative cannot adequately capture the finite-sample behavior of the pretest estimator. Toward this end, we establish the asymptotic distribution of the proposed estimator under local alternatives, which provides a better approximation of the finite-sample behavior of the pretest estimator when the idealistic assumption required for the non-probability data is weakly violated. Also, we provide a data-adaptive procedure to select the values of the tuning parameters that achieve the smallest mean squared error of the pretest estimator. Lastly, we construct a robust confidence interval that accounts for the non-regularity of the estimator and has valid coverage.

The rest of the paper is organized as follows. Section 2 lays out the basic setup

and presents an efficient estimator for combining the non-probability sample and

the probability sample. Section 3 proposes a test statistic and the test-and-

pool estimator. In Section 4, we present the asymptotic properties of the test-

and-pool estimator, an adaptive inference procedure, and lastly a data-adaptive

selection scheme of the tuning parameters. Section 5 presents a simulation study

to evaluate the performance of our test-and-pool estimator. Section 6 provides

a real-data illustration. All proofs are given in the Appendix.

2. Basic setup

2.1. Notation: two data sources

Let $\mathcal{F}_N = \{V_i = (X_i, Y_i) : i \in U\}$ with $U = \{1, \ldots, N\}$ denote a finite population of size $N$, where $X_i$ is a vector of covariates and $Y_i$ is the study variable. We assume that $\mathcal{F}_N$ is a random sample from a superpopulation model $\zeta$, and our objective is to estimate the finite-population parameter $\mu_g \in \mathbb{R}^l$, defined as the solution to

$$\frac{1}{N}\sum_{i=1}^{N} S(V_i; \mu) = 0, \tag{2.1}$$

where $S(V_i; \mu)$ is an $l$-dimensional estimating function. The class of parameters is fairly general. For example, if $S(V; \mu) = Y - \mu$, then $\mu_g = \bar{Y}_N = N^{-1}\sum_{i=1}^{N} Y_i$ is the population mean of $Y_i$. If $S(V; \mu) = \mathbf{1}(Y < c) - \mu$ for some constant $c$, where $\mathbf{1}(\cdot)$ is an indicator function, then $\mu_g = N^{-1}\sum_{i=1}^{N}\mathbf{1}(Y_i < c)$ is the population proportion of $Y_i$ less than $c$. If $S(V; \mu) = X(Y - X^\top\mu)$, then $\mu_g = (\sum_{i=1}^{N} X_i X_i^\top)^{-1}(\sum_{i=1}^{N} X_i Y_i)$ is the coefficient of the finite-population regression projection of $Y_i$ onto $X_i$.
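As a minimal numerical sketch of these three examples, the code below simulates a finite population from a hypothetical linear superpopulation model (the model, $N$ and $c = 0.5$ are illustrative assumptions) and checks that each closed-form $\mu_g$ is a root of the population estimating equation (2.1).

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical finite population F_N drawn from a linear superpopulation model.
N = 10_000
X = np.column_stack([np.ones(N), rng.normal(size=N)])  # intercept + one covariate
Y = X @ np.array([1.0, 2.0]) + rng.normal(size=N)

# S(V; mu) = Y - mu  ->  mu_g is the population mean of Y
mu_mean = Y.mean()

# S(V; mu) = 1(Y < c) - mu  ->  mu_g is the population proportion of Y below c
c = 0.5
mu_prop = np.mean(Y < c)

# S(V; mu) = X (Y - X^T mu)  ->  mu_g is the finite-population regression coefficient
mu_reg = np.linalg.solve(X.T @ X, X.T @ Y)

# Each mu_g makes the population-average estimating function in (2.1) vanish.
ee_mean = np.mean(Y - mu_mean)
ee_prop = np.mean((Y < c) - mu_prop)
ee_reg = np.mean(X * (Y - X @ mu_reg)[:, None], axis=0)

print(mu_mean, mu_prop, mu_reg)
```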

Suppose that there are two data sources, one from a probability sample, referred to as Sample A, and the other from a non-probability sample, referred to as Sample B. Assume that Sample A is independent of Sample B, and that the observed units can be envisioned as being generated through two phases of sampling [12]. First, a superpopulation model $\zeta$ generates the finite population $\mathcal{F}_N$. Then, the probability (or non-probability) sample is selected from it using some known (or unknown) sampling scheme. Hence, the total variance of the estimators considered is based on the randomness induced by both the superpopulation model and the sampling mechanisms; see Table 1 for the notation of probability order, expectation and (co-)variance. For example, $E_p(\cdot \mid \mathcal{F}_N)$ is the average over all possible samples under the probability design for a particular finite population $\mathcal{F}_N$, and $E(\cdot)$ is the average over all possible samples from all possible finite populations.
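The two phases can be mimicked in a small simulation. The sketch below is illustrative only: the superpopulation model, the Poisson design with probabilities proportional to a size measure for Sample A, and the logistic self-selection propensity for Sample B are hypothetical choices, not designs taken from this paper. The point is what the analyst observes: design weights $d_i = \pi_{A,i}^{-1}$ accompany Sample A, while Sample B arrives without any weights.

```python
import numpy as np

rng = np.random.default_rng(1)

# Phase 1: the superpopulation model zeta generates the finite population F_N.
N = 100_000
X = rng.normal(size=N)
Y = 1.0 + 2.0 * X + rng.normal(size=N)

# Phase 2, Sample A: Poisson sampling with KNOWN inclusion probabilities pi_A,
# proportional to a hypothetical size measure (expected sample size 1,000).
size = np.exp(0.3 * X)
pi_A = np.minimum(1_000 * size / size.sum(), 1.0)
delta_A = rng.random(N) < pi_A

# Phase 2, Sample B: self-selection through a propensity pi_B(X) that the
# analyst does NOT observe; drawn independently of Sample A.
pi_B = 1.0 / (1.0 + np.exp(-(-4.0 + 0.8 * X)))
delta_B = rng.random(N) < pi_B

# What the analyst actually sees.
O_A = {"d": 1.0 / pi_A[delta_A], "X": X[delta_A], "Y": Y[delta_A]}  # d_i = 1 / pi_A,i
O_B = {"X": X[delta_B], "Y": Y[delta_B]}                           # no weights
n_A, n_B = int(delta_A.sum()), int(delta_B.sum())
print(n_A, n_B, (n_A + n_B) / N)   # small sampling fractions, n / N close to 0
```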


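Table 1
Notation and definitions.

| Randomness | Order notation | Expectation | (Co-)variance |
|---|---|---|---|
| probability design | $o_p(1)$, $O_p(1)$ | $E_p(\cdot \mid \mathcal{F}_N)$ | $\mathrm{var}_p(\cdot \mid \mathcal{F}_N)$, $\mathrm{cov}_p(\cdot \mid \mathcal{F}_N)$ |
| non-probability design | $o_{np}(1)$, $O_{np}(1)$ | $E_{np}(\cdot \mid \mathcal{F}_N)$ | $\mathrm{var}_{np}(\cdot \mid \mathcal{F}_N)$, $\mathrm{cov}_{np}(\cdot \mid \mathcal{F}_N)$ |
| $\zeta$ model | $o_\zeta(1)$, $O_\zeta(1)$ | $E_\zeta(\cdot)$ | $\mathrm{var}_\zeta(\cdot)$, $\mathrm{cov}_\zeta(\cdot)$ |
| total variance | $o_{\zeta\text{-}p\text{-}np}(1)$, $O_{\zeta\text{-}p\text{-}np}(1)$ | $E(\cdot)$ | $\mathrm{var}(\cdot)$, $\mathrm{cov}(\cdot)$ |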

Throughout, we focus on the setting where the covariates $X$ and the study variable $Y$ are available in both the probability and non-probability samples, which has also been considered in [21] and [20]. The sampling indicators are denoted by $\delta_{A,i}$ and $\delta_{B,i}$, respectively; e.g., $\delta_{A,i} = 1$ if unit $i$ is selected into Sample A and zero otherwise. Sample A contains observations $O_A = \{(d_i = \pi_{A,i}^{-1}, X_i, Y_i) : i \in \mathcal{A}\}$ with sample size $n_A$, where $\pi_{A,i}$ is the known first-order inclusion probability for Sample A, and Sample B contains observations $O_B = \{(X_i, Y_i) : i \in \mathcal{B}\}$ with sample size $n_B$. The unknown propensity score for being selected into Sample B is denoted by $\pi_{B,i}$. Here, $\mathcal{A}$ and $\mathcal{B}$ denote the indexes of units in Samples A and B, with total sample size $n = n_A + n_B$ and negligible sampling fractions, i.e., $n/N = o(1)$. Let the limits of the fractions of Samples A and B be $f_A = \lim_{n\to\infty} n_A/n$ and $f_B = \lim_{n\to\infty} n_B/n$, with $0 < f_A, f_B < 1$.

2.2. Assumptions and separate estimators

As observing $(X_i, Y_i)$ for all units $i$ in $U$ is usually not feasible in practice, we can estimate the population estimating equation (2.1) by its design-weighted sample analog under the probability sampling design,

$$\frac{1}{N}\sum_{i=1}^{N}\frac{\delta_{A,i}}{\pi_{A,i}} S(V_i; \mu) = 0, \tag{2.2}$$

yielding a design-weighted Z-estimator $\hat\mu_A$ [65]. When $S(V; \mu)$ is a score function, the resulting estimator is a pseudo maximum likelihood estimator [58]. For example, for estimating $\bar{Y}_N$, we have $S(V; \mu) = Y - \mu$, which leads to $\hat\mu_A = (\sum_{i=1}^{N}\delta_{A,i}\pi_{A,i}^{-1})^{-1}\sum_{i=1}^{N}\delta_{A,i}\pi_{A,i}^{-1} Y_i$. We now make the following assumption for the design-weighted Z-estimator.

Assumption 2.1 (Design consistency and central limit theorem). Let $\hat\mu_A$ be the corresponding design-weighted Z-estimator of $\mu_g$, which satisfies $\mathrm{var}_p(\hat\mu_A \mid \mathcal{F}_N) = O_\zeta(n_A^{-1})$ and $\{\mathrm{var}_p(\hat\mu_A)\}^{-1/2}(\hat\mu_A - \mu_g) \mid \mathcal{F}_N \to \mathcal{N}(0, 1)$ in distribution as $n_A \to \infty$.

Under typical regularity conditions [24], Assumption 2.1 holds for many common sampling designs, such as probability-proportional-to-size sampling and stratified simple random sampling. Under Assumption 2.1, $\hat\mu_A$ is design-consistent and does not rely on any modeling assumptions. This explains why probability sampling has been the gold-standard approach for finite-population inference, and we make this assumption throughout this article.
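Assumption 2.1 can be illustrated by holding one finite population fixed and redrawing the probability sample many times, which approximates $E_p(\cdot \mid \mathcal{F}_N)$. In the sketch below, the population and the Poisson design with probabilities proportional to a size measure that depends on $X$ are hypothetical. The design-weighted estimator of $\bar{Y}_N$ given above stays centered at $\bar{Y}_N$, while the unweighted sample mean is pulled toward units with high inclusion probabilities.

```python
import numpy as np

rng = np.random.default_rng(2)

# A fixed finite population F_N; design-based inference conditions on it.
N = 50_000
X = rng.normal(size=N)
Y = 1.0 + 2.0 * X + rng.normal(size=N)
size = np.exp(0.5 * X)                              # hypothetical size measure
pi_A = np.minimum(500 * size / size.sum(), 1.0)     # informative design

def hajek_mean(y, pi):
    """Root of sum_i d_i (y_i - mu) = 0 with d_i = 1/pi_i (design-weighted Z-estimator)."""
    d = 1.0 / pi
    return np.sum(d * y) / np.sum(d)

# Redraw the probability sample repeatedly from the SAME finite population.
reps = []
for _ in range(500):
    delta_A = rng.random(N) < pi_A                  # Poisson sampling
    reps.append(hajek_mean(Y[delta_A], pi_A[delta_A]))
reps = np.array(reps)

bias_weighted = reps.mean() - Y.mean()             # approximates E_p(mu_A | F_N) - Ybar_N
delta_A = rng.random(N) < pi_A
bias_unweighted = Y[delta_A].mean() - Y.mean()     # ignores the design weights
print(bias_weighted, bias_unweighted)
```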


Let $f(Y \mid X)$ be the conditional density function of $Y$ given $X$ in the superpopulation model $\zeta$, and let $f(X)$ and $f(X \mid \delta_B = 1)$ be the density functions of $X$ in the finite population and the non-probability sample, respectively. To correct for the selection bias of the non-probability sample, most of the existing literature considers the following assumptions [e.g., 46, 66, 12].

Assumption 2.2 (Common support and ignorability of sampling). (i) The vector of covariates $X$ has a compact and convex support, with its density bounded and bounded away from zero. Also, there exist positive constants $C_l$ and $C_u$ such that $C_l \le f(X)/f(X \mid \delta_B = 1) \le C_u$ almost surely. (ii) Conditional on $X$, the density of $Y$ in the non-probability sample follows the superpopulation model; i.e., $f(Y \mid X, \delta_B = 1) = f(Y \mid X)$. (iii) The sample inclusion indicators $\delta_{B,i}$ and $\delta_{B,j}$ are independent given $X_i$ and $X_j$ for $i \neq j$.

Assumption 2.2 (i) and (ii) constitute the strong sampling ignorability condition [50]. Assumption 2.2 (i) implies that the support of $X$ in the non-probability sample is the same as that in the finite population, and it can also be formulated as a positivity assumption that $P(\delta_B = 1 \mid X) > 0$ for all $X$. This assumption does not hold if certain units would never be included in the non-probability sample. Assumption 2.2 (ii) is equivalent to the ignorability of the sampling mechanism for the non-probability sample conditional on the covariates $X$, i.e., $P(\delta_B = 1 \mid X, Y) = P(\delta_B = 1 \mid X)$ [34]. This assumption holds if the set of covariates contains all the outcome predictors that affect the probability of being selected into the non-probability sample. Assumption 2.2 (iii) is a critical condition for applying the weak law of large numbers under the non-probability sampling design [12]. Under Assumption 2.2, the non-probability sample can be used to produce consistent estimators. However, this assumption may be unrealistic if the non-probability data collection suffers from uncontrolled selection biases [6], measurement errors [17], or other error-prone issues. Thus, we consider Assumption 2.2 an idealistic assumption, which may be violated and requires pretesting.

Under Assumptions 2.1 and 2.2, let $\Phi_A(V, \delta_A; \mu)$ and $\Phi_B(V, \delta_A, \delta_B; \mu)$ be two $l$-dimensional estimating functions for the target parameter $\mu_g$ when using the probability sample and the combined samples, respectively. In practice, $\Phi_A(\cdot)$ and $\Phi_B(\cdot)$ may depend on unknown nuisance functions, so solving $E\{\Phi_A(V, \delta_A; \mu)\} = 0$ and $E\{\Phi_B(V, \delta_A, \delta_B; \mu)\} = 0$ directly is not feasible. By replacing the nuisance functions with their estimated counterparts, and the expectations with the empirical averages, we obtain $\hat\mu_A$ and $\hat\mu_B$ by solving

$$\frac{1}{N}\sum_{i=1}^{N}\hat\Phi_A(V_i, \delta_{A,i}; \mu) = 0, \qquad \frac{1}{N}\sum_{i=1}^{N}\hat\Phi_B(V_i, \delta_{A,i}, \delta_{B,i}; \mu) = 0, \tag{2.3}$$

respectively, where $\{\hat\Phi_A(\cdot), \hat\Phi_B(\cdot)\}$ are the estimated versions of $\{\Phi_A(\cdot), \Phi_B(\cdot)\}$.

Remark 2.1. For estimating the finite-population mean, that is, $\mu_g = \bar{Y}_N$, $\Phi_A(\cdot)$ and $\Phi_B(\cdot)$ are commonly chosen as

$$\Phi_A(V, \delta_A; \mu) = \frac{\delta_A}{\pi_A}(Y - \mu), \tag{2.4}$$

$$\Phi_B(V, \delta_A, \delta_B; \mu) = \frac{\delta_B}{\pi_B(X)}\{Y - m(X)\} + \frac{\delta_A}{\pi_A}m(X) - \mu, \tag{2.5}$$

where $\pi_B(X) = P(\delta_B = 1 \mid X)$ and $m(X) = E(Y \mid X, \delta_B = 1)$. To obtain the estimators $\hat\mu_A$ and $\hat\mu_B$, parametric models $\pi_B(X; \alpha)$ and $m(X; \beta)$ can be posited for the nuisance functions $\pi_B(X)$ and $m(X)$, respectively.

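In addition, researchers might be interested in estimating individual-level outcomes rather than population-level outcomes. In this case, $\Phi_A(\cdot)$ and $\Phi_B(\cdot)$ can be specified for estimating the outcome model $m(X; \beta)$ as

$$\Phi_A(V, \delta_A; \beta) = \frac{\delta_A}{\pi_A}\frac{\partial m(X; \beta)}{\partial\beta}\{Y - m(X; \beta)\},$$

$$\Phi_B(V, \delta_A, \delta_B; \beta) = \left\{\frac{\delta_A}{\pi_A} + \frac{\delta_B}{\pi_B(X)}\right\}\frac{\partial m(X; \beta)}{\partial\beta}\{Y - m(X; \beta)\}.$$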
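The double robustness of (2.5) can be seen in a simulation. Solving the $\Phi_B$ equation in (2.5) for $\mu$ gives $\hat\mu_B = N^{-1}[\sum_{i \in \mathcal{B}}\{Y_i - m(X_i)\}/\pi_B(X_i) + \sum_{i \in \mathcal{A}} m(X_i)/\pi_{A,i}]$. To keep the sketch short, the nuisance functions are plugged in directly rather than estimated from parametric models $\pi_B(X; \alpha)$ and $m(X; \beta)$; the data-generating model and the two deliberately misspecified working models are hypothetical. The estimate stays close to $\bar{Y}_N$ when either $\pi_B(X)$ or $m(X)$ is correct, while the unweighted Sample B mean is biased by self-selection.

```python
import numpy as np

rng = np.random.default_rng(3)
N = 200_000
X = rng.normal(size=N)
Y = 1.0 + 2.0 * X + rng.normal(size=N)

pi_A = np.full(N, 1_000 / N)                 # Sample A: Poisson sampling, known pi_A
delta_A = rng.random(N) < pi_A

def true_pi_B(x):                            # Sample B propensity (unknown in practice)
    return 1.0 / (1.0 + np.exp(-(-4.0 + 0.8 * x)))

delta_B = rng.random(N) < true_pi_B(X)

def true_m(x):                               # correct outcome model E(Y | X, delta_B = 1)
    return 1.0 + 2.0 * x

def wrong_m(x):                              # deliberately misspecified outcome model
    return np.zeros_like(x)

def wrong_pi_B(x):                           # deliberately misspecified (constant) propensity
    return np.full_like(x, delta_B.mean())

def mu_B_dr(pi_B_fn, m_fn):
    """Closed-form root in mu of (1/N) sum_i Phi_B(V_i, delta_A,i, delta_B,i; mu) = 0, Phi_B as in (2.5)."""
    xb, yb = X[delta_B], Y[delta_B]
    xa = X[delta_A]
    ipw_residuals = np.sum((yb - m_fn(xb)) / pi_B_fn(xb))   # Sample B term
    design_predictions = np.sum(m_fn(xa) / pi_A[delta_A])   # Sample A term
    return (ipw_residuals + design_predictions) / N

est_pi_right = mu_B_dr(true_pi_B, wrong_m)   # propensity correct, outcome model wrong
est_m_right = mu_B_dr(wrong_pi_B, true_m)    # outcome model correct, propensity wrong
naive_B = Y[delta_B].mean()                  # unweighted Sample B mean
print(Y.mean(), est_pi_right, est_m_right, naive_B)
```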

Next, we adopt the model-design-based framework for inference, which incorporates the randomness over the two phases of sampling [27, 37, 7, 70]. The asymptotic properties of $\hat\mu_A$ and $\hat\mu_B$ can be derived using standard M-estimation theory under suitable moment conditions.

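Lemma 2.1. Suppose Assumptions 2.1 and 2.2 and the additional regularity conditions in Appendix A.1 hold. Then, we have

$$n^{1/2}\begin{pmatrix}\hat\mu_A - \mu_g\\ \hat\mu_B - \mu_g\end{pmatrix} \to \mathcal{N}\left\{\begin{pmatrix}0_{l\times 1}\\ 0_{l\times 1}\end{pmatrix}, \begin{pmatrix}V_A & \Gamma\\ \Gamma^\top & V_B\end{pmatrix}\right\}, \tag{2.6}$$

where $V_A$, $V_B$, and $\Gamma$ are defined explicitly in the Appendix.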

In Lemma 2.1, we extend conditional normality to unconditional normality as in [55], which implies that the asymptotic (co-)variance terms $V_A$, $V_B$ and $\Gamma$ account for all sources of uncertainty over the two phases.

2.3. Efficient estimator

Under Assumptions 2.1 and 2.2, both $\hat\mu_A$ and $\hat\mu_B$ are consistent, and it is appealing to combine $\hat\mu_A$ with $\hat\mu_B$ to achieve efficient estimation. We consider a class of linear combinations of the estimating functions in (2.3):

$$\sum_{i=1}^{N}\{\hat\Phi_A(V_i, \delta_{A,i}; \mu) + \Lambda\hat\Phi_B(V_i, \delta_{A,i}, \delta_{B,i}; \mu)\} = 0, \tag{2.7}$$

where $\Lambda$ is the linear coefficient that gauges how much information from the non-probability sample should be integrated with the probability sample. Equation (2.7) leads to a class of composite estimators, each a weighted average of $\hat\mu_A$ and $\hat\mu_B$ with $\Lambda$-indexed weights $\omega_A$ and $\omega_B$. When $\Lambda = 0$, (2.7) yields the design-consistent estimator $\hat\mu_A$. The optimal choice $\Lambda_{\rm eff}$ can be empirically tuned to minimize the asymptotic variance of the composite estimator, leading to the efficient estimator $\hat\mu_{\rm eff}$. However, the major concern for $\hat\mu_{\rm eff}$ is the possible bias due to the violation of Assumption 2.2 (ii) for the non-probability sample. When it is violated, it is reasonable to choose $\Lambda = 0$ and prevent any bias associated with the non-probability sample.
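For intuition on the efficiency gain, consider the scalar case ($l = 1$). The exact form of $\Lambda_{\rm eff}$ and the induced weights is given in the Appendix and is not reproduced here; as a stand-in, the sketch below uses the textbook minimum-variance combination of two correlated estimators with joint covariance as in (2.6), namely $\omega_A = (V_B - \Gamma)/(V_A + V_B - 2\Gamma)$ and $\omega_B = 1 - \omega_A$. The values of $V_A$, $V_B$ and $\Gamma$ are hypothetical.

```python
import numpy as np

def min_variance_weights(V_A, V_B, Gamma):
    """Weights (w_A, w_B), w_A + w_B = 1, minimizing var(w_A mu_A + w_B mu_B)
    when (mu_A, mu_B) has asymptotic covariance [[V_A, Gamma], [Gamma, V_B]] (scalar case)."""
    w_A = (V_B - Gamma) / (V_A + V_B - 2.0 * Gamma)
    return w_A, 1.0 - w_A

def composite_variance(w_A, V_A, V_B, Gamma):
    """Asymptotic variance of w_A mu_A + (1 - w_A) mu_B."""
    w_B = 1.0 - w_A
    return w_A**2 * V_A + w_B**2 * V_B + 2.0 * w_A * w_B * Gamma

# Hypothetical asymptotic (co)variances: a larger Sample B makes mu_B less variable.
V_A, V_B, Gamma = 4.0, 1.0, 0.5
w_A, w_B = min_variance_weights(V_A, V_B, Gamma)
V_eff = composite_variance(w_A, V_A, V_B, Gamma)
print(w_A, w_B, V_eff)   # 0.125 0.875 0.9375
```

Here $V_{\rm eff} = 0.9375$ is below both $V_A$ and $V_B$, so $V_A - V_{\rm eff}$ and $V_B - V_{\rm eff}$ are positive. The catch, as the text notes, is that this gain is only valid when $\hat\mu_B$ is unbiased.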

3. Test-and-pool estimator

Motivated by the above reasoning, we develop a strategy that pretests the com-

parability of the non-probability sample with the probability sample first and

then decides whether or not we should combine them for efficient estimation.

We formulate the hypothesis test in Section 3.1, and construct the test-and-pool

estimator in Section 3.2.

3.1. Hypothesis and test

We formalize the null hypothesis $H_0$ when Assumption 2.2 holds, and the fixed and local alternatives $H_a$ and $H_{a,n}$ when Assumption 2.2 is violated. To be specific, we consider

$$H_0: E\{\Phi_B(V, \delta_A, \delta_B; \mu_{g,0})\} = 0, \tag{3.1}$$

$$H_a: E\{\Phi_B(V, \delta_A, \delta_B; \mu_{g,0})\} = \eta_{\rm fix}, \tag{3.2}$$

$$H_{a,n}: E\{\Phi_B(V, \delta_A, \delta_B; \mu_{g,0})\} = n_B^{-1/2}\eta, \tag{3.3}$$

where $\mu_{g,0} = E_\zeta(\mu_g)$, $\mu_g = \mu_{g,0} + O_\zeta(N^{-1/2})$, and $\eta_{\rm fix}$ and $\eta$ are two fixed parameters. The fixed alternative $H_a$ is commonly considered in the standard hypothesis testing framework. However, it forces the bias of the estimating function $\Phi_B(\cdot)$ to be fixed and indicates a strong violation of Assumption 2.2, under which the test statistic $T$ diverges to infinity with the sample size. Moreover, inference under the fixed alternative cannot capture the finite-sample behavior of the test well and lacks uniform validity. In contrast, the local alternative provides a useful tool to study the finite-sample distribution of non-regular estimators when the signal of violation is weak, i.e., in an $n_B^{-1/2}$ neighborhood of zero. In such cases, we allow the existence of a set of unmeasured covariates whose association with either the probability of being selected into Sample B or the outcome is small. Also, the local alternative $H_{a,n}$ is more general in the sense that it reduces to $H_a$ with $\eta = \pm\infty$, and it has been widely employed to illustrate non-regular settings, such as weak instrumental variables regression [59], regression estimators of weakly identified parameters [13] and test errors in classification [33]. We will mainly exploit the local alternative to show the inherent non-regularity of the pretest estimator.

Under the null hypothesis (3.1), $\hat\mu_B$ is consistent, and hence it is reasonable to combine $\hat\mu_A$ and $\hat\mu_B$ for efficient estimation. However, when the null hypothesis is violated as in (3.3), the efficient estimator is biased. Lemma 3.1 presents the asymptotic properties of the separate and efficient estimators under $H_{a,n}$.

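Lemma 3.1. Suppose Assumptions 2.1, 2.2 (i) and (iii), and all the regularity conditions in Lemma 2.1 hold. Then, under the local alternative $H_{a,n}$, the asymptotic distributions of $\hat\mu_A$ and $\hat\mu_B$ are

$$n^{1/2}\begin{pmatrix}\hat\mu_A - \mu_g\\ \hat\mu_B - \mu_g\end{pmatrix} \to \mathcal{N}\left[\begin{pmatrix}0_{l\times 1}\\ -f_B^{-1/2}E\{\partial\Phi_B(V, \delta_A, \delta_B; \mu_{g,0})/\partial\mu\}^{-1}\eta\end{pmatrix}, \begin{pmatrix}V_A & \Gamma\\ \Gamma^\top & V_B\end{pmatrix}\right]. \tag{3.4}$$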

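The asymptotic distribution of the efficient estimator $\hat\mu_{\rm eff}$ is

$$n^{1/2}(\hat\mu_{\rm eff} - \mu_g) \to \mathcal{N}\{b_{\rm eff}(\eta), V_{\rm eff}\},$$

where $b_{\rm eff}(\eta) = -f_B^{-1/2}\omega_B(\Lambda_{\rm eff})E\{\partial\Phi_B(V, \delta_A, \delta_B; \mu_{g,0})/\partial\mu\}^{-1}\eta$. The exact forms of $\omega_B(\Lambda_{\rm eff})$ and $V_{\rm eff}$ are presented in Lemma A.3.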

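By Lemma 3.1, among the three estimators $\hat\mu_A$, $\hat\mu_B$ and $\hat\mu_{\rm eff}$, when $H_0$ holds, $\hat\mu_{\rm eff}$ is optimal because it is consistent and the most efficient; when $H_0$ is violated, $\hat\mu_A$ is optimal because it is the only one that remains free of bias, whereas the other two estimators are inconsistent under $H_a$ and asymptotically biased under $H_{a,n}$.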

We now use pretesting to guide the choice of estimator. To test $H_0$, the key insight is that $\hat\mu_A$ is always consistent for $\mu_g$ by Assumption 2.1, and if $H_0$ holds,

$$\hat\Phi_{B,n}(\hat\mu_A) = n_B^{1/2}N^{-1}\sum_{i=1}^{N}\hat\Phi_B(V_i, \delta_{A,i}, \delta_{B,i}; \hat\mu_A)$$

should behave asymptotically as a mean-zero random vector. Thus, we construct the test statistic $T$ as

$$T = \hat\Phi_{B,n}(\hat\mu_A)^\top\hat\Sigma_T^{-1}\hat\Phi_{B,n}(\hat\mu_A), \tag{3.5}$$

where $\Sigma_T$ is the asymptotic variance of $\hat\Phi_{B,n}(\hat\mu_A, \hat\tau)$, and $\hat\Sigma_T$ is a consistent estimator of $\Sigma_T$. The exact form of $\Sigma_T$ in (A.15) involves $V_A$, $V_B$, and $\Gamma$. Thus, $\hat\Sigma_T$ can be obtained by replacing the unknown components in the expression of $\Sigma_T$ with their estimated counterparts, and the expectations with the empirical averages. In addition, we can consider the replication-based method for variance estimation in Algorithm B.1 adapted from [35].
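A minimal sketch of computing (3.5) and the resulting pooling decision, assuming the scaled estimating function $\hat\Phi_{B,n}(\hat\mu_A)$ and $\hat\Sigma_T$ are already available (obtaining them depends on the chosen $\Phi_B$ and variance estimator, which are not shown). The helper name `pretest_statistic` and the numerical inputs are hypothetical; $c_\gamma$ is taken as the $(1 - \gamma)$ quantile of $\chi^2_l$ with $\gamma = 0.05$ and $l = 1$.

```python
import numpy as np

def pretest_statistic(phi_B_n, Sigma_T_hat):
    """T = phi^T Sigma_T^{-1} phi, as in (3.5).

    phi_B_n     : length-l vector, the scaled estimating function evaluated at mu_A
    Sigma_T_hat : l x l estimate of its asymptotic variance
    """
    phi = np.atleast_1d(np.asarray(phi_B_n, dtype=float))
    Sigma = np.atleast_2d(np.asarray(Sigma_T_hat, dtype=float))
    return float(phi @ np.linalg.solve(Sigma, phi))   # solve() avoids forming the inverse

# (1 - gamma) quantile of chi^2_1 for gamma = 0.05: the squared 0.975 normal quantile.
# With SciPy available, scipy.stats.chi2.ppf(0.95, df=1) returns the same value.
C_GAMMA_L1 = 1.959963984540054 ** 2

for phi, Sigma in [([0.8], [[0.5]]), ([2.0], [[0.5]])]:
    T = pretest_statistic(phi, Sigma)
    decision = "pool" if T < C_GAMMA_L1 else "use Sample A only"
    print(f"T = {T:.2f}: {decision}")
# prints "T = 1.28: pool" and then "T = 8.00: use Sample A only"
```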

Lemma 3.2 serves as the foundation for our data-driven pooling step in Sec-

tion 3.2.

Lemma 3.2. Suppose Assumptions 2.1, 2.2 (i) and (iii), and all the regularity conditions in Lemma 2.1 hold. Under $H_0$, the test statistic $T \to \chi^2_l$, i.e., a chi-square distribution with $l$ degrees of freedom. Under $H_{a,n}$, $T \to \chi^2_l(\eta^\top\Sigma_T^{-1}\eta/2)$ with non-central parameter $\eta^\top\Sigma_T^{-1}\eta/2$ as $n \to \infty$.
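Lemma 3.2 can be checked by Monte Carlo in its limiting form: draw the scaled estimating function from a normal distribution with variance $\Sigma_T$ and mean zero (under $H_0$) or $\eta$ (under $H_{a,n}$; the sign does not affect $T$), then compute $T$. In the standard parameterization, a non-central $\chi^2_l$ with non-centrality $\lambda$ has mean $l + \lambda$, and here $\lambda = \eta^\top\Sigma_T^{-1}\eta$; the parameter $\eta^\top\Sigma_T^{-1}\eta/2$ reported in the lemma appears to follow a half-non-centrality convention for $F_l(\cdot\,;\cdot)$. The values of $\eta$ and $\Sigma_T$ below are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(4)
l = 2
eta = np.array([1.0, -0.5])                      # hypothetical local parameter
Sigma_T = np.array([[1.0, 0.3], [0.3, 2.0]])     # hypothetical asymptotic variance

def simulate_T(mean, n_draws=200_000):
    """Draw Phi ~ N(mean, Sigma_T) and return T = Phi^T Sigma_T^{-1} Phi for each draw."""
    Phi = rng.multivariate_normal(mean, Sigma_T, size=n_draws)
    return np.einsum("ij,ij->i", Phi, np.linalg.solve(Sigma_T, Phi.T).T)

T_null = simulate_T(np.zeros(l))                 # limit under H_0
T_local = simulate_T(eta)                        # limit under H_{a,n}

lam = float(eta @ np.linalg.solve(Sigma_T, eta)) # eta^T Sigma_T^{-1} eta
print(T_null.mean(), l)          # central chi^2_l: mean l
print(T_local.mean(), l + lam)   # non-central chi^2_l: mean l + lam (standard parameterization)
```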

3.2. Data-driven pooling

If $T$ is large, it indicates that $H_0$ may be violated, and thus it is desirable to retain only the probability sample for estimation. If $T$ is small, $H_0$ is not rejected, which suggests combining the probability and non-probability samples for efficient estimation. This strategy leads to the test-and-pool estimator $\hat\mu_{\rm tap}$ as the solution to

$$\sum_{i=1}^{N}\{\hat\Phi_A(V_i, \delta_{A,i}; \mu) + \mathbf{1}(T < c_\gamma)\Lambda\hat\Phi_B(V_i, \delta_{A,i}, \delta_{B,i}; \mu)\} = 0, \tag{3.6}$$

where $c_\gamma$ is the $(1 - \gamma)$ critical value of $\chi^2_l$. In (3.6), we can fix $\Lambda$ at the optimal value $\Lambda_{\rm eff}$, which leads to the efficient estimator under $H_0$ in Section 2.3.

Alternatively, we view $c_\gamma$ and $\Lambda$ jointly as tuning parameters that determine how much information from the non-probability sample can be borrowed in pooling. Larger $c_\gamma$ and $\Lambda$ borrow more information from the non-probability sample, leading to more efficient but more error-prone estimators, and vice versa. We will use a data-adaptive rule to select the $(\Lambda, c_\gamma)$ that minimizes the mean squared error of $\hat\mu_{\rm tap}$.

Remark 3.1. Compared to the t-test-based pooling estimator in [38], our proposed method is more general in the sense that (a) the auxiliary covariates are used to provide a more informative model of $\mu_g$; (b) our test statistic $T$ is motivated by the estimating function, which can be more robust to model misspecification; and (c) a data-adaptive selection of $(\Lambda, c_\gamma)$ is adopted to minimize the post-integration mean squared error.

4. Asymptotic properties of the test-and-pool estimator

In this section, we characterize the asymptotic properties of $\hat\mu_{\rm tap}$. Before proceeding further, we introduce more notation. Let $I_{l\times l}$ be an $l \times l$ identity matrix, $F_l(\cdot\,; \eta)$ be the cumulative distribution function of $\chi^2_l$ with non-central parameter $\eta$, and $F_l(\cdot) = F_l(\cdot\,; 0)$. Denote $V_{A\text{-eff}} = V_A - V_{\rm eff}$ and $V_{B\text{-eff}} = V_B - V_{\rm eff}$, which are both positive-definite.

4.1. Asymptotic distribution

By construction, $\hat\mu_{\rm tap}$ is a pretest estimator that first constructs $T$ for pretesting $H_0$ and then forms test-based weights for combining $\hat\mu_A$ and $\hat\mu_B$. Deriving the asymptotic distribution of $\hat\mu_{\rm tap}$ is challenging because it involves the test statistic $T$ and two asymptotically dependent components, $\hat\mu_A$ and $\hat\mu_B$. To formally characterize the asymptotic distribution of $\hat\mu_{\rm tap}$, we decompose its asymptotic representation into two orthogonal components: one is affected by the testing and the other is not.

First, by Lemma 3.1, let $n^{1/2}(\hat\mu_A - \mu_g) \to Z_1$ and $n^{1/2}(\hat\mu_B - \mu_g) \to Z_2$, where $Z_1$ and $Z_2$ are multivariate normal random vectors as in (3.4).

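Second, by Lemma 3.2, we asymptotically write $T$ as a quadratic form $W_2^\top W_2$ with $W_2 = -f_B^{1/2}\Sigma_T^{-1/2}E\{\partial\Phi_B(\mu_{g,0}, \tau_0)/\partial\mu\}(Z_1 - Z_2)$; this follows from a first-order expansion of $\hat\Phi_{B,n}(\hat\mu_A)$ around $\hat\mu_B$, which gives $\hat\Phi_{B,n}(\hat\mu_A) \approx f_B^{1/2}E\{\partial\Phi_B(\mu_{g,0}, \tau_0)/\partial\mu\}\,n^{1/2}(\hat\mu_A - \hat\mu_B)$. We then find another standardized $l$-variate normal vector $W_1 = f_B^{1/2}\Sigma_S^{-1/2}\{(\Gamma - V_B)(\Gamma - V_A)^{-1}Z_1 + Z_2\}$ that is orthogonal to $W_2$, where $\mathrm{cov}(W_1, W_2) = 0_{l\times l}$, $E(W_1) = \mu_1$, $\mathrm{var}(W_1) = I_{l\times l}$, $E(W_2) = \mu_2$, $\mathrm{var}(W_2) = I_{l\times l}$, and $\Sigma_S$ is introduced for the purpose of standardization.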

Third, $\hat\mu_{\rm tap}$ can be asymptotically represented by two components involving $W_1$ and $W_2$, respectively: one component is affected by the test constraint and the other is not. Following the above steps, Theorem 4.1 characterizes the asymptotic distribution of $\hat\mu_{\rm tap}$.

Theorem 4.1. Suppose the assumptions in Lemma 3.1 hold, except that Assumption 2.2 (ii) may be violated as dictated by $H_{a,n}$ in (3.3). Let $W_1$ and $W_2$ be independent normal random vectors with means $\mu_1$ and $\mu_2$ (given below, which vary by hypothesis) and variance matrices $I_{l\times l}$. The test-and-pool estimator $\hat\mu_{\rm tap}$ has the following asymptotic distribution:

$$n^{1/2}(\hat\mu_{\rm tap} - \mu_g) \to \begin{cases} -V_{\rm eff}^{1/2}W_1 + (\omega_A V_{A\text{-eff}}^{1/2} - \omega_B V_{B\text{-eff}}^{1/2})W_t[0, c_\gamma] & \text{w.p. } \xi,\\ -V_{\rm eff}^{1/2}W_1 + V_{A\text{-eff}}^{1/2}W_t[c_\gamma, \infty) & \text{w.p. } 1 - \xi,\end{cases}$$

where $W_t[a, b]$ is the truncated normal distribution $W_2 \mid (a \le W_2^\top W_2 \le b)$ and $\xi = F_l(c_\gamma; \mu_2^\top\mu_2/2)$.

(a) Under $H_0$, $\mu_1 = \mu_2 = 0$ and $\xi = F_l(c_\gamma; 0) = 1 - \gamma$.

(b) Under $H_{a,n}$, $\mu_1 = -\Sigma_S^{-1/2}E\{\partial\Phi_B(\mu_{g,0}, \tau_0)/\partial\mu\}^{-1}\eta$, $\mu_2 = -\Sigma_T^{-1/2}\eta$, and $\xi = F_l(c_\gamma; \mu_2^\top\mu_2/2)$.

Theorem 4.1 reveals that the asymptotic distribution of $\hat\mu_{\rm tap}$ depends on the local parameter $\eta$ and thus characterizes the non-regularity of the pretest estimator. When $H_0$ is violated weakly (a small perturbation in the true data-generating model), the asymptotic distribution of $\hat\mu_{\rm tap}$ can change abruptly depending on $\eta$. The non-regularity of $\hat\mu_{\rm tap}$ also poses challenges for inference, as shown in Section 4.3. Based on Theorem 4.1, we derive the asymptotic biases and mean squared errors of $\hat\mu_{\rm tap}$ under $H_0$ and $H_{a,n}$, which serve as the stepping stone to a data-driven procedure for selecting the tuning parameters $\Lambda$ and $c_\gamma$.
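The mixture in Theorem 4.1 can be simulated directly for $l = 1$. Drawing $W_2$ and splitting on the event $W_2^2 < c_\gamma$ reproduces both branches, because conditional on that event $W_2$ follows $W_t[0, c_\gamma]$ and the event has probability $\xi$. All inputs are hypothetical: $V_A = 4$, $V_B = 1$ and $\Gamma = 0.5$ give $V_{\rm eff} = 0.9375$, $V_{A\text{-eff}} = 3.0625$, $V_{B\text{-eff}} = 0.0625$ and $\omega_A = 0.125$ under minimum-variance weights. For the alternative, $\mu_1$ is tied to $\mu_2$ through $V_{\rm eff}^{1/2}\mu_1 = V_{A\text{-eff}}^{1/2}\mu_2$, a scalar reduction of ours chosen so that the no-pooling branch (the limit of $n^{1/2}(\hat\mu_A - \mu_g)$, obtained with $c_\gamma = 0$) has mean zero, as in Lemma 3.1.

```python
import numpy as np

def simulate_tap_limit(mu1, mu2, V_eff, V_A_eff, V_B_eff, w_A, c_gamma,
                       n_draws=200_000, seed=0):
    """Draws from the l = 1 limit of n^{1/2}(mu_tap - mu_g) in Theorem 4.1.

    W1 ~ N(mu1, 1) and W2 ~ N(mu2, 1) are independent. Splitting on W2^2 < c_gamma
    reproduces the mixture: given that event, W2 follows W_t[0, c_gamma], and the
    event has probability xi. Returns the draws and the Monte Carlo estimate of xi.
    """
    rng = np.random.default_rng(seed)
    w_B = 1.0 - w_A
    W1 = rng.normal(mu1, 1.0, n_draws)
    W2 = rng.normal(mu2, 1.0, n_draws)
    pooled = W2**2 < c_gamma
    coef_pool = w_A * np.sqrt(V_A_eff) - w_B * np.sqrt(V_B_eff)
    coef = np.where(pooled, coef_pool, np.sqrt(V_A_eff))
    draws = -np.sqrt(V_eff) * W1 + coef * W2
    return draws, pooled.mean()

# Hypothetical scalar inputs (see the lead-in).
params = dict(V_eff=0.9375, V_A_eff=3.0625, V_B_eff=0.0625, w_A=0.125,
              c_gamma=3.841458820694124)

draws0, xi0 = simulate_tap_limit(0.0, 0.0, **params)    # H_0
mu2 = 2.0                                                # a hypothetical local alternative
mu1 = np.sqrt(params["V_A_eff"] / params["V_eff"]) * mu2
draws1, xi1 = simulate_tap_limit(mu1, mu2, **params)     # H_{a,n}
print(xi0, draws0.mean())   # xi near 0.95, mean near 0
print(xi1, draws1.mean())   # pools less often, but the mean is shifted away from 0
```

Under the alternative, pooling happens only about half of the time, yet the limiting distribution acquires a clearly nonzero mean; this dependence on $\eta$ is the non-regularity discussed above.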

4.2. Asymptotic bias and mean squared error

Based on Theorem 4.1, the asymptotic distribution of $\hat\mu_{\rm tap}$ involves elliptically truncated normal distributions [60, 4]. To understand the asymptotic behavior of our proposed estimator, it is crucial to understand the essential properties of elliptically truncated multivariate normal distributions. We derive the moment generating function and subsequently the mean squared error of the estimator $\hat\mu_{\rm tap}$. The exact form of the mean squared error, given by $\mathrm{mse}(\Lambda, c_\gamma; \eta)$ in (B.13), albeit complicated, reveals that the amount of information borrowed from the non-probability sample (controlled by $\Lambda$ and $c_\gamma$) should be tailored to the strength of the violation of $H_0$ (dictated by the local parameter $\eta$). For illustration, we consider a toy example in the supplemental material.

We search for the optimal values $(\Lambda^*, c_\gamma^*)$ that minimize $\mathrm{mse}(\Lambda, c_\gamma; \hat\eta)$ using a standard numerical optimization algorithm [39], where $\hat\eta = \Phi_{B,n}(\hat\mu_A, \hat\tau)$. Note that the decision of whether to reject $H_0$ is subject to hypothesis testing errors, namely the Type I and Type II errors. That is, the test statistic $T$ can be larger than $c_\gamma$ even when $H_0$ holds; similarly, it can be small when $H_{a,n}$ holds. However, the data-adaptive tuning procedure aims at minimizing the mean squared error of $\hat\mu_{\mathrm{tap}}$, which implicitly keeps both testing errors small.
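The closed form $\mathrm{mse}(\Lambda, c_\gamma; \eta)$ in (B.13) is not reproduced in this section. The sketch below therefore illustrates the tuning idea on a hypothetical one-dimensional toy model. It assumes $\hat\mu_A \sim N(0,1)$ and $\hat\mu_B \sim N(\eta, v_B)$, a pretest statistic $T = (\hat\mu_A - \hat\mu_B)^2/(1+v_B)$, and a pooled estimator $(1-\Lambda)\hat\mu_A + \Lambda\hat\mu_B$. The MSE is estimated by Monte Carlo with common random numbers, and a coarse grid search stands in for the numerical optimizer [39]. The function names `toy_tap_mse` and `tune` are illustrative only.

```python
import random
import statistics

def toy_tap_mse(lam, c, eta, draws, v_b=0.2):
    """Monte Carlo MSE of a toy scalar test-and-pool estimator (true mean 0).

    Hypothetical toy model, not the paper's mse(Lambda, c_gamma; eta) in (B.13):
      mu_a ~ N(0, 1)      probability-sample estimator (unbiased)
      mu_b ~ N(eta, v_b)  non-probability estimator, biased by eta
    """
    sq_err = []
    for z_a, z_b in draws:
        mu_a = z_a
        mu_b = eta + v_b ** 0.5 * z_b
        t_stat = (mu_a - mu_b) ** 2 / (1.0 + v_b)  # chi-square(1) when eta = 0
        pooled = (1.0 - lam) * mu_a + lam * mu_b
        est = pooled if t_stat < c else mu_a        # pool only if the pretest accepts
        sq_err.append(est ** 2)
    return statistics.fmean(sq_err)

def tune(eta, v_b=0.2, n_draws=2000, seed=1):
    """Grid search for (Lambda, c) minimizing the Monte Carlo MSE."""
    rng = random.Random(seed)
    # Common random numbers: every grid point is evaluated on the same draws.
    draws = [(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(n_draws)]
    lam_grid = [i / 10 for i in range(11)]
    c_grid = [0.5 * j for j in range(1, 21)]
    # Returns (estimated MSE, Lambda, c) with the smallest estimated MSE.
    return min((toy_tap_mse(lam, c, eta, draws, v_b), lam, c)
               for lam in lam_grid for c in c_grid)

print(tune(eta=0.0))  # comparable sources: heavy borrowing is preferred
print(tune(eta=5.0))  # strong violation: essentially mu_a alone
```

When $\eta = 0$ the search favors a large $\Lambda$ with a permissive cutoff. When $\eta$ is large, no choice does better than $\hat\mu_A$ alone. This mirrors the point that the amount of borrowing should track the strength of violation. In practice $\eta$ is unknown and would be replaced by a plug-in estimate.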

4.3. Adaptive inference

Standard approaches to inference, e.g., the nonparametric bootstrap, require the estimators to be regular [56]. In non-regular settings, researchers have proposed alternative approaches such as the m-out-of-n bootstrap or subsampling. However, these approaches critically rely on a proper choice of m or the subsample size; otherwise, their small-sample performance can be poor. The non-regularity arises because the asymptotic distribution of $\hat\mu_{\mathrm{tap}}$ depends on the local parameter, so it does not converge uniformly over the parameter space. [33] propose adaptive confidence intervals for test errors in classification problems. Following this idea, we construct a bound-based adaptive confidence interval (BACI) for $\hat\mu_{\mathrm{tap}}$ that has good coverage properties. To avoid the non-regularity, our general strategy is to derive two smooth functionals that bound $\hat\mu_{\mathrm{tap}}$. Because these two functionals are regular, standard approaches to inference can be adopted, and valid confidence intervals follow.

To be concrete, we construct a bound-based adaptive confidence interval for $a^\top\mu_g$, where $a \in \mathbb{R}^l$ is fixed. By Theorem 4.1, we can reparametrize the asymptotic distribution of $a^\top n^{1/2}(\hat\mu_{\mathrm{tap}} - \mu_g)$ as

$$a^\top n^{1/2}(\hat\mu_{\mathrm{tap}} - \mu_g) \to R_n + a^\top\omega_B\big(V_{B\text{-eff}}^{1/2} + V_{A\text{-eff}}^{1/2}\big)U_n, \qquad (4.1)$$

where

$$R_n = -a^\top V_{\mathrm{eff}}^{1/2}W_1 + a^\top\big(\omega_A V_{A\text{-eff}}^{1/2} - \omega_B V_{B\text{-eff}}^{1/2}\big)W_2 + a^\top\omega_B\big(V_{B\text{-eff}}^{1/2} + V_{A\text{-eff}}^{1/2}\big)\mu_{t[c_\gamma,\infty)},$$

$$U_n = W_{t[c_\gamma,\infty)} - \mu_{t[c_\gamma,\infty)},$$

and $\mu_{t[c_\gamma,\infty)} = \mu_2\mathbf{1}(\mu_2^\top\mu_2 > c_\gamma)$. By construction, $R_n$ is regular and asymptotically normal, but $U_n$ is nonsmooth. Nonsmoothness and nonregularity are interrelated. To illustrate, if $\mu_2 = 0$, $U_n$ follows a standard truncated normal distribution with truncation probability $P(W_2^\top W_2 \le c_\gamma \mid \mu_2 = 0)$. By contrast, if $|\mu_2| \to \infty$, $P(W_2^\top W_2 \le c_\gamma \mid \mu_2)$ diminishes to zero, implying that $U_n$ follows a standard normal distribution. Thus, the limiting distribution of $a^\top n^{1/2}(\hat\mu_{\mathrm{tap}} - \mu_g)$ is not uniform over the local parameter $\mu_2$ (or equivalently $\eta$).
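The switch between the two regimes can be seen numerically in the simplest case. The sketch below assumes $l = 1$ and a unit-variance $W_2 \sim N(\mu_2, 1)$, both simplifications for illustration. It evaluates the truncation probability $P(W_2^2 \le c_\gamma \mid \mu_2)$ exactly, using the standard-library `statistics.NormalDist`.

```python
from statistics import NormalDist

Z = NormalDist()  # standard normal

def prob_no_reject(mu2, c_gamma):
    """P(W_2^2 <= c_gamma) for a scalar W_2 ~ N(mu2, 1) (the case l = 1)."""
    r = c_gamma ** 0.5
    # W_2^2 <= c_gamma  <=>  -r <= W_2 <= r
    return Z.cdf(r - mu2) - Z.cdf(-r - mu2)

c_gamma = Z.inv_cdf(0.975) ** 2  # 5% critical value of chi-square(1), about 3.84
for mu2 in (0.0, 1.0, 2.0, 4.0, 8.0):
    print(f"mu2 = {mu2:4.1f}: P(no rejection) = {prob_no_reject(mu2, c_gamma):.4f}")
```

At $\mu_2 = 0$ the truncation probability is the nominal 0.95. It then decays quickly toward zero as $|\mu_2|$ grows, which is the abrupt change in the limiting law described above.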

Our goal is to form the least conservative smooth upper and lower bounds.

An important observation is that if |μ

2

| is sufficiently large, we may treat

U

n

as regular. Thus, we define B as the nonregular zone for μ

2

μ

2

such that

max

μ

2

μ

2

∈B

P(W

2

W

2

≥ c

γ

| μ

2

) ≤ 1 − ε for small >0andB

the regular

zone. When μ

2

μ

2

∈ B

, standard inference can apply, and bounds are only

1504 C. Gao and S. Yang

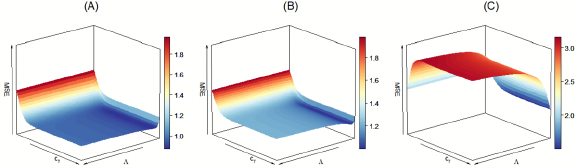

Fig 1. Illustration of the nonregular zone B (shaded) and two power functions: the solid and

dash lines are P(W

2

W

2

>c

γ

| μ

2

μ

2

) and P(T ≥ v

n

| μ

2

μ

2

) as functions of μ

2

μ

2

, respectively.

needed when μ

2

μ

2

∈ B to avoid the inference procedure to be overly con-

servative. We then require another test procedure to test μ

2

μ

2

∈ B against

μ

2

μ

2

∈ B

. Toward this end, we use T ≥ v

n

,wherev

n

is chosen such that

max

μ

2

μ

2

∈B

P(T ≥ v

n

| μ

2

)=˜α for a pre-specified ˜α. Figure 1 illustrates the

regular and nonregular zones and the test. If T ≥ ν

n

, we conclude the regularity

of the estimator μ

tap

and construct a normal confidence interval, but if T<ν

n

,

we construct the least favorable confidence interval by taking the union for all

μ

2

∈ R

l

. In practice, v

n

can be determined by the double bootstrapping sat-

isfying the regularity condition that lim

n→∞

v

n

/n = 0; see Section B.4 of the

supplemental material for more details.
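The two defining equations (the edge of $\mathcal{B}$ and the threshold $v_n$) can be solved directly in a toy scalar setting. This sketch assumes $l = 1$, $W_2 \sim N(\mu_2, 1)$, and a second-stage statistic distributed like $W_2^2$. These are hypothetical simplifications for a single fixed $n$. In the paper, $v_n$ grows with $n$ and is selected by the double bootstrap. Because the power of both tests increases in $|\mu_2|$, the maximum over the zone is attained at its edge, so each equation reduces to a one-dimensional root-finding problem solved here by bisection.

```python
from statistics import NormalDist

Z = NormalDist()

def power(mu2, cut):
    """P(W^2 >= cut) for a scalar W ~ N(mu2, 1)."""
    r = cut ** 0.5
    return 1.0 - (Z.cdf(r - mu2) - Z.cdf(-r - mu2))

def bisect_increasing(f, lo, hi, tol=1e-10):
    """Root of an increasing function f on [lo, hi] with f(lo) < 0 < f(hi)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def zone_edge_and_threshold(c_gamma, eps=0.05, alpha_tilde=0.05):
    # Edge m_star of the nonregular zone: power of the c_gamma test equals 1 - eps.
    m_star = bisect_increasing(lambda m: power(m, c_gamma) - (1.0 - eps), 0.0, 50.0)
    # Power increases in |mu2|, so the max over the zone is attained at m_star;
    # choose v_n so that this maximal rejection probability equals alpha_tilde.
    v_n = bisect_increasing(lambda v: alpha_tilde - power(m_star, v), 1e-8, 1e4)
    return m_star, v_n

c_gamma = Z.inv_cdf(0.975) ** 2
m_star, v_n = zone_edge_and_threshold(c_gamma)
print(f"nonregular zone: mu2^2 <= {m_star ** 2:.2f}; threshold v_n = {v_n:.2f}")
```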

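Accordingly, $U_n$ can be decomposed into two components,

$$U_n = \big(W_{t[c_\gamma,\infty)} - \mu_{t[c_\gamma,\infty)}\big)\mathbf{1}_{T \ge v_n} + \big(W_{t[c_\gamma,\infty)} - \mu_{t[c_\gamma,\infty)}\big)\mathbf{1}_{T < v_n},$$

and we only regularize (i.e., derive bounds for) the latter component. Continuing with (4.1), we take the supremum over all $\mu_2$ in the nonregular zone to construct the upper bound $U(a)$:

$$U(a) = R_n + a^\top\omega_B\big(V_{B\text{-eff}}^{1/2} + V_{A\text{-eff}}^{1/2}\big)\big(W_{t[c_\gamma,\infty)} - \mu_{t[c_\gamma,\infty)}\big)\mathbf{1}_{T \ge v_n} + \sup_{\mu_2 \in \mathbb{R}^l} a^\top\omega_B\big(V_{B\text{-eff}}^{1/2} + V_{A\text{-eff}}^{1/2}\big)\big(W_{t[c_\gamma,\infty)} - \mu_{t[c_\gamma,\infty)}\big)\mathbf{1}_{T < v_n}. \qquad (4.2)$$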

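The lower bound $L(a)$ for $a^\top n^{1/2}(\hat\mu_{\mathrm{tap}} - \mu_g)$ can be computed analogously by replacing sup with inf in (4.2). Taking the supremum and the infimum over $\mu_2 \in \mathbb{R}^l$ renders the two bounds $U(a)$ and $L(a)$ smooth and regular. The limiting distribution of $U(a)$ is

$$U(a) \to R + a^\top\omega_B\big(V_{B\text{-eff}}^{1/2} + V_{A\text{-eff}}^{1/2}\big)\big(W_{t[c_\gamma,\infty)} - \mu_{t[c_\gamma,\infty)}\big)\mathbf{1}_{\mu_2^\top\mu_2 \in \mathcal{B}^c} + \sup_{\mu_2 \in \mathbb{R}^l} a^\top\omega_B\big(V_{B\text{-eff}}^{1/2} + V_{A\text{-eff}}^{1/2}\big)\big(W_{t[c_\gamma,\infty)} - \mu_{t[c_\gamma,\infty)}\big)\mathbf{1}_{\mu_2^\top\mu_2 \in \mathcal{B}}. \qquad (4.3)$$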

Similarly, the limiting distribution of $L(a)$ is given by (4.3) with sup replaced by inf. Based on the limiting distributions of $U(a)$ and $L(a)$, if $P(\mu_2^\top\mu_2 \in \mathcal{B}) = 0$, then $U(a)$ and $L(a)$ have approximately the same limiting distribution as $a^\top n^{1/2}(\hat\mu_{\mathrm{tap}} - \mu_g)$. However, if $P(\mu_2^\top\mu_2 \in \mathcal{B}) \neq 0$, $U(a)$ is stochastically larger and $L(a)$ is stochastically smaller than $a^\top n^{1/2}(\hat\mu_{\mathrm{tap}} - \mu_g)$.
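The effect of the supremum and infimum on the nonsmooth term can be sketched numerically. The sketch makes several assumptions that are not stated in this section. It takes $l = 1$, $W_2 = Z + \mu_2$ with $Z \sim N(0,1)$, and a hard-threshold reading $W_{t[c_\gamma,\infty)} = W_2\mathbf{1}(W_2^2 > c_\gamma)$, paired with $\mu_{t[c_\gamma,\infty)} = \mu_2\mathbf{1}(\mu_2^2 > c_\gamma)$ from above. The scalar coefficient $a^\top\omega_B(V_{B\text{-eff}}^{1/2} + V_{A\text{-eff}}^{1/2})$ is set to 1. Only the $T < v_n$ branch is shown, and the supremum over $\mathbb{R}$ is approximated on a finite grid.

```python
import random

def shifted_term(z, mu2, c_gamma):
    """W_t - mu_t for scalar W_2 = z + mu2 (hypothetical hard-threshold form)."""
    w2 = z + mu2
    w_t = w2 if w2 * w2 > c_gamma else 0.0
    mu_t = mu2 if mu2 * mu2 > c_gamma else 0.0
    return w_t - mu_t

def sup_inf_over_grid(z, c_gamma, grid):
    """Grid approximation of the sup and inf over mu2 used in U(a) and L(a)."""
    values = [shifted_term(z, m, c_gamma) for m in grid]
    return max(values), min(values)

c_gamma = 3.84
grid = [-10.0 + 0.02 * k for k in range(1001)]  # mu2 in [-10, 10]
rng = random.Random(2023)
pairs = [sup_inf_over_grid(rng.gauss(0.0, 1.0), c_gamma, grid) for _ in range(1000)]
uppers = sorted(p[0] for p in pairs)
lowers = sorted(p[1] for p in pairs)
print("approx. 97.5% quantile of the sup term:", round(uppers[974], 3))
print("approx. 2.5% quantile of the inf term:", round(lowers[24], 3))
```

For every draw, the sup term dominates and the inf term is dominated by the value at any fixed $\mu_2$. This is the stochastic ordering stated above. The price is wider quantiles whenever the nonregular branch is active.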

Based on the regular bounds $U(a)$ and $L(a)$, we construct the $(1-\alpha)\times 100\%$ bound-based adaptive confidence interval of $a^\top\mu_g$ as

$$\mathcal{C}^{\mathrm{BACI}}_{\mu_g,1-\alpha}(a) = \Big[a^\top\hat\mu_{\mathrm{tap}} - \hat U_{1-\alpha/2}(a)/\sqrt{n},\; a^\top\hat\mu_{\mathrm{tap}} - \hat L_{\alpha/2}(a)/\sqrt{n}\Big], \qquad (4.4)$$

where $\hat U_d(a)$ and $\hat L_d(a)$ approximate the $d$-th quantiles of the distributions of $U(a)$ and $L(a)$, respectively, and can be obtained by the nonparametric bootstrap.
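Once bootstrap draws of $U(a)$ and $L(a)$ are available, assembling (4.4) is a matter of two empirical quantiles. The sketch below assumes those draws are already given as lists. It uses linear-interpolation sample quantiles, the same rule as NumPy's default. Both function names are hypothetical.

```python
import math

def empirical_quantile(xs, p):
    """Linear-interpolation sample quantile (NumPy's default 'linear' rule)."""
    s = sorted(xs)
    h = (len(s) - 1) * p
    lo = math.floor(h)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (h - lo) * (s[hi] - s[lo])

def baci(a_mu_tap, upper_draws, lower_draws, n, alpha=0.05):
    """Interval (4.4): the upper-bound quantile sets the LEFT endpoint and vice versa."""
    root_n = math.sqrt(n)
    u_hat = empirical_quantile(upper_draws, 1.0 - alpha / 2.0)
    l_hat = empirical_quantile(lower_draws, alpha / 2.0)
    return a_mu_tap - u_hat / root_n, a_mu_tap - l_hat / root_n
```

The endpoints are inverted in the usual pivot fashion. A large upper bound on $a^\top n^{1/2}(\hat\mu_{\mathrm{tap}} - \mu_g)$ translates into a small plausible value of $a^\top\mu_g$.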

Theorem 4.2. Assume the conditions in Theorem 4.1 hold. Furthermore, assume that the matrices $\Sigma_T$ and $\Sigma_S$ in Lemma 3.1 and their consistent estimates $\hat\Sigma_T$ and $\hat\Sigma_S$ are strictly positive-definite. Assume also that the sequence $v_n$ satisfies $v_n \to \infty$ and $v_n/n \to 0$ with probability one. Then the asymptotic coverage rate of (4.4) satisfies

$$P\big\{a^\top\mu_g \in \mathcal{C}^{\mathrm{BACI}}_{\mu_g,1-\alpha}(a)\big\} \ge 1 - \alpha. \qquad (4.5)$$

In particular, if Assumption 2.2 is strongly violated with $P(\mu_2^\top\mu_2 \in \mathcal{B}^c) = 1$, the inequality in (4.5) becomes an equality.

Remark 4.1. We discuss an alternative approach to constructing valid confidence intervals for non-regular estimators using projection sets [48]. We refer to these as projection-based adaptive confidence intervals (PACI), denoted $\mathcal{C}^{\mathrm{PACI}}_{\mu_g,1-\alpha}(a)$. The basic idea is as follows. For a given $\mu_2$, the limiting distribution of $\hat\mu_{\mathrm{tap}}$ is known, and a regular $(1-\tilde\alpha_1)\times 100\%$ confidence interval $\mathcal{C}_{\mu_g,1-\tilde\alpha_1}(a;\mu_2)$ of $a^\top\mu_g$ can be formed through the standard procedure. Since $\mu_2$ is unknown, a $(1-\alpha)\times 100\%$ projection confidence interval of $a^\top\mu_g$ can be conservatively constructed as the union of all $\mathcal{C}_{\mu_g,1-\tilde\alpha_1}(a;\mu_2)$ over $\mu_2$ in its $(1-\tilde\alpha_2)\times 100\%$ confidence region, where $\alpha = \tilde\alpha_1 + \tilde\alpha_2$. Such a strategy may be overly conservative, so the projection-based adaptive confidence interval introduces a pretest to mitigate the conservatism. If the pretest rejects $H_0: \mu_2^\top\mu_2 \in \mathcal{B}$, $\mathcal{C}_{\mu_g,1-\tilde\alpha_1}(a;\mu_2)$ is used; otherwise, the union of $\mathcal{C}_{\mu_g,1-\tilde\alpha_1}(a;\mu_2)$ is used. The technical details for $\mathcal{C}^{\mathrm{PACI}}_{\mu_g,1-\alpha}(a)$ are presented in the supplemental material. Our simulation study later shows that $\mathcal{C}^{\mathrm{PACI}}_{\mu_g,1-\alpha}(a)$ is more conservative than the proposed $\mathcal{C}^{\mathrm{BACI}}_{\mu_g,1-\alpha}(a)$.
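The pretest-then-union logic of the projection interval can be sketched as follows. Several things here are assumptions, since the remark leaves them to the supplement: the helper `paci`, the callable `interval_at` returning the regular interval for a fixed $\mu_2$, the finite grid standing in for the confidence region of $\mu_2$, and the plug-in value used when the pretest rejects. The union is replaced by its convex hull. That hull is conservative and coincides with the union whenever the pieces overlap.

```python
def paci(interval_at, mu2_region, pretest_rejects, mu2_plugin):
    """Projection-based interval with a pretest (hypothetical helper).

    interval_at(mu2) -> (lo, hi): regular (1 - alpha_1) interval for a fixed mu2.
    mu2_region: grid of mu2 values covering its (1 - alpha_2) confidence region.
    mu2_plugin: value of mu2 used when the pretest rejects (assumed plug-in).
    """
    if pretest_rejects:
        return interval_at(mu2_plugin)
    pieces = [interval_at(m) for m in mu2_region]
    # Convex hull of the union: conservative, and equal to the union if pieces overlap.
    return min(lo for lo, _ in pieces), max(hi for _, hi in pieces)
```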

5. Simulation study

In this section, we evaluate the finite-sample performance of the proposed estimator $\hat\mu_{\mathrm{tap}}$ and of $\mathcal{C}^{\mathrm{BACI}}_{\mu_g,1-\alpha}(a)$. First, we generate the finite population $\mathcal{F}_N$ with size $N = 10^5$. For each subject $i$, we generate $X_i = (1, X_{1,i}, X_{2,i})^\top$, where $X_{1,i} \sim N(0,1)$ and $X_{2,i} \sim N(1,1)$. We then generate $Y_i = 1 + X_{1,i} + X_{2,i} + u_i + u_i^2 + \varepsilon_i$, where $u_i \sim N(0,1)$ and $\varepsilon_i \sim N(0,1)$. Samples are drawn from $\mathcal{F}_N$ by Bernoulli sampling with inclusion probabilities

$$\log\Big(\frac{\pi_{A,i}}{1-\pi_{A,i}} \,\Big|\, X_i\Big) = \nu_A + 0.2X_{1,i} + 0.1X_{2,i},$$

$$\log\Big(\frac{\pi_{B,i}}{1-\pi_{B,i}} \,\Big|\, X_i\Big) = \nu_B + 0.1X_{1,i} + 0.2X_{2,i} + 0.5n_B^{-1/2}bu_i,$$

where $\nu_A$ and $\nu_B$ are chosen adaptively to ensure the target sample sizes $n_A \approx 600$ and $n_B \approx 5000$. We assume that $(X_i, Y_i)$ are observed but $u_i$ is unobserved. We vary $b \in \{0, 10, 100\}$ to represent the scenarios where $H_0$ holds, is slightly violated, or is strongly violated, respectively.
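The data-generating process above can be sketched in plain Python. Two details are assumptions, because the text does not specify them. First, the intercepts $\nu_A$ and $\nu_B$ are calibrated by bisection so that the expected sample size $\sum_i \pi_i$ equals the target. Second, the factor $n_B^{-1/2}$ in the selection model uses the target $n_B$ rather than the realized one. The function names are hypothetical.

```python
import math
import random

def expit(x):
    return 1.0 / (1.0 + math.exp(-x))

def calibrate_intercept(lin_pred, target, lo=-20.0, hi=20.0, iters=40):
    """Bisection for nu such that sum_i expit(nu + lin_pred_i) equals target."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if sum(expit(mid + lp) for lp in lin_pred) < target:
            lo = mid  # expected sample size too small: raise the intercept
        else:
            hi = mid
    return 0.5 * (lo + hi)

def simulate_population_and_samples(N=10**5, b=0.0, n_a=600, n_b=5000, seed=0):
    rng = random.Random(seed)
    x1 = [rng.gauss(0.0, 1.0) for _ in range(N)]
    x2 = [rng.gauss(1.0, 1.0) for _ in range(N)]
    u = [rng.gauss(0.0, 1.0) for _ in range(N)]  # unobserved confounder
    y = [1.0 + a + c + v + v * v + rng.gauss(0.0, 1.0) for a, c, v in zip(x1, x2, u)]
    lp_a = [0.2 * a + 0.1 * c for a, c in zip(x1, x2)]
    # b scales the effect of u on selection into the non-probability sample
    lp_b = [0.1 * a + 0.2 * c + 0.5 * b * v / math.sqrt(n_b) for a, c, v in zip(x1, x2, u)]
    nu_a = calibrate_intercept(lp_a, n_a)
    nu_b = calibrate_intercept(lp_b, n_b)
    delta_a = [rng.random() < expit(nu_a + lp) for lp in lp_a]  # Bernoulli sampling
    delta_b = [rng.random() < expit(nu_b + lp) for lp in lp_b]
    return x1, x2, y, delta_a, delta_b
```

A call such as `simulate_population_and_samples(b=10.0)` corresponds to the slightly violated scenario. Because $E(u_i^2) = 1$ and $E(X_{2,i}) = 1$, the population mean of $Y$ is close to 3.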

We compare $\hat\mu_{\mathrm{tap}}$ with the following estimators. (a) $\hat\mu_A$: the solution to $\sum_{i=1}^N \Phi_A(V_i, \delta_{A,i}; \mu) = 0$, with $\Phi_A(V_i, \delta_{A,i}; \mu)$ defined in (2.4). (b) $\hat\mu_B$: the naive sample mean $\hat\mu_B = (\sum_{i=1}^N \delta_{B,i})^{-1}\sum_{i=1}^N \delta_{B,i}Y_i$. (c) $\hat\mu_{\mathrm{dr}}$: the solution to $\sum_{i=1}^N \Phi_B(V_i, \delta_{A,i}, \delta_{B,i}; \mu, \alpha, \beta) = 0$, with $\Phi_B(V_i, \delta_{A,i}, \delta_{B,i}; \mu, \alpha, \beta)$ defined in (2.5). Here $(\alpha, \beta)$ are estimated by the maximum pseudo-likelihood estimator $\hat\alpha$ and the ordinary least squares estimator $\hat\beta$ [26]; see Equations (B.2) and (B.4). (d) $\hat\mu_{\mathrm{eff}}$: the solution to (2.7) with the optimal choice $\Lambda_{\mathrm{eff}}$ specified in (A.11) and the consistent estimators $(\hat\alpha, \hat\beta)$ obtained from (c). (e) $\hat\mu_{\mathrm{eff:B}}$: $\hat\mu_{\mathrm{eff}}$, where $\alpha$ is estimated in the same manner as in (c) but $\beta$ is estimated solely from the non-probability sample; see Equation (B.3). (f) $\hat\mu_{\mathrm{eff:KH}}$: $\hat\mu_{\mathrm{eff}}$, where $(\alpha, \beta)$ are estimated simultaneously by the method proposed in [29]; see Equations (B.5) and (B.6). (g) $\hat\mu_{\mathrm{tap}}$, $\hat\mu_{\mathrm{tap:B}}$, $\hat\mu_{\mathrm{tap:KH}}$: the solution to (3.6), where $(\Lambda, c_\gamma)$ are chosen by our data-adaptive procedure with $(\hat\alpha, \hat\beta)$ obtained from (d), (e), (f), respectively. (h) $\hat\mu_{\mathrm{Bayes:1}}$, $\hat\mu_{\mathrm{Bayes:2}}$, $\hat\mu_{\mathrm{Bayes:3}}$: Bayesian approaches for combining the non-probability sample with the probability sample under different informative priors [52].
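For concreteness, estimator (b) and a design-weighted counterpart of (a) can be written in a few lines. The form of $\Phi_A$ in (2.4) is not reproduced in this section. The second function therefore assumes the common choice $\Phi_A(V, \delta_A; \mu) = \delta_A\pi_A^{-1}(Y - \mu)$, whose root is the Hájek estimator. Treat it as an illustration rather than the paper's exact definition.

```python
def naive_mean(y, delta_b):
    """hat mu_B: unweighted average of Y over the non-probability sample."""
    selected = [yi for yi, d in zip(y, delta_b) if d]
    return sum(selected) / len(selected)

def hajek_mean(y, delta_a, pi_a):
    """Root in mu of sum_i delta_i / pi_i * (y_i - mu) = 0 (assumed form of (2.4))."""
    num = sum(yi / p for yi, d, p in zip(y, delta_a, pi_a) if d)
    den = sum(1.0 / p for d, p in zip(delta_a, pi_a) if d)
    return num / den
```

The naive mean ignores how units entered the non-probability sample. For this reason, $\hat\mu_B$ in Table 2 carries the selection bias in every scenario.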

For all estimators, we specify the model $\pi_B(X;\alpha)$ to be a logistic regression model with $X_i$ and the outcome mean model $m(X;\beta)$ to be a linear regression model with $X_i$. For the non-regular estimators $\hat\mu_{\mathrm{tap}}$, $\hat\mu_{\mathrm{tap:B}}$ and $\hat\mu_{\mathrm{tap:KH}}$, we construct three intervals:

- $\mathcal{C}^{\mathrm{BACI}}_{\mu_g,1-\alpha}(a)$ in (4.4) with a data-adaptive choice of $v_n$;
- the same interval with a fixed $v_n = \log\log n$, denoted $\mathcal{C}^{\mathrm{BACI:F}}_{\mu_g,1-\alpha}(a)$ ($\mathrm{BACI}_F$);
- $\mathcal{C}^{\mathrm{PACI}}_{\mu_g,1-\alpha}(a)$.

For all confidence intervals requiring the nonparametric bootstrap, the number of bootstrap replicates is 2000. For the Bayesian estimators, the point estimates are obtained by Markov chain Monte Carlo sampling with 2000 draws after an additional 500 burn-in draws.

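Table 2 reports the bias, variance and mean squared error of each estimator over 2000 simulated datasets. The benchmark estimator $\hat\mu_A$ has small biases across all scenarios, guaranteed by the probability sampling design. On the other hand, the non-probability-only estimator $\hat\mu_B$ exhibits large biases in all cases, mainly due to selection bias. As the impact of the unmeasured confounder $b$ increases, the pooled estimators $\hat\mu_{\mathrm{eff}}$, $\hat\mu_{\mathrm{eff:B}}$ and $\hat\mu_{\mathrm{eff:KH}}$ become more biased. The Bayesian methods, particularly $\hat\mu_{\mathrm{Bayes:2}}$, perform reasonably well when $H_0$ holds or is slightly violated, but $\hat\mu_{\mathrm{Bayes:3}}$ has a large bias when $H_0$ is strongly violated. In contrast, the proposed estimators $\hat\mu_{\mathrm{tap}}$, $\hat\mu_{\mathrm{tap:B}}$ and $\hat\mu_{\mathrm{tap:KH}}$ have small biases regardless of the strength of the unmeasured confounder. When $H_0$ is slightly violated, the proposed estimators have slightly larger biases but smaller mean squared errors than $\hat\mu_A$, because they integrate the non-probability sample. When $H_0$ is strongly violated, the proposed estimators perform similarly to $\hat\mu_A$, thanks to the protection of pretesting.

Table 2
Simulation results for bias (×10^{-3}), variance (var) (×10^{-3}) and mean squared error (MSE) (×10^{-3}) of $\hat\mu_A$, $\hat\mu_B$, $\hat\mu_{\mathrm{dr}}$, $\hat\mu_{\mathrm{eff}}$, $\hat\mu_{\mathrm{Bayes}}$ and $\hat\mu_{\mathrm{tap}}$ when $H_0$ holds, is slightly violated or is strongly violated.

| Type | Estimator | bias ($H_0$ holds) | var ($H_0$ holds) | MSE ($H_0$ holds) | bias (slightly violated) | var (slightly violated) | MSE (slightly violated) | bias (strongly violated) | var (strongly violated) | MSE (strongly violated) |
|---|---|---|---|---|---|---|---|---|---|---|
| Regular | $\hat\mu_A$ | −4.1 | 10.4 | 10.4 | −4.1 | 10.4 | 10.4 | −4.1 | 10.4 | 10.4 |
| | $\hat\mu_B$ | 284.1 | 1.2 | 81.9 | 355.3 | 1.2 | 127.4 | 1318.8 | 2.0 | 1741.4 |
| | $\hat\mu_{\mathrm{dr}}$ | −0.4 | 4.2 | 4.2 | 71.0 | 4.3 | 9.3 | 1048.0 | 5.0 | 1103.2 |
| | $\hat\mu_{\mathrm{eff}}$ | −0.9 | 4.1 | 4.1 | 62.3 | 4.2 | 8.1 | 851.5 | 6.6 | 731.7 |
| | $\hat\mu_{\mathrm{eff:B}}$ | −0.9 | 4.1 | 4.1 | 62.3 | 4.2 | 8.1 | 851.7 | 6.6 | 732.1 |
| | $\hat\mu_{\mathrm{eff:KH}}$ | −0.9 | 4.1 | 4.1 | 62.3 | 4.2 | 8.1 | 851.5 | 6.7 | 731.7 |
| Bayes | $\hat\mu_{\mathrm{Bayes:1}}$ | −3.7 | 14.1 | 14.1 | 1.0 | 14.0 | 14.0 | −4.3 | 14.1 | 14.1 |
| | $\hat\mu_{\mathrm{Bayes:2}}$ | −4.1 | 10.8 | 10.8 | 17.1 | 11.1 | 11.4 | 7.0 | 13.8 | 13.8 |
| | $\hat\mu_{\mathrm{Bayes:3}}$ | −2.4 | 8.9 | 8.9 | 51.2 | 9.0 | 11.6 | 614.0 | 10.8 | 387.9 |
| TAP | $\hat\mu_{\mathrm{tap}}$ | −4.8 | 7.6 | 7.6 | 10.1 | 9.3 | 9.4 | −4.1 | 10.4 | 10.4 |
| | $\hat\mu_{\mathrm{tap:B}}$ | −4.8 | 7.6 | 7.6 | 10.1 | 9.3 | 9.4 | −4.1 | 10.4 | 10.4 |
| | $\hat\mu_{\mathrm{tap:KH}}$ | −4.8 | 7.6 | 7.6 | 10.1 | 9.3 | 9.4 | −4.1 | 10.4 | 10.4 |

Table 3
Simulation results for coverage rates (CR) (×10^{-2}) and widths (×10^{-3}) of 95% confidence intervals when $H_0$ holds, is slightly violated or is strongly violated.

| Estimator | CI | CR ($H_0$ holds) | width ($H_0$ holds) | CR (slightly violated) | width (slightly violated) | CR (strongly violated) | width (strongly violated) |
|---|---|---|---|---|---|---|---|
| $\hat\mu_A$ | Wald | 95.2 | 404.1 | 95.3 | 404.1 | 95.2 | 404.0 |
| $\hat\mu_B$ | Wald | 0.0 | 135.5 | 0.0 | 138.8 | 0.0 | 173.7 |
| $\hat\mu_{\mathrm{dr}}$ | Wald | 95.9 | 262.8 | 81.8 | 264.4 | 0.0 | 282.4 |
| $\hat\mu_{\mathrm{eff}}$ | Wald | 95.9 | 259.5 | 85.1 | 260.9 | 0.0 | 273.6 |
| $\hat\mu_{\mathrm{Bayes:1}}$ | HPDI | 98.3 | 463.0 | 97.5 | 461.5 | 97.3 | 462.8 |
| $\hat\mu_{\mathrm{Bayes:2}}$ | HPDI | 97.8 | 404.2 | 97.4 | 409.8 | 97.5 | 458.3 |
| $\hat\mu_{\mathrm{Bayes:3}}$ | HPDI | 99.3 | 368.2 | 97.4 | 370.6 | 0.0 | 407.0 |
| $\hat\mu_{\mathrm{tap}}$ | PACI | 98.4 | 558.7 | 98.4 | 535.7 | 99.2 | 541.0 |
| $\hat\mu_{\mathrm{tap}}$ | BACI$_F$ | 94.7 | 399.1 | 95.9 | 402.3 | 94.7 | 402.6 |
| $\hat\mu_{\mathrm{tap}}$ | BACI | 92.1 | 363.1 | 93.3 | 367.2 | 94.8 | 402.8 |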
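All three columns of Table 2 are reported in units of $10^{-3}$. Since $\mathrm{MSE} = \mathrm{bias}^2 + \mathrm{var}$ in natural units, the rescaled relation is $\mathrm{MSE}_{\times 10^{-3}} = \mathrm{bias}_{\times 10^{-3}}^2/1000 + \mathrm{var}_{\times 10^{-3}}$. The short check below applies this to several strongly violated rows copied from the table. The helper name is illustrative.

```python
def mse_from_table(bias, var):
    """MSE (x1e-3) from bias (x1e-3) and variance (x1e-3): bias^2 / 1000 + var."""
    return bias ** 2 / 1000.0 + var

# (bias, var, reported MSE) under strong violation, copied from Table 2
rows = {"mu_B": (1318.8, 2.0, 1741.4), "mu_dr": (1048.0, 5.0, 1103.2),
        "mu_eff": (851.5, 6.6, 731.7), "mu_Bayes:3": (614.0, 10.8, 387.9)}
for name, (bias, var, mse) in rows.items():
    print(name, round(mse_from_table(bias, var), 1), "reported", mse)
```

The recomputed values agree with the reported MSEs up to rounding of the bias. The check also shows that squared bias dominates the MSE of the pooled and naive estimators under strong violation.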

Table 3 reports the properties of the 95% Wald confidence intervals for the regular estimators, the highest posterior density intervals (HPDIs) for the Bayesian estimators, and various adaptive confidence intervals for the non-regular estimator $\hat\mu_{\mathrm{tap}}$. The Bayesian credible intervals are constructed from the posterior samples after burn-in. The confidence intervals (and the point estimates; see Table 2) are not sensitive to the method of estimating the nuisance parameters $(\alpha, \beta)$. For simplicity, we therefore only present the confidence intervals for $\hat\mu_{\mathrm{eff:KH}}$ and $\hat\mu_{\mathrm{tap:KH}}$.

Based on Table 3, $\mathcal{C}^{\mathrm{PACI}}_{\mu_g,1-\alpha}$ tends to overestimate the uncertainty, leading to over-conservative confidence intervals. $\mathcal{C}^{\mathrm{BACI}}_{\mu_g,1-\alpha}$ and $\mathcal{C}^{\mathrm{BACI:F}}_{\mu_g,1-\alpha}$ are less conservative and alleviate the over-coverage issue, so their empirical coverage rates are close to the nominal level in all cases. Moreover, $\mathcal{C}^{\mathrm{BACI}}_{\mu_g,1-\alpha}$ yields narrower intervals than $\mathcal{C}^{\mathrm{BACI:F}}_{\mu_g,1-\alpha}$ by using the double bootstrap procedure to select $v_n$, at the expense of additional computation. When $H_0$ holds, $\mathcal{C}^{\mathrm{BACI}}_{\mu_g,1-\alpha}$ is narrower than the Wald interval for the probability-only estimator $\hat\mu_A$, indicating the advantage of the test-and-pool strategy in this case. When $H_0$ is slightly violated, the benefit is less evident at comparable coverage rates. When $H_0$ is strongly violated, $\mathcal{C}^{\mathrm{BACI}}_{\mu_g,1-\alpha}$ reduces to the Wald confidence interval for $\hat\mu_A$. Lastly, the credible intervals for the Bayesian estimators do not have satisfactory coverage properties, because the model misspecification persists across scenarios. This is in line with the Bernstein-von Mises theorem [65, Chapter 10.2].
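The two summaries in Table 3 can be computed from the Monte Carlo intervals as sketched below. The text does not state how widths are aggregated, so this sketch assumes the average width over the simulated datasets. Coverage is expressed in percent, which matches the ×10^{-2} scale of the CR columns. The helper name is hypothetical.

```python
def coverage_and_width(intervals, truth):
    """Empirical coverage (in %) and average width of Monte Carlo intervals."""
    hits = sum(1 for lo, hi in intervals if lo <= truth <= hi)
    avg_width = sum(hi - lo for lo, hi in intervals) / len(intervals)
    return 100.0 * hits / len(intervals), avg_width
```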

6. A real-data illustration

To demonstrate its practical use, we apply the proposed method to a probability sample from the 2015 Current Population Survey (CPS) and a non-probability sample from the 2015 Behavioral Risk Factor Surveillance System (BRFSS) survey. Note that the BRFSS survey itself is a probability sample. We deliberately discard its sampling weights to recast it as a non-probability sample for illustrating the proposed method.

To apply the proposed method, we use two-phase sampling survey data with sample sizes $n_A = 1000$ and $n_B = 8459$. We focus on two outcome variables of interest: employment (percentages working and retired) and educational attainment (high school or less, h.s.o.l., and college or above, c.o.a.). Both datasets provide measurements on the outcomes of interest and some common covariates. These include age, sex (female or not), race (white and black), origin (Hispanic or not), region (northeast, south, or west), and marital status (married or not). To illustrate the heterogeneity in the study populations, Table 4 contrasts the means of variables from the CPS sample (design-weighted averages) and the BRFSS sample (simple averages). Based on Table 4, the BRFSS sample may not be representative of the target population, so pretesting before pooling is warranted.

Table 4
The covariate means by two samples: CPS sample (a probability sample) and BRFSS sample (a hypothetical non-probability sample).

| Data source | age | %sex | %white | %black | %hispanic | %northeast | %south | %west | %married | %working | %retired | %h.s.o.l. | %c.o.a. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CPS | 47.5 | 56.5 | 81.9 | 11.0 | 13.3 | 18.1 | 37.7 | 24.1 | 52.5 | 58.7 | 13.6 | 39.4 | 30.3 |
| BRFSS | 48.3 | 54.2 | 83.2 | 8.4 | 8.3 | 20.0 | 27.6 | 29.5 | 50.8 | 52.2 | 24.5 | 21.2 | 41.9 |

Table 5 presents the results. For all estimators, we specify the propensity score model to be a logistic regression model with the covariates (all variables excluding the outcome variable). The outcome mean model is likewise a logistic regression model with the covariates. The efficient estimator $\hat\mu_{\mathrm{eff}}$ gains efficiency for all outcomes compared with both $\hat\mu_A$ and $\hat\mu_{\mathrm{dr}}$. However, it may be subject to biases if the non-probability sample does not satisfy the required assumptions. In the test-and-pool analysis, the pretest rejects the use of the non-probability sample for the employment variables "working" and "retired". It accepts the non-probability sample for the education variables "high school or less" and "college or above". Thus, for the employment variables, $\hat\mu_{\mathrm{tap}} = \hat\mu_A$, and for the educational attainment variables, $\hat\mu_{\mathrm{tap}}$ gains efficiency over $\hat\mu_A$. The Bayesian estimators with informative priors 2 and 3 are more efficient than the one with prior 1. However, they still yield larger standard errors than the probability-only estimator $\hat\mu_A$. This is perhaps because the non-probability-based informative priors are biased for the model parameters of the probability sample. From the test-and-pool analysis, the employment rate and the retirement rate in 2015 are 58.7% and 13.6%, respectively. The percentage of the U.S. population with a high school education or less is 39.0%, and the percentage with a college education or above is 31.7%.

Table 5
Estimated population mean (EST), standard errors (SE) and confidence intervals of $\mu_g$ for selected covariates when combining two datasets.

| Estimator | | %working | %retired | %h.s.o.l. | %c.o.a. |
|---|---|---|---|---|---|
| $\hat\mu_A$ | est | 58.7 | 13.6 | 39.4 | 30.3 |
| | se | 1.51 | 1.17 | 1.60 | 1.59 |
| | Wald | (54.8, 62.3) | (11.6, 16.2) | (35.7, 43.0) | (27.2, 33.7) |
| $\hat\mu_{\mathrm{dr}}$ | est | 56.5 | 20.0 | 25.8 | 32.3 |
| | se | 1.03 | 1.24 | 0.93 | 1.20 |
| | Wald | (54.2, 58.8) | (17.9, 22.4) | (24.0, 27.5) | (30.3, 34.5) |
| $\hat\mu_{\mathrm{eff:KH}}$ | est | 56.6 | 17.3 | 26.4 | 32.1 |
| | se | 0.80 | 0.19 | 0.87 | 0.62 |
| | Wald | (54.3, 58.9) | (15.4, 19.6) | (24.6, 28.1) | (30.1, 34.3) |
| $\hat\mu_{\mathrm{Bayes:1}}$ | est | 59.8 | 14.1 | 40.5 | 30.7 |
| | se | 1.97 | 1.37 | 2.00 | 1.84 |
| | HPDI | (56.0, 63.6) | (11.4, 16.8) | (36.6, 44.4) | (27.2, 34.4) |
| $\hat\mu_{\mathrm{Bayes:2}}$ | est | 59.8 | 14.0 | 40.3 | 30.9 |
| | se | 2.01 | 1.33 | 1.92 | 1.84 |
| | HPDI | (56.1, 63.9) | (11.4, 16.4) | (36.4, 44.0) | (27.2, 34.5) |
| $\hat\mu_{\mathrm{Bayes:3}}$ | est | 58.6 | 14.1 | 37.6 | 31.1 |
| | se | 1.94 | 1.30 | 1.92 | 1.76 |
| | HPDI | (54.7, 62.4) | (11.6, 16.7) | (33.7, 41.4) | (27.7, 34.7) |
| $\hat\mu_{\mathrm{tap:KH}}$ | est | 58.7 | 13.6 | 39.0 | 31.7 |
| | se | 1.51 | 1.17 | 1.55 | 0.64 |
| | BACI | (54.9, 62.6) | (11.6, 15.8) | (35.8, 42.6) | (31.0, 33.6) |


7. Concluding remarks

When utilizing non-probability samples, researchers often assume that the observed covariates contain all the information needed to recover the sampling mechanism. However, this assumption may be violated, and hence the integration of probability and non-probability samples is subject to biases. In this paper, we propose the test-and-pool estimator. It first scrutinizes the assumption required for combining by hypothesis testing, and then carefully combines the probability and non-probability samples by a data-driven procedure to achieve the minimum mean squared error. In the theoretical development, we treat $(\Lambda, c_\gamma)$ jointly as two tuning parameters and establish the asymptotic distribution of the pretest estimator without taking their uncertainties into account. The non-regularity of the pretest estimator invalidates conventional methods for generating reliable inferences. To address this issue, the proposed adaptive confidence interval is designed to handle the non-smoothness of the pretest estimator and ensure uniform validity of inferences. It is important to note, however, that this approach may yield only a small gain in the precision of the confidence interval. This holds even though the point estimator can have a significant gain in MSE compared with the estimator based only on the probability sample. Further research is required to develop a valid post-testing confidence interval that is less conservative.

Pretest estimation is the norm rather than the exception in applied research, so the theory we have established is highly relevant to applied researchers. The proposed framework can be extended in the following directions. First, in this work, we study the implications of pretesting for estimation and inference under a single pretest. In practice, researchers may engage in multiple pretesting. For example, in the data integration context, one can encounter multiple data sources [51, 71, 16], requiring pretesting of the comparability of each data source with the benchmark. Multiple pretesting alters the current asymptotic results and is an important topic for future research. Second, our framework considers a fixed number of covariates. In reality, however, practitioners often collect a rich set of auxiliary variables, rendering variable selection imperative [75]. Developing a valid statistical framework to deal with the issues arising from selective inference is a challenging but important topic for further investigation. Third, small area estimation has received considerable attention in the data integration context [44, 28, 25]. The typical estimator in small area estimation is a weighted average of a design-based estimator and a model-based synthetic estimator. [5] discussed the trade-off between the efficiency gain from invoking model assumptions and the risk that these assumptions do not hold. Thus, pretesting can be potentially useful for small area estimation, which we will investigate in the future.

Appendix A: Proofs

A.1. Regularity conditions

Let $\mathcal{F}_N = \{V_i = (X_i^\top, Y_i)^\top : i \in \mathcal{U}\}$. Let $\Phi_A(V, \delta_A; \mu)$ and $\Phi_B(V, \delta_A, \delta_B; \mu, \tau)$ be $l$-dimensional estimating functions for the parameter $\mu_g \in \mathbb{R}^l$ when using the probability sample and the combined samples, respectively. Let $\Phi_\tau(V, \delta_A, \delta_B; \tau)$ be the $k$-dimensional estimating equations for the nuisance parameter $\tau_0 \in \mathbb{R}^k$. We then construct one stacked estimating equation system $\Phi(V, \delta_A, \delta_B; \theta)$ with $\theta = (\mu_A^\top, \mu_B^\top, \tau^\top)^\top$ and $\dim(\theta) = 2l + k$. To establish our stochastic statements, we require the following regularity conditions.

Assumption A.1. The following regularity conditions hold.

a) The parameter $\theta = (\mu_A^\top, \mu_B^\top, \tau^\top)^\top$ belongs to a compact parameter space $\Theta$ in $\mathbb{R}^{2l+k}$.

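b) There exists a unique solution $\theta_0 = (\mu_{A,0}^\top, \mu_{B,0}^\top, \tau_0^\top)^\top$ lying in the interior of the compact space $\Theta$ such that

$$E\{\Phi_A(V, \delta_A; \theta_0)\} = E\{\Phi_B(V, \delta_A, \delta_B; \theta_0)\} = E\{\Phi_\tau(V, \delta_A, \delta_B; \theta_0)\} = 0.$$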

c) $\Phi(V, \delta_A, \delta_B; \theta)$ is integrable with respect to the joint distribution of $(V, \delta_A, \delta_B)$ for all $\theta$ in a neighborhood of $\theta_0$.

d) The first two partial derivatives of $E\{\Phi(V, \delta_A, \delta_B; \theta)\}$ and their empirical estimators are invertible for all $\theta$ in a neighborhood of $\theta_0$.

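e) For all $j, k, l \in \{1, \ldots, 2l+k\}$, there is an integrable function $B(V, \delta_A, \delta_B)$ such that

$$\big|\partial^2\Phi_j(V, \delta_A, \delta_B; \theta)/\partial\theta_k\partial\theta_l\big| \le B(V, \delta_A, \delta_B), \qquad E\{B(V, \delta_A, \delta_B)\} < \infty,$$

for all $\theta$ in a neighborhood of $\theta_0$ almost surely.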

f) $\{V_i : i \in \mathcal{U}\}$ is a set of i.i.d. random variables such that $E\{|\Phi(V, \delta_A, \delta_B; \theta)|^{2+\delta}\}$ is uniformly bounded for $\theta$ in a neighborhood of $\theta_0$.

g) The sample sizes $n_A$ and $n_B$ are of the same order of magnitude, i.e., $n_A = O(n_B)$. The sampling fractions for both Samples A and B are negligible, i.e., $n/N = o(1)$, where $n = n_A + n_B$.

h) There exist $C_1$ and $C_2$ such that $0 < C_1 \le N\pi_{A,i}/n_A \le C_2$ and $0 < C_1 \le N\pi_{B,i}/n_B \le C_2$ for all $i \in \mathcal{U}$.

Assumption A.1 a)-e) are typical finite-moment conditions that ensure the consistency of the solution to the estimating functions [49, Appendix B], [64, Section 3.2], [9, page 293] and [67, Appendix C]. Assumption A.1 f) is required to obtain the asymptotic normality of $\hat\mu_g$ under the superpopulation model. Assumption A.1 g) states that the two sample sizes are of the same order and that the sampling fraction is negligible. This is helpful for subsequent variance estimation and allows us to use $O(n_A^{-1/2})$, $O(n_B^{-1/2})$ and $O(n^{-1/2})$ interchangeably. Assumption A.1 h) implies that the inclusion probabilities for Samples A and B are of order $n/N$, which is necessary to establish their root-$n$ consistency.

It is noteworthy that in Assumption 2.1, the asymptotic normality is established for the design-weighted estimators given the finite population $\mathcal{F}_N$. Here, we extend the conditional normality to an unconditional one, which averages over all possible finite populations satisfying Assumption A.1 (f). The following lemma plays a key role in establishing the stochastic statements [24, Theorem 1.3.6].


Lemma A.1. Under Assumption 2.1 and Assumption A.1 (f), let $\{\mathcal{F}_N\}$ be a sequence of finite populations and let $A_N$ be a sample of size $n_N$ selected from the $N$th population by a PR design. Assume that

$$\lim_{N\to\infty} n_N = \infty, \qquad \lim_{N\to\infty} (N - n_N) = \infty.$$

We know that the design-weighted estimator $\hat\mu_g$ and the finite-population estimator $\mu_g$ are both asymptotically normally distributed such that

$$\hat\mu_g \mid \mathcal{F}_N \overset{\cdot}{\sim} N(\mu_g, V_1), \qquad \mu_g \overset{\cdot}{\sim} N(\mu_{g,0}, V_2),$$

where $\overset{\cdot}{\sim}$ denotes the asymptotic distribution. Then, $\hat\mu_g - \mu_g$ is also asymptotically normal.

By Lemma A.1, and because the sampling fraction is negligible, the limiting variance of $n_N^{1/2}(\mu_g - \mu_{g,0})$ as $N \to \infty$ is 0. This indicates that the intermediate step of generating the finite population is of little significance.
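A minimal worked illustration of why this intermediate step vanishes follows. Suppose, as an assumption made only for this example, that $\mu_g = N^{-1}\sum_{i=1}^N Y_i$ is a finite-population mean of i.i.d. superpopulation draws with mean $\mu_{g,0}$ and finite variance $\sigma^2$. Then

$$\operatorname{Var}\{n_N^{1/2}(\mu_g - \mu_{g,0})\} = n_N \cdot \frac{\sigma^2}{N} = \frac{n_N}{N}\,\sigma^2 \longrightarrow 0 \quad \text{when } n_N/N = o(1).$$

By Chebyshev's inequality, $n_N^{1/2}(\mu_g - \mu_{g,0}) = o_p(1)$. Hence $n_N^{1/2}(\hat\mu_g - \mu_{g,0})$ differs from the design-based term $n_N^{1/2}(\hat\mu_g - \mu_g)$ only by an $o_p(1)$ remainder.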

A.2. Proof of Lemmas 2.1 and 3.1

In the general case, we begin by investigating the statistical properties of

$$\Phi_{A,n}(\mu_A, \tau) = n^{1/2}N^{-1}\sum_{i=1}^N \Phi_A(V_i, \delta_{A,i}; \mu_A, \tau)$$

and

$$\Phi_{B,n}(\mu_B, \tau) = n^{1/2}N^{-1}\sum_{i=1}^N \Phi_B(V_i, \delta_{A,i}, \delta_{B,i}; \mu_B, \tau).$$

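First, to simplify notation, let

$$\dot\Phi_A(V, \delta_A; \mu, \tau) = \partial\Phi_A(V, \delta_A; \mu, \tau)/\partial\mu, \qquad \dot\Phi_B(V, \delta_A, \delta_B; \mu, \tau) = \partial\Phi_B(V, \delta_A, \delta_B; \mu, \tau)/\partial\mu,$$

$$\phi_{B,\tau}(V, \delta_A, \delta_B; \mu, \tau) = \partial\Phi_B(V, \delta_A, \delta_B; \mu, \tau)/\partial\tau, \qquad \phi_\tau(V, \delta_A, \delta_B; \tau) = \partial\Phi_\tau(V, \delta_A, \delta_B; \tau)/\partial\tau.$$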

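By the Taylor expansion of $\Phi_{B,n}(\hat\mu_B, \hat\tau)$ at $(\mu_g, \tau_0)$, we have

$$\begin{aligned} 0 = \Phi_{B,n}(\hat\mu_B, \hat\tau) &= n^{1/2}N^{-1}\sum_{i=1}^N \Phi_B(V_i, \delta_{A,i}, \delta_{B,i}; \mu_g, \tau_0) \\ &\quad + n^{1/2}N^{-1}\sum_{i=1}^N \phi_{B,\tau}(V_i, \delta_{A,i}, \delta_{B,i}; \mu_B^*, \tau^*)(\hat\tau - \tau_0) \\ &\quad + n^{1/2}N^{-1}\sum_{i=1}^N \dot\Phi_B(V_i, \delta_{A,i}, \delta_{B,i}; \mu_B^*, \tau^*)(\hat\mu_B - \mu_g), \end{aligned} \qquad (A.1)$$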

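for some $(\mu_B^*, \tau^*)$ lying between $(\hat\mu_B, \hat\tau)$ and $(\mu_g, \tau_0)$, which leads to

$$\begin{aligned} -n^{1/2}N^{-1}\sum_{i=1}^N \dot\Phi_B(V_i, \delta_{A,i}, \delta_{B,i}; \mu_B^*, \tau^*)(\hat\mu_B - \mu_g) &= n^{1/2}N^{-1}\sum_{i=1}^N \Phi_B(V_i, \delta_{A,i}, \delta_{B,i}; \mu_g, \tau_0) \\ &\quad + n^{1/2}N^{-1}\sum_{i=1}^N \phi_{B,\tau}(V_i, \delta_{A,i}, \delta_{B,i}; \mu_B^*, \tau^*)(\hat\tau - \tau_0). \end{aligned} \qquad (A.2)$$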

Also, under Assumption A.1 a), b) and c), by the Taylor expansion, we have

$$n^{1/2}(\hat\tau - \tau_0) = -\Big\{\frac{1}{N}\sum_{i=1}^N \phi_\tau(V_i; \tau_0)\Big\}^{-1} \times n^{1/2}N^{-1}\sum_{i=1}^N \Phi_\tau(V_i, \delta_{A,i}, \delta_{B,i}; \tau_0) + o_{\zeta\text{-p-np}}(1), \qquad (A.3)$$

as $\hat\tau \to \tau_0$. Also, under Assumption A.1 (e), we know that

N

−1

N

i=1

˙

Φ

A

(V

i

; μ

∗

A

, τ

∗