Air Force Institute of Technology Air Force Institute of Technology

AFIT Scholar AFIT Scholar

Theses and Dissertations Student Graduate Works

3-2023

Analysis and Optimization of Contract Data Schema Analysis and Optimization of Contract Data Schema

Franklin Sun

Follow this and additional works at: https://scholar.a6t.edu/etd

Part of the Databases and Information Systems Commons, and the Operational Research Commons

Recommended Citation Recommended Citation

Sun, Franklin, "Analysis and Optimization of Contract Data Schema" (2023).

Theses and Dissertations

.

7014.

https://scholar.a6t.edu/etd/7014

This Thesis is brought to you for free and open access by the Student Graduate Works at AFIT Scholar. It has been

accepted for inclusion in Theses and Dissertations by an authorized administrator of AFIT Scholar. For more

information, please contact AFIT[email protected].

ANALYSIS AND OPTIMIZATION OF CONTRACT DATA SCHEMA

THESIS

Franklin Sun, Second Lieutenant, USAF

AFIT-ENS-MS-23-M-155

DEPARTMENT OF THE AIR FORCE

AIR UNIVERSITY

AIR FORCE INSTITUTE OF TECHNOLOGY

Wright-Patterson Air Force Base, Ohio

DISTRIBUTION STATEMENT A

APPROVED FOR PUBLIC RELEASE; DISTRIBUTION UNLIMITED

The views expressed in this thesis are those of the author and do not reflect the official policy or

position of the United States Air Force, DoD, or the United States Government. This material is

declared a work of the U.S. Government and is not subject to copyright protection in the United

States.

AFIT-ENS-MS-23-M-155

ANALYSIS AND OPTIMIZATION OF CONTRACT DATA SCHEMA

THESIS

Presented to the Faculty

Department of Operational Sciences

Graduate School of Engineering and Management

Air Force Institute of Technology

Air University Air Education and Training Command

In Partial Fulfillment of the Requirements for the

Degree of Master of Science in Data Science

Franklin Sun, BS

Second Lieutenant, USAF

March 2023

DISTRIBUTION STATEMENT A.

APPROVED FOR PUBLIC RELEASE; DISTRIBUTION UNLIMITED.

AFIT-ENS-MS-23-M-155

ANALYSIS AND OPTIMIZATION OF CONTRACT DATA SCHEMA

Franklin Sun, BS

Second Lieutenant, USAF

Committee Membership:

Major Michael J. Garee, PhD

Chair

Dr. Nathan B. Gaw

Member

iv

AFIT-ENS-MS-23-M-155

Abstract

The management, development, and growth of U.S Air Force assets demand extensive

organizational communication and structuring. These interactions yield substantial amounts of

contracting and administrative information. Air Force Life Cycle Management Center’ Data

Analytics Research Team (DART) has aggregated and labeled the text content of over 4 million

such contracts as a means towards obtaining valuable insights on Department of Defense

resource usage. This set of contracting data is largely not optimized for backend service in an

analytics environment. To this end, the following research evaluates the efficiency and

performance of various data structuring methods. Evaluated designs include a baseline

unstructured schema, a Data Mart schema, and a snowflake schema. Overall design success

metrics include ease of use by end users, database maintainability, and overall computational

performance. Ease of use and maintainability are determined by examining the structural

complexity of each schema. The resource demands raised by a set of benchmark queries are used

to compare computational performance across schema. Results aggregated from this process

suggest that the Data Mart schema is the strongest and most balanced design for this application.

v

Acknowledgements

I’d like to sincerely thank to my advisor (Major Michael J. Garee, PhD) and reader (Dr.

Nathan B. Gaw) for advising me during the thesis writing process. I would also like to take this

opportunity to thank the American taxpayers for everything they do.

vi

Table of Contents

Abstract ................................................................................................................................... iv

Acknowledgements ................................................................................................................. v

List of Figures ....................................................................................................................... viii

List of Tables .......................................................................................................................... ix

I. Introduction ................................................................................................................................ 1

Background .............................................................................................................................. 1

Problem Statement ................................................................................................................... 1

Research Objective .................................................................................................................. 2

Scope ....................................................................................................................................... 2

Paper Overview ....................................................................................................................... 3

II. Literature Review ....................................................................................................................... 4

Chapter Overview .................................................................................................................... 4

Natural Language Processing .................................................................................................. 4

Named Entity Recognition ...................................................................................................... 5

Contracting Information Extraction ......................................................................................... 7

Database Design Background .................................................................................................. 7

Database Schema Considerations ............................................................................................ 8

Normalization ........................................................................................................................ 10

Schema Performance Comparison ......................................................................................... 12

Evaluating Database Complexity .......................................................................................... 13

Effects of Schema Complexity on Query Construction ........................................................ 14

Data Warehouses ................................................................................................................... 15

Inmon and Kimball Methodologies ....................................................................................... 16

Postgres Background and Discussion .................................................................................... 18

R Shiny .................................................................................................................................. 19

III. Methodology ........................................................................................................................... 20

Chapter Overview .................................................................................................................. 20

Research Background ............................................................................................................ 20

Data Warehouse Design Framework ..................................................................................... 26

Effects of Structural Complexity on Human Performance and Longevity ........................... 33

Evaluation of Structural Complexity ..................................................................................... 34

vii

Evaluation of Computational Performance ........................................................................... 35

Novel Data Structure ............................................................................................................. 38

Holistic Schema Evaluation................................................................................................... 39

Chapter Summary .................................................................................................................. 40

IV. Results and Analysis ............................................................................................................... 41

Chapter Overview .................................................................................................................. 41

Evaluated Database Schemas ................................................................................................ 41

Structural Complexity Evaluation and Analysis ................................................................... 43

Benchmark Query Evaluation and Analysis .......................................................................... 47

Holistic Scoring of Evaluated Schemas ................................................................................. 55

Chapter Summary .................................................................................................................. 56

V. Conclusions and Recommendations ........................................................................................ 57

Chapter Overview .................................................................................................................. 57

Research Findings.................................................................................................................. 57

Recommendations ................................................................................................................. 59

Limitations ............................................................................................................................. 59

Future Work ........................................................................................................................... 60

Summary ................................................................................................................................ 61

Appendix A: Data Exploration SQL Queries ............................................................................... 62

Appendix B: Sample Data From dm_acft_acty ............................................................................ 64

Appendix C: Schema Complexity Metric SQL Queries ............................................................... 66

Appendix D: Benchmark SQL Queries ........................................................................................ 67

Appendix E: Tabulated Benchmark Query Runtimes .................................................................. 70

Bibliography ................................................................................................................................. 71

viii

List of Figures

Figure 1: NER Visualization created using NLTK and SpaCy (Li, 2018) ..................................... 6

Figure 2: Graphic Summarizing First Three Normal Forms (Hoffer, 2010) ................................ 11

Figure 3: Comparison of Schema Performance in Prevailing Literature ...................................... 13

Figure 4: Example of Star Schema (Smallcombe, 2019) .............................................................. 27

Figure 5: Example of Snowflake Schema (Smallcombe, 2019) ................................................... 28

Figure 6: Entity Relationship Diagram of the Staging stg_acft_acty from AFDart2 ................... 31

Figure 7: Entity Relationship Diagram of the Data Mart ready dm_acft_acty from AFDart2 ..... 32

Figure 8: Proposed Entity Relationship Diagram of the sf_acft_acty Snowflake Schema ........... 42

Figure 9: Example of pgAdmin EXPLAIN/ANALYZE’s Query Plan Output (Query 3) ........... 50

Figure 10: Example of pgAdmin EXPLAIN/ANALYZE’s Per Node Statistics (Query 3) ......... 51

Figure 11: Graphical Comparison of stg_acft_acty and dm_acft_acty Resource Usage .............. 52

ix

List of Tables

Table 1. Comparison of Databases and Data Warehouses (Naeem 2022) ................................... 16

Table 2. Comparison of Kimball Star and Snowflake Schema (Smallcombe 2022) .................... 18

Table 3. Current Development Environment Specifications ........................................................ 21

Table 4. AFDart1 Data Schema Size Overview (Initial State CAO 27 OCT 22) ......................... 23

Table 5. AFDart2 Data Schema Size Overview (Current Development CAO 2 JAN 23) ........... 25

Table 6. AFDart1 and AFDart2 Data Schema Dimensionality Comparison (CAO 2 JAN 23) ... 25

Table 7. Selected Benchmark Query Descriptions ....................................................................... 37

Table 8. Category Weights for Holistic Schema Evaluation ........................................................ 40

Table 9. Raw Structural Complexity Score Component (AFDart2, CAO 30 DEC 22) ............... 43

Table 10. Truncated Complexity Score Components (AFDart2 CAO 30 DEC 22) ..................... 44

Table 11. Schema Suitability Findings for Human Performance ................................................. 46

Table 12. Schema Suitability Findings for Direct Database Maintenance ................................... 47

Table 13. Benchmark Query Runtime Comparison ...................................................................... 48

Table 14. Tabulated Comparison of stg_acft_acty and dm_acft_acty Resource Usage ............... 51

Table 15. Schema Suitability Findings for Direct Database Maintenance ................................... 55

Table 16. Point Values Awarded by Category .............................................................................. 56

Table 17. Overall Schema Performance Evaluation Under Original Weighing ........................... 56

1

ANALYSIS AND OPTIMIZATION OF CONTRACT DATA SCHEMA

I. Introduction

Background

Each year, the Department of Defense (DoD) allocates billions of taxpayer dollars

towards various acquisitions and development projects across the country. The resource

expenditure and manpower involved in these endeavors demand a high degree of documentation

and communication, resulting in a vast quantity of contracts.

At present, Air Force Life Cycle Management Center’s (AFLCMC) Data Analytics

Resource Team (DART) have aggregated over 4 million Air Force contracts across a diverse

range of DoD operations, enterprises and ventures. Collectively, these contracts represent nearly

50 million pages of organizational communications. DART is currently applying natural

language processing techniques to convert raw PDF and image files into an interactive database

(Butcher, 2021). Additional preprocessing of this data is being undertaken using Named Entity

Recognition models, allowing for improved isolation of various contract pricing, serial numbers,

and inventory item identifiers (Haberstich, 2021). The resulting contract data from DART’s

extraction efforts are compiled in a Postgres database. Ultimately, this database will serve as the

backend for an analytical dashboard facilitating analysis of Air Force finances and operations.

Problem Statement

In support of future analysis efforts, DART is attempting to restructure the aggregated

information using standard industry practices for supporting data analytics. DART is seeking to

evaluate the performance of their target database design relative to an unstructured baseline.

Accordingly, the performance of their restructured schema will be compared against the original

raw extracted data schema. Various alternative candidate schemas for data warehousing will also

be evaluated to further explore the schema design solution space. Schema evaluation will utilize

2

structural complexity and query speed as key comparative metrics. A stronger understanding of

data schema effects on backend performance offers the potential for reduced computational and

manpower resources during data analytics, improving the Air Force’s organizational efficiency.

Research Objective

The primary research objective is to compare the suitability of various data schema for

usage as a backend for an analytical pipeline. A comparative evaluation of the unorganized

schema, DART restructured data warehouse schema, and novel alternate schema will be

undertaken. This analysis will contain the following areas of focus:

1. Structural complexity of each tested database schema.

a. Impact on end user ease of use and human performance

b. Impact on database longevity and upkeep

2. Performance speed under a range of standardized evaluation queries and transactions.

Results from these areas of evaluation will grant DART insights and guidance on their current

and future data structuring activities.

Scope

The following research examines the suitability and performance of DART’s efforts to

structure contracting text data. As of the writing of this thesis, only one schema has been

prepared for usage in a Data Mart environment. Accordingly, the following analysis is primarily

focused on one schema and its corresponding unstructured baseline. Limitations in development

environment resources and permissions prevented us from creating our own comparative data

schemas. The outlined methodology is intended to be generalizable and may be carried out

shortly after any future contracting schema restructuring efforts by DART. However, it should be

3

cautioned that the use cases and parameters for deployment environments are not in

consideration during this research due to the developmental nature of the dataset.

Paper Overview

Background information and literature on database structuring and evaluation is

presented in Chapter II. Detailed information concerning the methodology and execution of this

research is contained in Chapter III. Research results are tabulated and discussed in detail in

chapter IV. Research findings, limitations, and future work are summarized in Chapter V.

4

II. Literature Review

Chapter Overview

The review conducted below serves to explore current literature in the field of natural

language processing, named entity recognition, and database design principles. These subjects

and their corresponding publications were selected based on their relevance within the context of

a large government contract dataset. Additional remarks concerning AFLCMC’s development

environment and infrastructure are also included in this chapter.

Natural Language Processing

Natural language processing (NLP) is defined as the field of machine learning dedicated

towards automatically extracting and interpreting natural language data. NLP applications

include (but are not limited to) document summarization, machine translation, and speech

recognition (Stubbs & Pustejovsky, 2013). These applications are dependent on organized and

labelled natural language datasets known as corpora. A given corpus can often be further broken

down into categories, with each category offering pattern insights into specific text formats

(informative reporting, general fiction, legal documentation, etc.) – an appropriate corpus is

ultimately used to train NLP models. Accordingly, the content of these categories as well as the

quality of their labels are essential for maximizing the accuracy of a given NLP objective (Leech,

1991).

Raw contracting data must undergo extensive preprocessing before conducting any NLP

driven analysis. The first of these steps is the tokenization of a given natural language dataset.

Tokenization is the process in which a document is broken down into tokens, where a token is a

5

smaller information unit that allows meaning to be more easily accessed during natural language

processing. The size and complexity of these tokens vary depending on the target application.

For example, a book could be tokenized into individual sentences. In contrast, a smaller body of

information such as a chapter or paragraph may instead be tokenized into individual words

(Banerjee, 2020).

At this point, removal of punctuation and stop words is quite straightforward. Stop words

are defined as high frequency words or phrases deemed to have little impact on the meaning of a

given document (‘a’, ‘the’, ‘am’, etc.). More often than not, these terms simply introduce

unnecessary noise and complexity in a given dataset (Banerjee, 2020). The remaining tokens are

then lemmatized, a process that reduces a word to its base form (e.g., lemmatizing ‘playing’ will

yield the reduced form ‘play’). This step further reduces dataset complexity and noise. Third-

party software libraries such as Wordnet are often used towards this end. At this point, the

remaining tokens undergo part of speech tagging in an effort to introduce additional lexical

context (Bird, 2009).

Named Entity Recognition

Named entity recognition (NER) is a crucial component of NLP and is a supervised

machine learning technique used to isolate user-defined terms of interest. These terms are then

further sorted into user-defined categories, enabling the extraction of a given document’s most

pertinent details (Roldos, 2020).

Open-source APIs and libraries such as the Stanford Named Entity Recognizer (SNER),

Scikit-Learn, SpaCy, and Natural Language Toolkit (NLTK) are most frequently used to achieve

this objective. These open-source libraries often come preloaded with standard NER models,

6

allowing commonly recognized categories to be isolated (a visualization of this labeling process’

end state is presented in Figure 1). However, objectives demanding the extraction of uncommon

or specific entity types require training customized NER models (Shrivarsheni, 2020).

Figure 1: NER Visualization created using NLTK and SpaCy (Li, 2018)

At this time, it should be noted that the development of a custom NER model is quite

time and labor intensive, as it requires the creation of a custom corpus (Pustejovsky & Stubbs,

2013). Within the DoD, there exist a number of unique entities and formats, each of which

require manual labeling. Entities specific to the field of government contracting include CAGE

(Commercial and Government Entity) codes, Contract Line Item Numbers (CLINs), and

National Stock Numbers (NSNs). Fortunately, natural language annotation toolkits (such as

PyLighter) have been used in conjunction with SpaCy to create DART contract-specific NER

models (Haberstich, 2021). These libraries and techniques are typically combined with regular

expressions to preprocess and prepare DART’s contracting data.

7

Contracting Information Extraction

Research applying NLP methods towards DART contract data has been recently

underway. Butcher (2021) applied RegEx (regular expression) and standard NER models to

extract information from government contracts. Butcher found that text mining and RegEx were

highly accurate and computationally efficient, but only in situations where desired entity data

was in a standard format and location. In contrast, standard NER models yielded notably higher

degrees of flexibility, but at a substantially increased computational cost. Moreover, application

of existing NER models simply returned all organizations and identification numbers – no

refinement or discrimination was possible. Ultimately, Butcher recommended the development

of a custom NER model tailored to DART’s contracting data to obtain flexible and accurate

results.

Butcher’s work was directly continued by Haberstich (2021). Here, Haberstich developed

a custom NER model using the open-source Python library SpaCy. This custom model was

found to yield significant improvements in identifying CAGE codes, CLINs, and Part Numbers.

No significant improvements were found in the identification of NSNs.

Database Design Background

The entity isolation and identification outlined in the previous sections ultimately serves

the end objective of facilitating efficient queries and analytics on DART contracting data.

Meeting this goal requires the extracted information to be organized into a database. The method

of organizing data can be defined a databases’ schema. At the highest level, database schema can

be classified as either a relational database or NoSQL database.

8

Relational databases are the most used class of database schema and are characterized by

a series of interconnected data tables. Tables add dimensionality and information to each of their

datapoints through a series of attributes. Engines and languages implementing relational schema

include SQL Server, MySQL, and PostgreSQL (Kolonko 2018). In contrast, NoSQL database

schema (also known as distributed or non-relational databases) do not aggregate data into tables.

Instead, these schemas most frequently structure data using graph theory, key-value pairs,

column sections, or document storage (Gupta, 2017).

Database Schema Considerations

Oftentimes, normalization techniques are applied to optimize and strengthen a relational

database’s structure. The extent and restrictiveness of these normalization processes vary widely.

However, the general goal of normalization remains the removal and resolution of multivalued

attributes, partial functional dependencies, and transitive dependencies (Hoffer, 2011). Effective

application of this process reduces redundancy and anomalies during data transactions, offering

the potential to decrease overall query runtime.

When creating a relational schema, data engineers should ensure that data transactions

adhere to ACID (Atomicity, Consistency, Isolation, Durability) principles. Atomic transactions

can only fully fail or succeed so that failed transactions do not alter any data. Consistency

indicates that transactions do not pose a danger to the underlying structure of the database – all

data is immediately consistent. Isolation dictates that there exists no interaction between

transactions and that only one transaction is carried out at a time. Durability ensures that

completed transactions are immediately committed and remain in place even in the event of a

subsequent network or power failure (Hoffer, 2011).

9

In contrast, NoSQL data schema follows a less restrictive set of guidelines known as the

BASE (Basically Available, Soft State, Eventually Consistent) principles. Basic availability

indicates that aggregated data is spread across multiple data storage systems. Effectively, this

principle emulates ACID’s durability principle through large scale redundancy. Soft state

indicates that data consistency is not inherently guaranteed by the database, shifting this task to

the database’s developers and maintainers. Finally, eventual consistency ensures that data

eventually converges to a consistent state across all storage nodes. There is no requirement for

when to reach this consistent state. In practice, a number of well-designed NoSQL databases

mostly satisfy the more restrictive ACID criteria (Chapple, 2020).

Relational database schemas are best suited for structured datasets, while the less

restrictive NoSQL database schemas are best suited for semi-structured and non-structured

datasets. Structured data can be defined as data adhering to a cleanly organized and readable

format, often presented in a row and column format. In contrast, unstructured data represents raw

and complex data with no consistent formatting. Semi-structured data represents data that

generally follows a set of data rules and characteristics. However, variability and inconsistencies

may still be found (Naeem, 2020).

We may consider DART’s contract database in its raw and original format to be a case

study in unstructured data. A wide range of information is embedded across millions of PDF

files. Ideally, text mining and NER techniques can be applied to perfectly extract and standardize

key data from each of the contracts. This hypothetical outcome would lend itself towards a

structured database schema. However, the prior work carried out by Butcher (2021) and

Haberstich (2021) have demonstrated that there remains a degree of imperfection and variability

10

in the extract of key contract information. Thus, we may realistically classify the format of a

postprocessed DART contracting database as semi-structured.

Normalization

Normalization can be best understood as a series of increasingly restrictive guidelines for

database attribute relationships. This process can be understood as a means to reduce data

anomalies and redundancies, offering the potential for improved transaction execution time and

consistency. The first three normal forms focus on analyzing and streamlining functional

dependencies, or the scenarios where the value of one attribute is dependent on the value another

(Hoffer, 2010).

A database may be considered to be in first normal form if there are no table objects

contained within other tables or duplication. Table cells contain a single value and stored records

are unique. Furthermore, values in a given table are all dependent on one or more primary keys

(Hoffer, 2010).

Second normal form guidelines require the further removal of partial functional

dependencies. A partial functional dependency may be defined as a situation where non-key

attributes are only dependent on a subcomponent of a defined table primary key (Hoffer 2010).

Second normal form is often achieved by partitioning data across multiple data tables.

A database in third normal form further removes any transitive functional dependencies.

These transitive functional dependencies may be defined as situations where one non-key

attribute is dependent on the values of another non-key attribute (Hoffer, 2010). Third normal

form can also be obtained by the partitioning of data tables.

11

Errors that may potentially arise from data tables not in being normal form include (but

are not limited to) insertion, deletion and update anomalies. Insertion anomalies encapsulate

situations where row insertions are duplicated, incomplete, or not possible due to multiple partial

dependencies within the same table. Deletion anomalies describe situations where the removal

of a given row in one table eliminates access to unrelated information elsewhere. Update

anomalies refer to situations where update transactions fail to fully propagate or are made

notably less efficient (Hoffer, 2010). A graphical representation of the steps required to convert a

multivalued table to third normal form is presented in Figure 2.

Figure 2: Graphic Summarizing First Three Normal Forms (Hoffer, 2010)

12

Schema Performance Comparison

As previously outlined, the more intuitive nature of relational database schemas lead to a

more user-friendly environment. Furthermore, the more rigorous ACID standards for relational

schemas demand near-perfect or perfect transaction accuracy. In contrast, the more distributed

nature of NoSQL schema yields an increased potential for data inconsistencies. It should be

noted that the time and memory complexity of data transactions for both relational and NoSQL

databases are both linear (O𝑘𝑛). However, computational demands increase faster with scale

in relational schemas than NoSQL schemas (that is to say, K

Relational

> K

NoSQL

). This

performance gap in large, unstructured datasets can further be widened by selecting the right

NoSQL schema for the job. For instance, document-based NoSQL platform MongoDB

outperforms native XML NoSQL platform eXist (Sánchez-de-Madariaga, 2017).

A meta-analysis examined a wide range of literature in database schema performance.

Here, performance parameters were defined by query latency, writing latency, volume, accuracy,

and scalability. Query and writing latency refer the mean time required to retrieve or write data

to a given database. Volume refers to the potential for parallel processing of data transactions

within a given database. Accuracy indicates the robustness of the database against data

inconsistency and duplication. Scalability describes the rate of change in the other four

performance metrics with respect to database size and complexity. The proportion of times each

given schema outperformed the other across a range standardized research datasets is aggregated

in Figure 3. This data visualization was generated from data from Grove, 2021.

13

Figure 3: Comparison of Schema Performance in Prevailing Literature

Evaluating Database Complexity

An information system’s ease of access, development, and maintenance are tied to its

scale and complexity. Accordingly, an objective measure should be applied towards DART’s

database schema as a means of quantifying its respective complexities. These complexity metrics

serve to expand on the usage of classical normalization forms for the quality assessment of

relational schema. Examples of individual relational database metrics in current literature include

the number of attributes across schema tables, number of foreign keys within a schema, the depth

referential tree of the schema, and the cohesion of the schema (Calero, 2001). A schema’s depth

referential tree refers to the ‘length of longest referential path in a given schema, or the longest

series of connections between a schema’s tables. Cyclical references are counted within this

total, but only once. Schema cohesion refers to the squared summation of the number of tables

contained within each unique subgraph of a schema.

14

Calero’s publication suggests that the number of foreign keys represents the most direct

measure of a relational database’s complexity. Depth referential trees are described as a measure

of database length (depth), while the number of attributes and cohesion are described as a

measure of size (breadth). Calero cautions that these metrics should not be considered in a

vacuum, and that the overall purpose and context of each database should also be factored into

complexity analysis (Calero, 2001).

A later publication acknowledges the need to balance the consideration of multiple

database schema properties during the development of a complexity metric (Pavlic, 2008). Pavlic

et al. proposes assigning a weight for each individual relation in a schema (termed W). This

value is set by the formula 𝑊 𝐴 𝐾 𝐼 𝐹. Here, 𝐴 represents the number of attributes,

𝐾 represents the number of primary and secondary keys, 𝐼 represents the number of additional

non-unique indices (note: unique indices acting as keys are counted in 𝐾), and 𝐹 is the number of

foreign keys. The summation of each relation’s weight 𝑊 is termed 𝐶 and reflects the total

database complexity. This metric considers the total number of relations and several potential

complexity factors holistically. Furthermore, these metrics may be garnered through a series of

relatively straightforward SQL queries in any modern database management system (Pavlic,

2008).

Effects of Schema Complexity on Query Construction

Research within an educational context has indicated that increasing a database’s logical

complexity results in a decreased rate of successful SQL query formulation. The rate of

complications in SQL queries was also found to increase, where complications are defined as

syntactical inefficiencies that negatively impact query readability and performance without

15

influencing final data output (Taipalus 2020). Subjects in this study were database programing

students, who were instructed to complete a series of query construction tasks on schema of

increasing complexity. A weekly time limit was imposed for each tested schema, however there

was no limit on the number of attempts to solve each task. The metric used to compare the

logical complexity of tested relational schema is the summed weight method outlined in Pavlic’s

2008 publication (outlined in the preceding subsection). An increase in schema complexity was

found to yield a commensurate increase in overall query error rate. However, Taipalus’ research

discovered that there was no evidence to suggest that the distribution of error type (syntactic,

semantic, logical, or complications) was dependent on schema complexity (Taipalus 2020).

The environment exhibited in this study may be considered as reflective of a typical

government work environment. Queries are to be constructed within a set time frame with no

practical limitation on repeat attempts. Organization interests stand to benefit from decreased

database complexity, as Taipalus indicates that this would result in decreased query error rates

and improved operational efficiency.

Data Warehouses

Data warehouses are a concept frequently seen in data engineering in enterprise

applications. Data warehouses are subject oriented, integrated, time variant, and nonvolatile

(Naeem, 2022). In this context, a subject-oriented design means that the dataset is created to

handle a certain business task or category of data. In other words, there is a common theme in the

information contained within the warehouse. Information integration describes the pooling of

multiple datasets or databases into one location in a consistent manner. Stored data is processed

to follow a common, unified set of conventions. Time-variant information represents the idea

16

that data should contain time-series labels, allowing for chronological analysis. Finally, the

concept of nonvolatile data represents the idea that stored information is permanent. Data is not

replaced or deleted, and may generally be regarded as functionally read only (Naeem, 2022). A

table comparing the features of databases and data warehouses is presented in Table 1. It should

be noted Online Transactional Processing (OLTP) represents simpler read-write queries at a high

rate, while Online Analytical Processing (OLAP) represents relatively infrequent complex

queries that are optimized for analysis and reporting purposes (Reis, 2022).

Table 1. Comparison of Databases and Data Warehouses (Naeem, 2022)

Database Data Warehouse

Amalgamation of related data

Information system containing historical and

commutative data from one or several sources

Used for recording data Used for analyzing data

Application-oriented collection of data Subject-oriented collection of data

Online Transactional Processing (OLTP) Online Analytical Processing (OLAP)

Data and structure normalized Data and structure may be denormalized

Inmon and Kimball Methodologies

In the world of data modeling, Inmon and Kimball’s methods are considered the

prevalent standards for data warehouses. Despite the contemporaneous development of these

approaches, their philosophies remain fundamentally opposed (Reis, 2022). Inmon emphasizes a

highly structured and organized approach towards data warehouse construction, believing that

the end goal of a data warehouse is to maintain single source of truth for an enterprise. However,

17

this approach is more resource intensive to set up than Kimball’s method, and ongoing

normalization may increase operations complexity (Naeem, 2022). Fundamentally, the Inmon

philosophy is a “data first” approach, where business operations draw upon a single structured

source (Reis, 2022).

In contrast, Kimball’s philosophy can be said to follow a “mission first” approach, where

data storage methods are designed with the intent of serving specific business divisions or goals.

A key concept in the Kimball method is the star schema. Here, a central ‘fact table’ outlines a

series of event related data. Additional context concerning certain aspects of fact table entries are

tabulated in separate dimension tables. Many modern database structures extend this concept

with snowflake schemas (Smallcombe, 2019). These schemas attach additional dimension tables

to dimensions tables in a pattern that resembles a snowflake or fractal. This shape is a function of

normalizing star schema dimension tables. A succinct comparison of the differences between star

and snowflake schemas is contained in Table 2.

The denormalization often seen in these Kimball schemas may lead to potential

redundancies and inconsistencies (Naeem, 2022). Furthermore, they involve executing more

potential JOIN statements than their Inmon alternatives. Overall, the emphasis is shifted from

normalization and structure towards flexibility and development speed (Reis, 2022).

18

Table 2. Comparison of Kimball Star and Snowflake Schema (Smallcombe 2022)

Star Schema Snowflake Schema

Fact tables surrounded by only a single layer

of dimension tables

Dimension tables may have additional

dimension tables attached

Denormalized data structure Normalized data structure

High level of data redundancy, slightly lower

storage efficiency

Low level of data redundancy, slightly higher

storage efficiency

Lower structural and query complexity Higher structural and query complexity

Better suited for data marts and smaller

datasets (less than 100 GB)

Better suited for data warehouses and larger

datasets (greater than 100 GB)

Less suited for diverse and complex analytical

queries

Better suited for diverse analytical demands

(focus on analytics and modeling)

Postgres Background and Discussion

At present, DART structures its contracting database using PostgreSQL (also known as

Postgres). This relational database management system’s open-source philosophy allows free use

by any organization, both public and private. Furthermore, open-source development offers end

users a wide range of community-sourced extensions. These factors contribute towards

PostgreSQL’s popularity and versatility, providing ample documentation and flexibility during

AFLCMC contract data structuring (Postgres Documentation 2022).

Postgres provides the mainstays of a modern relational database management system,

providing rigid adherence to ACID design principles. Data consistency is guaranteed through the

application of Multiversion Concurrency Control (MVCC). MVCC works by storing multiple

versions of objects undergoing revision. Thus, the results of one user’s incomplete write

transaction is prevented from being retrieved by another user’s read transaction. This robustness

towards error provides advantages during periods of heavy changes and development on a

19

database with many end users. Standout security features such as extensive user permissions

configuration and data encryption strengthen the case for usage in multi-person government

development environment. Furthermore, PostgreSQL is simply an iteration of Structured Query

Language (SQL). This property allows syntactic knowledge to be readily gained by those who

are already familiar with other members of the SQL family such as MySQL (Postgres

Documentation 2022).

A substantial amount of labor and infrastructure has already been invested by AFLCMC

and DART towards onboarding contracting information to Postgres. Accordingly, this database

management system will likely host future schema of DART contracting data.

R Shiny

The intended frontend interface for contract analysis is R Shiny, an R package that

enables the development of interactive web applications. In contrast to other common business

intelligence packages such as Tableau, R Shiny is free and open source (Wickham 2021). The

software’s integration into the R programming language allows the usage of other R packages.

Various dependencies are automatically monitored for currency and validity, improving the end

user’s experience and the organization’s security. Additionally, developers using R Shiny do not

require the knowledge of HTML, CSS, or JavaScript that traditional web application developers

require.

DART’s usage of this tool facilitates stronger communication of analysis and modeling to

non-technical end users. However, it should be noted that the elements that are incorporated into

the R Shiny interface may indirectly influence the type and frequency of queries sent to the

contracting database backend.

20

III. Methodology

Chapter Overview

The content below serves to outline the methods used to compare simple non-

interconnected database schemas, DART-designed Kimball Star schemas, and novel snowflake

schemas. The structural complexity of each candidate schema will be evaluated. These

complexity metrics will serve to create a stronger understanding of potential query construction

challenges for end users. These results may additionally yield insights leading to improvements

in database upkeep and maintenance. The overall computational performance of each candidate

schema will also be evaluated using a series of bespoke benchmark queries. Each benchmark

query’s computational resource demands will be compared in an attempt to develop an empirical

understanding of potential performance advantages and disadvantages for evaluated schemas. A

holistic analysis factoring in end user ease of use, data maintenance, and computational

performance will be carried out for each schema. This analysis will ultimately serve as a set of

potential guidelines for future areas of research and development.

Research Background

Over the past two years, DART has applied a range of text mining techniques to extract

actionable text data from more than four million Air Force contracts (Haberstich, 2021). The end

goal of DART is to provide a simple, user-friendly search tool for contract analytics using R

Shiny as a frontend interface. Accordingly, a well-developed backend design is paramount. The

chief concern of the following analysis is the quality of the schema governing the relationships

between data tables, and not of the constituent data points.

21

Development Environment

All extracted contracting data has been loaded into a PostgreSQL database, which is

presently hosted on the US Air Force’s REEF high performance computing cluster. Relevant

hardware and software specifications are shown in Table 3 for future reference and

reproducibility. All the below specifications represent the resources made available to this

project and do not capture the entire performance potential of the REEF cluster. It should be

noted that DART personnel are planning on migrating operations to private cloud computing

platforms (Microsoft Azure, Amazon Web Services, etc.) in the latter portion of Q2 FY2023.

However, the specifications detailed in Table 3 accurately reflect the resources available for this

research.

Table 3. Current Development Environment Specifications

Node CPU Dual Intel Xeon Gold 6248 Cascade Lake

Node Core Count 40 cores

Node Processor Speed 2.5 GHz

Node Memory 768 GB DDR4

Available Storage for DB 109 TB

Operating System Red Hat Enterprise Linux 7

Relational DBMS PostgreSQL 11.16

DB Admin Tool pgAdmin 4.27

The contracting data contained on REEF is split into a testing and development database.

These databases are labelled afdart1 and afdart2 respectively. At the time of this research, afdart2

contains ongoing work to restructure and prepare cleaned and formatted iterations of the ingested

contracting data. Finalized schema will be pushed to afdart1 in preparation for an R Shiny

interface.

22

Initial Exploration of DART Schemas

DART personnel have organized the extracted data of interest into 14 distinct schemas

within the afdart1 development environment. Three schemas on this server (eda_jobs, eda_fpds,

and public) were initialized but unpopulated and thus removed from further consideration. Thus,

11 total schemas from afdart1 are selected for initial analysis. The afdart2 development

environment contains 38 distinct schemas. However, it should be noted that many of these

schemas are under active redevelopment, act as personal user sandboxes, or are inaccessible due

to organizational permission policies. Initial analysis concerning the size of each schema and its

constituent tables were conducted within pgAdmin to determine which schema was best suited

for initial benchmarking, exploratory analysis, and further development.

The large file size of the DART databases raises significant transaction runtime concerns

during research. Accordingly, a smaller subset of data tables and schema were selected for initial

benchmarking and development. For reference, a complete initial row count for all schema on

afdart1 required nearly two hours of runtime on the REEF computing cluster. For this row count,

PostgreSQL executed a sequential scan for each COUNT(*) operation, which can be expected to

scale linearly with complexity 𝑂𝑛 where 𝑛 is the number of rows (Postgres Documentation,

2022). More computationally complex operations such as MERGE JOIN can be expected to

take even longer.

Row count, disk space, and table count totals were gathered for each schema common to

both afdart1 and afdart2. The results of this exploration for schemas from afdart1 are presented

in Table 4 and are sorted in descending order by row count. The corresponding dimensionality

exploration for afdart2 schemas is captured in Table 5. The queries used to construct Tables 4

and 5 are contained in Appendix A. A further comparison of size differences across the eleven

examined schema common to both afdart1 and afdart2 is contained in Table 6. It should be noted

23

that the values captured in Table 4 represent the original, unstructured state for all contracting

schemas. This initial dimensionality exploration was conducted on 27 October 2022, prior to any

notable restructuring efforts by DART. These earlier figures are maintained for comparative

purposes, as more completed restructuring efforts reduce the difference between afdart1 and

afdart2. For instance, the dm_acft_acty schema has been pushed from afdart2 to afdart1 in

December 2022 in preparation for final testing and evaluation. The dimensionality data gathered

for afdart2 in Table 5 accurately reflects the ongoing restructuring efforts by DART and is

current as of 2 January 2023.

Table 4. AFDart1 Data Schema Size Overview (Initial State CAO 27 OCT 22)

Schema Total Rows File Size (GB) Number of Tables

express 5,792,302,938 1,489.0 43

ils_s 3,542,955,817 1,101.0 89

remis 3,180,065,324 346.0 136

dm_acft_acty 2,607,981,846 1,176.0 38

d043 1,533,408,558 249.0 18

aftoc 173,448,771 151.0 4

manpower 28,937,637 7.8 1

supply 3,275,078 0.6 1

master_ref 1,885,237 0.3 13

etl_jobs 3,584 0.0 5

dart_meta 1,652 0.0 12

Total

16,864,266,442 4,521 360

Note that the table counts for each schema excludes empty tables and includes table

partitioning. The ils_s, remis, dm_acft_acty, d043, aftoc, manpower, and etl_jobs schemas all

saw notable increases in their row counts and schema sizes between late October and early

December of 2022. This result is expected and is a result of quarterly contract data ingestion by

DART. Elimination of redundant attributes in the express schema (the largest schema in both

24

afdart1 and afdart2) has facilitated a net decrease in the amount of data present in afdart2. No

tables were removed or added during this process in the express schema.

Restructuring efforts are most pronounced in the remis and dm_acft_acty schemas and is

evidenced by a significant increase in schema table count. Note that the very large increase in

tables for the remis and dm_acft_acty schemas reflect the introduction of table partitioning, a

method used in database engineering to improve memory and query management of very large

data tables. The table counts for remis and dm_acft_acty are otherwise unchanged after removing

these logical partitions from consideration. Size data for stg_acft_acty is also shown in Table 5

due to its functionality as a staging platform for raw contract data as well as its role in later

comparative schema analysis. The size decrease between the earliest iteration of dm_acft_acty

seen in Table 4 and the dm_acft_acty in Table 5 can be partially explained by the division of data

between Data Mart ready and staging schemas.

25

Table 5. AFDart2 Data Schema Size Overview (Current Development CAO 2 JAN 23)

Schema Total Rows File Size (GB) Number of Tables

express 5,792,302,938 1,387.0 43

ils_s 5,123,287,783 1,129.0 90

remis 3,202,658,522 354.0 738

dm_acft_acty 1,981,853,870 682.0 810

stg_acft_acty 607,416,969 150.0 248

d043 1,588,086,570 254.0 18

aftoc 175,042,028 152.0 4

manpower 31,694,126 9.0 1

supply 3,275,078 0.6 1

master_ref 1,885,237 0.3 13

etl_jobs 9,533 0.0 5

dart_meta 1,652 0.0 12

Total

18,528,776,328 4,487.8 1,743

Table 6. AFDart1 and AFDart2 Data Schema Dimensionality Comparison (CAO 2 JAN 23)

Schema

Change in Row

Count

Absolute Size

Change (GB)

Relative Size

Change

Table Count

Change

express

--- -102.0

-6.85 %

---

ils_s

+1,580,331,966 +28.0

+2.54 %

+1

remis

+22,593,180 +7.0

+2.02 %

+602

dm_acft_acty

-626,127,976 +27.0

-42.00 %

+772

d043

+54,678,012 +5.0

+2.01 %

---

aftoc

+1,593,257 +1.0

+0.66 %

---

manpower

+2,756,489 +1.1

+14.10 %

---

supply

--- 0.0

0.00 %

---

master_ref

--- 0.0

0.00 %

---

etl_jobs

+5,949 0.0

+335.23%

---

dart_meta

--- 0.0

0.00 %

---

Net Change

+1,664,509,886 -32.9

-0.73 % 1,383

26

Data Warehouse Design Framework

As discussed previously, DART is seeking to prepare the extracted data for future

analytics and decision making. Data collections and updates will primarily occur at the start of

each fiscal quarter as new contracts are read into the database. Overall data structure and content

will remain relatively static within each quarter. This information represents the aggregated

results of extensive text mining on DoD acquisitions contracts. Prior work during this text

mining process has ensured a degree of data standardization. Additionally, the publication date

of the original copy of each contract is logged when available. Given these conditions, we may

consider the contracting information to be non-volatile, subject-oriented, integrated, and time

variant. Accordingly, the dataset’s design and optimization will be considered within a data

warehouse context.

Updates to contracts contained within the DART data warehouse do occur on a semi-

regular basis. However, associated properties such as originating organization or involved

personnel remain unchanged and may be repeated across several data sets. Furthermore, the core

goal of the project remains the facilitation of DART data analysis. Thus, the Kimball Star

schema can assist data analysts in this task by organizing data into a series of fact tables, which

are in turn augmented with a number of dimension tables. An example of a simple database

applying this concept is illustrated in Figure 4.

In larger datasets, the potential storage savings brought on by star schema may be further

developed by breaking dimension tables into additional fact-dimension table groupings. This

multi-tiered star schema system is known as a snowflake schema and may present substantial

storage savings by eliminating potential data redundancies (Nguyen, 2020). The star schema

example in Figure 4 is rebuilt in snowflake form in Figure 5.

27

Figure 4: Example of Star Schema (Smallcombe, 2019)

28

Figure 5: Example of Snowflake Schema (Smallcombe, 2019)

29

Current DART schema restructuring efforts have involved organizing unconnected data

tables into a Kimball Star schema. Each schema acts as a unique topic data warehouse. These

subject-oriented data warehouses are also known as Data Marts and will serve as the backbone

for an R Shiny frontend. As of 2 January 2023, only the remis and dm_acft_acty schemas have

seen any restructuring efforts into a Data Mart-ready format. Note that both of these schemas

have a corresponding staging schema for the associated raw contract data. These staging schemas

are stg_remis, and stg_acft_acty respectively and offer the potential to act as baseline

comparisons for remis and dm_acft_acty schema analysis. However, discussion with DART

personnel on 8 December 2022 has indicated that the remis schema has only undergone a very

initial degree of structuring and remains under active development for the near future. Note that

the stg and dm abbreviations in the schema names act as staging and Data Mart designators.

In contrast, a portion of the dm_acft_acty schema design is Data Mart ready and has been

pushed to the testing environment on afdart1. Some raw contract data remains on the

corresponding stg_acft_acty schema, leaving the schema itself online as a research baseline.

Accordingly, the stg_acft_acty and dm_acft_acty schemas will remain the primary focus for the

following research and analysis.

Target Schema Overview

The stg_acft_acty and dm_acft_acty schemas represent information drawn from a range

of personnel and aircraft maintenance documents. Entity relationship diagrams of the non-Data

Mart ready staging schema is shown in Figure 6. An entity relationship diagram of the completed

Data Mart ready dm_acft_acty schema is shown in Figure 7 for comparison. Both entity

relationship diagrams are truncated, as there exists only one star schema redesign within the

entirety of dm_acft_acty. Unconnected tables were accordingly excluded from Figure 7 to better

30

highlight restructuring efforts. Tables only existing in both stg_acft_acty and dm_acft_acty

remain in Figure 6 for the same reason.

The restructured data tables represent a range of monthly personnel and aircraft

summaries across all U.S Air Force base locations. The Data Mart structure is a simple Kimball

Star Schema, with the fact_mnpwr_mo_agg table acting as the central ‘fact’ table. Note that the

dm_acft_acty has introduced a degree of redundant data in the dim_dt dimension table. This

table divides the raw datetime data already contained in the dt_mo_cd column of

fact_mnpwr_mo_agg into various components to allow for expedited analysis.

It should be noted that the dm_acft_acty and stg_acft_acty schemas are primarily

comprised of maintenance, personnel, and resource allocation data. However, the methodology

for this research primarily focuses on structural development concerns and is agnostic to attribute

datatypes and content. However, the datatypes for each attribute do remain available for perusal

in Figures 6 and 7. Sample outputs from the fact_mnpwr_mo_agg, and dim_unit tables are

available in Appendix B. These tables were chosen to represent examples of data from both fact

and dimension tables.

31

Figure 6: Entity Relationship Diagram of the Staging stg_acft_acty from AFDart2

32

Figure 7: Entity Relationship Diagram of the Data Mart-ready dm_acft_acty from AFDart2

33

Effects of Structural Complexity on Human Performance and Longevity

For a given analytical task, more structurally complex database schema were found to

increase query construction time and error rate (Taipalus, 2020). In this context, schema

complexity is dependent on schema attribute, key, and index counts. Taipalus further concludes

that less complex schema designs offer improved ease of use and access for analysts directly

querying a relational database.

It should be further reiterated that the AFLCMC DART dataset aims to simply serve as

the backend to an R Shiny-driven frontend. End user analysts will query data in an abstracted

manner and the actual database schema will be relatively constant, limiting potential update

inconsistencies common to denormalized schema. This impact on query construction and human

performance may accordingly be granted a lower weight during the holistic evaluation.

Relational queries interfacing between the abstracted frontend and the proper backend

still need to be constructed during the development of this pipeline. Thus, structural complexity

across the schema evaluated in the following analysis remains valuable as a proxy for potential

development manpower costs.

Higher schema complexity scores may also illustrate potential future maintenance and

upkeep issues. Kimball Star schemas may directly sacrifice normalization in simple one-to-one

relations as an exchange for potential gains in development and query speed. Additionally,

many-to-many relationships or many-to-one relationships often are not able to be normalized

within the constraints of a fact-dimension table architecture. This increased level of redundancy

and complexity may lead to increased manpower costs during future data ingestion and

debugging (Naeem, 2022).

34

Evaluation of Structural Complexity

The comparative analysis for structural complexity across DART data schema is

conducted using the methodology outlined in Pavlic (2008). In summary, each schema’s

complexity is represented as the sum of its unique attributes, primary keys, foreign keys, and

additional indices. This measure of complexity is more straightforward to implement across a

large range of tables within PostgreSQL than the relational algebra or graph-based metrics

discussed in Calero (2001). Pavlic’s metrics may be gathered using a set of simple queries.

Commonly used alternative measures of complexity such as those proposed in Calero

(2001) are less intuitive and much more challenging to implement at scale. The alternatives

discussed by Calero call upon graph theory and relational algebra and include but are not limited

to metrics such as depth referential tree and schema cohesion. Overall, the relative simplicity and

interpretability of Pavlic’s methodology ultimately led to its selection as the primary complexity

metric during the analysis of DART schemas. The queries used to construct these complexity

metrics are shown in Appendix C.

Acquiring the requisite information to calculate each schema’s complexity is facilitated

through pgAdmin metadata schemas. Relevant metadata is automatically generated and updated

within the information_schema and pg_catalog schemas. information_schema contains

information concerning all defined objects in a given database. This information is defined in a

SQL standard, promoting portability across development environments. In contrast, pg_catalog

is a system schema and provides information concerning PostgreSQL-specific features in

addition to object metadata (Postgres Documentation, 2022).

It is cautioned that the approach outlined by Pavlic holds each of the aforementioned

properties in equal weight, which may not be truly representative of practical complexity. For

example, a relatively minor decrease in table attribute counts may outweigh a potentially large

35

introduction of relatively major increase in table key constraints. Indeed, this specific example

proves to be the case when comparing stg_acft_acty to dm_acft_acty. Accordingly, a range of

potential weights will be considered and evaluated for each evaluated schema to allow for a

degree of sensitivity analysis.

Evaluation of Computational Performance

At present, DART personnel have indicated plans to link the resulting contracting

database schema to an R Shiny frontend. Accordingly, data retrieval and a report construction by

data analysts will likely be executed without direct query construction. More involved analytics

are slated to be carried out within Elasticsearch, an open-source data analytics search engine.

R Shiny, Elasticsearch, or any other analytics frontend still act as abstractions on top of a

Data Mart backend. Thus, a series of benchmark queries will be constructed to evaluate the

runtime performance of the original and novel schema structure.

Benchmark Queries

Present planning for the contracting Data Mart indicates that database analytics will be

conducted on an ad hoc basis. In other words, there is a limited degree of access to representative

end user database interactions. A set of standardized queries are often run on a standard testing

and development Kimball Star Schema as a means to evaluate the performance of various

Relational Database Management Systems. This process is known as the Star Schema

Benchmark (SSB), and represents a range of aggregation functions, conditional parameters, table

joins, and other operations that are likely to be encountered in an enterprise or analytics data

warehouse environment (O’Neil, 2009).

36

A total of eight benchmark queries were developed and applied to the dm_acft_acty and

stg_acft_acty schemas. These custom benchmark queries were created with the intent to capture

a range of analytical functions similar to those seen in the Star Schema Benchmark. The

analytical functions addressed by each benchmark query is contained in Table 7, and each

query’s syntax is available in Appendix D. Queries with identical output targets were executed in

the unstructured stg_acft_acty staging schema, holding the number of operations (number of

JOINS, aggregation functions, etc.) constant where possible. Note that there is no direct

timestamp dimension table equivalent in stg_acft_acty for query 3, 6, and 8. Accordingly, the

equivalent information was extracted from timestamp attribute information contained in the

stg_acft_acty precursor for dm_acft_acty’s central star fact table (fact_mnpwr_mo_agg). The

additional computational overhead introduced from this extraction serves to highlight yet another

potential difference in performance between the Data Mart ready star schema and a simple

unstructured staging schema.

The benchmark queries were run in one batch during a period of low demand (overnight

during the December 2022 holiday season) to control for the resource fluctuations brought on by

a shared computing environment userbase’s variable demands. The average of three query

executions were logged for each benchmark query and database schema combination. Additional

benchmarking data details such as computational resource usage were gathered using pgAdmin’s

‘Explain/Analyze’ functionality. This functionality breaks down the component functionalities of

each query and provides step-by-step overviews of computational demands. Resource usage is

measured using an arbitrary unit selected by pgAdmin developers, with each unit reflecting the

resources required by the host system to sequentially scan through 8 kB of text. However, this

analysis function remains under development and currently yields negative runtime values when

37

certain operations are run in parallel (commonly seen in high performance computing clusters

such as REEF). Accordingly, accurate runtime breakdowns for specific resource-intensive

processes that utilize parallel operation (such as MERGE operations) are not available (Postgres

Documentation, 2022). Running the benchmark queries within the pgAdmin interface does

introduces additional computational demands. However, all benchmark queries are run in the

same environment and therefore incur identical resource overheads.

Table 7. Selected Benchmark Query Descriptions

Query General Tested Functionalities and Description

Q1 Multiple Aggregate, Multiple JOIN, GROUP, ORDER

Q2 Multiple JOIN, ORDER, LIMIT

Q3 Multiple JOIN, LIMIT

Q4 Multiple JOIN, Multiple Conditional Constraint, LIMIT

Q5 Single Aggregate, Multiple JOIN, Single Conditional Constraint, GROUP

Q6 Multiple Aggregate, Multiple JOIN, GROUP, ORDER

Q7 Single JOIN, LIMIT

Q8 Single Aggregate, Multiple JOIN, Multiple Conditional Constraint, GROUP,

ORDER

Note that SQL aggregate functions involve arithmetic operations such as COUNT, AVG,

MIN, or MAX. All evaluated aggregate functions included a ROUND operation to emulate a

demand for readable output. All JOIN statements were INNER JOINS, including only non-null

rows for both the fact and target dimension tables. Queries involving multiple JOIN operations

only included a total of two dimension tables for consideration. Additionally, the size of the fact

table itself led to output being limited to 100,000 rows in the interest of time. These LIMIT

operations were not necessary in the case of certain queries due to operations being carried out

on smaller dimension tables. Specific syntax for each query is made available in Appendix D.

38

Novel Data Structure

At its core, the focus of the research remains on promoting a resource-efficient data

structure that is conducive to end user productivity. The insights garnered from the preceding

data exploration and analysis will be used to explore alterations to the existing DART

contracting database. To this end, additional schema considerations and designs are compared

with the original data present in afdart1 and the novel data warehouse architecture put forward

by DART in afdart2.

Development and Evaluation of Novel Data Structure

Prior literature reveals a range of potential advantages and disadvantages associated with

star and snowflake schema. The optimal choice between these design options varies with respect

to project goals and parameters. Accordingly, dm_acft_acty will be additionally restructured in

snowflake form and undergo the previously outlined structural complexity and maintainability

evaluations. Details concerning the execution and evaluation of this novel schema will be

discussed in Chapter 4.

Benchmark query collections for the novel snowflake schema are omitted. Storage

availability on REEF is already a large concern for DART personnel, and the DART contracting

database already exceeds previously established storage allowances. Accordingly, the evaluation

of the novel snowflake schema on a modified copy of dm_acft_acty on REEF is not possible due

to resource limitations. Further resource and security limitations do not allow the transfer of

stg_acft_acty or dm_acft_acty data onto computing devices accessible to the researcher. Running

benchmark queries on synthetic data similar to those seen in stg_acft_acty and dm_acft_acty on a

non-high performance computing cluster would demand impractical runtimes. Accordingly,

39

benchmark query performance conclusions for the novel data structure are extrapolated using

current literature and trends in computational usage between the other evaluated schemas.

Holistic Schema Evaluation

The impacts of structural complexity on query construction and data longevity will be

considered in tandem with computational performance to holistically rank candidate schemas. A

ranking ranging from one to three will be assigned for each category, where a rank of one and

three respectively reflect the strongest and weakest performance. These rank values will then be

multiplied by a range of categorical weights (Table 8) to arrive at a weight-adjusted composite

rank. The schema with the best (lowest) overall weight adjusted rank is then labeled as the

highest performing-tested design.

As discussed in earlier sections, the relevance of database human factors will be

diminished following the implementation of frontend analytic interfaces. Furthermore, near-term

migrations to civilian cloud services may potentially limit or ameliorate long term upkeep

concerns. Throughout this evaluation process, overall query speed and performance remains the

most user-relevant and quantifiable metric. At present, potential queries are ad hoc and may

reflect a wide range of possible computational demands. Additionally, the resources of the

computing cluster hosting the afdart1 and afdart2 databases are limited. Accordingly, the

following weights for each category are outlined for consideration in Table 8 and applied in

Chapter 4.

40

Table 8. Category Weights for Holistic Schema Evaluation

Category Weight

Human Factors 0.2

Data Longevity 0.3

Query Performance 0.5

Chapter Summary

Accurately evaluating the relative performance of different database schemas is

challenging and dependent on the specific use case. Human, organizational, and computational

factors were taken into careful consideration in an attempt to compare the overall potential of

various DART candidate schemas.

41

IV. Results and Analysis

Chapter Overview

The following chapter captures and explains the results yielded by the preceding

methodology. The human performance and database longevity impacts of each schema’s

structural complexity are evaluated and discussed. Computational benchmarks are collected and

examined for the baseline and Data Mart data schemas. A holistic quality evaluation of all

evaluated schemas is additionally included.

Evaluated Database Schemas

As of early January 2023, only the dm_acft_acty schema was adequately redesigned in

preparation for usage as a Data Mart. The stg_acft_acty hosted on afdart2 acts as an unstructured

staging area for recently ingested contracting data. Accordingly, the stg_acft_acty and

dm_acft_acty schemas were selected as baseline and Data Mart benchmark schemas respectively.

Novel Schema Development

It should be reiterated that DART restructuring efforts for the dm_acft_acty schema have

been centered on the implementation of a Kimball Star schema, which can be considered a

single-layered snowflake schema. Thus, a novel schema applying a higher dimension snowflake

architecture was developed and evaluated as a potential Data Mart solution. A graphical

representation of dm_acft_acty modified into a snowflake form (titled sf_acft_acty) is illustrated

in Figure 8. The below entity relationship diagram may be compared to the entity relationship

diagrams corresponding to stg_acft_acty and dm_acft_acty (see Figures 6 and 7 in Chapter 3).

42

Figure 8: Proposed Entity Relationship Diagram of the sf_acft_acty Snowflake Schema

43

The sf_acft_acty schema seen in Figure 8 was designed by carrying over normalization

principles to the dm_acft_acty schema. All attributes in a given table are solely dependent on their

primary key. Any attributes that have additional dependencies become the primary keys of their

own table. For instance, the afsc_crr_fld_desc attribute is a description of a given Air Force career

field and is directly dependent on the afsc_crr_fld_cd attribute. Both these attributes were

previously found in the dim_afsc table. However, they are given their own table to limit irrelevant

information during data analysis.

Structural Complexity Evaluation and Analysis

The following structural complexity analysis is conducted using the complexity evaluation

methodology discussed in Chapter 3. To this end, queries on counts from afdart2’s

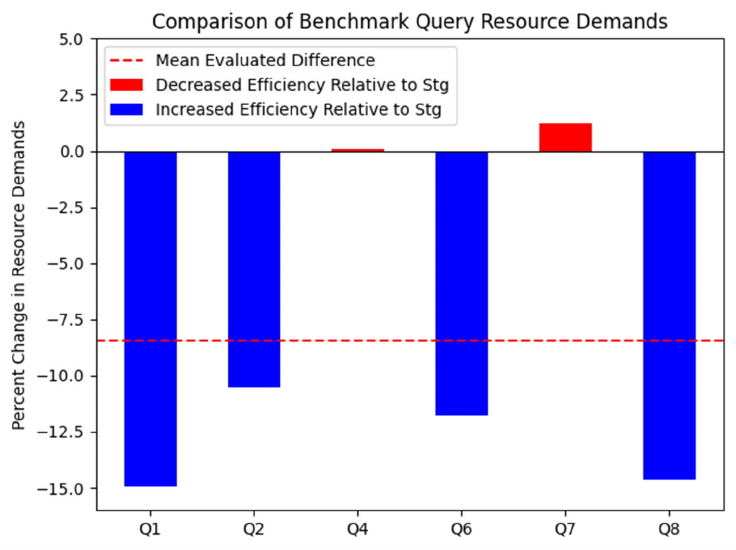

information_schema and pg_catalog schemas yielded the total attribute, primary key, foreign key,