APPROVED FOR PUBLIC RELEASE

Reliable Software Statement of Work

Language Guidance

December 2022

Report No. FCDD-AMR-MR-22-08

Reliability, Availability, and Maintainability

Engineering and System Assessment Division

Systems Readiness Directorate

U.S. Army Combat Capabilities Development Command

Aviation & Missile Center

Redstone Arsenal, Alabama 35898-5000


Reliable Software Statement of Work Language Guidance

Report No. FCDD-AMR-SR-22-08

Foreword

This document provides the guidance for reliability engineers who are responsible

for writing statement of work language for reliability, availability, and maintainability.

The guidance is for selecting the relevant tasks for reliable software based on the type

and size of the program, current phase of acquisition, and maturity of the software.


EXECUTIVE SUMMARY

This document provides guidance for the reliability engineer(s) responsible for writing reliable software requirements into the Statement of Work (SOW). This guidance addresses:

• Selecting the relevant tasks for reliable software based on the type and size of

the program, current phase of acquisition, and maturity of the software.

• Tailoring the language for those tasks based on how much software is in the

system, the degree to which the software can contribute to a mission failure, how

high the reliability requirement is, the complexity of the system and software, the

contractor’s capabilities, the risks imposed by changes to the mission, hardware

or interfaces, and other factors.

This guidance document is applicable to weapon and combat systems and to the mission systems that support them. It is not intended for use with enterprise or business systems acquisitions (for example, electronic mail systems, accounting systems, travel systems, and human resources databases).

This guidance and the tailoring of the SOW language are intended for “software

intensive” systems. The Defense Acquisition University definition is “A system in which

software represents the largest segment in one or more of the following criteria: system

development cost, system development risk, system functionality, or development

time.”[1]

The term software intensive is applied more broadly in this document. Any

weapon or combat system with software is considered to be software intensive for this

SOW document. Most modern weapon and combat systems have software and are

therefore software intensive for the purposes of this guidance document. The reliability

engineer can determine from the software engineering counterpart if the system is

software intensive.

This document is intended to address the following lessons learned about unreliable

software:

• The system reliability is not meeting specifications because of software failures.

• The Department of Defense (DoD) is finding out far too late in development and

test that system requirements are not being met due to the software.

• Software intensive systems have too many restarts, resets, and/or reboots which

collectively cause the system to be down longer than required.

[1] https://www.dau.edu/glossary/Pages/Glossary.aspx#!both|S|28508

The goals for this document are:

• Provide insight into the software development artifacts and activities so that the

Government can independently assess both the software artifacts and the

contractor’s ability to make the software mission ready.

• Define acceptable system metrics supported by Reliability and Maintainability

(R&M) to measure and evaluate (define how software related failures impact

current R&M system metrics).

• Implement effective R&M requirements and metrics into software development

programs that are employing Development, Security, and Operations

(DevSecOps).

• Contract for reliable software and effectively evaluate the risks of contractor’s

proposal to achieve reliable software.

• Differentiate roles, responsibilities, and interactions of reliability, software, and

systems engineering.

• Provide for a contractual means for using lessons learned for reliable design to

build software that is more failure resistant and fault tolerant.

• Reduce the occurrence or impact of software failures during operation.

Software does not wear out like hardware. However, software does cause failures

due to hundreds of different root causes. Software does not have to be “down” to cause

a major function failure. The software can cause failures even when operating by:

• Executing irreversible actions or decisions that contribute to a hazardous event.

• Executing a required function the wrong way.

• Executing the function at the wrong time or order.

• Inadvertently executing a function in the wrong state.

• Not executing a function at all when commanded.

• Inability to detect and recover from faults in itself and the system.

• Degraded function or malfunction for the subsystems, components, and interfaces.

Due to the immense size of today’s complex software intensive systems, finding all

the root causes in development and test is a challenge due to time and budget

constraints. For software, the likelihood of each failure is driven by:

• How detectable the underlying defect is in development and test.

• Whether there are any controls over the failure.

• The level of rigor of the test activities.


TABLE OF CONTENTS

1.0 Summary of Reliable Software Tasks and Tailoring Guidance

1.1 Reliable Software Program Plan (RSPP) Task

1.2 Inclusion of Software in System Reliability Model Task

1.3 Reliable Software Allocations Task

1.4 Reliable Software Prediction Task

1.5 Reliable Software Evaluation Task

1.6 Software FMEA (SFMEA) Task

1.7 Inclusion of Software in FRACAS Task

1.8 Software Reliability Risk Assessment Task

1.9 Testing for Reliable Software Task

2.0 Customer and Contract Reliability Requirement

3.0 Section L

4.0 Section M

Appendix A DoD Acquisition Pathways

Appendix B Common Defect Enumeration (CDE)

Appendix C Document Summary List and CDRLs

Appendix D Terms and Definitions

List of Tables

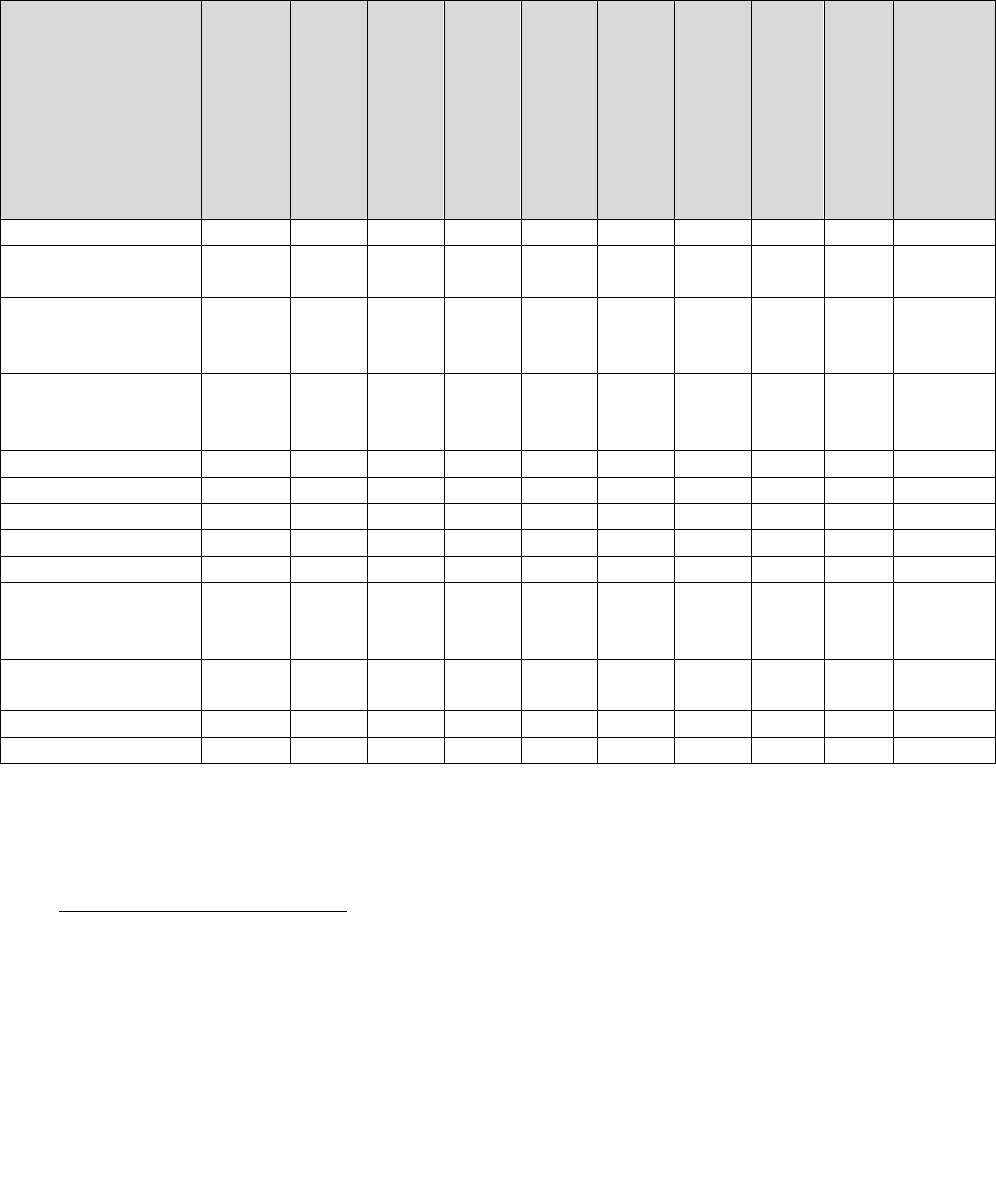

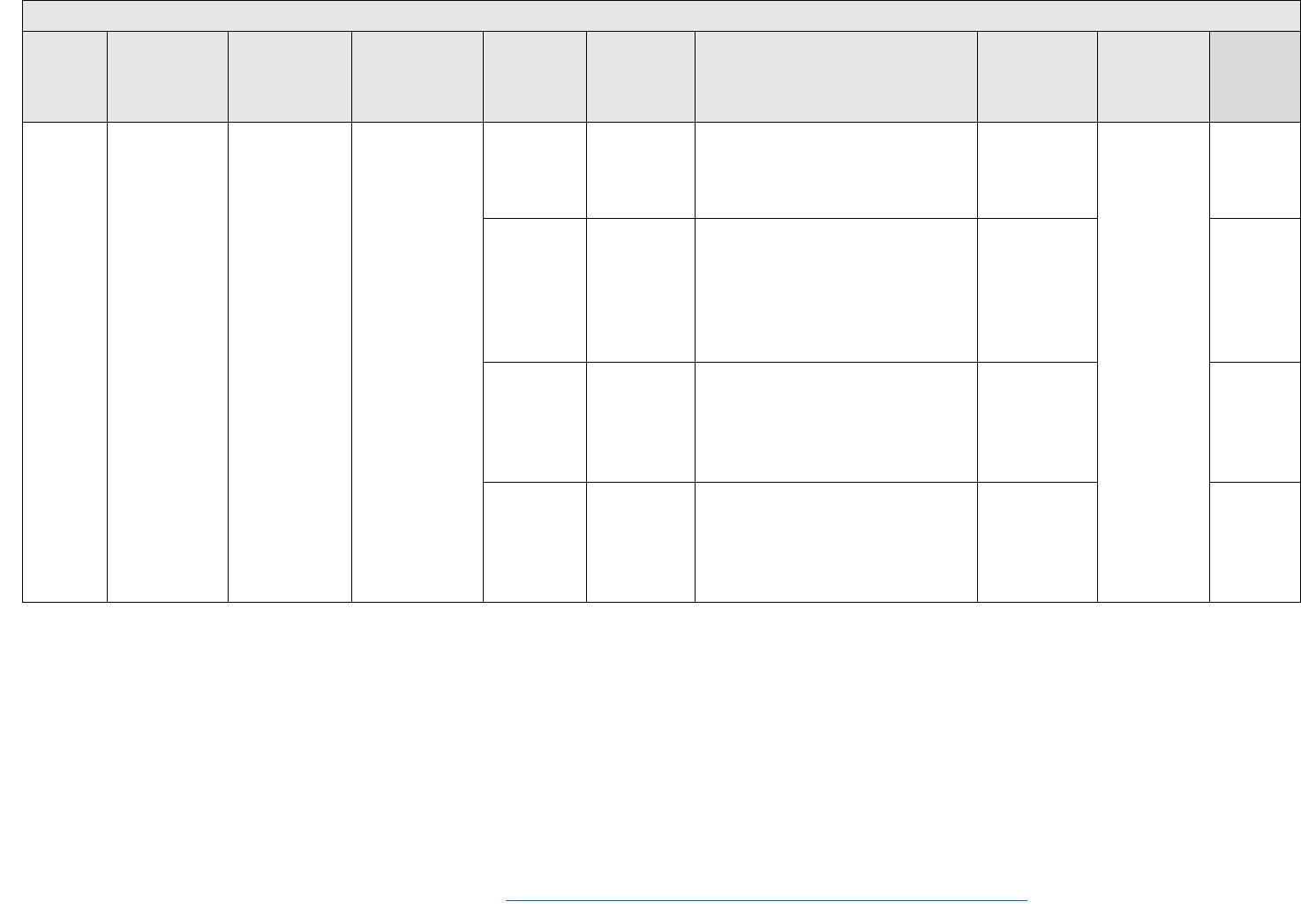

Table 1-1 Reliable Software Tasks

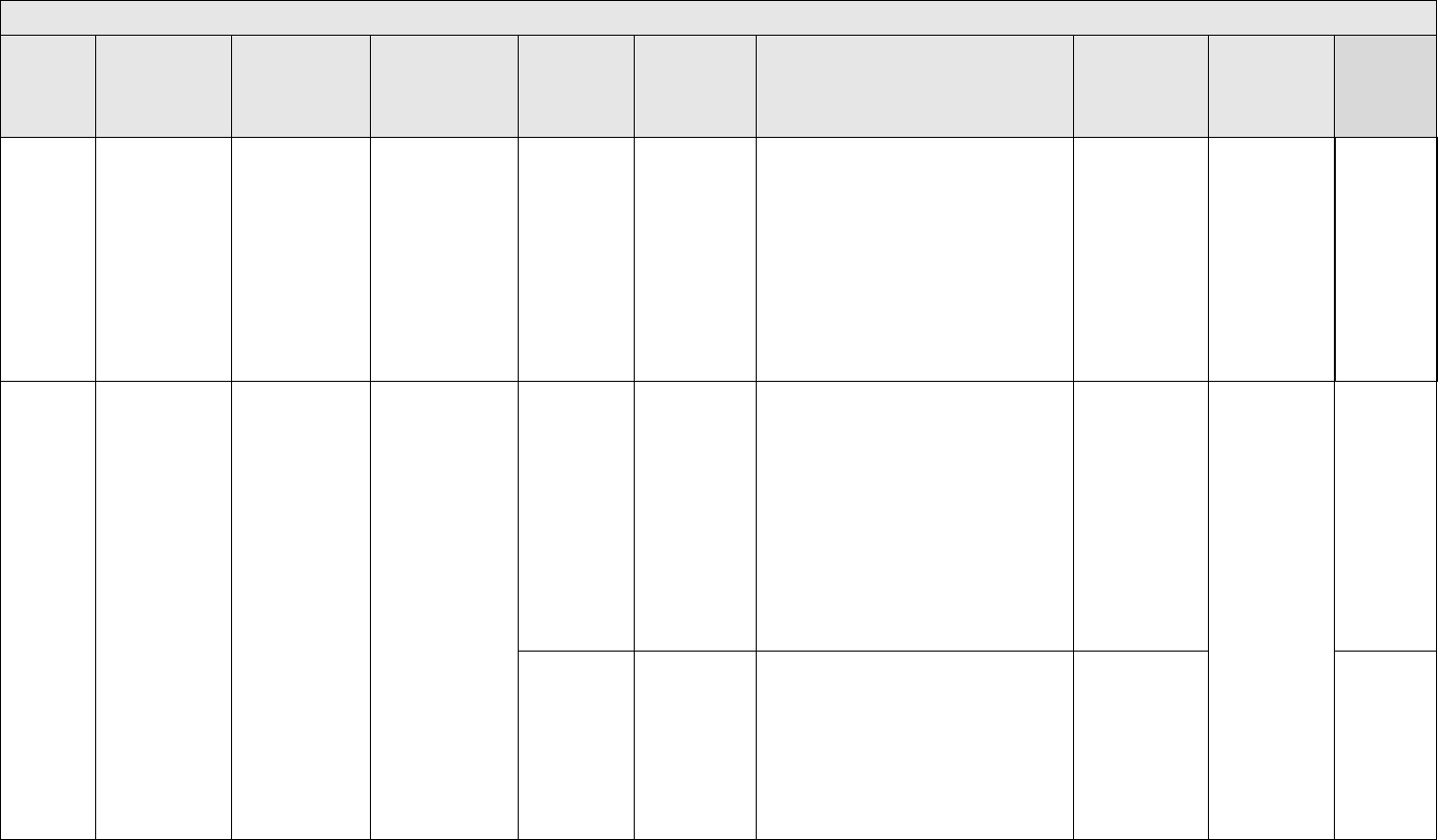

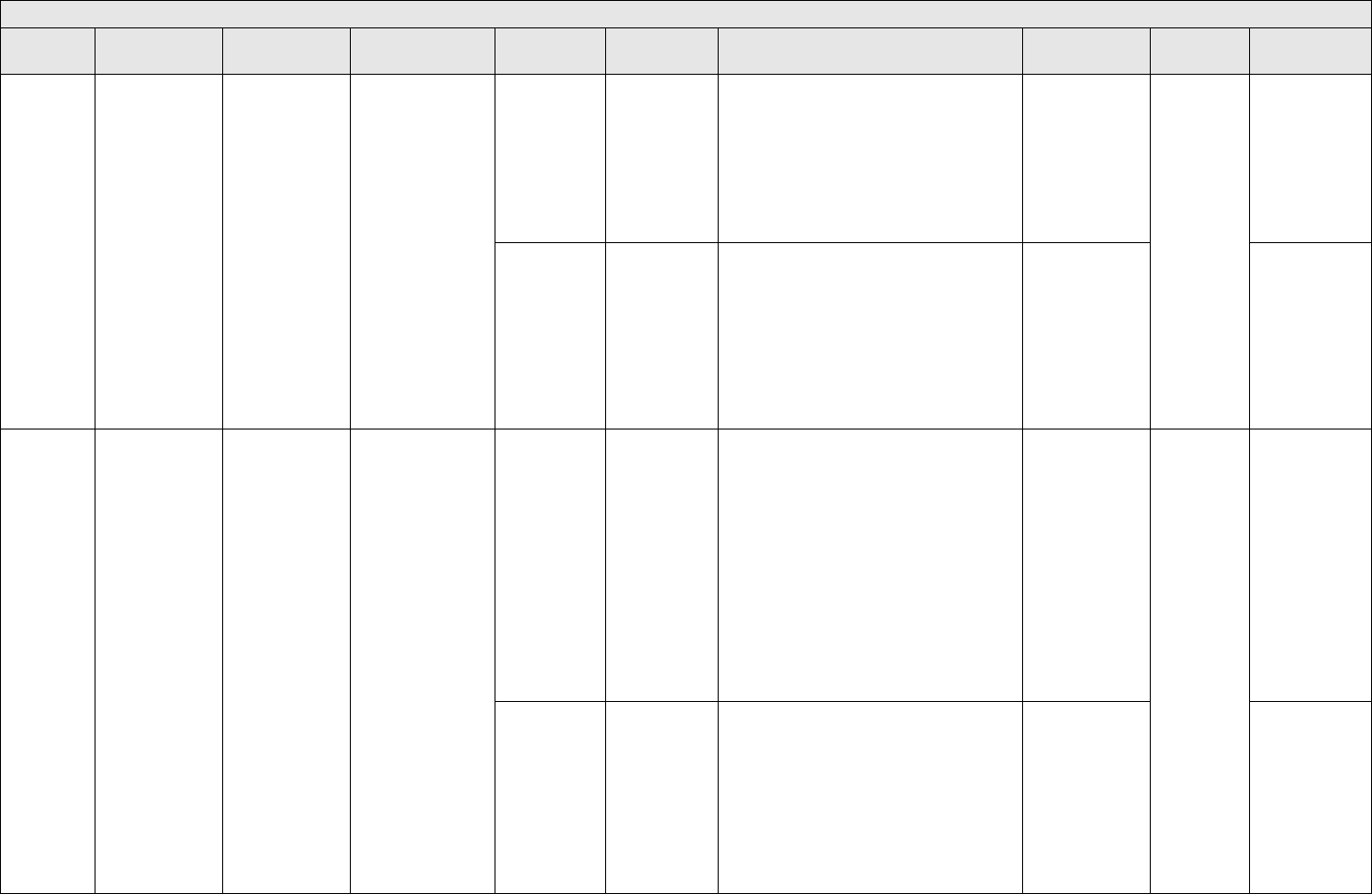

Table 1-2 Tailoring for Level of Rigor for MCA and MTA Acquisition Paths

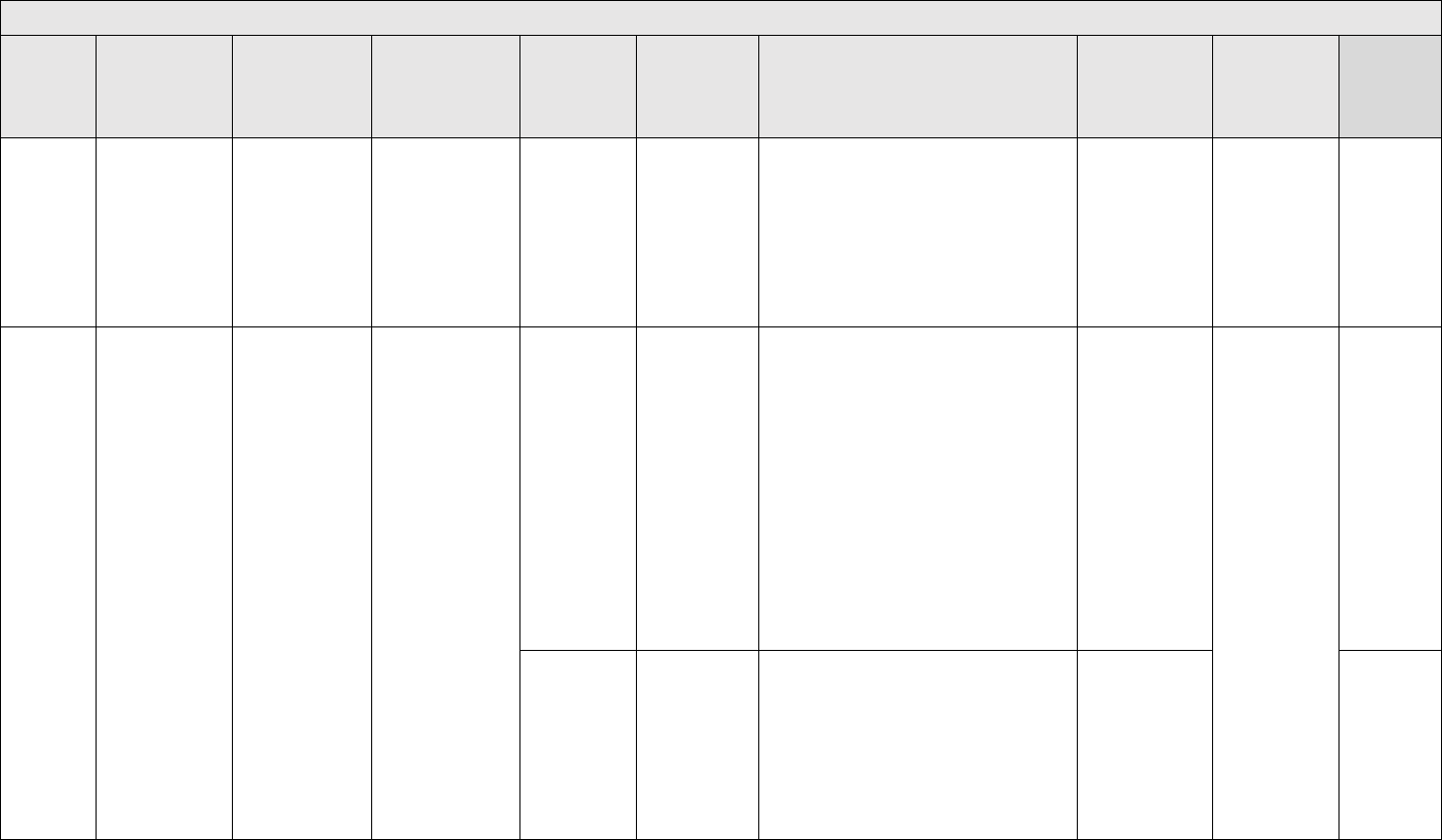

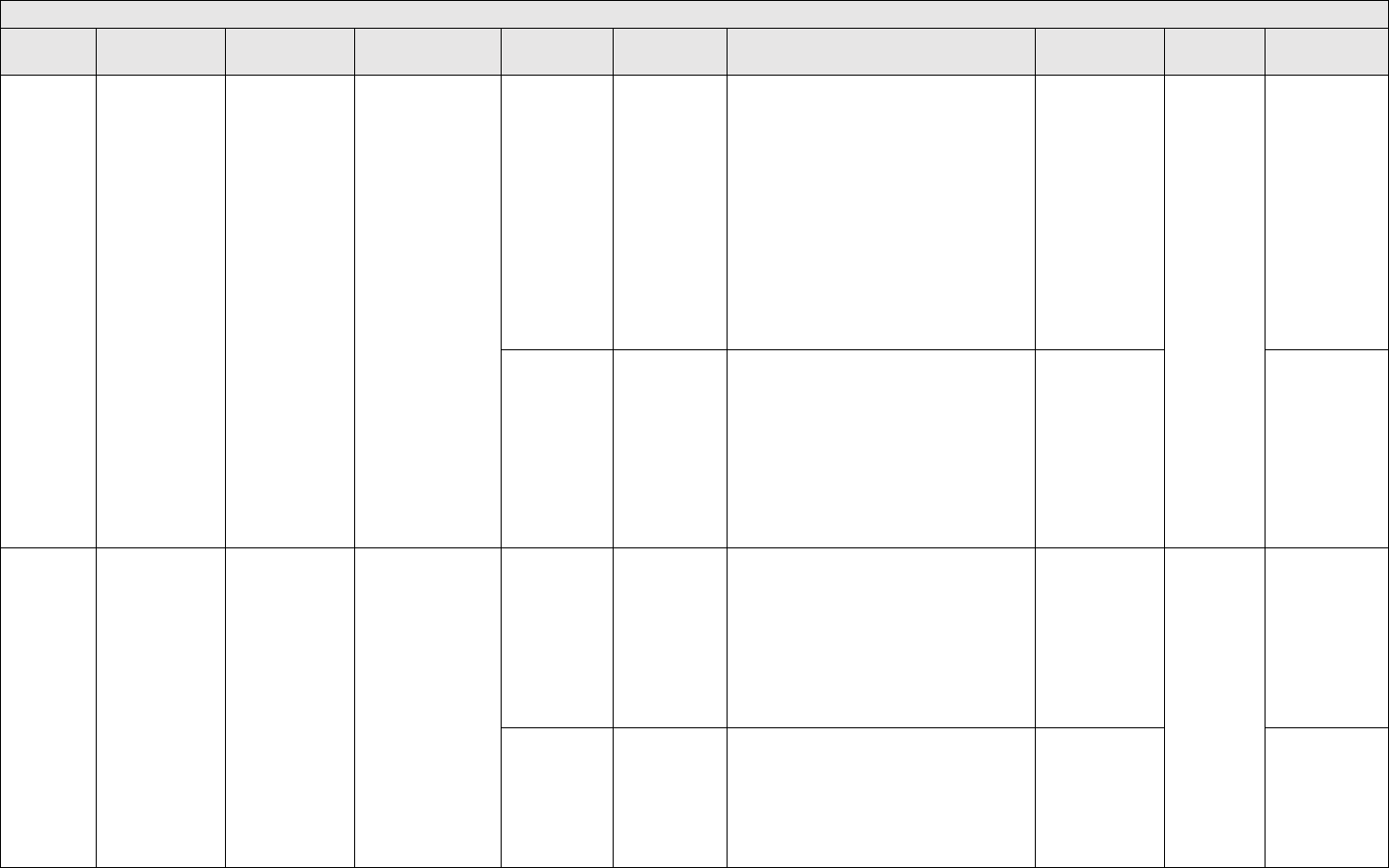

Table 1-3 Allocation methods for software

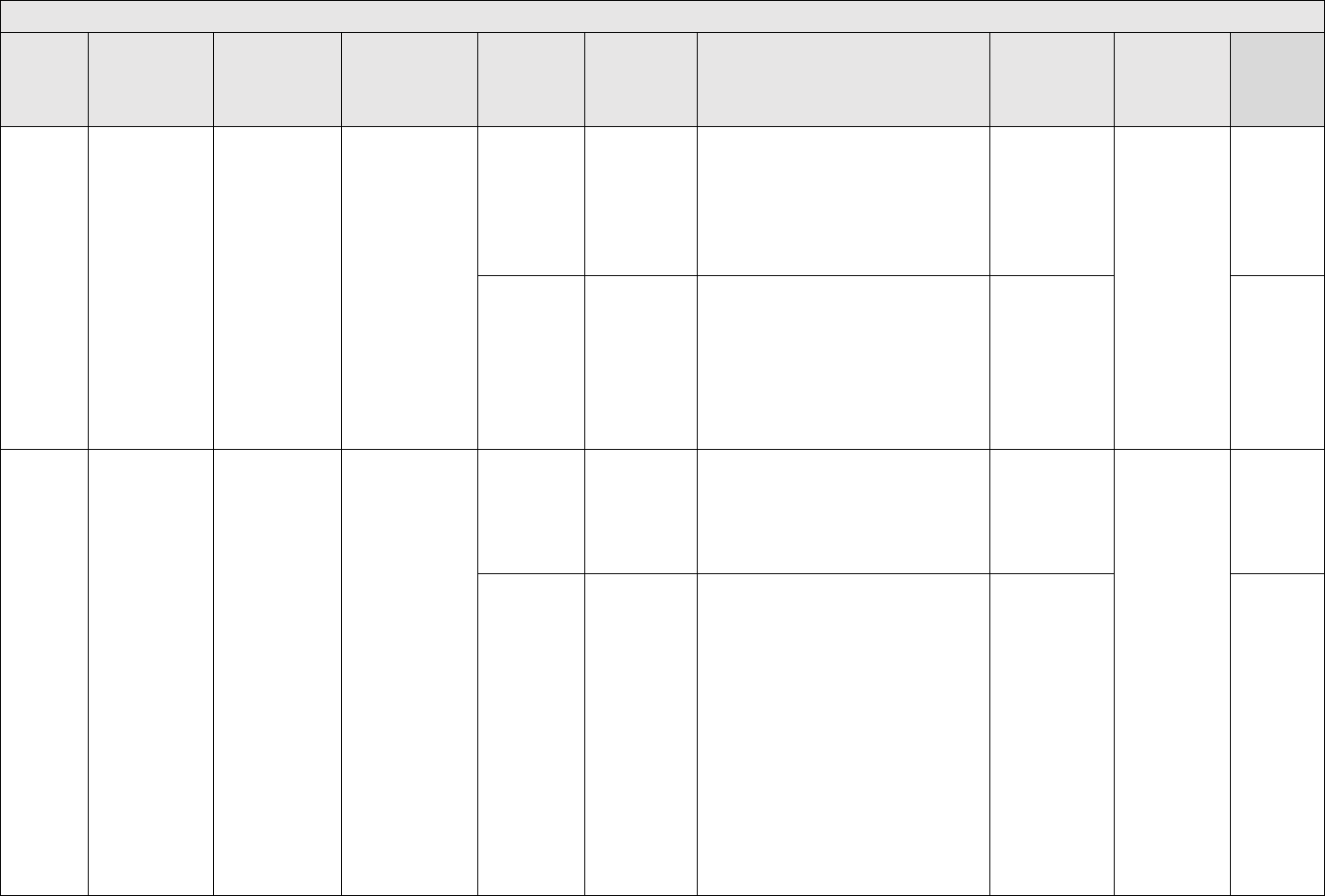

Table 1-4 Summary of prediction models for software

Table 1-5 Software FMEA points of view

Table 1-6 Tailoring the SFMEA SOW language

Table 1-7 Reliable Software Testing Examples

Table 1-8 Justification and Applicability for Reliable Software Tests

List of Figures

Figure 1-1 Top Level Decision Tree for Determining Which Reliable Software Tasks are Relevant for MCA program

Figure 1-2 Top Level Decision Tree for Determining Which Reliable Software Tasks are Relevant for MTA program

Figure 1-3 Tailoring for Level of Rigor for MCA and MTA Acquisition Paths

Figure 1-4 Agile Software Development

Figure 1-5 Defect Discovery Profile

Figure 1-6 Example of a Defect Discovery for Incremental Development

Figure 1-7 Example #2 of a Defect Discovery for Incremental Development

Figure 1-8 Venn diagram of Coverage via Various Test Methods


1.0 Summary of Reliable Software Tasks and Tailoring Guidance

This section provides the Government reliability engineer the reliable software tasks,

rationale, and tailoring guidance applicable to Major Capability Acquisition (MCA) and

Middle Tier Acquisition (MTA). The “Software Acquisition Pathway” should use the

MCA pathway guidance in this document. Reliable software tasks are in Sections 1.1 to

1.9. The guidelines (see example SOW language for each task) are as follows:

• The Statement of Work language is italicized. Any language that can be

removed will be bolded.

• Instructions for removing language are contained in <>.

• Undo “bolding” prior to placing the language in the SOW.

• Remove all <> text prior to placing the language in the SOW.
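As a rough illustration of the last two guidelines, the sketch below cleans a draft SOW passage held as plain text. It assumes, purely for illustration, that removable language is marked with `**` pairs (the guidance uses bold type, not any particular markup) and that writer instructions sit inside `<>`. The function name `finalize_sow_text` is hypothetical. The sketch performs only the final cleanup; deciding which bolded tasks to delete remains a manual step per the decision trees.

```python
import re


def finalize_sow_text(draft: str) -> str:
    """Strip <...> writer instructions and undo bold markers in a draft SOW passage."""
    # Remove each <...> instruction together with the whitespace before it.
    without_instructions = re.sub(r"\s*<[^<>]*>", "", draft)
    # Assumption: bold is encoded as **text**; keep the text, drop the markers.
    unbolded = re.sub(r"\*\*(.+?)\*\*", r"\1", without_instructions, flags=re.DOTALL)
    # Collapse any runs of spaces or tabs left behind by the removals.
    return re.sub(r"[ \t]{2,}", " ", unbolded).strip()


draft = (
    "The reliable software program shall address: "
    "<writer shall remove items as per the guidance> "
    "**1) inclusion of software in the reliability model;** "
    "2) coordination of the functional areas."
)
print(finalize_sow_text(draft))
```

Note that an insertion placeholder written in `<>` would also be stripped by this pattern, so any value the writer is asked to insert has to be filled in before the cleanup runs.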

1.0.1 Reliable Software Task and Rationale.

Table 1-1 summarizes the reliable software tasks and the rationale for including each task in a successful acquisition.

| Tasks | Rationale |
| --- | --- |
| Reliable Software Program Plan (Section 1.1) | Ensures the tasks required for reliable software are integrated with the engineering processes and that the software, reliability, and systems engineering personnel interact. |
| Inclusion of Software in System Reliability Model (Section 1.2) | Ensures the software is integrated into the system reliability model to avoid underestimating the system reliability. |
| Reliable Software Allocations (Section 1.3) | Ensures the software is not ignored in the system reliability allocations and the software team knows to test to a specific reliability goal. |
| Reliable Software Predictions (Section 1.4) | Ensures the contractor is predicting reliable software early in development while there is still time to determine alternative solutions. |
| Reliable Software Evaluation (Section 1.5) | Ensures the contractor demonstrates that the software under test is trending to meet or exceed the reliable software allocation. |
| Software Failure Modes and Effects Analysis (FMEA) (Section 1.6) | Identifies failure modes in the software that are exceedingly difficult to identify during testing but are costly in terms of mission failures. |
| Inclusion of Software in FRACAS (Section 1.7) | Ensures the contractor is providing all software failures to the Government for review. |
| Reliable Software Risk Assessment (Section 1.8) | Ensures commonly overlooked risks do not derail the reliability of the software. |
| Reliable Software Testing (Section 1.9) | Provides confidence the software has been exercised in a manner consistent with its operational use. |

Table 1-1 Reliable Software Tasks


1.0.2 MCA Reliable Software Relevant Tasks Decision Tree (Figure 1-1).

Decision point #1 in Figure 1-1 assesses whether the program is software intensive and the software is mission critical. For most modern combat, weapon, and mission systems the answer will be affirmative. The Defense Acquisition University (DAU) definition of software intensive is “A system in which software represents the largest segment in one or more of the following criteria: system development cost, system development risk, system functionality, or development time.”[2] The definition of software intensive for this document is broader than the DAU definition. Any weapon or combat system with software is in scope for this document. If there is any doubt, the reliability engineer should discuss the program with the software and systems engineering counterparts.

Figure 1-1 Top Level Decision Tree for Determining Which Reliable Software

Tasks are Relevant for MCA program

Decision point #2 in Figure 1-1 determines whether the program is beyond the Materiel Solution Analysis (MSA) phase. Typically, there is software development in the MSA phase, so the tasks relevant for Technology Maturation & Risk Reduction (TMRR) or Engineering and Manufacturing Development (EMD) are also relevant for MSA. If a specific reliability objective is not yet established in MSA, reliable software tasks are still relevant. The software FMEA and risk assessment tasks are not tied to a specific quantitative objective.

[2] https://www.dau.edu/glossary/Pages/Glossary.aspx#!both|S|28508

If the software development has not started in MSA, only the reliable software risk

assessment and coordination of reliability and software personnel are relevant (Figure

1-1, decision point # 3).

If software development has started and has not entered either Production and

Deployment or Operations and Support Phase (Figure 1-1, decision point #4), then all

reliable software tasks are relevant and should be tailored as per sections 1.1 to 1.9.

If the phase is either Production and Deployment or Operations and Support and

there are still software development activities (Figure 1-1, decision point #5), then all

reliable software tasks are relevant and should be tailored as per sections 1.1 to 1.9.

If development is complete (i.e., there are no more sprints) but the reliability

objective has not been met (Figure 1-1, decision point #6), then it is too late for the

reliable software predictions or Software FMEA (SFMEA) to be a benefit by influencing

the design.

If the reliability objective has been met by the software and there are no more planned Engineering Change Proposals (ECPs), major changes, or new capabilities (Figure 1-1, decision point #7), then the reliable software tasks are not relevant. Otherwise, all the reliability tasks are relevant and should be tailored.
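The decision points described above can be sketched as a small function. This is one reading of the prose only, since Figure 1-1 itself is not reproduced here. The task labels, the `McaProgramStatus` fields, and the function name are hypothetical. Two points are assumptions rather than statements from the text: a "no" at decision point #1 is taken to mean no tasks apply, and decision point #6 is taken to mean all tasks except predictions and SFMEA remain relevant.

```python
from dataclasses import dataclass

# Hypothetical labels for the nine tasks in Sections 1.1 to 1.9.
ALL_TASKS = frozenset({
    "program_plan", "system_reliability_model", "allocations",
    "predictions", "evaluation", "sfmea", "fracas",
    "risk_assessment", "testing",
})


@dataclass(frozen=True)
class McaProgramStatus:
    software_intensive_and_mission_critical: bool  # decision point 1
    software_development_started: bool             # decision points 2-3
    in_production_or_sustainment: bool             # decision point 4
    development_activities_remaining: bool         # decision point 5
    reliability_objective_met: bool                # decision point 6
    changes_planned: bool                          # decision point 7


def relevant_mca_tasks(status: McaProgramStatus) -> frozenset:
    """Return the task labels that this reading of Section 1.0.2 marks relevant."""
    if not status.software_intensive_and_mission_critical:
        return frozenset()  # assumption: out of scope for this guidance
    if not status.software_development_started:
        # Only the risk assessment and personnel coordination apply.
        return frozenset({"risk_assessment", "personnel_coordination"})
    if not status.in_production_or_sustainment:
        return ALL_TASKS
    if status.development_activities_remaining:
        return ALL_TASKS
    if not status.reliability_objective_met:
        # Too late for predictions or SFMEA to influence the design.
        return ALL_TASKS - {"predictions", "sfmea"}
    if status.changes_planned:
        return ALL_TASKS
    return frozenset()


status = McaProgramStatus(True, True, True, False, False, False)
print(sorted(relevant_mca_tasks(status)))
```

The selected tasks still need to be tailored per Sections 1.1 to 1.9; the function only answers which tasks to consider.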

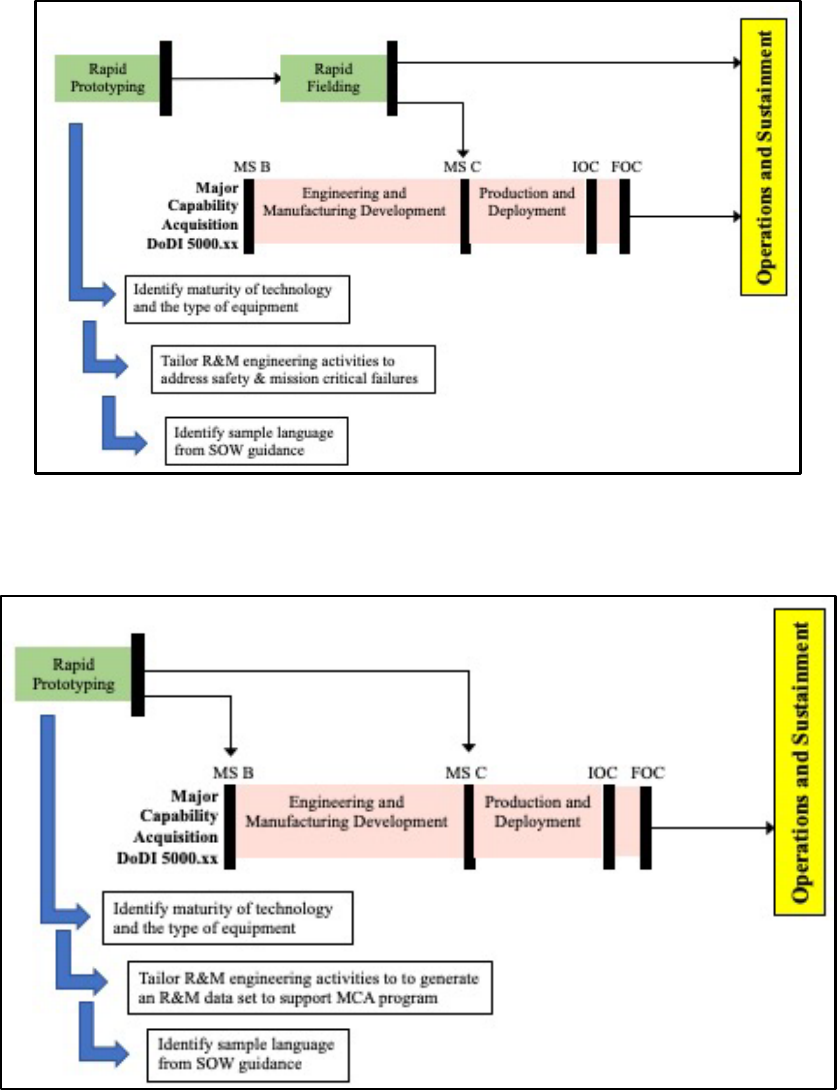

1.0.3 MTA Reliable Software Relevant Tasks Decision Tree (Figure 1-2).

The MTA decision path for reliable software starts out similarly to the MCA path: only programs performing a mission critical function for a combat, weapon, or mission system are subject to the reliable software tasks. At decision point #1 in Figure 1-2, the determination of software intensive for MTAs is the same as for MCA (refer to Section 1.0.2).

Decision point #2 in Figure 1-2 is whether the MTA will transition to an MCA. If so, then the MCA decision tree (Section 1.0.2) should be used.

Decision point #3 in Figure 1-2 is whether the MTA program is Rapid Prototyping (RP) or Rapid Fielding (RF).

If the MTA program type is RP and a direct transition to deployment is planned

(Figure 1-2, decision point #4), then several of the reliable software tasks may require

tailoring because of the lack of calendar time. See Appendix A for an illustration of this

DoD Acquisition pathway.

If the RP will transition to RF, then the tasks should be tailored as if the program is

RF. If the software development is complete (final sprint) then the remaining decisions

are similar to Figure 1-1, decision points # 5-7 (MCA Section 1.0.2). If the development

is not complete (Figure 1-2, decision point #5), then the reliable software tasks must be


tailored to fit into the five (5) year calendar time requirement for MTA. The tasks in

Sections 1.1 to 1.9 are tailored within the MTA timeframe and some tasks might be

removed if the calendar time available is particularly short. This will be discussed later

in this section.
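The MTA path described above can be sketched in the same spirit. This is an interpretation of the prose only, because Figure 1-2 is not reproduced here. The `MtaOutcome` names, the parameter names, and the treatment of a "no" at decision point #1 as "not applicable" are assumptions made for illustration.

```python
from enum import Enum


class MtaOutcome(Enum):
    NOT_APPLICABLE = "reliable software tasks do not apply"
    USE_MCA_TREE = "use the MCA decision tree (Section 1.0.2)"
    TAILOR_FOR_SHORT_SCHEDULE = "RP direct to deployment: tailor for limited calendar time"
    APPLY_MCA_POINTS_5_TO_7 = "development complete: decide as in Figure 1-1 points 5-7"
    TAILOR_TO_MTA_TIMEFRAME = "development ongoing: tailor tasks to the MTA calendar limit"


def mta_outcome(mission_critical_software: bool,
                transitions_to_mca: bool,
                path: str,
                rp_direct_to_deployment: bool,
                development_complete: bool) -> MtaOutcome:
    """Walk the MTA decision points as read from Section 1.0.3."""
    if path not in ("RP", "RF"):
        raise ValueError("path must be 'RP' or 'RF'")
    if not mission_critical_software:        # decision point 1
        return MtaOutcome.NOT_APPLICABLE
    if transitions_to_mca:                   # decision point 2
        return MtaOutcome.USE_MCA_TREE
    if path == "RP" and rp_direct_to_deployment:  # decision points 3-4
        return MtaOutcome.TAILOR_FOR_SHORT_SCHEDULE
    # An RP program that transitions to RF is treated like an RF program.
    if development_complete:
        return MtaOutcome.APPLY_MCA_POINTS_5_TO_7
    return MtaOutcome.TAILOR_TO_MTA_TIMEFRAME     # decision point 5


print(mta_outcome(True, False, "RF", False, False).value)
```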

The Defense Acquisition University definition is “A system in which software

represents the largest segment in one or more of the following criteria: system

development cost, system development risk, system functionality, or development

time.”[3]

The definition of software intensive for this document is broader than the DAU

definition. Any weapon or combat system with software is in scope for this document. If

there is any doubt, the reliability engineer should discuss the program with the software

and systems engineering counterpart.

Figure 1-2 Top Level Decision Tree for Determining Which Reliable Software

Tasks are Relevant for MTA program

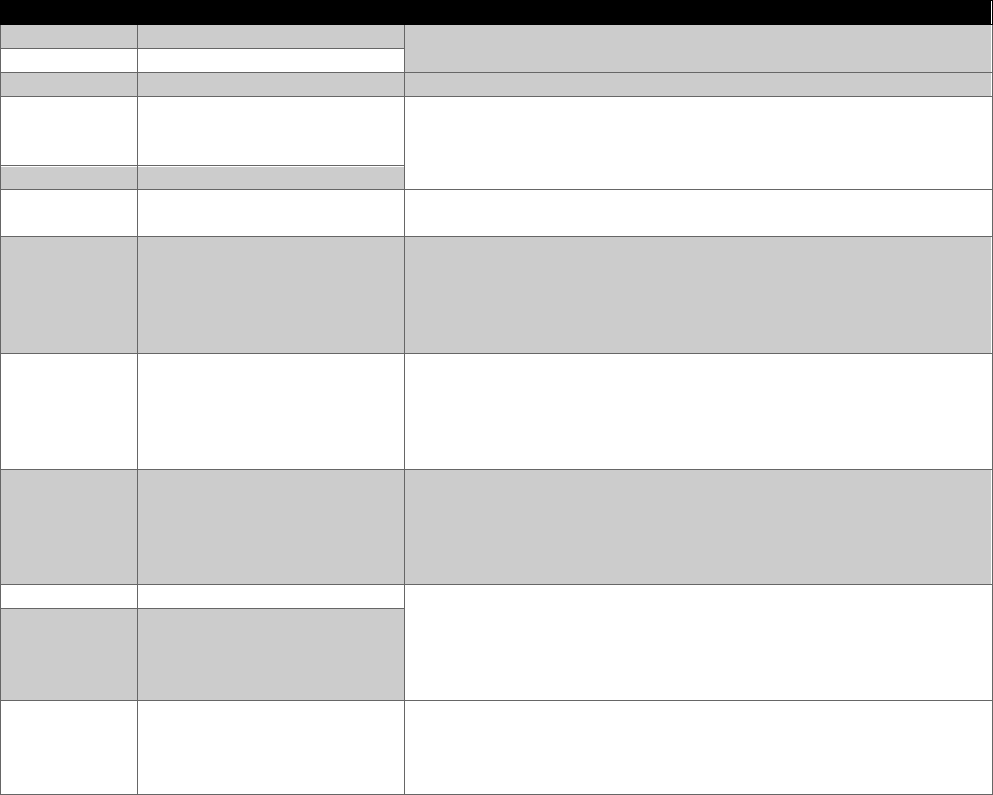

1.0.4 Level of Rigor for MCA and MTA Pathways

Table 1-2 summarizes the tailoring scheme for the Level of Rigor (LOR) for the MCA

and MTA pathways. For most of the tasks, there are minimalistic or detailed

approaches available. Depending on the phase of the program, the complexity of the

software, and other factors, the LOR can be selected. This table assumes that the

program is software intensive and has mission critical software.

[3] https://www.dau.edu/glossary/Pages/Glossary.aspx#!both|S|28508

For MTA programs that do not transition to MCA, all the reliable software tasks

should be tailored for minimal metrics or minimalistic models. However, further tailoring

may be needed due to the limited calendar time available for the tasks. The tasks marked with a √ in Table 1-2 are generally not costly and can be completed in a relatively short calendar time. The other tasks are ranked below in order of importance to MTA programs:

1. Testing for reliable software for mission critical software Line Replaceable

Units (LRUs) (Section 1.9). The best way to achieve reliable software is to test

the trajectories, boundaries, faults, data, zero values, etc. This task alone

provides the most confidence in the reliability of the mission critical software.

2. Reliable software evaluation (Section 1.5). If the software is highly unstable, this evaluation will make that clear. This evaluation will identify the additional test effort needed to make the software stable but does not guarantee that the contractor has tested or will test the inputs that are most likely to result in a software failure. This task should always be performed in addition to the testing for reliable software and not instead of it.

3. Top level SFMEA (Section 1.6). This task can identify top level failure modes

that should be considered in testing. However, without the testing for reliable

software task the tests might not be executed.

For MCA programs with limited time or funding, the above tailoring scheme can be

applied.


| Reliable Software Tasks | MCA or MTA with transition to MCA path | MTA RP path with direct transition to deployment | MTA RP transition to RF path | MTA RF path |
| --- | --- | --- | --- | --- |
| Reliable Software Program Plan | √ | √ | √ | √ |
| Inclusion of Software in System Reliability Model | Model type can be tailored to complexity of SW/HW (1) | Can be tailored for simple model (1) | Can be tailored for simple model (1) | Can be tailored for simple model (1) |
| Reliable Software Allocations | Model selected based on accuracy/availability of data (1) | Can be tailored for simple model (1) | Can be tailored for simple model (1) | Can be tailored for simple model (1) |
| Reliable Software Predictions | Select models depending on risk (2) | Either remove task or use simplest models (2) | Either remove task or use simplest models (2) | Either remove task or use simplest models (2) |
| Reliable Software Evaluation | Full or minimal metric set depending on risk (3) | Full or minimal metric set depending on risk (3) | Full or minimal metric set depending on risk (3) | Full or minimal metric set depending on risk (3) |
| Software FMEA | Tailored by risk. (4) | Tailored by risk. (4) | Tailored by risk. (4) | Tailored by risk. (4) |
| Inclusion of Software in FRACAS | √ | √ | √ | √ |
| Reliable software risk assessment | √ | √ | √ | √ |
| Reliable software testing | Tailored to apply to the most mission critical software LRUs | Tailored to apply to the most mission critical software LRUs | Tailored to apply to the most mission critical software LRUs | Tailored to apply to the most mission critical software LRUs |

Table 1-2 Tailoring for Level of Rigor for MCA and MTA Acquisition Paths

√ - Applicable anytime there is a mission critical, software intensive system.

(1) - Applies if either the reliable software predictions or reliable software evaluation is relevant.

(2) - Most useful early in the program. Not useful if the coding activities are complete.

(3) - Unless the reliability objective has been demonstrated, this task is relevant.

(4) - Most useful before code is complete. Not useful if all testing is complete.

Figure 1-3 illustrates the process for how the reliable software tasks interface with each

other.


Figure 1-3 Tailoring for Level of Rigor for MCA and MTA Acquisition Paths

1.1 Reliable Software Program Plan (RSPP) Task

The RSPP documents the contractor’s plan for executing the reliable software tasks.

The following sections provide the basis / justification for the task and tailoring the SOW

language to the Acquisition Strategy.

1.1.1 Basis / Justification

Without the RSPP, there is no means for the government to assess the contractor’s

plan for reliable software. For example, the contractor may be planning to use “subject

matter expertise” for all reliable software tasks. By having a written plan, the

government will know in advance that the contractor is taking a high-risk approach. The RSPP itself is not a cost driver; the tasks selected drive cost.

1.1.2 Tailoring the RSPP SOW Language

For maximum effectiveness, the RSPP must be integrated with the hardware

reliability plan and clearly referenced in the contractor’s Software Development Plan

(SDP). The contractor’s reliability engineers are to coordinate with the contractor’s software personnel to ensure that the RSPP section of the RPP is referenced from the SDP.

The reliability engineer must tailor the SOW language per the following steps:


Step 1: Determine which reliable software tasks are relevant for the program as per

Figures 1-1 or 1-2 and/or Table 1-2. The SOW language for the RSPP is not affected

by the development framework. The tasks selected are affected by Agile / DevSecOps.

Step 2: Modify the RSPP SOW language:

• Remove any bolded tasks from the SOW language that are deemed to be

not relevant as per the applicable decision tree.

• Remove this text <writer shall remove items as per the guidance>

• If either condition below is not true, then remove (11) site reliability engineer from the SOW language for the RSPP. Unless the weapon/system is providing a network capability, the site reliability engineer is likely to be out of scope for the program.

    • Software downtime requires immediate action by an on-site engineer.

    • The site reliability engineer is funded by the program.

• The RSPP section of the Reliability, Availability, and Maintainability Program Plan (RPP) must be explicitly referenced from the SDP to ensure that the software engineering team is aware of the reliability requirements and is working to meet them. The Data Item Description (DID) for the SDP is DI-IPSC-81427 Rev. B. This may require SOW language for the SDP.

Step 3: Merge the RSPP language with the reliability program plan language for the

hardware in the SOW.

The RSPP SOW language is as follows:

“The contractor shall provide the Government an overview of their system reliability

program that includes scope to develop reliable hardware and software, as a briefing at

the Post-Award Orientation. The reliable software program shall address: <writer shall

remove items as per the guidance> 1) inclusion of software in the reliability

model; 2) reliability allocations for software; 3) the method for predicting reliable

software; 4) demonstrate reliability curves of the software in a diverse operational

environment; 5) the method to identify and mitigate software failure modes early

in development; 6) software failure mode and defect identification, tracking and

resolution; 7) software risk management; 8) the methods for development and

testing of reliable software; 9) coordination of the reliability, test, design, systems,

software, embedded software functional areas; 10) the integration of reliable software

tasks into the software development schedule to ensure that reliability is designed in

early; and 11) site reliability engineer. The contractor shall identify all mission critical

software LRUs and functions. The contractor shall describe the planning and

implementation of reliable software activities as well as coordination with reliability, test,

design, systems, software, and embedded software. The contractor shall integrate the

reliable software effort with the overall system reliability program. The contractor shall

participate and be prepared to share any reliable software task updates during the

government working group meetings per the program integrated master schedule

Reliability & Maintainability Working Group. The contractor shall reference the RSPP in

the software development plan. The contractor shall deliver the Reliable Software Program Plan (RSPP) as part of the R&M Program Plan (RPP) per DI-SESS-81613.”


1.1.3 Tailoring the Contract Data Requirements List (CDRL) (DD Form 1423)

See Appendix C for the CDRL template. The steps for tailoring are as follows:

Step 1: Do not create a separate CDRL for software. Insert language for both the

hardware reliability and reliable software plans in the same CDRL for the R&M Program

Plan, DI-SESS-81613.

Step 2: All information related to due dates, frequency, and government approval

shown in Appendix C CDRLs are recommendations. The reliability engineer should

complete all blocks based on program-specific information. Coordinate with the

software engineering counterpart so that this deliverable coincides with the SDP.

Step 3: Coordinate with the software engineering counterpart and ensure that the

reliability engineer’s office symbol is placed into block 14 of the SDP CDRL. The DID

for the SDP is DI-IPSC-81427.

Step 4: Remove any shaded text within <>.

1.2 Inclusion of Software in System Reliability Model Task

The System Reliability Model (SRM) is a graphical depiction of the system with an

underlying analysis, such as the Markov model, Sequence Diagram, Mission model,

Reliability Block Diagram (RBD) and / or Fault Tree Analysis (FTA). Before the Government approves the initial delivery of the model, the model must include all software components in an appropriate manner, and its structure must show the relationships between the software and hardware components. The following sections

provide the basis / justification for the task and tailoring the SOW language to the

Acquisition Strategy.

1.2.1 Basis / Justification

Systems may be represented by more than one model. For example, software

operated at discrete mission times may be best represented by a mission model while

software operating continuously may be best represented by a Markov model. The

analysis identifies critical weaknesses in the system design which impact reliable

software. The following lessons were learned on programs in which the contractor was not required to explicitly list the software LRUs in the system reliability model. On such programs, reliable software is often disregarded or under-resourced, or mission reliability testing is inadequate, resulting in failure to achieve mission reliability:

• Software is entirely missing from the SRM.

• Software can be partially missing. For instance, the reused or commercial

off the shelf (COTS) software might not be represented on the SRM.


• Software is represented on the SRM but represented as one big block. With

today’s exceptionally large and complex systems, the software is almost never

architected in one big LRU. Some software LRUs rarely need updating while

others are continually evolving with capabilities. By designing the system with

independent software LRUs, the software organization can update one LRU

without affecting the other software capabilities and functionality. The reliability

engineers often represent several software LRUs as one reliability block without

consideration of varying duty cycles or interactions. The system models provide

for a means to model the software LRUs more closely with a true operational

profile.
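To illustrate why lumping several software LRUs into one block can mislead, the sketch below compares a single-block estimate with a per-LRU series model that accounts for duty cycle. It is a minimal sketch under stated assumptions that are not part of this guidance: constant failure rates (exponential model), independent LRUs in series, and invented failure rates and duty cycles. The function names are hypothetical.

```python
import math


def lru_reliability(failure_rate_per_hour: float,
                    duty_cycle: float,
                    mission_hours: float) -> float:
    """Reliability of one LRU that only runs for duty_cycle * mission_hours."""
    operating_hours = duty_cycle * mission_hours
    return math.exp(-failure_rate_per_hour * operating_hours)


def series_reliability(lrus, mission_hours: float) -> float:
    """Product of LRU reliabilities; lrus is a list of (failure_rate, duty_cycle)."""
    reliability = 1.0
    for failure_rate, duty_cycle in lrus:
        reliability *= lru_reliability(failure_rate, duty_cycle, mission_hours)
    return reliability


# Invented example: one LRU runs continuously, the other half of the mission.
software_lrus = [(0.001, 1.0), (0.002, 0.5)]
mission_hours = 10.0

per_lru = series_reliability(software_lrus, mission_hours)
# "One big block": sum the failure rates and assume everything runs all the time.
one_block = lru_reliability(0.001 + 0.002, 1.0, mission_hours)

print(f"per-LRU model:   {per_lru:.4f}")
print(f"one-block model: {one_block:.4f}")
```

In this invented case the one-block model is pessimistic because it ignores the partial duty cycle. Interactions between LRUs, which the series assumption ignores, can push the error in the other direction.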

Including software on the system reliability model requires the government reliability

engineer to determine the Figure of Merit (FOM) in accordance with Section 1.2.2. This

allows the contractor to:

• Understand the interaction of software LRUs with the rest of the components in

the system.

• Ensure software engineering develops block diagrams as part of software

architecture. Most of the mathematical effort is conducted in the reliable software

predictions and reliable software evaluation tasks. Hence, this task, excluding

the work required to assign quantitative values, is a relatively small cost. Various

automated tools are used for system reliability modeling.

• Assess the reliability of each software LRU either via the predictions or the

reliability evaluation curves.

Cost / Schedule Impact: The software LRUs should be defined at the highest level for the contractor to propose. Therefore, putting the LRUs into the system reliability model should result in minimal to no cost or schedule impact for this task.

1.2.2 Identifying Specific FOM

If Figures 1-1 or 1-2 and/or Table 1-2 indicate that the reliable software model task is relevant, the government reliability engineer needs to identify the FOM in the SOW language. For example, if availability and Mean Time Between Essential Function Failures (MTBEFF) are required to be measured, then place those metrics into the SOW at the location identified by < >. FOM examples are as follows:

• “Reliability” is the probability of success over some specific mission time. This

measure is applicable for any software involved with a “mission.” This would

include missiles, aircraft, landing gear, vehicles, etc. However, if the mission

is an extended duration, “availability” typically makes more sense. Example:

Refrigerators are always on. Dishwashers are only on for discrete time

periods (missions) per day.

• “Availability” is appropriate for systems that are on for an extended duration,

such as security systems, networks, radar, or any system that does continuous


monitoring. Availability measures the downtime for preventive and restorative

actions. To predict software availability, the restore time must be predicted.

• “Mean Time to SoftWare Restore (MTSWR)” is the metric to measure software

downtime. This includes time to: 1) restart, 2) reboot, 3) workaround, 4)

reinstall software, 5) downgrade software, and/or 6) wait for a software

upgrade. These are listed in relative order of time required. Not all software

failures can be addressed with a restart or reboot. Some may need to be

avoided with a workaround. In a few rare cases, some issues are resolved by

reinstalling the software. In cases in which a new version of software has

defects not seen in prior releases, the software might have to be downgraded.

In some cases, in which a software failure cannot be avoided or worked around and affects the mission, the software might not be used until the software engineering team fixes the problems and deploys an upgrade. Mean Time to Repair (MTTR) does not apply to software because software does not wear out.

• “Mean Time Between System Abort (MTBSA), MTBEFF, etc. and failure rate”

can be measured for any software system.

• “Total predicted software defects” is a valid metric for contractor Development

Tests (DT) and/or field operation. While the predicted software defects cannot

necessarily be merged with hardware predictions, the software defect

prediction can be a useful indicator for validating the other predictions. If the

contractor’s predictions for defects are unreasonable (e.g., very close to 0),

then the contractor’s predictions for failure rate and availability will also be

unreasonable.
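As an illustration of how these figures of merit relate numerically, the following minimal Python sketch computes an MTSWR as a weighted mean of restore actions, a steady-state availability, and a mission reliability. The constant failure rate (exponential) assumption, the function names, and all input values are assumptions made for this example and are not taken from the guidance or from IEEE 1633.

```python
import math


def mean_time_to_sw_restore(actions):
    """Weighted mean restore time in hours.

    `actions` is a list of (fraction_of_failures, restore_hours) pairs.
    The fractions are assumed to sum to 1.
    """
    return sum(fraction * hours for fraction, hours in actions)


def availability(mtbeff_hours, mtswr_hours):
    """Steady-state availability: uptime / (uptime + restore time)."""
    return mtbeff_hours / (mtbeff_hours + mtswr_hours)


def mission_reliability(mtbsa_hours, mission_hours):
    """Probability of no system abort during the mission,
    assuming a constant failure rate (exponential model)."""
    return math.exp(-mission_hours / mtbsa_hours)


# Hypothetical inputs, chosen only to exercise the formulas:
# 70% of failures cleared by restart, 20% by reboot, 10% by workaround.
restore_mix = [(0.70, 0.05), (0.20, 0.25), (0.10, 1.00)]
mtswr = mean_time_to_sw_restore(restore_mix)
avail = availability(mtbeff_hours=200.0, mtswr_hours=mtswr)
rel = mission_reliability(mtbsa_hours=500.0, mission_hours=4.0)

print(f"MTSWR (hours): {mtswr:.3f}")
print(f"Availability: {avail:.5f}")
print(f"Mission reliability: {rel:.5f}")
```

The sketch shows why availability cannot be predicted without a restore time: the MTSWR appears directly in the denominator.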

1.2.3 Tailoring the SOW Language

Step 1: If the result of the decision tree in Figures 1-1 and 1-2 and/or Table 1-2 is

that software does not need to be included in the system reliability model, then omit

the SOW language entirely. Otherwise, the reliability engineer must tailor the

SOW language per the following steps:

Step 2: Modify the SOW language by removing any bolded tasks from the SOW

language that are deemed to be not relevant as per the applicable decision tree.

Step 3: Determine the reliability Figure of Merit as per section 1.2.2 and insert it in

place of <Insert the selected figures of merit here as per guidance> in the SOW.

Step 4: If any of the conditions below are true, the more complex models (such as event

sequence diagrams, fault trees, Markov models, and mission models) are more appropriate

than simpler models such as the reliability block diagram. In that case, make sure to

include all the choices in this statement: “3) generate event sequence diagrams, fault

trees, Markov models, reliability block diagram and/or mission models.”

• Complex interactions between hardware and software or software and software

• The software LRUs are not up all the time.

• There is redundancy in the hardware.

• There is N version programming (This is essentially redundant software which is not

very common due to the very high cost.)

• Highly fault tolerant software

• The system and software are difficult to represent without a system model

Step 5: SOW Language is as follows:

“The contractor shall 1) incorporate all software Line Replaceable Units (LRUs)

including deployed custom software, commercial off the shelf (COTS), Free Open

Source Software (FOSS), embedded software as defined by the IEEE 1633 2016

clause 5.1.1.1 into the overall System Reliability Model (SRM) IAW DI-SESS-81496; 2)

Describe how the <Insert the selected figures of merit here as per guidance> will be

documented for comparison against system requirements; 3) generate event sequence

diagrams, fault trees, Markov models, reliability block diagram and/or mission models to

identify mission critical SW. IEEE 1633 2016 clause 5.3.4 and System and Software

Reliability Assurance Notebook FSC-RELI chapters 4 and 5 provide guidance.

The Software components identified in the SRM shall be traceable and consistent

with the software components identified in the software design. System reliability

models shall explicitly identify software LRUs. The SRM shall be used to: 1) generate

and update the reliable software allocations, and 2) identify critical software items and

additional design or testing activities required to achieve the reliable software

requirements. Critical items are defined as those items whose inoperability impacts

mission completion, essential functions per the Failure Definition Scoring Criteria

(FDSC), Preliminary Hazards Analysis (PHA), Functional Hazards Analysis (FHA), or

items whose failure rates contribute significantly to the overall system degradation. The

contractor shall keep the models up to date and be prepared to share any updates

during working group meetings.”

1.2.4 Tailoring the CDRL

See Appendix C for the CDRL template. Steps for tailoring are as follows:

Step 1: Do not create a separate CDRL for software. Insert language for both the

hardware and software system reliability model in the same CDRL for Reliability and

Maintainability (R&M) Block Diagrams and Mathematical Models Report, DI-SESS-81496.

Step 2: All information related to due dates, frequency, and government approval

shown in Appendix C CDRLs are recommendations. The reliability engineer should

complete all blocks based on program-specific information.

Step 3: Remove all shaded text within <>.

1.3 Reliable Software Allocations Task

This analysis ensures that an appropriate portion of the system reliability requirement is

allocated to the software LRUs. Allocation is an ongoing process that

goes hand in hand with the reliability modeling activity.

Allocation can be made based on several different techniques as illustrated in Table

1-3. IEEE 1633 Recommended Practices for Software Reliability, 2016 clauses 5.3.5

and 5.3.8 discuss several methods for allocation. In addition, the System and Software

Reliability Assurance Notebook (footnote 4) section 6.3 discusses software reliability

allocation.

The methods in Table 1-3 are listed in order of preference. Historical data can be

most accurate but is often difficult to acquire and must be from a recently developed

similar system. Test data is relatively accurate if it is from a recent operational test.

Bottom up allocations employ predictive models to establish the allocation so the

accuracy depends on the models selected. Allocating by relative duty cycle is

applicable if there are varying duty cycles among the components. This allows

components that are on the most to receive a proportional allocation. Allocating by

research and development cost or by number of components are the least accurate

methods but are often more accurate than subject matter expert guess.

This task applies to software projects using any development framework. The framework

has no bearing on how each of the software LRUs is allocated its fair share of the system

reliability requirement. The timing of the deliverables may be affected by the

development framework. Note that IEEE 1633 2016 has guidance in clause 4.4 for the

reliable software tasks for agile, incremental and waterfall deliveries. For agile

development, the software specifications are provided in “user stories” as opposed to

“software requirements specifications.”

The work required for the allocations is driven by the model selected. Allocation by

cost and/or the number of LRUs are the least expensive but also least accurate.

However, either of these quick and easy approaches is more accurate than subject

matter expertise. The downside of the allocation by number of LRUs is that it is not

accurate if the reliability engineer assumes that all the software is in one big LRU and if

the software LRUs are significantly bigger in functionality than the hardware LRUs.

4 https://www.cs.colostate.edu/~cs530/rh/secs1-3.pdf

| Allocation Method | Preference |
| --- | --- |
| Historical data which indicates X% of the fielded failures are due to software. | Usually most accurate if the data is recent and the historical data is from a similar system with similar mission. While the accuracy of historical data is typically the best, it’s also difficult to collect for DoD systems. |
| Recent testing data which indicates X% of testing failures are due to the software. | Relatively accurate if the software is being tested in an operational environment (with the target hardware). |
| Bottom-up allocation – All system configuration items undergo reliability assessment. The hardware and software configuration items are applied to the SRM. The allocation for software is simply the predicted failure rate over total of all predicted failure rates. Even if the assessment does not meet the system requirement, the allocation is still the relative contribution of the prediction to the system prediction. | The accuracy depends on the models used for the bottom-up predictions. More inputs to the model usually mean more accuracy if the model is used correctly and inputs are correct. |
| Allocation by duty cycle. The % allocated to SW depends on the duty cycle of each of the components in the system. | This model is useful when there is varying duty cycle of the system components. Accuracy depends on the accuracy of the prediction model discussed in the reliable software prediction task. If historical data is used, this method is typically accurate. |
| Allocation by Research and Development cost. The % of R&D engineering $ spent on SW versus % R&D engineering $ spent on HW | Cost is a good indicator of reliable software but only if the cost is accurately predicted. If the cost of developing the software components is in the same range as the cost of the hardware R&D, then the software contribution to failure rate is likely to be similar to the hardware. |
| Allocation by number of Configuration Items. Count the hardware LRUs and the software LRUs. Allocation is based on relative number of LRUs. | Not as accurate as other methods. There is much variation on how much code comprises an LRU. If there are many small LRUs, this method can over-allocate the software or hardware. If there is one large software LRU, this method can under-allocate the software portion. |

Table 1-3 Allocation methods for software
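The bottom-up and LRU-count rows of Table 1-3 reduce to simple ratios. The Python sketch below illustrates both, using hypothetical failure rates, LRU counts, and a hypothetical system requirement; the function names are invented for this example and do not come from IEEE 1633.

```python
def allocate_bottom_up(predicted_rates):
    """Share of the system requirement for each item: its predicted
    failure rate over the total of all predicted failure rates."""
    total = sum(predicted_rates.values())
    return {name: rate / total for name, rate in predicted_rates.items()}


def allocate_by_lru_count(hardware_lrus, software_lrus):
    """Software share when allocating by relative number of LRUs."""
    return software_lrus / (hardware_lrus + software_lrus)


# Hypothetical predictions in failures per hour.
predicted = {"hardware": 0.002, "software": 0.003}
shares = allocate_bottom_up(predicted)

# Hypothetical system requirement: MTBEFF of 250 hours.
required_system_rate = 1.0 / 250.0
software_allocated_rate = shares["software"] * required_system_rate
software_allocated_mtbeff = 1.0 / software_allocated_rate

count_share = allocate_by_lru_count(hardware_lrus=8, software_lrus=2)

print(f"Bottom-up software share: {shares['software']:.2f}")
print(f"Software allocated MTBEFF (hours): {software_allocated_mtbeff:.1f}")
print(f"Software share by LRU count: {count_share:.2f}")
```

With these made-up numbers the two methods disagree sharply, which mirrors the table's caution that counting LRUs can under-allocate the software portion when the software LRUs are large.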

The bottom-up allocation requires using a prediction model. If the SOW requires a

reliable software prediction model, then there is no additional cost in using that

approach. The historical data and recent test data approach are not expensive but are

only useful if the contractor has the historical data. The cost of allocations by duty cycle

depends on the underlying model selected for the predictions.

The following sections provide the basis / justification for the task and tailoring the

SOW language to the Acquisition Strategy.

1.3.1. Basis / Justification

Reliable software is often disregarded or under-resourced, or receives inadequate mission

reliability testing, resulting in failure to achieve mission reliability.

• Contractors allocate too little if any of the system allocation to the software even

though software is a considerable part of most military weapon systems.

• Contractors may allocate part of the system objective to software but have no

means for justifying the allocation - i.e., the “leftover” method in which the

software gets whatever is left over from the hardware prediction.

• Contractors allocate the reliability objective using the “big blob” approach, which

makes it difficult to track progress when there are multiple software LRUs.

Reliability engineers often assume that the software gets one big allocation of the

system reliability. Today’s large complex systems almost never have all the software

code in one LRU. One would not allocate all the hardware reliability to exactly one LRU

so this should not be done for software either. Software LRUs may/will be developed by

different teams within the same organization, or different organizations. Some LRUs will

be bigger than others and hence require a larger portion of the allocation. Some LRUs

will execute more often than other LRUs. If the software organization has one big

number to meet for the software, the software organization cannot incrementally work

towards the requirement. But if the allocation is apportioned to each LRU, then the LRU

can be designed to and tested against the allocation. Table 1-3 is a summary of

some of the industry methods employed for reliable software allocations. Methods that

employ recent historical data are preferred.
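To make the idea of apportioning the software allocation to each LRU concrete, the sketch below splits a software failure-rate allocation across LRUs in proportion to size multiplied by duty cycle. This weighting scheme, the LRU names, and the numbers are hypothetical and serve only to illustrate that larger and more frequently executing LRUs receive a larger portion; a program would substitute whichever Table 1-3 method it has selected.

```python
def apportion_to_lrus(software_rate, lrus):
    """Split a software failure-rate allocation across LRUs.

    `lrus` maps an LRU name to (size_ksloc, duty_cycle). The weight
    size * duty_cycle is an assumption made for this illustration.
    """
    weights = {name: size * duty for name, (size, duty) in lrus.items()}
    total = sum(weights.values())
    return {name: software_rate * w / total for name, w in weights.items()}


# Hypothetical software LRUs: (size in KSLOC, fraction of time executing).
lrus = {
    "navigation": (100.0, 1.0),
    "display": (50.0, 0.5),
    "diagnostics": (25.0, 1.0),
}

# Hypothetical software allocation in failures per hour.
allocation = apportion_to_lrus(software_rate=0.003, lrus=lrus)

for name, rate in allocation.items():
    print(f"{name}: {rate:.4f} failures/hour (MTBF {1.0 / rate:.0f} hours)")
```

Each LRU team then has its own number to design to and test against, and the per-LRU rates still sum to the overall software allocation.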

1.3.2 Tailoring the SOW language

Step 1: If the result of the decision tree in Figures 1-1 and 1-2 and/or Table 1-2 is

that software does not need to be included in the software allocation task, then omit

the SOW language entirely. Otherwise, the reliability engineer must tailor the

SOW language per the following steps:

Step 2: Modify the SOW language by removing any bolded tasks from the SOW

language that are deemed to be not relevant as per the applicable decision tree.

Step 3: Identify and tailor <Identify any components that are out of scope such

as GFE>.

• Identify any boundaries that do not need to be included in the allocations. For

example, Government Furnished Software (GFS) may be included/excluded in

the allocations or interfaces to GFS.

• Replace the above text with any out of scope components.

• If no components are out of scope, remove the above text

Step 4: Identify any test data and tailor <Use of recent test data is acceptable>

• If this is an MTA program with direct transition to rapid fielding then the contractor

can be advised that using recent test data is acceptable as shown below.

• If recent test data is acceptable then unbold the below text and remove <>

• Otherwise delete the below text.

Step 5: SOW Language is as follows:

“The Contractor shall allocate the system reliability requirement to software LRUs

using IEEE 1633 2016 clause 5.3.8 and Table 28 as a guide for allocation methods.

<Identify any components that are out of scope such as GFE>. <Use of recent test

data is acceptable>. The results of the reliable software allocation shall be

incorporated into the system reliability model. The contractor’s Software Requirements

Specification (SRS) or user story shall include a statement of the numerical reliability

goals (consistent with the system Figure of Merit (FOM) for hardware and system) for

each identified software LRU. For an Agile/Continuous Integration (CI)/Continuous

Delivery (CD) framework, IEEE 1633 2016 clause 4.4 and Table 16 provide

guidance. The contractor shall keep the allocations up to date and be prepared to

share any updates during working group meetings. The contractor shall deliver the

allocated reliability of the software of each software LRU as part of the Reliability and

Maintainability (R&M) Report IAW DI-SESS-81968.”

Note: The allocations may change for software whenever the predictions or

reliability evaluations change. The reliable software predictions drive the allocations.

Early in the program the size estimations may be volatile and affect the allocations. The

allocations should be revisited by the contractor any time there is a major change in the

size of the software. However, the results do not need to be formally delivered to the

Government except at formal milestones. The contractor should keep the models up to

date and be prepared to share any updates during working group meetings.

1.3.3 Tailoring the CDRLs

See Appendix C for the CDRL template. Steps for tailoring are as follows:

Step 1: Do not create a separate CDRL for software. Insert language for both the

hardware and software system reliability allocation in the same CDRL for Reliability and

Maintainability (R&M) Allocation Report, DI-SESS-81968.

Step 2: All information related to due dates, frequency, and government approval

shown in Appendix C CDRLs are recommendations. The reliability engineer should

complete all blocks based on program-specific information.

Step 3: Remove all shaded text within <>.

Step 4: Ensure that the reliability engineer’s office code is added to block 14 of the

CDRL for the SRS (DI-IPSC-81433).

1.4 Reliable Software Prediction Task

This task is the prediction of the reliability of the software through comparable

systems software/items, industry models based on historical data of similar systems, or

historical reliability from the same system. A “prediction” is conducted early in the

development portion of the program. IEEE 1633 Recommended Practices for Software

Reliability, 2016 clauses 5.3.2 and 6.2 discuss the reliable software predictions early

in development. This task should be used in conjunction with the SSRM and the

reliable software allocation to quantify the reliable software metrics for each software

LRU and to identify low, medium, and high-risk critical items.

The frequency of the predictions should be the major milestones or annually. The

predicted reliability of the software can and will change more rapidly than the predictions

for hardware for the simple reason that predictions are primarily driven by how much

software is scoped. Software organizations are historically prone to underestimating the

amount of software to be developed. The predictions should be revisited by the

contractor every 6-12 months, at every milestone, or whenever it is demonstrated that

the allocated reliability objective is not being met or whenever there is an ECP. This

frequency applies whether the software is developed in an agile framework or a

waterfall framework. While the contractor should keep the predictions up to date and

make available during reliability working group meetings, the formal report to the

Government should be made at major milestones.

The following sections provide the basis / justification for the task and tailoring

the SOW language to the Acquisition Strategy.

1.4.1. Basis / Justification

Reliable software is often disregarded or under-resourced, or receives inadequate mission

reliability testing, resulting in failure to achieve mission reliability.

• Contractors assume that the reliability of the software = 1 or failure rate = 0.

• Contractor assumes that the reliability of the software is part of the hardware

reliability prediction.

• Contractor uses models that were developed more than 20 years ago.

• Contractor uses subject matter expertise which is historically the least accurate

method.

A common myth is that time to failure for software cannot be predicted. The reason for

this myth is that reliability engineers are trying to predict the time between occurrences

of the same failure mode. Software does not wear out. For software, time-to-failure

predictions predict the time between different and previously unknown failure modes. This is

opposed to predicting the time between the same failure occurring repeatedly.

Predicting the time between occurrences of the same failure mode only has value when that

failure mode is related to a hardware failure or resource usage. For example, one can

estimate the time it takes for a hard drive to run out of space because the software was

not designed to overwrite or offload the log files once the drive fills up. For all other

failure modes, the failure occurs based on the mission profile and inputs.

Example: A Mean Time to Failure (MTTF) of 100 hours for software means that a previously

undetected software failure is expected, on average, within the next 100 hours. Software

does not wear out. The MTTF means the time to the next failure due to a different root cause or

defect. Once a software failure occurs, the root cause of the failure (the defect) will

either be corrected by software engineering or avoided by the user until it can be

corrected. MTTF provides no real value to a maintenance engineer because the

maintainer has no idea as to where the software failure will be and the maintainer is not

the person who will ultimately remove the underlying defect when it does manifest into a

failure. However, the prediction does provide value to the software organization

responsible for maintaining the software. The software organization can schedule

software engineering maintenance effort based on the predicted failures per time unit to

ensure that the technical debt (unresolved defects) does not pile up.
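As a rough illustration of how a software organization might turn a predicted MTTF into a maintenance plan, the sketch below estimates the number of new failures expected in a period of fleet usage and the engineering effort needed to keep up. The fleet size, usage hours, and effort per fix are hypothetical values, and the linear estimate assumes a roughly constant failure rate over the period.

```python
def expected_new_failures(fleet_usage_hours, mttf_hours):
    """Expected count of new (previously unseen) failures in the period,
    assuming a roughly constant failure rate."""
    return fleet_usage_hours / mttf_hours


def maintenance_effort_hours(expected_failures, hours_per_fix):
    """Engineering hours needed to correct the expected failures."""
    return expected_failures * hours_per_fix


# Hypothetical fleet: 10 systems, each running the software 50 hours a month.
monthly_usage = 10 * 50.0
failures_per_month = expected_new_failures(monthly_usage, mttf_hours=100.0)

# Hypothetical average of 40 engineering hours to correct one defect.
effort = maintenance_effort_hours(failures_per_month, hours_per_fix=40.0)

print(f"Expected new failures per month: {failures_per_month:.1f}")
print(f"Maintenance effort per month (hours): {effort:.0f}")
```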

Software can’t fail if it’s not running. Hence, software predictions are not a function

of calendar time. The key parameters that affect reliable software are:

• Total number of inherent defects in the software. This is a function of the total

amount of software and the development practices.

o The amount of software – bigger software systems will have more inherent

defects

o Development factors

▪ The level of rigor by the software development team with regards to

requirements, design, code, unit level, integration level, system

level testing

▪ Software planning, execution, and project management

▪ Defect reduction techniques

▪ Other risks associated with the software such as whether the

software is for a relatively new weapon, availability of software

engineers experienced with the weapon, etc.

• The amount of usage time

• The degree to which the software is exercised in a real environment

Since the amount of software is a key parameter and the software does not fail as

a function of calendar time, reliable software is predicted by determining / estimating

the following:

• Total defects to escape into operation

• Usage time

• Rate at which inherent defects will expose themselves as failures (growth rate)

• MTBEFF as a function of defects, usage time and growth rate

• MTBSA by calibrating the MTBEFF by the percentage of EFFs that are

historically system aborts.

• MTSWR is used to predict availability.

• Probability of failure as a function of the MTBSA and the known mission time
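The chain of estimates listed above can be sketched in a few lines of Python. The exponential exposure model used here (defects surfacing at a decaying rate over a growth period), the parameter names, and every input value are assumptions chosen for illustration; the models in IEEE 1633 2016 clause 6.2 should be consulted for the actual prediction methods.

```python
import math


def defects_exposed(total_defects, growth_rate, growth_months, month):
    """Defects expected to surface as failures during `month` (1-based),
    under an assumed exponential exposure model."""
    start = math.exp(-growth_rate * (month - 1) / growth_months)
    end = math.exp(-growth_rate * month / growth_months)
    return total_defects * (start - end)


def predict(total_defects, growth_rate, growth_months, month,
            usage_hours, abort_fraction, mtswr_hours, mission_hours):
    """Derive the figures of merit for one month of usage."""
    exposed = defects_exposed(total_defects, growth_rate, growth_months, month)
    mtbeff = usage_hours / exposed           # hours between essential function failures
    mtbsa = mtbeff / abort_fraction          # only a fraction of EFFs are system aborts
    avail = mtbeff / (mtbeff + mtswr_hours)  # restore time drives availability
    p_fail = 1.0 - math.exp(-mission_hours / mtbsa)
    return {"exposed": exposed, "mtbeff": mtbeff, "mtbsa": mtbsa,
            "availability": avail, "p_mission_failure": p_fail}


# Hypothetical inputs for the first month after deployment.
result = predict(total_defects=100, growth_rate=4.5, growth_months=48, month=1,
                 usage_hours=200.0, abort_fraction=0.10,
                 mtswr_hours=0.25, mission_hours=4.0)

for name, value in result.items():
    print(f"{name}: {value:.4f}")
```

Because the exposed defect count scales with the total defect estimate, doubling the predicted software size (and hence defects) halves the predicted MTBEFF, which is why size estimates dominate the prediction.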

The models shown in Table 1-4 are methods for predicting either the defect density

or defects in the reliability prediction models. The techniques range from simple to

complex. Typically, the models with more inputs are more accurate than models with

fewer inputs if the inputs to the model are correct.

| Prediction Method | Advantages | Disadvantages |
| --- | --- | --- |
| Historical data from similar systems | Usually, the most accurate when calibrated for any differences in mission or development practices | Many organizations either do not have any or do not have processes to collect it |
| Detailed assessment surveys with several input or assessment areas as per the IEEE 1633 | Next to historical data, the detailed assessment surveys are most accurate and based on historical data from real software programs in which the actual reliability as well as the development practices are known. Accuracy depends on 1) number of questions, 2) ability for organization to answer all questions accurately, and 3) the age of the model (Models > 20 years old are generally not accurate). | Requires contractor’s software and reliability people coordinated activities. Time to complete assessment depends on the number of questions and if the reliability engineer can get the answers from software engineering. |
| Rayleigh model | When based on historical data such as QSM’s SLIM (footnote 5), these models are relatively accurate. | Requires contractor’s software and reliability people coordinated activities. |
| Weibull analysis | Generalization of Rayleigh model; based on program’s own historical data, not that of comparable systems. Yields more accurate forecasts in context. Defect data often readily available. Widely recognized and accepted. | Forecasts not reliable until >60% of defects discovered. Not reliable in early development stages. Requires access to defect data. |
| Simple look up tables based on application type or CMMi® | Quick and easy | The least accurate of the other methods shown above but significantly more accurate than subject matter estimates |

Table 1-4 Summary of prediction models for software
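The relationship between the Rayleigh and Weibull rows can be shown with a short Python sketch. It uses the standard Weibull cumulative distribution for the fraction of defects discovered by a given time; a shape of 2 gives the Rayleigh case. The scale value, the total defect count, and the helper names are hypothetical, and the 60% check simply encodes the rule of thumb stated in the table.

```python
import math


def fraction_discovered(t, scale, shape=2.0):
    """Weibull cumulative fraction of defects discovered by time t.
    shape = 2 corresponds to the Rayleigh model."""
    return 1.0 - math.exp(-((t / scale) ** shape))


def peak_discovery_time(scale, shape=2.0):
    """Time at which the discovery rate peaks (Weibull mode, shape > 1)."""
    return scale * ((shape - 1.0) / shape) ** (1.0 / shape)


def forecast_is_usable(t, scale, shape=2.0, threshold=0.60):
    """Rule of thumb from the table: wait until more than 60% is discovered."""
    return fraction_discovered(t, scale, shape) > threshold


# Hypothetical program: 120 total defects, scale of 100 usage hours.
total_defects = 120
scale_hours = 100.0

found_by_100 = total_defects * fraction_discovered(100.0, scale_hours)
peak = peak_discovery_time(scale_hours)

print(f"Defects discovered by 100 hours: {found_by_100:.1f}")
print(f"Discovery rate peaks at about {peak:.1f} hours")
print(f"Forecast usable at 50 hours: {forecast_is_usable(50.0, scale_hours)}")
print(f"Forecast usable at 100 hours: {forecast_is_usable(100.0, scale_hours)}")
```

The sketch also shows why these forecasts are weak early in development: before the peak, only a small fraction of the defects has been observed, so the fitted curve is poorly constrained.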

This task applies to software projects using any development framework. While the

reliability of the software may be affected by agile development methods, the steps for

assessing the software LRU predictions are not affected by the software development

framework. The timing of the deliverables may be affected by the development

framework and is discussed in the guidance for the CDRL/1423. Note that IEEE 1633

2016 has guidance in clause 4.4, Tables 16 and 23, and clause 5.3.2.4 for the reliable

software tasks for agile, incremental and waterfall deliveries. Clause F.3.3 shows an

example of how predictions are applied in an agile framework.

5 Quantitative Software Management Software Life Cycle Model Management Suite

The cost tailoring for this task depends on the degree of software in the system and

the risk level of that software in terms of stability. Stable programs which are having

relatively small or minor software upgrades are at less risk than a brand-new major

program. This task is relatively inexpensive as there are several documented ways to

predict and assess the reliable software using IEEE 1633 2016. Even with the low

relative cost, some models require fewer inputs than others and less work by the

contractor’s reliability engineers to use the model. The government reliability engineer

may specifically allow for the models with fewer inputs if subject matter expertise is not

employed.

1.4.2 Tailoring the SOW Language

Step 1: If the result of the decision tree in Figures 1-1 and 1-2 and/or Table 1-2 is

that software does not need to be included in the system reliability prediction, then

omit the SOW language entirely. Otherwise, the reliability engineer must tailor the

SOW language per the following steps:

Step 2: The only model that is not acceptable for a prediction for any program is

“subject matter expertise.” The SOW language is as follows:

“The contractor shall predict the reliability of the software of each software LRU.

The contractor shall identify the method and justification for each prediction. The

predictive models discussed in IEEE 1633 2016 clauses 5.3.2, 6.2 and B.2, or historical

data from similar systems, are acceptable. Predictions based on subject matter expertise

shall not be used. The contractor shall update the reliable software predictions whenever size

estimations or other factors change and make the updates available to reliability

working groups. The contractor shall conduct reliable software predictions during

development through to testing. IEEE 1633 2016 Tables 16, 23, and clause 5.3.2.4

provide guidance for how the predictions are conducted in an agile/CI/CD framework.

The contractor shall deliver the predicted reliability of the software of each software LRU

as part of the Reliability and Maintainability (R&M) Report IAW DI-SESS-81497.”

1.4.3 Tailoring the CDRL

See Appendix C for the CDRL template. Steps for tailoring are as follows:

Step 1: Do not create a separate CDRL for software. Insert language for both the

hardware and software system reliability predictions in the same CDRL for Reliability

and Maintainability (R&M) Prediction Report, DI-SESS-81497.

Step 2: All information related to due dates, frequency, and government approval

shown in Appendix C CDRLs are recommendations. The reliability engineer should

complete all blocks based on program-specific information.

Step 3: Remove all shaded text within <>.

1.5 Reliable Software Evaluation Task

Reliability growth is the positive improvement in a reliability metric over a period of

time due to the implementation of corrective actions. For software, reliability

improvement is a function of:

• Amount of test hours with no new features added to the software system.

• The stability of the reused and off the shelf software components

• The number of installed sites (the number of weapons deployed) during reliability

growth - more installed sites and end users means faster growth while fewer

installed sites usually mean less rapid growth

• Implementation of corrective actions, fix effectiveness, and management

attention.

The Software Reliability Evaluation should measure the:

• Defect discovery due to software failures (increasing, peaking, or decreasing or

some combination)

• Actual reliability of software tracked against reliable software goals

• Capability drops and expected effect on reliability

• Degradation due to test environment, scalability, etc.

This task applies to software projects using any development framework. While the

actual reliability expected for Agile development may differ from that for Waterfall

development, the steps for tracking reliability are the same regardless of the development

framework.

The following sections provide the basis / justification for the task and tailoring the

SOW language to the Acquisition Strategy.

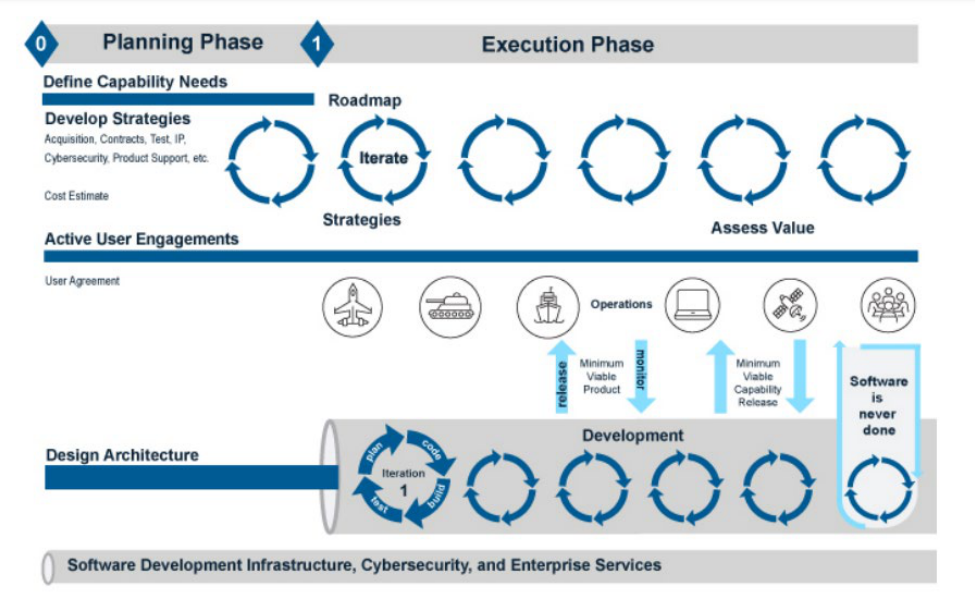

The reliable software evaluation is conducted during contractor testing as well as

Government testing. The below Figure 1-4 illustrates the agile development process.

The testing is conducted iteratively. The circles represent an iteration of development.

Within each circle is a testing activity. The software reliability is evaluated during each

testing activity of each iteration.

Figure 1-4 Agile Software Development

1.5.1 Basis / Justification

Reliable software is often disregarded or under-resourced, or receives inadequate mission

reliability testing, resulting in failure to achieve mission reliability.

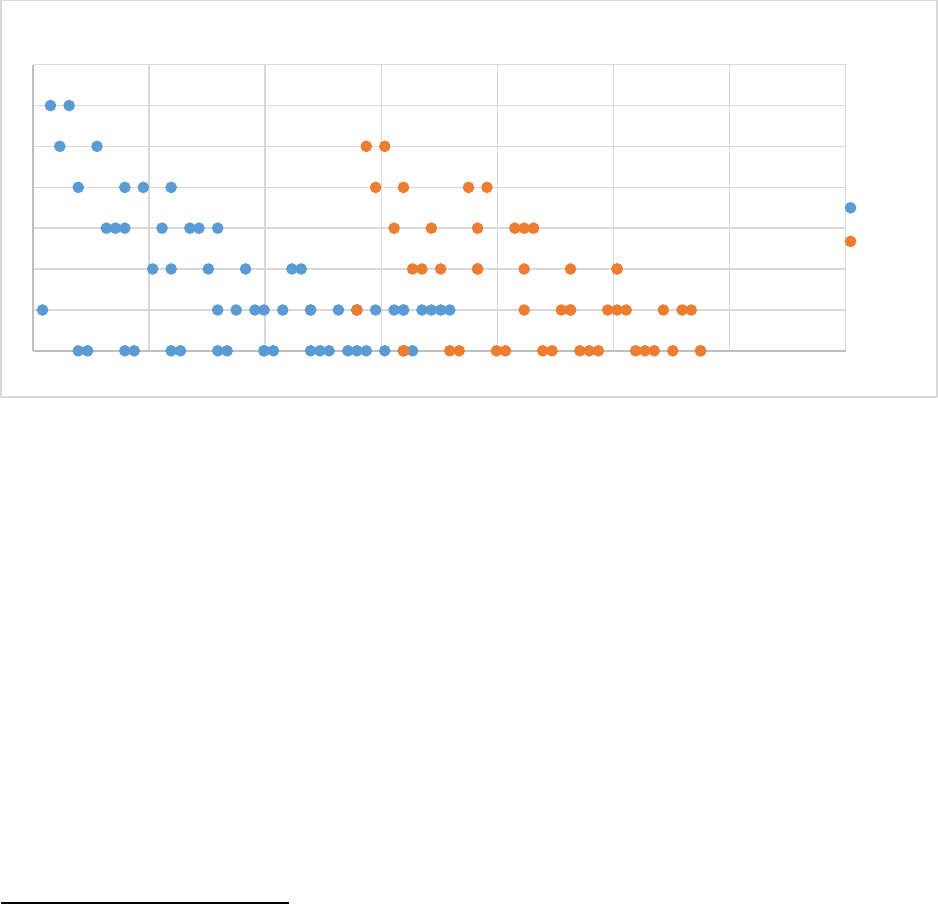

Figure 1-5 illustrates the typical defect discovery profile over the life of a software

version (only unique defect discoveries are graphed). If the contractor deploys the software

before the peak, the software is immature and not suitable for the customer. If the

contractor deploys the software between the peak and when the software stabilizes

(defects flatten out), the software may be usable but not meet the reliability goals. If the

software is deployed once the defect discovery rate flattens, either the reliability objectives

of the program have been met or the program is on the path to meeting those objectives.

With Agile/CI/Continuous Development, the defect discovery profile may or will have

multiple peaks with (ideally) a final burn down at the final sprint. Every time there is a

new software version, there is a new profile.

Figure 1-5 Defect Discovery Profile

The defect profiles can and do overlap in that the defects from version 1 or Sprint 1 can

and will be found in version 2 or Sprint 2. See Figure 1-6 for an example of reliability

evaluation with agile or incremental development. The figure shows an example of

merging in a new sprint after the peak but before the previous sprints stabilize. Note

only issues that have an effect on the mission should be graphed.

Figure 1-6 Example of a Defect Discovery for Incremental Development

(Figure 1-6, titled “Defects discovered over usage time,” plots non-cumulative unique defects

discovered against usage in hours for Sprint 1 and Sprint 2, with markers where Sprint 2

was merged and where Sprint 3 will be merged.)

The most important metric is the defect discovery trend. If the trend is not

decreasing, then most of the other metrics are largely irrelevant. The second most

important metric is the fix rate, which ensures that the contractor is fixing the defects fast

enough to address the failures that affect reliability or availability. It is also important that

defects are not piling up from release to release or Sprint to Sprint. Figure 1-6 is an

example of defect pileup. The discovered defects are plotted in increments of 10 usage

hours. When Sprint 2 was merged in at 190 hours, the most recent defect discovery rate

was at 1 defect per 10 hours. However, at 350 hours, the most recent rate is at 5 per

10 hours (4 from Sprint 2 and 1 from Sprint 1). Sprint 3 is about to be merged in despite

the increase in the rate and the fact that Sprint 2 received 30 hours less of testing than

Sprint 1. If Sprints 3 and beyond continue in this pattern, eventually the software will be

released with an increasing defect rate.

In Figure 1-7 below, the sprints are spaced far enough apart that the defect

discovery rate is not increasing from sprint to sprint. At the start of sprint 2, the total

defect discovery rate peaks at 5 per day from sprint 2 plus 1 per day from sprint 1.

This is no worse than the peak defect discovery rate for sprint 1. Since the number of defects is

directly related to the amount of new code, sprint 2 was likely smaller in scope than

sprint 1. To deliver sprints of the same size as sprint 1, the sprints would need

to be spaced at 375 hours instead of 300 hours, because 375 usage hours is the point at which

no more defects are being found from sprint 1.
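The spacing argument above can be illustrated with a short Python sketch. It assumes that per-sprint defect discovery times are available in usage hours. The helper names and the sample discovery times are hypothetical. Only the 300-hour spacing, the 375-hour stabilization point, and the 5 + 1 combined rate come from the text.

```python
def last_discovery_hour(discovery_hours):
    """Usage hour at which the final defect from one sprint was discovered."""
    return max(discovery_hours)


def combined_rate(per_sprint_rates):
    """Total discovery rate when several sprints are under test together."""
    return sum(per_sprint_rates)


# Hypothetical discovery times for sprint 1; the last defect appears at 375 hours.
sprint_1_discoveries = [20, 45, 60, 110, 180, 240, 310, 375]
planned_spacing = 300  # usage hours between sprint merges, as in Figure 1-7

stabilizes_at = last_discovery_hour(sprint_1_discoveries)
extra_spacing_needed = max(0, stabilizes_at - planned_spacing)

# Rates quoted in the text at the start of sprint 2: 5 from sprint 2, 1 from sprint 1.
combined_rate_at_sprint_2_start = combined_rate([5, 1])
```

Here the previous sprint stabilizes 75 usage hours after the planned merge point, which is why same-size sprints would need the wider spacing.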

Figure 1-7 Example #2 of a Defect Discovery for Incremental Development

The two (2) metrics below are relatively inexpensive and are generally required for

DevSecOps dashboards:

• The defect discovery (fault) rate, which should not be increasing

• The fix rate, which should be keeping up with the discovered defects/failures as per the

FDSC.
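As a rough illustration of the second metric, the sketch below tracks the open-defect backlog per build and checks that it never grows. The function names and the per-build counts are hypothetical. A real dashboard would count only the defects that score as failures under the program's FDSC.

```python
from itertools import accumulate


def backlog_by_build(discovered, fixed):
    """Open backlog after each build: cumulative discovered minus cumulative fixed."""
    if len(discovered) != len(fixed):
        raise ValueError("need one discovered count and one fixed count per build")
    return [d - f for d, f in zip(accumulate(discovered), accumulate(fixed))]


def fix_rate_keeping_up(discovered, fixed):
    """True if the backlog never grows from one build to the next."""
    backlog = backlog_by_build(discovered, fixed)
    return all(later <= earlier for earlier, later in zip(backlog, backlog[1:]))


# Hypothetical counts of defects discovered and fixed in four successive builds.
discovered_per_build = [6, 5, 4, 2]
fixed_per_build = [3, 5, 5, 4]

backlog = backlog_by_build(discovered_per_build, fixed_per_build)
keeping_up = fix_rate_keeping_up(discovered_per_build, fixed_per_build)
```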

Stable programs having new software upgrades are at less risk than a brand-new

major program. This task is not terribly expensive as there are a variety of low

cost/open-source tools such as C-SFRAT [6] that trend the reliability as per the IEEE 1633

2016 clause 5.4.
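For readers who want to see what a basic trend check involves, the sketch below computes the Laplace trend statistic from failure times recorded in usage hours. The Laplace test is a widely used trend statistic, shown here only as an illustration. It is not taken from this guidance, and it is not claimed to be the method that C-SFRAT or any other tool implements. The function name and sample data are hypothetical.

```python
import math


def laplace_trend(failure_hours, total_hours):
    """Laplace trend statistic for failures observed over [0, total_hours].

    Negative values suggest a decreasing failure rate (reliability growth).
    Positive values suggest an increasing failure rate.
    """
    n = len(failure_hours)
    if n == 0 or total_hours <= 0:
        raise ValueError("need at least one failure and a positive test duration")
    mean_time = sum(failure_hours) / n
    return (mean_time - total_hours / 2) / (total_hours * math.sqrt(1 / (12 * n)))


# Hypothetical failure times over 200 usage hours of testing.
early_failures = [5, 12, 20, 33, 50, 80, 130]       # discoveries thin out over time
late_failures = [70, 120, 150, 170, 185, 192, 198]  # discoveries bunch up at the end

u_decreasing = laplace_trend(early_failures, 200)
u_increasing = laplace_trend(late_failures, 200)
```

The sign of the statistic gives the direction of the trend. Values beyond roughly ±2 are commonly treated as statistically significant.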

If this is an MTA program, the software reliability evaluation will typically be one of

the most important tasks next to the testing for reliable software. MTA programs with no

transition to MCA can be specified to have only the fault and fix rates.

[6] https://lfiondella.sites.umassd.edu/research/software-reliability/

[Figure 1-7 plot: "Faults over usage time". X-axis scale: 0 to 700. Y-axis scale: 0 to 7. Series: Sprint 1, Sprint 2.]

1.5.2 Tailoring the SOW Language

Step 1: If the result of the decision tree in Figures 1-1 and 1-2 and/or Table 1-2 is

that software does not need to be included in the software reliability evaluation, then

omit this SOW language entirely. Otherwise, the reliability engineer must tailor the

SOW language per the following steps:

Step 2: Modify the SOW language:

• Remove any bolded tasks from the SOW language that are deemed

not relevant as per the applicable decision tree.

• Remove the non-applicable DID <DI-SESS-81628 or DI-SESS-80255>.

Step 3: The SOW language is:

“The contractor shall perform software Reliability Evaluation IAW IEEE 1633 2016

clauses 5.4.4, 5.4.5, 5.4.6, 6.3 and Annex C and shall identify: 1) justification for

the selection of reliability evaluation models; 2) provide the reliable software curves to the

Government; 3) trend of failure rate (increasing, peaking or decreasing); 4) evidence

that fix rate is addressing failures; 5) backlogged defects; 6) estimated defects, test

hours and test assets to achieve the specified/allocated reliability; 7) trend in severity of

discovered defects; 8) downtime (mean time to software restore). The contractor shall

deliver the reliability evaluation model(s), curve(s), and justification as part of the

Reliable Software Program Plan (RSPP) delivered in the R&M Program Plan (RPP) per

DI-SESS-81613.”

“The reliability evaluation models shall be applied during contractor testing of each

build. The contractor shall include all software LRUs with the system in the reliability

agile/CI/CD framework. The contractor shall identify the test / usage hours per day or

week since software fails as a function of usage and not calendar time. The contractor

shall record the defects and corresponding failure modes found in the FRACAS system,

update the reliability models after each software build test, and update the system

reliability growth model. The contractor shall keep the models, tracking, and projections

up to date and be prepared to share any updates during working group meetings. The

contractor shall deliver reliability evaluation curves at the system level, and separately

for hardware and software. The reliability test results (models, tracking, and

projections) shall be delivered as per <DI-SESS-81628 or DI-SESS-80255>.”
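The requirement to report test/usage hours exists because failure data must be indexed by usage rather than by calendar time. The sketch below shows one way the conversion could be done. The function names and data are hypothetical, and it makes the simplifying assumption that each failure is stamped with the cumulative usage hours at the end of the calendar day on which it occurred.

```python
from itertools import accumulate


def cumulative_usage_hours(hours_per_day):
    """Running total of test/usage hours at the end of each calendar day."""
    return list(accumulate(hours_per_day))


def failures_in_usage_time(failure_days, hours_per_day):
    """Map each failure's calendar day (0-based) to cumulative usage hours."""
    usage = cumulative_usage_hours(hours_per_day)
    return [usage[day] for day in failure_days]


# Hypothetical log: the software was not exercised at all on days 1 and 2.
hours_per_day = [8, 0, 0, 4, 8, 2]
failure_days = [0, 3, 5]

failure_usage_hours = failures_in_usage_time(failure_days, hours_per_day)
```

The idle days add calendar time but no usage, so the three failures fall at 8, 12, and 22 usage hours even though they span six calendar days.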

1.5.3 Tailoring the CDRL

See Appendix C for the CDRL template. The steps for tailoring are as follows:

Step 1: Do not create a separate CDRL for software reliability evaluation model(s),

curve(s), and justification(s). These should be delivered as part of the RSPP section of

the RPP DI-SESS-81613.


Step 2: All information related to due dates, frequency, and government approval

shown in the Appendix C CDRLs are recommendations. The reliability engineer should

complete all blocks based on program-specific information.

• Initial delivery – The initial reliability evaluation should occur as soon as the first

developer test event concludes. If the Waterfall model is being used, then this

will be at the end of an external release cycle.

• Frequency of updates – If the contractor is employing Agile/CI/CD, then the

testing is conducted iteratively. Testing sprints are sometimes very short in

duration, so delivering a CDRL for every test event would be expensive. Instead, the

contractor should make the reliability evaluations visible to the government

during test events by providing the government reliability engineer with the

failure data and times to failure needed to perform trend analysis.

Step 3: Remove all shaded text within <>.

1.6 Software FMEA (SFMEA) Task

Software failure modes can originate in one of three ways:

• The specification – including the Software Requirements Specification (SRS),

Interface Requirements Specification (IRS), or design – is inherently faulty.

• The software specification is missing a crucially important detail or scenario.

• The code is not written exactly to the written specifications.

A common myth is that only defects originating in the code are root causes of failures, and

that faults in the design or specifications are not “failures.” Another common myth is that

failures due to systematic design faults don’t count. Another myth is that failures are

limited only to those that cause a shutdown or termination. See the IEEE 1633

definitions in the appendix. As per the definitions, failure is defined by the effect with

regard to the specifications and not by the underlying root cause. If the system fails

due to software, it does not matter whether the cause was an implementation error or

a design/architecture error. The system still failed. The purpose of this task is to

focus on the failure modes due to the architecture, specifications, design, interfaces,

and code. Process-related failure modes are those that pertain to flaws in

organizational structure and processes that allowed the defect to escape into operation.

A process FMEA is possible for software but is not within the scope of this task or this

statement of work. The following sections provide the basis / justification for the task

and for tailoring the SOW language to the Acquisition Strategy.

1.6.1 Basis / Justification

Since 1962, the same software failure modes have affected multiple missions

repeatedly. Below are a few examples of the failure modes:


• Faulty error handling – Qantas flight 72 [7] un-commanded downward pitch (incorrect fault recovery), Mars Polar Lander (software failed to detect spurious data) [8], Denver Airport (software assumed the luggage would not get jammed) [9], NASA Spirit Rover [10] (too many files on drive not detected)

• Faulty data definition – ESA Ariane 5 explosion (16/64-bit mismatch) [11][12], Mars Climate Orbiter (Metric/English mismatch) [13], Titan IV (wrong constant defined) [14]

• Faulty logic/sequence – Solar Heliospheric Observatory spacecraft mishap [15], AT&T Mid Atlantic outage in 1991 [16], Operator’s choice of weapon release overridden by software control [17]

• Faulty state management – Incorrect missile firing from invalid setup sequence [18]

• Faulty algorithm – Flight controls fail at supersonic transition [19], Mariner 1 [20] mishap

• Faulty timing – 2003 Northeast blackout [21], Therac-25 race condition [22], Missile launch timing error [23], Apollo 11 lunar landing [24]

• Faulty endurance – PATRIOT system failure [25]

• Peak load conditions – Iowa caucus failure [26]

• Faulty usability

    • Software makes it too easy for humans to make irreversible mistakes – Panama City, Panama over-radiation [27]

    • Insufficient positive feedback of safety and mission critical events

The SFMEA is beneficial for software that executes functions that cannot be reversed, have a

serious effect, cannot be avoided or overridden by humans, and happen

instantaneously. The SFMEA is also beneficial when conducted against the design

and specifications as opposed to a line-by-line analysis of the source code. Historically,

more than 50% of all software faults originate in the specifications or design [28].

[7] https://www.atsb.gov.au/publications/investigation_reports/2008/aair/ao-2008-070/

[8] https://solarsystem.nasa.gov/system/internal_resources/details/original/3338_mpl_report_1.pdf

[9] http://calleam.com/WTPF/wp-content/uploads/articles/DIABaggage.pdf

[10] https://llis.nasa.gov/lesson/1483

[11] https://www.nytimes.com/1996/12/01/magazine/little-bug-big-bang.html

[12] https://www.esa.int/Newsroom/Press_Releases/Ariane_501_-_Presentation_of_Inquiry_Board_report

[13] https://solarsystem.nasa.gov/missions/mars-climate-orbiter/in-depth/

[14] https://www.faa.gov/regulations_policies/faa_regulations/commercial_space/media/Guide-Software-Comp-Sys-Safety-RLV-Reentry.pdf

[15] https://umbra.nascom.nasa.gov/soho/SOHO_final_report.html

[16] https://telephoneworld.org/landline-telephone-history/the-crash-of-the-att-network-in-1990/

17